Celery is an asynchronous task queue.

RabbitMQ is a message broker (also called message-oriented middleware) widely used with Celery.

Basic Concepts

Broker

The broker (RabbitMQ) receives task messages, dispatches them to task queues according to routing rules, and then delivers the tasks in those queues to workers.
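To make the routing idea concrete, here is a minimal in-process sketch that uses only the standard library. It is not how RabbitMQ or Celery are implemented: the ToyBroker class, its routing table and the queue names are hypothetical, chosen only to show a broker placing each message on a queue according to a routing key and then handing messages from that queue to a worker.

```python
import queue


class ToyBroker:
    """Hypothetical stand-in for a broker: routes messages to named queues."""

    def __init__(self, routes):
        # routes maps a routing key to the name of the queue it should land in
        self.routes = routes
        self.queues = {name: queue.Queue() for name in set(routes.values())}

    def publish(self, routing_key, message):
        # Apply the routing rule, then enqueue the message on the chosen queue
        self.queues[self.routes[routing_key]].put(message)

    def deliver(self, queue_name):
        # Hand the next message in the queue to a worker (raises queue.Empty if none)
        return self.queues[queue_name].get_nowait()


broker = ToyBroker({"math.add": "math", "mail.send": "email"})
broker.publish("math.add", ("add", 1, 2))
broker.publish("mail.send", ("send", "hello"))

print(broker.deliver("math"))   # ('add', 1, 2)
print(broker.deliver("email"))  # ('send', 'hello')
```

Each queue keeps first-in, first-out order, which is also what a worker consuming from a single RabbitMQ queue normally observes.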

Consumer (Celery Workers)

The consumer is one or more Celery workers that execute the tasks. You can start as many workers as your use case requires.
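The sketch below illustrates the competing-consumers pattern behind running several workers: three threads pull from one shared queue, and each task is taken by exactly one of them. Threads stand in for separate Celery worker processes, and the worker function and the None stop signal are hypothetical; real workers are started with the celery worker command and receive their tasks from the broker.

```python
import queue
import threading


def worker(name, tasks, done, lock):
    # Keep pulling tasks until the None stop signal arrives
    while True:
        item = tasks.get()
        if item is None:
            break
        x, y = item
        with lock:
            done.append((name, x + y))


tasks = queue.Queue()
done = []
lock = threading.Lock()
workers = [threading.Thread(target=worker, args=(f"worker-{i}", tasks, done, lock))
           for i in range(3)]
for w in workers:
    w.start()

for i in range(10):
    tasks.put((i, i))
for _ in workers:
    tasks.put(None)  # one stop signal per worker, queued after all tasks
for w in workers:
    w.join()

print(len(done))  # 10: every task ran exactly once
```

Adding more workers does not change which tasks run, only how many run at the same time.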

Result Backend

The result backend stores the results of your tasks. It is optional, but if you do not configure one, you cannot access the results of your tasks.
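As a rough model of why the backend matters, the standard-library sketch below gives each task an id, stores the return value under that id only when a backend is present, and lets the caller look it up later. ToyResultBackend and run_task are hypothetical names; Celery's real backends (such as rpc://, Redis or a database) play this role across processes.

```python
import uuid


class ToyResultBackend:
    """Hypothetical in-memory result store keyed by task id."""

    def __init__(self):
        self._results = {}

    def store(self, task_id, value):
        self._results[task_id] = value

    def get(self, task_id):
        # Returns None when no result was recorded for this id
        return self._results.get(task_id)


def run_task(func, args, backend=None):
    """Run func now and return a task id; keep the result only if a backend is given."""
    task_id = str(uuid.uuid4())
    value = func(*args)  # in Celery, a worker process would do this
    if backend is not None:
        backend.store(task_id, value)
    return task_id


backend = ToyResultBackend()
kept_id = run_task(lambda x, y: x + y, (1, 2), backend)
print(backend.get(kept_id))     # 3

dropped_id = run_task(lambda x, y: x + y, (1, 2))  # no backend configured
print(backend.get(dropped_id))  # None: the result was never stored
```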

Installing Celery

pip install celery

Choosing a Broker

Why do we need a separate component called a broker?

Celery does not implement a message queue itself, so it needs an extra message transport (a broker) to do that work.

In fact, you can choose from a few different brokers, like RabbitMQ, Redis or a database.

We are using RabbitMQ as our broker because it is feature-complete, stable and recommended by Celery.

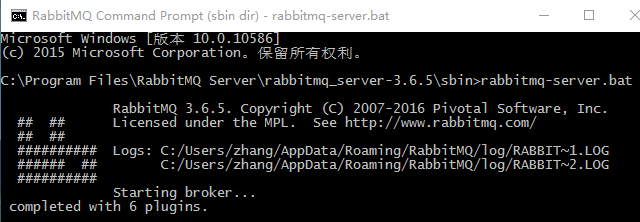

Starting RabbitMQ

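Start the RabbitMQ server, for example with the rabbitmq-server command (prefix it with sudo if your installation requires it). If the server starts successfully, you will see its startup log in the console.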

Configuring RabbitMQ

To use Celery, we need to create a RabbitMQ user and a virtual host, and give that user access to the virtual host:

rabbitmqctl add_user myuser mypassword

rabbitmqctl add_vhost myvhost

rabbitmqctl set_permissions -p myvhost myuser ".*" ".*" ".*"

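RabbitMQ permissions cover three kinds of operations: configure, write and read.

The three ".*" arguments are regular expressions for the configure, write and read permissions, in that order. ".*" matches every resource name, so the user "myuser" gets full configure, write and read permissions in the virtual host "myvhost".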
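To see what the three patterns do, here is a hedged Python sketch of the permission check. The allowed function and the restricted example are hypothetical, and the sketch assumes a pattern grants an operation when it matches the resource name (modeled with re.search); it illustrates the idea and does not reproduce RabbitMQ's internal checks.

```python
import re

# Patterns in the order rabbitmqctl set_permissions takes them: configure, write, read
PERMISSIONS = {"configure": ".*", "write": ".*", "read": ".*"}


def allowed(operation, resource, permissions=PERMISSIONS):
    # Assumption: access is granted if the operation's pattern matches the name
    return re.search(permissions[operation], resource) is not None


print(allowed("write", "celery"))  # True: ".*" matches any name

# A hypothetical tighter policy: no configure rights, write only to "celery"
restricted = {"configure": "^$", "write": "^celery$", "read": ".*"}
print(allowed("configure", "celery", restricted))  # False
print(allowed("write", "celery", restricted))      # True
```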

A Simple Demo Project

Project Structure

celery_demo/
    __init__.py
    celery.py
    tasks.py
    run_tasks.py

celery.py

# -*-coding:utf-8-*-
from __future__ import absolute_import

from celery import Celery

app = Celery('celery_demo',
             broker='amqp://myuser:mypassword@localhost/myvhost',
             backend='rpc://',
             include=['celery_demo.tasks'])

The first argument to Celery is the name of the main module, here the project package name "celery_demo". Celery uses it to build task names when tasks are defined in the __main__ module.

The broker argument specifies the broker URL, which should point to the RabbitMQ server we started earlier. The broker URL has the format transport://userid:password@hostname:port/virtual_host; when the port is omitted, as here, the default AMQP port 5672 is used.

For RabbitMQ, the transport is amqp.
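The standard library's urllib.parse.urlsplit can take the demo's broker URL apart to show how each piece maps onto that format. This is only an illustration: Celery (through its Kombu library) parses the URL itself, and the 5672 fallback below is the standard AMQP port rather than something read from the URL.

```python
from urllib.parse import urlsplit

BROKER_URL = "amqp://myuser:mypassword@localhost/myvhost"
AMQP_DEFAULT_PORT = 5672  # standard AMQP port, used when the URL omits one

parts = urlsplit(BROKER_URL)
print(parts.scheme)                     # amqp (the transport)
print(parts.username, parts.password)   # myuser mypassword
print(parts.hostname)                   # localhost
print(parts.port or AMQP_DEFAULT_PORT)  # 5672
print(parts.path.lstrip("/"))           # myvhost (the virtual host)
```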

The backend argument specifies a backend URL. A backend in Celery is used for storing the task results. So if you need to access the results of your task when it is finished, you should set a backend for Celery.

rpc:// means the results are sent back to the client as AMQP messages, which is sufficient for our demo. Celery also supports other result backends, such as Redis and databases; see the Celery documentation on result backends for the full list.

The include argument specifies a list of modules that you want to import when Celery worker starts. We add the tasks module here so that the worker can find our task.
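Why the worker must import the tasks module can be sketched with a toy registry: decorating a function records it under a module-qualified name, and a worker can only execute names it has registered. The task decorator, REGISTRY and execute below are hypothetical simplifications, not Celery's API, though Celery does name tasks in the same module.function style (for example celery_demo.tasks.longtime_add).

```python
REGISTRY = {}


def task(func):
    # Hypothetical decorator: register func under "<module>.<function name>"
    REGISTRY[f"{func.__module__}.{func.__name__}"] = func
    return func


def execute(name, *args):
    # A worker can only run tasks whose modules were imported, i.e. registered
    if name not in REGISTRY:
        raise KeyError(f"unregistered task: {name}")
    return REGISTRY[name](*args)


@task
def longtime_add(x, y):
    return x + y


name = f"{longtime_add.__module__}.longtime_add"
print(execute(name, 1, 2))  # 3
```

If the module defining a task is never imported, its decorator never runs, so the name is missing from the registry; listing the module in include avoids that.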

tasks.py

# -*-coding:utf-8-*-
import time

from .celery import app


@app.task
def longtime_add(x, y):
    print('long time task begins')
    # sleep 5 seconds to simulate a long-running task
    time.sleep(5)
    print('long time task finished')
    return x + y

run_tasks.py

# -*-coding:utf-8-*-
import time

from .tasks import longtime_add

if __name__ == '__main__':
    result = longtime_add.delay(1, 2)
    # the task has not finished yet, so ready() returns False
    print('Task finished? ', result.ready())
    print('Task result: ', result.result)
    # sleep 10 seconds to ensure the task has finished
    time.sleep(10)
    print('Task finished? ', result.ready())
    print('Task result: ', result.result)

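Here, we call the task longtime_add with its delay method, which sends the task to the broker and immediately returns an AsyncResult instead of waiting for the result. Calling longtime_add(1, 2) directly would run the function synchronously in the current process.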
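For comparison, the same submit-now, check-later pattern can be sketched with the standard library's concurrent.futures, with a thread pool playing the worker. This is an analogy, not Celery: submit() stands in for delay(), Future.done() for AsyncResult.ready(), and Future.result(), which blocks until the value is available, for AsyncResult.get(). The sleep times are shortened for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def longtime_add(x, y):
    # Same shape as the demo task, with a shorter sleep
    time.sleep(0.5)
    return x + y


with ThreadPoolExecutor(max_workers=1) as pool:
    future = pool.submit(longtime_add, 1, 2)  # returns at once, like .delay()
    ready_before = future.done()              # False: the task is still sleeping
    print('Task finished? ', ready_before)
    time.sleep(1)
    ready_after = future.done()               # True: the task has completed
    print('Task finished? ', ready_after)
    print('Task result: ', future.result())   # 3
```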

Start Celery Worker

Now we can start a Celery worker with the command below (run it in the parent folder of the project folder celery_demo):

celery -A celery_demo worker --loglevel=info

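If Celery successfully connects to RabbitMQ, the worker prints a startup banner that shows the broker transport and lists the registered tasks, which should include celery_demo.tasks.longtime_add.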

Run Tasks

In another console, run the following command, again from the parent folder of the project folder celery_demo:

python -m celery_demo.run_tasks

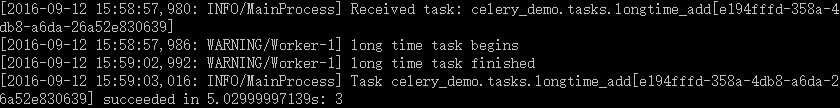

Now if you look at the Celery console, you will see that our worker received the task:

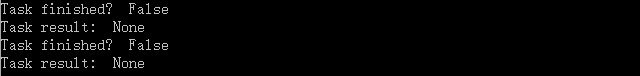

In the current console, you will see the following output:

Task finished?  False
Task result:  None
Task finished?  True
Task result:  3

This is the expected behavior. At first, the task was not ready and the result was None. After 10 seconds, the task had finished and the result was 3.

Monitor Celery in Real Time

Flower is a real-time, web-based monitoring tool for Celery. With Flower, you can easily monitor task progress and history. It is distributed as a separate package, which you can install with pip install flower.

To start the Flower web console, we need to run the following command (run in the parent folder of our project folder celery_demo):

celery -A celery_demo flower

Flower will run a server with default port 5555, and you can access the web console at http://localhost:5555.