Using the SurfaceFlinger service as an example

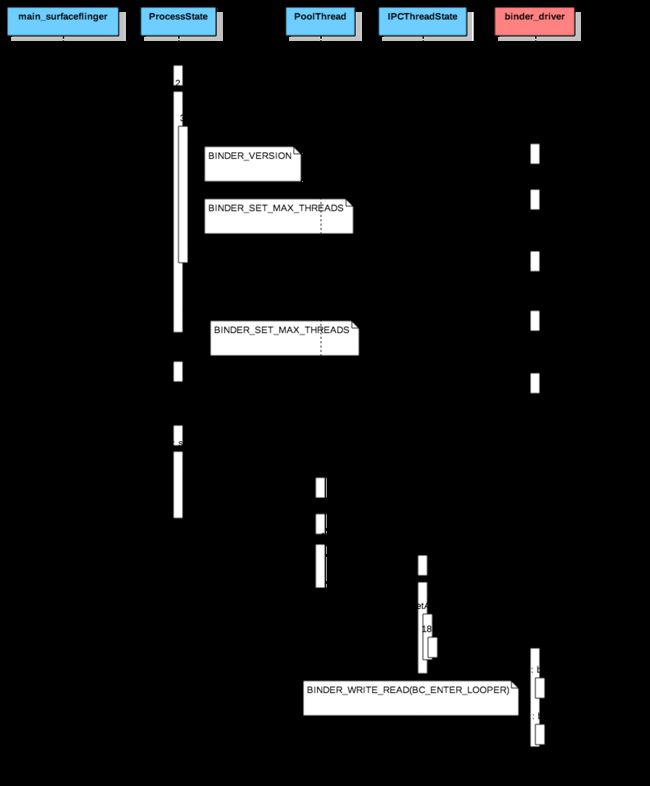

Preparing the SurfaceFlinger process for Binder IPC

The analysis starts from the main() function of the SurfaceFlinger process.

int main(int, char**) {

signal(SIGPIPE, SIG_IGN);

// When SF is launched in its own process, limit the number of

// binder threads to 4.

// Create the ProcessState and set the maximum number of binder threads the binder driver may ask this process to spawn

ProcessState::self()->setThreadPoolMaxThreadCount(4);

// start the thread pool

sp<ProcessState> ps(ProcessState::self());

// Start a binder thread and wait for client requests

ps->startThreadPool();

......

}

First, look at how the ProcessState object is created.

// Singleton pattern

sp<ProcessState> ProcessState::self()

{

// The lock must be held while accessing the ProcessState singleton

Mutex::Autolock _l(gProcessMutex);

if (gProcess != NULL) {

return gProcess;

}

// Create the ProcessState

gProcess = new ProcessState;

return gProcess;

}
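The lock-then-check pattern used by ProcessState::self() can be sketched outside Android as follows. This is a minimal illustration, not the libbinder code: `Registry`, `gMutex`, and `gInstance` are hypothetical names, and `std::mutex` and `std::shared_ptr` stand in for `Mutex::Autolock` and `sp<>`.

```cpp
#include <cassert>
#include <memory>
#include <mutex>

// Hypothetical stand-in for ProcessState: at most one instance per process.
class Registry {
public:
    // Returns the process-wide instance, creating it on first use.
    static std::shared_ptr<Registry> self() {
        // Plays the role of Mutex::Autolock _l(gProcessMutex).
        std::lock_guard<std::mutex> lock(gMutex);
        if (gInstance != nullptr) {
            return gInstance;
        }
        gInstance = std::shared_ptr<Registry>(new Registry());
        assert(gInstance != nullptr);
        return gInstance;
    }

private:
    Registry() = default;  // only self() may construct the object

    static std::mutex gMutex;
    static std::shared_ptr<Registry> gInstance;
};

std::mutex Registry::gMutex;
std::shared_ptr<Registry> Registry::gInstance;
```

Every caller of `Registry::self()` receives a pointer to the same object, which is why SurfaceFlinger's main() can call ProcessState::self() twice and still configure and start a single thread pool.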

Next, the ProcessState constructor:

ProcessState::ProcessState()

// Open the binder driver

: mDriverFD(open_driver())

, mVMStart(MAP_FAILED)

, mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)

, mThreadCountDecrement(PTHREAD_COND_INITIALIZER)

, mExecutingThreadsCount(0)

, mMaxThreads(DEFAULT_MAX_BINDER_THREADS)

, mStarvationStartTimeMs(0)

, mManagesContexts(false)

, mBinderContextCheckFunc(NULL)

, mBinderContextUserData(NULL)

, mThreadPoolStarted(false)

// Binder thread name sequence numbers start at 1

, mThreadPoolSeq(1)

{

if (mDriverFD >= 0) {

// mmap the binder, providing a chunk of virtual address space to receive transactions.

// Map a region of virtual address space that receives binder data

mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

if (mVMStart == MAP_FAILED) {

// *sigh*

ALOGE("Using /dev/binder failed: unable to mmap transaction memory.\n");

close(mDriverFD);

mDriverFD = -1;

}

}

LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver could not be opened. Terminating.");

}

Opening the binder driver and mapping the virtual address space are not covered again here.

Next, the implementation of setThreadPoolMaxThreadCount():

status_t ProcessState::setThreadPoolMaxThreadCount(size_t maxThreads) {

status_t result = NO_ERROR;

// Use ioctl to set the maximum number of binder threads the binder driver may request

if (ioctl(mDriverFD, BINDER_SET_MAX_THREADS, &maxThreads) != -1) {

mMaxThreads = maxThreads;

} else {

result = -errno;

ALOGE("Binder ioctl to set max threads failed: %s", strerror(-result));

}

return result;

}

Next, starting the binder thread pool and waiting for client requests.

void ProcessState::startThreadPool()

{

AutoMutex _l(mLock);

if (!mThreadPoolStarted) {

mThreadPoolStarted = true;

// Start a binder thread; the argument true marks it as the main binder thread

spawnPooledThread(true);

}

}

Next, the implementation of spawnPooledThread():

void ProcessState::spawnPooledThread(bool isMain)

{

if (mThreadPoolStarted) {

// Build the name of the binder thread

String8 name = makeBinderThreadName();

ALOGV("Spawning new pooled thread, name=%s\n", name.string());

sp<Thread> t = new PoolThread(isMain);

// Start the binder thread

t->run(name.string());

}

}

Once the binder thread is running, it executes PoolThread's threadLoop() method.

virtual bool threadLoop()

{

// Create an IPCThreadState for this binder thread and join the binder thread pool

IPCThreadState::self()->joinThreadPool(mIsMain);

// The loop has ended

return false;

}

First, look at how the IPCThreadState is created:

IPCThreadState* IPCThreadState::self()

{

// gHaveTLS is a global flag shared by all binder threads

if (gHaveTLS) {

restart:

const pthread_key_t k = gTLS;

// Fetch this binder thread's private value

IPCThreadState* st = (IPCThreadState*)pthread_getspecific(k);

if (st) return st;

// Create the IPCThreadState

return new IPCThreadState;

}

if (gShutdown) {

ALOGW("Calling IPCThreadState::self() during shutdown is dangerous, expect a crash.\n");

return NULL;

}

pthread_mutex_lock(&gTLSMutex);

if (!gHaveTLS) {

// The first binder thread creates gTLS. The key is shared by all binder threads in the process, but the value stored under it is private to each thread

int key_create_value = pthread_key_create(&gTLS, threadDestructor);

if (key_create_value != 0) {

pthread_mutex_unlock(&gTLSMutex);

ALOGW("IPCThreadState::self() unable to create TLS key, expect a crash: %s\n",

strerror(key_create_value));

return NULL;

}

gHaveTLS = true;

}

pthread_mutex_unlock(&gTLSMutex);

goto restart;

}

Next, the IPCThreadState constructor:

IPCThreadState::IPCThreadState()

: mProcess(ProcessState::self()),

mMyThreadId(gettid()),

mStrictModePolicy(0),

mLastTransactionBinderFlags(0)

{

// Store this IPCThreadState under the gTLS key (private to this binder thread)

pthread_setspecific(gTLS, this);

clearCaller();

// Set the capacity of the Parcel that receives IPC data from the binder driver

mIn.setDataCapacity(256);

// Set the capacity of the Parcel that holds IPC data to be sent to the binder driver

mOut.setDataCapacity(256);

}
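The "one shared key, one private value per thread" idea behind gTLS can be tried in isolation with plain pthreads. The sketch below is only an illustration under simplifying assumptions: `ThreadState`, `threadState()`, `Probe`, and `otherThreadGetsOwnState()` are hypothetical names, and `pthread_once` replaces the gHaveTLS flag and gTLSMutex used in IPCThreadState::self().

```cpp
#include <cassert>
#include <pthread.h>

// Hypothetical per-thread state, standing in for IPCThreadState.
struct ThreadState {
    int transactions = 0;
};

static pthread_key_t gKey;
static pthread_once_t gKeyOnce = PTHREAD_ONCE_INIT;

// Called by pthreads at thread exit if the thread stored a non-null value.
static void destroyState(void* p) { delete static_cast<ThreadState*>(p); }

// Creates the key exactly once for the whole process.
static void createKey() {
    int rc = pthread_key_create(&gKey, destroyState);
    assert(rc == 0);
    (void)rc;
}

// Returns the calling thread's state, creating it on first use.
ThreadState* threadState() {
    pthread_once(&gKeyOnce, createKey);
    auto* st = static_cast<ThreadState*>(pthread_getspecific(gKey));
    if (st == nullptr) {
        st = new ThreadState();
        pthread_setspecific(gKey, st);  // visible to this thread only
    }
    return st;
}

struct Probe {
    ThreadState* callers;  // state object of the thread that started the probe
    bool distinct;         // set by the worker thread
};

static void* worker(void* arg) {
    auto* probe = static_cast<Probe*>(arg);
    probe->distinct = (threadState() != probe->callers);
    return nullptr;
}

// Returns true if a second thread obtains a different state object.
bool otherThreadGetsOwnState() {
    Probe probe{threadState(), false};
    pthread_t t;
    if (pthread_create(&t, nullptr, worker, &probe) != 0) return false;
    pthread_join(t, nullptr);
    return probe.distinct;
}
```

Calling `threadState()` repeatedly on one thread returns the same pointer, while a second thread gets its own object, which mirrors how each binder thread ends up with its own mIn and mOut buffers.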

Back in threadLoop(), the next step is the implementation of joinThreadPool():

void IPCThreadState::joinThreadPool(bool isMain)

{

LOG_THREADPOOL("**** THREAD %p (PID %d) IS JOINING THE THREAD POOL\n", (void*)pthread_self(), getpid());

// isMain is true here, so the BC_ENTER_LOOPER command is written to mOut

mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER);

// This thread may have been spawned by a thread that was in the background

// scheduling group, so first we will make sure it is in the foreground

// one to avoid performing an initial transaction in the background.

set_sched_policy(mMyThreadId, SP_FOREGROUND);

status_t result;

do {

processPendingDerefs();

// now get the next command to be processed, waiting if necessary

// Handle a client request, or wait for one

result = getAndExecuteCommand();

if (result < NO_ERROR && result != TIMED_OUT && result != -ECONNREFUSED && result != -EBADF) {

ALOGE("getAndExecuteCommand(fd=%d) returned unexpected error %d, aborting",

mProcess->mDriverFD, result);

abort();

}

// Let this thread exit the thread pool if it is no longer

// needed and it is not the main process thread.

if(result == TIMED_OUT && !isMain) {

break;

}

} while (result != -ECONNREFUSED && result != -EBADF);

LOG_THREADPOOL("**** THREAD %p (PID %d) IS LEAVING THE THREAD POOL err=%p\n",

(void*)pthread_self(), getpid(), (void*)result);

// The binder thread is leaving; send BC_EXIT_LOOPER to the binder driver

mOut.writeInt32(BC_EXIT_LOOPER);

talkWithDriver(false);

}
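The exit conditions of the loop in joinThreadPool() are easy to misread, so here is a small restatement of them as a pure function. It is a sketch for illustration only: `Next`, `afterCommand()`, and the constants are hypothetical names, and mapping TIMED_OUT to `-ETIMEDOUT` is an assumption made for this example.

```cpp
#include <cassert>
#include <cerrno>

// What the pool thread does after one getAndExecuteCommand() call.
enum class Next { Continue, Leave, Abort };

constexpr int kNoError = 0;
constexpr int kTimedOut = -ETIMEDOUT;  // assumption: stands in for TIMED_OUT

Next afterCommand(int result, bool isMain) {
    // Any error other than the three tolerated ones aborts the process.
    if (result < kNoError && result != kTimedOut &&
        result != -ECONNREFUSED && result != -EBADF) {
        return Next::Abort;
    }
    // A non-main thread that timed out is no longer needed.
    if (result == kTimedOut && !isMain) {
        return Next::Leave;
    }
    // The driver connection is gone: the do/while condition ends the loop.
    if (result == -ECONNREFUSED || result == -EBADF) {
        return Next::Leave;
    }
    return Next::Continue;
}
```

Note that the main binder thread keeps looping on a timeout; only threads started with isMain set to false leave the pool in that case.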

Next, the implementation of getAndExecuteCommand():

status_t IPCThreadState::getAndExecuteCommand()

{

status_t result;

int32_t cmd;

// Talk to the binder driver

result = talkWithDriver();

if (result >= NO_ERROR) {

size_t IN = mIn.dataAvail();

if (IN < sizeof(int32_t)) return result;

cmd = mIn.readInt32();

......

// Handle the client request

result = executeCommand(cmd);

......

}

return result;

}

Next, the implementation of talkWithDriver():

// The doReceive parameter defaults to true

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

// needRead is true here

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

// Size and address of the binder IPC data to write

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

// Receive the data returned by the binder driver

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

......

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

IF_LOG_COMMANDS() {

alog << "About to read/write, write size = " << mOut.dataSize() << endl;

}

#if defined(__ANDROID__)

// Enter the binder driver

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

......

} while (err == -EINTR);

......

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

alog << "Remaining data size: " << mOut.dataSize() << endl;

alog << "Received commands from driver: " << indent;

const void* cmds = mIn.data();

const void* end = mIn.data() + mIn.dataSize();

alog << HexDump(cmds, mIn.dataSize()) << endl;

while (cmds < end) cmds = printReturnCommand(alog, cmds);

alog << dedent;

}

return NO_ERROR;

}

......

}
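How talkWithDriver() decides what to write and what to read depends only on doReceive and on whether the input Parcel has been fully consumed. The following sketch restates that decision as a standalone function; `IoPlan` and `planIo()` are hypothetical names introduced for illustration, and the parameters model mIn.dataPosition(), mIn.dataSize(), mIn.dataCapacity(), and mOut.dataSize().

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical summary of the sizes placed into binder_write_read.
struct IoPlan {
    std::size_t writeSize;
    std::size_t readSize;
};

IoPlan planIo(bool doReceive, std::size_t inPos, std::size_t inSize,
              std::size_t inCapacity, std::size_t outSize) {
    assert(inPos <= inSize);
    // The read buffer is "empty" once everything in it has been consumed.
    const bool needRead = inPos >= inSize;

    IoPlan plan{};
    // Do not write while unread input remains and the caller wants to read.
    plan.writeSize = (!doReceive || needRead) ? outSize : 0;
    // Only ask the driver for data when the caller wants it and mIn is drained.
    plan.readSize = (doReceive && needRead) ? inCapacity : 0;
    return plan;
}
```

For example, `planIo(true, 0, 8, 256, 4)` yields zero for both sizes, the case in which the caller should first drain the pending input instead of entering the driver, while `planIo(false, 0, 8, 256, 4)` describes a write-only call such as the one joinThreadPool() uses to send BC_EXIT_LOOPER.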

Next, binder_ioctl():

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

// arg is the user-space address of the binder_write_read

void __user *ubuf = (void __user *)arg;

......

switch (cmd) {

case BINDER_WRITE_READ:

// Handle BINDER_WRITE_READ

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

......

}

......

}

Next, binder_ioctl_write_read():

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

......

// Copy the user-space binder_write_read into the kernel

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

......

if (bwr.write_size > 0) {

// Send commands to the binder driver; here the command is BC_ENTER_LOOPER

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

if (bwr.read_size > 0) {

// Read requests from the binder driver; this may sleep

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

......

// Copy the kernel binder_write_read back to user space

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

out:

return ret;

}

First, the implementation of binder_thread_write():

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

struct binder_context *context = proc->context;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

......

while (ptr < end && thread->return_error == BR_OK) {

// Fetch the binder command code

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

// Update the binder IPC statistics

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

binder_stats.bc[_IOC_NR(cmd)]++;

proc->stats.bc[_IOC_NR(cmd)]++;

thread->stats.bc[_IOC_NR(cmd)]++;

}

switch (cmd) {

case BC_INCREFS:

case BC_ACQUIRE:

......

case BC_ENTER_LOOPER:

binder_debug(BINDER_DEBUG_THREADS,

"%d:%d BC_ENTER_LOOPER\n",

proc->pid, thread->pid);

if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {

thread->looper |= BINDER_LOOPER_STATE_INVALID;

binder_user_error("%d:%d ERROR: BC_ENTER_LOOPER called after BC_REGISTER_LOOPER\n",

proc->pid, thread->pid);

}

// Update the binder thread's looper state

thread->looper |= BINDER_LOOPER_STATE_ENTERED;

break;

}

// Amount of data consumed by the binder driver

*consumed = ptr - buffer;

}

return 0;

}
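The BC_ENTER_LOOPER case above is a small bitmask update. The sketch below isolates that rule; the flag values and the name `onEnterLooper()` are hypothetical and chosen for illustration, since the driver defines its own BINDER_LOOPER_STATE_* constants.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical flag values for this sketch only.
enum LooperState : std::uint32_t {
    kRegistered = 0x01,  // thread announced itself with BC_REGISTER_LOOPER
    kEntered = 0x02,     // thread announced itself with BC_ENTER_LOOPER
    kInvalid = 0x08,     // thread used the protocol incorrectly
};

// Applies the BC_ENTER_LOOPER rule to a thread's looper bitmask.
std::uint32_t onEnterLooper(std::uint32_t looper) {
    // A thread must not send BC_ENTER_LOOPER after BC_REGISTER_LOOPER.
    if (looper & kRegistered) {
        looper |= kInvalid;
    }
    return looper | kEntered;
}
```

A fresh thread ends up with only the entered bit set, whereas a thread that had already registered is additionally marked invalid, which matches the binder_user_error() branch in the listing.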

When handling the BC_ENTER_LOOPER command, the binder driver only updates the binder thread's looper state. Next, the implementation of binder_thread_read():

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

// buffer holds the IPC data that the binder driver returns to user space

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

if (*consumed == 0) {

// BR_NOOP is a no-op

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

retry:

// True when the thread has no pending work of its own and should wait for process-wide work

wait_for_proc_work = thread->transaction_stack == NULL &&

list_empty(&thread->todo);

......

// Update the binder looper state

thread->looper |= BINDER_LOOPER_STATE_WAITING;

// The thread has no pending work of its own; increment the process's count of idle binder threads

if (wait_for_proc_work)

proc->ready_threads++;

......

if (wait_for_proc_work) {

if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |

BINDER_LOOPER_STATE_ENTERED))) {

binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",

proc->pid, thread->pid, thread->looper);

wait_event_interruptible(binder_user_error_wait,

binder_stop_on_user_error < 2);

}

binder_set_nice(proc->default_priority);

if (non_block) {

if (!binder_has_proc_work(proc, thread))

ret = -EAGAIN;

} else

// The binder thread is idle; sleep until binder_has_proc_work(proc, thread) is true

ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));

} else {

if (non_block) {

if (!binder_has_thread_work(thread))

ret = -EAGAIN;

} else

// Sleep until binder_has_thread_work(thread) is true

ret = wait_event_freezable(thread->wait, binder_has_thread_work(thread));

}

......

}

At this point the binder thread is asleep, waiting for client requests.

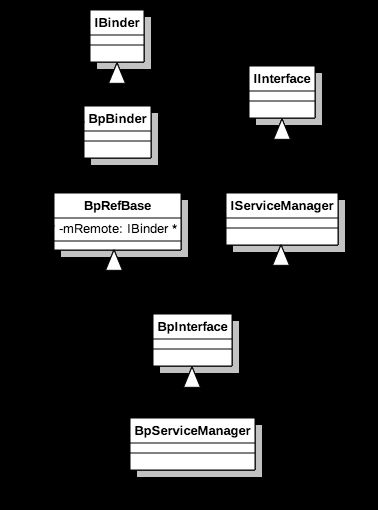

Obtaining the ServiceManager proxy object

Class diagram

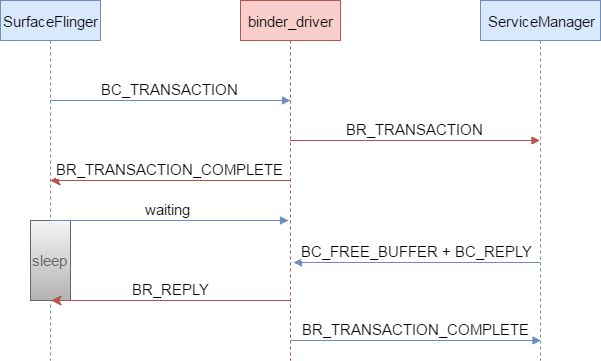

How SurfaceFlinger sends a PING_TRANSACTION request to ServiceManager

How ServiceManager replies to SurfaceFlinger after handling the PING_TRANSACTION request

Summary

Obtaining the ServiceManager proxy object works much like service registration or service lookup, so it is not discussed in detail here.

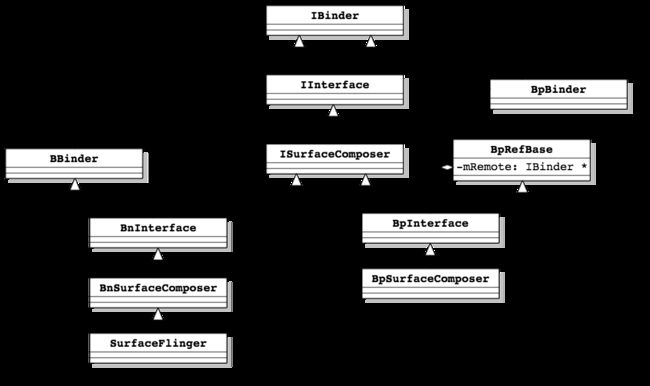

SurfaceFlinger service registration

SurfaceFlinger class diagram

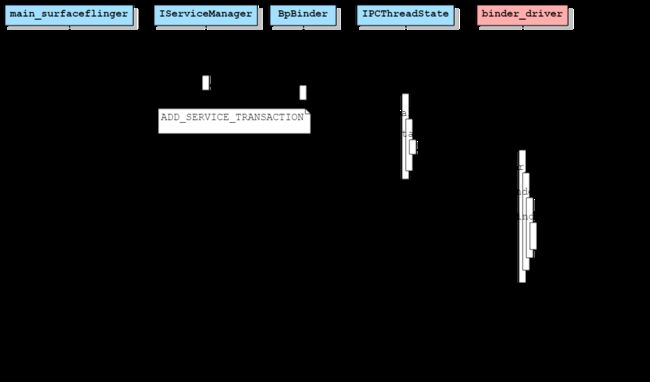

SurfaceFlinger sends an ADD_SERVICE_TRANSACTION (3) request to the binder driver

The analysis starts from SurfaceFlinger's main() function:

int main(int, char**) {

......

// publish surface flinger

sp<IServiceManager> sm(defaultServiceManager());

// sm is a BpServiceManager object

// SurfaceFlinger::getServiceName() returns "SurfaceFlinger"

// flinger is a strong pointer to the SurfaceFlinger object

sm->addService(String16(SurfaceFlinger::getServiceName()), flinger, false);

......

}

Next, BpServiceManager's addService() method:

virtual status_t addService(const String16& name, const sp<IBinder>& service,

bool allowIsolated)

{

Parcel data, reply;

// Pack the IPC data into data, aligned to 4 bytes

// IServiceManager::getInterfaceDescriptor() returns "android.os.IServiceManager"

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

// remote() returns the BpBinder object

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION, data, &reply);

return err == NO_ERROR ? reply.readExceptionCode() : err;

}

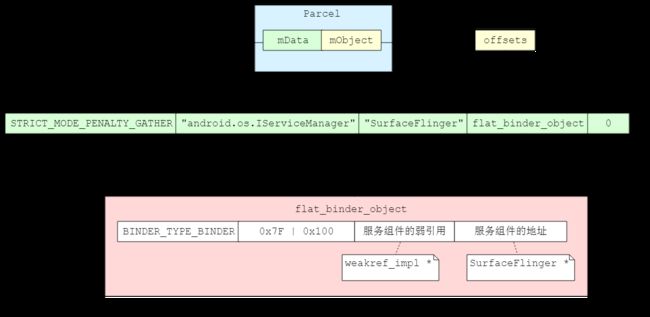

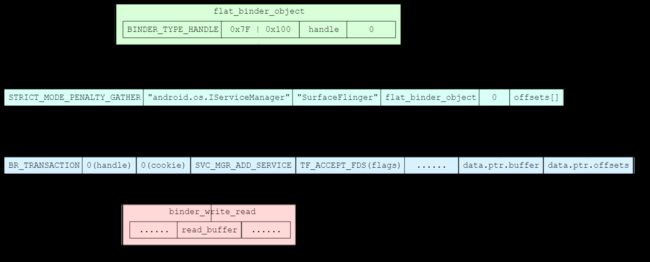

addService() first packs the IPC data and then calls BpBinder's transact() method. The packed IPC data is shown in the accompanying figure.
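To make the 4-byte alignment of the packed data concrete, here is a much-simplified model of the write path. It is a sketch under stated assumptions, not Parcel itself: `MiniParcel` and `pad4()` are hypothetical names, and the string layout (32-bit length, UTF-16 code units, a terminating zero, then zero padding) reflects my understanding of how a 16-bit string is laid out rather than anything shown in the listing.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Rounds n up to the next multiple of 4.
constexpr std::size_t pad4(std::size_t n) {
    return (n + 3) & ~static_cast<std::size_t>(3);
}

// Hypothetical, heavily reduced stand-in for a Parcel data buffer.
class MiniParcel {
public:
    void writeInt32(std::int32_t v) {
        const auto* p = reinterpret_cast<const std::uint8_t*>(&v);
        mData.insert(mData.end(), p, p + sizeof(v));
    }

    // Assumed layout: length prefix, code units, terminating 0, zero padding.
    void writeString16(const std::u16string& s) {
        writeInt32(static_cast<std::int32_t>(s.size()));
        const std::size_t bytes = (s.size() + 1) * sizeof(char16_t);
        const std::size_t start = mData.size();
        mData.resize(start + pad4(bytes), 0);  // zero-fills terminator and padding
        std::memcpy(mData.data() + start, s.data(), s.size() * sizeof(char16_t));
        assert(mData.size() % 4 == 0);
    }

    std::size_t size() const { return mData.size(); }

private:
    std::vector<std::uint8_t> mData;
};
```

Under these assumptions, writing the 14-character name u"SurfaceFlinger" takes 4 bytes for the length plus 32 bytes for the padded characters, so every subsequent field still starts on a 4-byte boundary.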

Next, BpBinder's transact() method:

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

// flags defaults to 0

// mAlive indicates whether the server side is still alive

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

Next, IPCThreadState's transact() method:

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err = data.errorCheck();

flags |= TF_ACCEPT_FDS;

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "

<< handle << " / code " << TypeCode(code) << ": "

<< indent << data << dedent << endl;

}

if (err == NO_ERROR) {

LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),

(flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");

// Build mOut, the IPC data that is sent to the binder driver

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);

}

......

// Send the data to the binder driver and wait for the reply

if ((flags & TF_ONE_WAY) == 0) {

#if 0

if (code == 4) { // relayout

ALOGI(">>>>>> CALLING transaction 4");

} else {

ALOGI(">>>>>> CALLING transaction %d", code);

}

#endif

// Not ONE_WAY, i.e. synchronous IPC

if (reply) {

// reply is non-null here

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

......

}

......

}

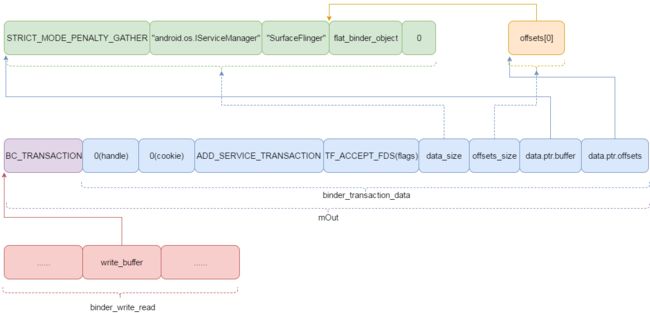

In the end, the IPC data in mOut that is sent to the binder driver has the format shown in the accompanying figure.

Next, the implementation of waitForResponse():

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

// Send the data to the binder driver

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

......

Next, the implementation of talkWithDriver():

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

// The read buffer is empty, so the data returned by the binder driver must be read

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

......

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

// Records how much of write_buffer the binder driver consumed

bwr.write_consumed = 0;

// Records how much of read_buffer the binder driver filled

bwr.read_consumed = 0;

do {

......

#if defined(__ANDROID__)

// Send the commands to the binder driver via ioctl

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

......

} while (err == -EINTR);

......

if (err >= NO_ERROR) {

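// The driver consumed some of the written data; drop the consumed part from mOut (or clear mOut if everything was consumed)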

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

// Data returned by the binder driver

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

......

}

......

}

Next, how the binder driver forwards the ADD_SERVICE request to ServiceManager.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

// arg is the user-space address of the binder_write_read

void __user *ubuf = (void __user *)arg;

......

// Get the binder_thread for the current thread

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ:

// Call binder_ioctl_write_read to handle BINDER_WRITE_READ

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

......

}

......

}

Next, the implementation of binder_ioctl_write_read():

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto out;

}

// Copy the user-space binder_write_read into kernel space

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

......

if (bwr.write_size > 0) {

// Send the IPC data

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

if (bwr.read_size > 0) {

// Return IPC data

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

......

// Copy the kernel binder_write_read back to user space

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

out:

return ret;

}

Next, the implementation of binder_thread_write():

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

// Read cmd from user space; here it is BC_TRANSACTION

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

trace_binder_command(cmd);

// Update the binder driver statistics

if (_IOC_NR(cmd) < ARRAY_SIZE(binder_stats.bc)) {

// Driver-wide statistics

binder_stats.bc[_IOC_NR(cmd)]++;

// Per-process statistics

proc->stats.bc[_IOC_NR(cmd)]++;

// Per-thread statistics

thread->stats.bc[_IOC_NR(cmd)]++;

}

switch (cmd) {

......

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

// Copy the user-space binder_transaction_data into the kernel

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

// Deliver the IPC data to the target process

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

Next, the implementation of binder_transaction():

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

struct binder_transaction_log_entry *e;

uint32_t return_error;

// Binder transaction log

e = binder_transaction_log_add(&binder_transaction_log);

// Non-ONE_WAY BC_TRANSACTION: 0

// ONE_WAY BC_TRANSACTION: 1

// BC_REPLY: 2

e->call_type = reply ? 2 : !!(tr->flags & TF_ONE_WAY);

// Process id and thread id of the binder IPC initiator

e->from_proc = proc->pid;

e->from_thread = thread->pid;

// Binder reference handle

e->target_handle = tr->target.handle;

// Size of the data sent to the target process, and size in bytes of the binder object offsets array

e->data_size = tr->data_size;

e->offsets_size = tr->offsets_size;

// reply is 0 here

if (reply) {

......

} else {

if (tr->target.handle) {

......

} else {

// Handle 0 refers to the binder node binder_context_mgr_node

target_node = binder_context_mgr_node;

if (target_node == NULL) {

return_error = BR_DEAD_REPLY;

goto err_no_context_mgr_node;

}

}

......

// The binder_proc that owns the binder node, i.e. the ServiceManager process

target_proc = target_node->proc;

......

}

if (target_thread) {

// Handled by a specific binder thread

e->to_thread = target_thread->pid;

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

// Handled by the target process (any of its binder threads)

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

// Process id of the binder IPC target

e->to_proc = target_proc->pid;

// Allocate the binder_transaction

t = kzalloc(sizeof(*t), GFP_KERNEL);

if (t == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_t_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION);

// Allocate the binder_work

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

if (tcomplete == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_tcomplete_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

// Binder debug id

t->debug_id = ++binder_last_id;

e->debug_id = t->debug_id;

.......

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

// Target process

t->to_proc = target_proc;

// Target thread; may be NULL

t->to_thread = target_thread;

t->code = tr->code;

t->flags = tr->flags;

t->priority = task_nice(current);

trace_binder_transaction(reply, t, target_node);

// Allocate a binder_buffer in the target process

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

if (t->buffer == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_alloc_buf_failed;

}

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

trace_binder_transaction_alloc_buf(t->buffer);

if (target_node)

binder_inc_node(target_node, 1, 0, NULL);

offp = (binder_size_t *)(t->buffer->data +

ALIGN(tr->data_size, sizeof(void *)));

// Copy the sender's user-space data for the target process into the binder_buffer

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

binder_user_error("%d:%d got transaction with invalid data ptr\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

// Copy the user-space binder object offsets into the binder_buffer

if (copy_from_user(offp, (const void __user *)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size)) {

binder_user_error("%d:%d got transaction with invalid offsets ptr\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

......

off_end = (void *)offp + tr->offsets_size;

// Walk the flat_binder_object entries in the target process's binder_buffer and rewrite them

for (; offp < off_end; offp++) {

struct flat_binder_object *fp;

if (*offp > t->buffer->data_size - sizeof(*fp) ||

t->buffer->data_size < sizeof(*fp) ||

!IS_ALIGNED(*offp, sizeof(u32))) {

binder_user_error("%d:%d got transaction with invalid offset, %lld\n",

proc->pid, thread->pid, (u64)*offp);

return_error = BR_FAILED_REPLY;

goto err_bad_offset;

}

fp = (struct flat_binder_object *)(t->buffer->data + *offp);

// The flat_binder_object passed here has type BINDER_TYPE_BINDER

switch (fp->type) {

case BINDER_TYPE_BINDER:

case BINDER_TYPE_WEAK_BINDER: {

struct binder_ref *ref;

// fp->binder is the weak reference of the service component behind the binder node

// Look up the binder_node; the service is only now being registered, so this returns NULL

struct binder_node *node = binder_get_node(proc, fp->binder);

if (node == NULL) {

// Create a binder_node for the service component

// fp->cookie is the user-space address of the service component

node = binder_new_node(proc, fp->binder, fp->cookie);

if (node == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_new_node_failed;

}

node->min_priority = fp->flags & FLAT_BINDER_FLAG_PRIORITY_MASK;

node->accept_fds = !!(fp->flags & FLAT_BINDER_FLAG_ACCEPTS_FDS);

}

......

// Get, or create, the binder_ref in the target process that corresponds to the binder_node

ref = binder_get_ref_for_node(target_proc, node);

if (ref == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_get_ref_for_node_failed;

}

// Rewrite the flat_binder_object

if (fp->type == BINDER_TYPE_BINDER)

fp->type = BINDER_TYPE_HANDLE;

else

fp->type = BINDER_TYPE_WEAK_HANDLE;

fp->binder = 0;

fp->handle = ref->desc;

fp->cookie = 0;

binder_inc_ref(ref, fp->type == BINDER_TYPE_HANDLE,

&thread->todo);

trace_binder_transaction_node_to_ref(t, node, ref);

binder_debug(BINDER_DEBUG_TRANSACTION,

" node %d u%016llx -> ref %d desc %d\n",

node->debug_id, (u64)node->ptr,

ref->debug_id, ref->desc);

} break;

......

}

}

if (reply) {

BUG_ON(t->buffer->async_transaction != 0);

binder_pop_transaction(target_thread, in_reply_to);

} else if (!(t->flags & TF_ONE_WAY)) {

BUG_ON(t->buffer->async_transaction != 0);

t->need_reply = 1;

// Push the binder_transaction onto the sender's transaction_stack

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

} else {

BUG_ON(target_node == NULL);

BUG_ON(t->buffer->async_transaction != 1);

if (target_node->has_async_transaction) {

target_list = &target_node->async_todo;

target_wait = NULL;

} else

target_node->has_async_transaction = 1;

}

t->work.type = BINDER_WORK_TRANSACTION;

// Queue it on the target process's (or thread's) list of pending work

list_add_tail(&t->work.entry, target_list);

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

// Queue it on the sender's list of pending work

list_add_tail(&tcomplete->entry, &thread->todo);

if (target_wait) {

// Wake up the target process (or thread)

if (reply || !(t->flags & TF_ONE_WAY))

wake_up_interruptible_sync(target_wait);

else

wake_up_interruptible(target_wait);

}

return;

......

}
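The key step in binder_transaction() for service registration is rewriting a BINDER_TYPE_BINDER object, which is only meaningful in the sender, into a BINDER_TYPE_HANDLE that is valid in the target process. The following user-space model illustrates the idea. All names (`RefTable`, `FlatObject`, `translate()`) are hypothetical, and the descriptor allocation rule (smallest unused value starting at 1, with 0 reserved for the context manager) is an assumption of this sketch, not something the listing above shows.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical model of one process's reference table inside the driver.
class RefTable {
public:
    // Returns the descriptor for `node`, allocating one on first use.
    std::uint32_t refForNode(std::uintptr_t node) {
        auto it = mByNode.find(node);
        if (it != mByNode.end()) return it->second;
        std::uint32_t desc = 1;  // assumption: 0 is reserved for the context manager
        while (mByDesc.count(desc) != 0) ++desc;
        mByNode[node] = desc;
        mByDesc[desc] = node;
        return desc;
    }

private:
    std::map<std::uintptr_t, std::uint32_t> mByNode;
    std::map<std::uint32_t, std::uintptr_t> mByDesc;
};

enum class ObjType { Binder, Handle };

// Hypothetical flattened object: a local address or a remote handle.
struct FlatObject {
    ObjType type;
    std::uintptr_t binder;  // meaningful while type == Binder
    std::uint32_t handle;   // meaningful while type == Handle
};

// Rewrites a sender-side Binder object into a Handle for the target process.
void translate(FlatObject& obj, RefTable& targetRefs) {
    if (obj.type != ObjType::Binder) return;
    assert(obj.binder != 0);
    obj.handle = targetRefs.refForNode(obj.binder);
    obj.type = ObjType::Handle;
    obj.binder = 0;  // the sender's address must not leak to the receiver
}
```

In this model the receiving process only ever sees a small integer, which corresponds to the handle value that ServiceManager later reads with bio_get_ref() and stores in its service list.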

Next, how ServiceManager handles the ADD_SERVICE request.

ServiceManager handles the SVC_MGR_ADD_SERVICE (3) request

ServiceManager's binder thread normally sleeps in binder_thread_read(). The analysis below starts from the point at which that thread is woken up.

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

......

if (wait_for_proc_work)

// If the thread was waiting for process work, decrement the idle binder thread count after waking up

proc->ready_threads--;

thread->looper &= ~BINDER_LOOPER_STATE_WAITING;

......

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

// Fetch the next pending work item

if (!list_empty(&thread->todo)) {

w = list_first_entry(&thread->todo, struct binder_work,

entry);

} else if (!list_empty(&proc->todo) && wait_for_proc_work) {

w = list_first_entry(&proc->todo, struct binder_work,

entry);

} else {

/* no data added */

if (ptr - buffer == 4 &&

!(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN))

goto retry;

break;

}

if (end - ptr < sizeof(tr) + 4)

break;

switch (w->type) {

// The work item type here is BINDER_WORK_TRANSACTION

case BINDER_WORK_TRANSACTION: {

// Get the enclosing binder_transaction

t = container_of(w, struct binder_transaction, work);

} break;

......

}

......

if (t->buffer->target_node) {

// Target binder node; here it is binder_context_mgr_node

struct binder_node *target_node = t->buffer->target_node;

// Weak reference of the target service

tr.target.ptr = target_node->ptr;

// Address of the target service

tr.cookie = target_node->cookie;

t->saved_priority = task_nice(current);

if (t->priority < target_node->min_priority &&

!(t->flags & TF_ONE_WAY))

binder_set_nice(t->priority);

else if (!(t->flags & TF_ONE_WAY) ||

t->saved_priority > target_node->min_priority)

binder_set_nice(target_node->min_priority);

cmd = BR_TRANSACTION;

} else {

tr.target.ptr = 0;

tr.cookie = 0;

cmd = BR_REPLY;

}

// code is ADD_SERVICE_TRANSACTION (3)

tr.code = t->code;

tr.flags = t->flags;

tr.sender_euid = from_kuid(current_user_ns(), t->sender_euid);

if (t->from) {

struct task_struct *sender = t->from->proc->tsk;

tr.sender_pid = task_tgid_nr_ns(sender,

task_active_pid_ns(current));

} else {

tr.sender_pid = 0;

}

// Size of the received IPC data

tr.data_size = t->buffer->data_size;

// Size in bytes of the received binder object offsets array

tr.offsets_size = t->buffer->offsets_size;

// User-space address of the received IPC data

tr.data.ptr.buffer = (binder_uintptr_t)(

(uintptr_t)t->buffer->data +

proc->user_buffer_offset);

// User-space address of the received binder object offsets

tr.data.ptr.offsets = tr.data.ptr.buffer +

ALIGN(t->buffer->data_size,

sizeof(void *));

// Copy BR_TRANSACTION to the user-space read_buffer

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

// Copy the binder_transaction_data to the user-space read_buffer

if (copy_to_user(ptr, &tr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

trace_binder_transaction_received(t);

binder_stat_br(proc, thread, cmd);

......

// Remove the binder_work from the list of pending work items

list_del(&t->work.entry);

t->buffer->allow_user_free = 1;

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {

// Push the binder_transaction onto the transaction_stack

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t;

} else {

t->buffer->transaction = NULL;

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

}

break;

}

done:

// Record the size of the data returned by the binder driver

*consumed = ptr - buffer;

......

}

Control then returns to user space. The following analyzes the work done after that return.

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

uint32_t readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

// ioctl returns to user space here

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

......

// Handle the client request

res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

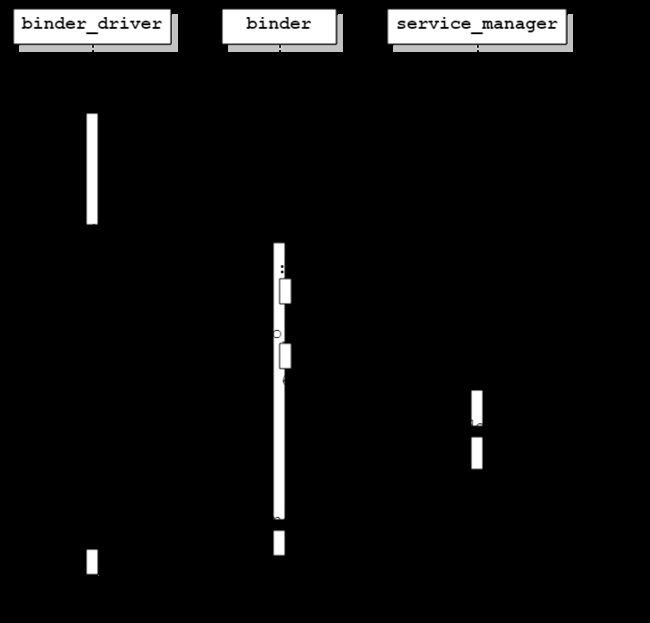

Before analyzing binder_parse(), first look at the contents of the IPC data that ServiceManager receives, as shown in the accompanying figure.

Next, the implementation of binder_parse():

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

......

// cmd is BR_TRANSACTION (the handling of BR_NOOP is ignored here)

switch(cmd) {

......

case BR_TRANSACTION: {

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if ((end - ptr) < sizeof(*txn)) {

ALOGE("parse: txn too small!\n");

return -1;

}

binder_dump_txn(txn);

// func is svcmgr_handler

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

// reply holds the IPC data returned to the client

bio_init(&reply, rdata, sizeof(rdata), 4);

// msg holds the IPC data sent by the client

bio_init_from_txn(&msg, txn);

// Call svcmgr_handler to handle the client request

res = func(bs, txn, &msg, &reply);

if (txn->flags & TF_ONE_WAY) {

// ONE_WAY needs no reply; free the binder_buffer

binder_free_buffer(bs, txn->data.ptr.buffer);

} else {

// Not ONE_WAY; a reply is required

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

}

}

ptr += sizeof(*txn);

break;

}

......

}

}

......

}
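binder_parse() treats the read buffer as a sequence of records, each a 32-bit command followed by a command-specific payload. The sketch below shows that walking pattern on an ordinary byte buffer. The command codes, the `Txn` payload, and the helper names `put32()` and `parse()` are all hypothetical; the real BR_* values and binder_transaction_data layout come from the binder headers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical command codes for this sketch only.
constexpr std::uint32_t kNoop = 1;
constexpr std::uint32_t kTransaction = 2;

// Hypothetical fixed-size payload that follows kTransaction.
struct Txn {
    std::uint32_t code;
    std::uint32_t dataSize;
};

// Appends one 32-bit value to a byte buffer (used to build test input).
void put32(std::vector<std::uint8_t>& buf, std::uint32_t v) {
    const auto* p = reinterpret_cast<const std::uint8_t*>(&v);
    buf.insert(buf.end(), p, p + sizeof(v));
}

// Walks [cmd][payload] records. Appends each transaction code to `codes`.
// Returns 0 on success, -1 on a truncated record or an unknown command.
int parse(const std::uint8_t* ptr, std::size_t size,
          std::vector<std::uint32_t>& codes) {
    assert(ptr != nullptr || size == 0);
    const std::uint8_t* end = ptr + size;
    while (ptr < end) {
        if (static_cast<std::size_t>(end - ptr) < sizeof(std::uint32_t)) return -1;
        std::uint32_t cmd;
        std::memcpy(&cmd, ptr, sizeof(cmd));
        ptr += sizeof(cmd);
        switch (cmd) {
        case kNoop:
            break;  // no payload
        case kTransaction: {
            if (static_cast<std::size_t>(end - ptr) < sizeof(Txn)) return -1;
            Txn txn;
            std::memcpy(&txn, ptr, sizeof(txn));
            ptr += sizeof(txn);
            codes.push_back(txn.code);
            break;
        }
        default:
            return -1;
        }
    }
    return 0;
}
```

A buffer holding a no-op followed by one transaction with code 3 parses to a single entry, which corresponds to the BR_NOOP plus BR_TRANSACTION pair that ServiceManager receives for ADD_SERVICE_TRANSACTION.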

Next, the implementation of svcmgr_handler():

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

size_t len;

uint32_t handle;

uint32_t strict_policy;

int allow_isolated;

......

// The policy is usually STRICT_MODE_PENALTY_GATHER

strict_policy = bio_get_uint32(msg);

// "android.os.IServiceManager"

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

// Check the IServiceManager interface descriptor string

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n", str8(s, len));

return -1;

}

......

switch(txn->code) {

......

case SVC_MGR_ADD_SERVICE:

// Get the name of the service being registered

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

// handle is the binder reference descriptor (it maps to the binder node of the specific service)

handle = bio_get_ref(msg);

// Whether isolated processes may access the service

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

// Register the service

if (do_add_service(bs, s, len, handle, txn->sender_euid,

allow_isolated, txn->sender_pid))

return -1;

break;

......

}

......

}

Next, the implementation of do_add_service():

int do_add_service(struct binder_state *bs,

const uint16_t *s, size_t len,

uint32_t handle, uid_t uid, int allow_isolated,

pid_t spid)

{

struct svcinfo *si;

......

// Check whether the caller is permitted to register the service

if (!svc_can_register(s, len, spid, uid)) {

ALOGE("add_service('%s',%x) uid=%d - PERMISSION DENIED\n",

str8(s, len), handle, uid);

return -1;

}

// Check whether the service is already registered (find_svc could be implemented more efficiently)

si = find_svc(s, len);

if (si) {

// Already registered; update the handle

if (si->handle) {

ALOGE("add_service('%s',%x) uid=%d - ALREADY REGISTERED, OVERRIDE\n",

str8(s, len), handle, uid);

svcinfo_death(bs, si);

}

si->handle = handle;

} else {

// Allocate a svcinfo for the new service

si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));

if (!si) {

ALOGE("add_service('%s',%x) uid=%d - OUT OF MEMORY\n",

str8(s, len), handle, uid);

return -1;

}

// Record the service information

si->handle = handle;

si->len = len;

memcpy(si->name, s, (len + 1) * sizeof(uint16_t));

si->name[len] = '\0';

si->death.func = (void*) svcinfo_death;

si->death.ptr = si;

si->allow_isolated = allow_isolated;

// Insert at the head of the global service list

si->next = svclist;

svclist = si;

}

// Increment the binder reference count

binder_acquire(bs, handle);

// Register for the service's death notification

binder_link_to_death(bs, handle, &si->death);

return 0;

}
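Stripped of permission checks, reference counting, and death notifications, do_add_service() maintains a mapping from service name to handle in which a repeated registration overrides the old handle. A minimal sketch of just that bookkeeping follows. `ServiceTable` and its methods are hypothetical names, and `std::map` is used here for brevity in place of the singly linked svclist.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical in-memory registry standing in for servicemanager's svclist.
class ServiceTable {
public:
    // Registers `name`, or overrides its handle if it is already present.
    // Returns true if an existing entry was overridden.
    bool add(const std::u16string& name, std::uint32_t handle) {
        assert(!name.empty());
        auto it = mHandles.find(name);
        if (it != mHandles.end()) {
            it->second = handle;  // "ALREADY REGISTERED, OVERRIDE" path
            return true;
        }
        mHandles.emplace(name, handle);
        return false;
    }

    // Returns the registered handle, or 0 if the name is unknown.
    std::uint32_t find(const std::u16string& name) const {
        auto it = mHandles.find(name);
        return it == mHandles.end() ? 0 : it->second;
    }

    std::size_t size() const { return mHandles.size(); }

private:
    std::map<std::u16string, std::uint32_t> mHandles;
};
```

Registering u"SurfaceFlinger" twice with different handles leaves one entry holding the newer handle, which is the behavior the override branch in the listing implements.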

At this point most of the service registration work is done. The BC_REPLY and BR_REPLY paths are comparatively simple and are not discussed further.