- 调试Hadoop源代码

一张假钞

hadoopeclipse大数据

个人博客地址:调试Hadoop源代码|一张假钞的真实世界Hadoop版本Hadoop2.7.3调试模式下启动HadoopNameNode在${HADOOP_HOME}/etc/hadoop/hadoop-env.sh中设置NameNode启动的JVM参数,如下:exportHADOOP_NAMENODE_OPTS="-Xdebug-Xrunjdwp:transport=dt_socket,addr

- Yarn介绍 - 大数据框架

why do not

大数据hadoop

YARN的概述YARN是一个资源调度平台,负责为运算程序提供服务器运算资源,相当于一个分布式的操作系统平台,而MapReduce等运算程序则相当于运行于操作系统之上的应用程序YARN是Hadoop2.x版本中的一个新特性。它的出现其实是为了解决第一代MapReduce编程框架的不足,提高集群环境下的资源利用率,这些资源包括内存,磁盘,网络,IO等。Hadoop2.X版本中重新设计的这个YARN集群

- 大数据知识总结(三):Hadoop之Yarn重点架构原理

Lansonli

大数据大数据hadoop架构Yarn

文章目录Hadoop之Yarn重点架构原理一、Yarn介绍二、Yarn架构三、Yarn任务运行流程四、Yarn三种资源调度器特点及使用场景Hadoop之Yarn重点架构原理一、Yarn介绍ApacheHadoopYarn(YetAnotherReasourceNegotiator,另一种资源协调者)是Hadoop2.x版本后使用的资源管理器,可以为上层应用提供统一的资源管理平台。二、Yarn架构Y

- 《Hadoop系列》Docker安装Hadoop

DATA数据猿

HadoopDockerdockerhadoop

文章目录Docker安装Hadoop1安装docker1.1添加docker到yum源1.2安装docker2安装Hadoop2.1使用docker自带的hadoop安装2.2免密操作2.2.1master节点2.2.2slave1节点2.2.3slave2节点2.2.4将三个容器中的authorized_keys拷贝到本地合并2.2.5将本地authorized_keys文件分别拷贝到3个容器中

- Spark整合hive(保姆级教程)

万家林

sparkhivesparkhadoop

准备工作:1、需要安装配置好hive,如果不会安装可以跳转到Linux下编写脚本自动安装hive2、需要安装配置好spark,如果不会安装可以跳转到Spark安装与配置(单机版)3、需要安装配置好Hadoop,如果不会安装可以跳转到Linux安装配置Hadoop2.6操作步骤:1、将hive的conf目录下的hive-site.xml拷贝到spark的conf目录下(也可以建立软连接)cp/opt

- hadoop-yarn资源分配介绍-以及推荐常用优化参数

Winhole

hadoopLinux

根据网上的学习,结合工作进行的一个整理。如果有什么不正确的欢迎大家一起交流学习~Yarn前言作为Hadoop2.x的一部分,YARN采用MapReduce中的资源管理功能并对其进行打包,以便新引擎可以使用它们。这也简化了MapReduce,使其能够做到最好,处理数据。使用YARN,您现在可以在Hadoop中运行多个应用程序,所有应用程序都共享一个公共资源管理。那资源是有限的,YARN如何识别资源并

- Hadoop手把手逐级搭建 第二阶段: Hadoop完全分布式(full)

郑大能

前置步骤:1).第一阶段:Hadoop单机伪分布(single)0.步骤概述1).克隆4台虚拟机2).为完全分布式配置ssh免密3).将hadoop配置修改为完全分布式4).启动完全分布式集群5).在完全分布式集群上测试wordcount程序1.克隆4台虚拟机1.1使用hadoop0克隆4台虚拟机hadoop1,hadoop2,hadoop3,hadoop41.1.0克隆虚拟机hadoop11.1

- 【解决方案】pyspark 初次连接mongo 时报错Class not found exception:com.mongodb.spark.sql.DefaultSource

能白话的程序员♫

Sparkspark

部分报错如下:Traceback(mostrecentcalllast): File"/home/cisco/spark-mongo-test.py",line7,in df=spark.read.format("com.mongodb.spark.sql.DefaultSource").load() File"/home/cisco/spark-2.4.1-bin-hadoop2.

- Hadoop-Yarn-ResourceManagerHA

隔着天花板看星星

hadoop大数据分布式

在这里先给屏幕面前的你送上祝福,祝你在未来一年:技术步步高升、薪资节节攀升,身体健健康康,家庭和和美美。一、介绍在Hadoop2.4之前,ResourceManager是YARN集群中的单点故障ResourceManagerHA是通过Active/Standby体系结构实现的,在任何时候其中一个RM都是活动的,并且一个或多个RM处于备用模式,等待在活动发生任何事情时接管。二、架构官网的架构图如下:

- java大数据hadoop2.9.2 hive操作

crud-boy

java大数据大数据hivehadoop

1、创建常规数据库表(1)创建表createtablet_stu2(idint,namestring,hobbymap)rowformatdelimitedfieldsterminatedby','collectionitemsterminatedby'-'mapkeysterminatedby':';(2)创建文件student.txt1,zhangsan,唱歌:非常喜欢-跳舞:喜欢-游泳:一般

- java大数据hadoop2.9.2 Flume安装&操作

crud-boy

java大数据大数据flume

1、flume安装(1)解压缩tar-xzvfapache-flume-1.9.0-bin.tar.gzrm-rfapache-flume-1.9.0-bin.tar.gzmv./apache-flume-1.9.0-bin//usr/local/flume(2)配置cd/usr/local/flume/confcp./flume-env.sh.template./flume-env.shvifl

- Hadoop2.7配置

不会吐丝的蜘蛛侠。

Hadoophadoop大数据hdfs

core-site.xmlfs.defaultFShdfs://bigdata/ha.zookeeper.quorum192.168.56.70:2181,192.168.56.71:2181,192.168.56.72:2181-->hadoop.tmp.dir/export/data/hadoop/tmpfs.trash.interval1440io.file.buffer.size13107

- 现成Hadoop安装和配置,图文手把手交你

叫我小唐就好了

一些好玩的事hadoop大数据分布式课程设计运维

为了可以更加快速的可以使用Hadoop,便写了这篇文章,想尝试自己配置一下的可以参考从零开始配置Hadoop,图文手把手教你,定位错误资源1.两台已经配置好的hadoop2.xshell+Vmware链接:https://pan.baidu.com/s/1oX35G8CVCOzVqmtjdwrfzQ?pwd=3biz提取码:3biz--来自百度网盘超级会员V4的分享两台虚拟机用户名和密码均为roo

- 如何对HDFS进行节点内(磁盘间)数据平衡

格格巫 MMQ!!

hadoophdfshdfshadoop大数据

1.文档编写目的当HDFS的DataNode节点挂载多个磁盘时,往往会出现两种数据不均衡的情况:1.不同DataNode节点间数据不均衡;2.挂载数据盘的磁盘间数据不均衡。特别是这种情况:当DataNode原来是挂载了几个数据盘,当磁盘占用率很高之后,再挂载新的数据盘。由于Hadoop2.x版本并不支持HDFS的磁盘间数据均衡,因此,会造成老数据磁盘占用率很高,新挂载的数据盘几乎很空。在这种情况下

- spark运维问题记录

lishengping_max

Sparkspark

环境:spark-2.1.0-bin-hadoop2.71.Spark启动警告:neitherspark.yarn.jarsnotspark.yarn.archiveisset,fallingbacktouploadinglibrariesunderSPARK_HOME原因:如果没设置spark.yarn.jars,每次提交到yarn,都会把$SPARK_HOME/jars打包成zip文件上传到H

- 大数据组件部署下载链接

运维道上奔跑者

大数据zookeeperhbasekafkahadoophive

Hadoop2.7下载连接:https://archive.apache.org/dist/hadoop/core/hadoop-2.7.6/Hive2.3.2下载连接:http://archive.apache.org/dist/hive/hive-2.3.2/Zookeeper下载连接:https://archive.apache.org/dist/zookeeper/zookeeper-3.

- 【大数据开发运维解决方案】Hadoop+Hive+HBase+Kylin 伪分布式安装指南

运维道上奔跑者

大数据hadoop分布式

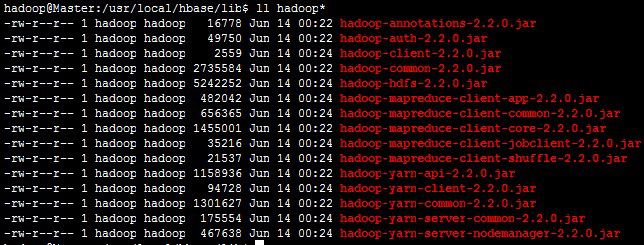

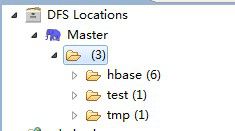

Hadoop2.7.6+Mysql5.7+Hive2.3.2+Hbase1.4.9+Kylin2.4单机伪分布式安装文档注意:####################################################################本文档已经有了最新版本,主要改动地方为:1、zookeeper改为使用安装的外置zookeeper而非hbase自带zookeeper,新

- Hadoop2.7.6+Mysql5.7+Hive2.3.2+zookeeper3.4.6+kafka2.11+Hbase1.4.9+Sqoop1.4.7+Kylin2.4单机伪分布式安装及官方案例测

运维道上奔跑者

分布式hbasezookeeperhadoop

####################################################################最新消息:关于spark和Hudi的安装部署文档,本人已经写完,连接:Hadoop2.7.6+Spark2.4.4+Scala2.11.12+Hudi0.5.1单机伪分布式安装注意:本篇文章是在本人写的Hadoop+Hive+HBase+Kylin伪分布式安装指南

- hadoop2.0之环境搭建详细流程

hhf_Engineer

1、在安装hadoop2.0之前,需要准备好以下软件(如下图1)图1:然后将这两个软件共享到centos上(如下图2红箭头指向和图3红箭头指向所示)在vm这上面有个虚拟机,点击虚拟机后有个硬件和选项,点选项,下面有个共享文件夹。图2:点击虚拟机那个地方图3:添加上去以后按确定按钮即可!2、为了有个集群的概念,我们把一台linux机器复制成有三份!如下图4所示:注:在复制前,必须要把linux的机器

- apache hadoop 2.4.0 64bit 在windows8.1下直接安装指南(无需虚拟机和cygwin)

夜魔009

技术windows8hadoop64bit库hdfs

工作需要,要开始搞hadoop了,又是大数据,自己感觉大数据、云,只是ERP、SOAP风潮之后与智能地球一起诞生的概念炒作。不过Apache是个神奇的组织,Java如果没有它也不会现在如火中天。言归正传:首先需要下载Apachehadoop2.4.0的tar.gz包,到本地解压缩到某个盘下,注意路径里不要带空格。否则你配置文件里需要用windows8.3格式的路径!第二确保操作系统是64bit,已

- docker搭建单机hadoop

阿桔是只猫

大数据hadoopdocker大数据

docker搭建单机hadoop前言一、docker是什么?二、hadoop是什么?三、使用步骤1.下载jdkhadoop2.编写Dockerfile3.构建镜像4.运行镜像5.创建客户端前言在华为云上使用docker搭建一个简单的hadoop单机环境。一、docker是什么?Docker是一个开源的应用容器引擎。开发者将需要的东西整理成镜像文件,然后再容器化这些镜像文件,容器之前相互隔离,互不影

- Hadoop-生产调优(更新中)

OnePandas

Hadoophadoop大数据分布式

第1章HDFS-核心参数1.1NameNode内存生产配置1)NameNode内存计算每个文件块大概占用150byte,一台服务器128G内存为例,能存储多少文件块呢?128*1024*1024*1024/150byte≈9.1亿GMBKBByte2)Hadoop2.x系列,配置NameNode内存NameNode内存默认2000m,如果内存服务器内存4G,NameNode内存可以配置3g。在ha

- 大数据-Hadoop概论

Mr.史

Hadoophadoop大数据

文章目录大数据概论1、大数据概念2、大数据特点1、Volume(大量)2、Velocity(高速)3、Variety(多样)4、Value(低价值密度)3、大数据应用场景4、大数据部门业务流程分析5、大数据部门组织机构Hadoop1、Hadoop是什么?2、Hadoop发展史3、Hadoop三大发行版本1、ApacheHadoop2、ClouderaHadoop3、HortonworksHadoo

- Elk运维-Elastic7.6.1集群安装部署

消逝的bug

运维elk数据库

集群安装结果说明实例配置安装软件安装账号hadoop12C4G磁盘:50G云服务器elasticsearchkibanardhadoop22C4G磁盘:50G云服务器elasticsearchrdhadoop32C4G磁盘:50G云服务器elasticsearchrd整个安装过程使用的账号:root、rd(自己新建的账号)安装包下载:下载包中包含esfilebeatkibanaik等相关软件链接:

- 记一次Flink自带jar包与第三方jar包依赖冲突解决

一枚小刺猬

flinkflinkjarhadoop

flink版本1.14.5hadoop2.6.0为了实现flink读取hive数据写入第三方的数据库,写入数据库需要调用数据库的SDK,当前SDK依赖的protobuf-java-3.11.0.jar,guava-29.0-android.jar与flink中lib下的部分jar包冲突,flink与hadoop、hive编译的jar中使用的guava,protobuf都要低于第三方sdk,因此会遇

- [SparkSQL] Rdd转化DataFrame 通过StructType为字段添加Schema

林沐之森

1、开发环境spark-2.1.0-bin-hadoop2.62、Rdd转换成DataFrame,为字段添加列信息参数nullable说明:Indicatesifvaluesofthisfieldcanbenullvaluesvalschema=StructType(List(StructField("name",StringType,nullable=false),StructField("ag

- YARN 工作原理

无羡爱诗诗

1、Hadoop2新增了YARN,YARN的引入主要有两个方面的变更:其一、HDFS的NameNode可以以集群的方式部署,增强了NameNode的水平扩展能力和高可靠性,水平扩展能力对应HDFSFederation,高可靠性对应HA。其二、MapReduce将Hadoop1时代的JobTracker中的资源管理及任务生命周期管理拆分成两个独立的组件,资源管理对应ResourceManager,任

- Hadoop2.0架构及其运行机制,HA原理

Toner_唐纳

大数据

文章目录一、Hadoop2.0架构1.架构图2.HA1)NameNode主备切换2)watcher监听3)脑裂问题3.组件1.HDFS2.MapReduce3.Yarn1.组件2.调度流程一、Hadoop2.0架构1.架构图以上是hadoop2.0的架构图,根据hadoop1.0的不足,改进而来。1.NameNode节点,由原先的一个变成两个,解决单点故障问题2.JournalNode集群,处理E

- idea上搭建pyspark开发环境

jackyan163

1环境版本说明python版本:Anaconda3.6.5spark版本:spark-2.4.8-bin-hadoop2.7idea版本:2019.32环境变量配置2.1python环境变量配置将python.exe所在的目录配置到path环境变量中2.2spark环境变量配置下载spark安装包,我下载的是spark-2.4.8-bin-hadoop2.7.tgz将安装包解压到一个非中文目录配置

- 指导手册05:MapReduce编程入门

weixin_30655219

大数据

指导手册05:MapReduce编程入门Part1:使用Eclipse创建MapReduce工程操作系统:Centos6.8,hadoop2.6.4情景描述:因为Hadoop本身就是由Java开发的,所以通常也选用Eclipse作为MapReduce的编程工具,本小节将完成Eclipse安装,MapReduce集成环境配置。1.下载与安装Eclipse(1)在官网下载Eclipse安装包“Ecli

- Hadoop(一)

朱辉辉33

hadooplinux

今天在诺基亚第一天开始培训大数据,因为之前没接触过Linux,所以这次一起学了,任务量还是蛮大的。

首先下载安装了Xshell软件,然后公司给了账号密码连接上了河南郑州那边的服务器,接下来开始按照给的资料学习,全英文的,头也不讲解,说锻炼我们的学习能力,然后就开始跌跌撞撞的自学。这里写部分已经运行成功的代码吧.

在hdfs下,运行hadoop fs -mkdir /u

- maven An error occurred while filtering resources

blackproof

maven报错

转:http://stackoverflow.com/questions/18145774/eclipse-an-error-occurred-while-filtering-resources

maven报错:

maven An error occurred while filtering resources

Maven -> Update Proje

- jdk常用故障排查命令

daysinsun

jvm

linux下常见定位命令:

1、jps 输出Java进程

-q 只输出进程ID的名称,省略主类的名称;

-m 输出进程启动时传递给main函数的参数;

&nb

- java 位移运算与乘法运算

周凡杨

java位移运算乘法

对于 JAVA 编程中,适当的采用位移运算,会减少代码的运行时间,提高项目的运行效率。这个可以从一道面试题说起:

问题:

用最有效率的方法算出2 乘以8 等於几?”

答案:2 << 3

由此就引发了我的思考,为什么位移运算会比乘法运算更快呢?其实简单的想想,计算机的内存是用由 0 和 1 组成的二

- java中的枚举(enmu)

g21121

java

从jdk1.5开始,java增加了enum(枚举)这个类型,但是大家在平时运用中还是比较少用到枚举的,而且很多人和我一样对枚举一知半解,下面就跟大家一起学习下enmu枚举。先看一个最简单的枚举类型,一个返回类型的枚举:

public enum ResultType {

/**

* 成功

*/

SUCCESS,

/**

* 失败

*/

FAIL,

- MQ初级学习

510888780

activemq

1.下载ActiveMQ

去官方网站下载:http://activemq.apache.org/

2.运行ActiveMQ

解压缩apache-activemq-5.9.0-bin.zip到C盘,然后双击apache-activemq-5.9.0-\bin\activemq-admin.bat运行ActiveMQ程序。

启动ActiveMQ以后,登陆:http://localhos

- Spring_Transactional_Propagation

布衣凌宇

springtransactional

//事务传播属性

@Transactional(propagation=Propagation.REQUIRED)//如果有事务,那么加入事务,没有的话新创建一个

@Transactional(propagation=Propagation.NOT_SUPPORTED)//这个方法不开启事务

@Transactional(propagation=Propagation.REQUIREDS_N

- 我的spring学习笔记12-idref与ref的区别

aijuans

spring

idref用来将容器内其他bean的id传给<constructor-arg>/<property>元素,同时提供错误验证功能。例如:

<bean id ="theTargetBean" class="..." />

<bean id ="theClientBean" class=&quo

- Jqplot之折线图

antlove

jsjqueryWebtimeseriesjqplot

timeseriesChart.html

<script type="text/javascript" src="jslib/jquery.min.js"></script>

<script type="text/javascript" src="jslib/excanvas.min.js&

- JDBC中事务处理应用

百合不是茶

javaJDBC编程事务控制语句

解释事务的概念; 事务控制是sql语句中的核心之一;事务控制的作用就是保证数据的正常执行与异常之后可以恢复

事务常用命令:

Commit提交

- [转]ConcurrentHashMap Collections.synchronizedMap和Hashtable讨论

bijian1013

java多线程线程安全HashMap

在Java类库中出现的第一个关联的集合类是Hashtable,它是JDK1.0的一部分。 Hashtable提供了一种易于使用的、线程安全的、关联的map功能,这当然也是方便的。然而,线程安全性是凭代价换来的――Hashtable的所有方法都是同步的。此时,无竞争的同步会导致可观的性能代价。Hashtable的后继者HashMap是作为JDK1.2中的集合框架的一部分出现的,它通过提供一个不同步的

- ng-if与ng-show、ng-hide指令的区别和注意事项

bijian1013

JavaScriptAngularJS

angularJS中的ng-show、ng-hide、ng-if指令都可以用来控制dom元素的显示或隐藏。ng-show和ng-hide根据所给表达式的值来显示或隐藏HTML元素。当赋值给ng-show指令的值为false时元素会被隐藏,值为true时元素会显示。ng-hide功能类似,使用方式相反。元素的显示或

- 【持久化框架MyBatis3七】MyBatis3定义typeHandler

bit1129

TypeHandler

什么是typeHandler?

typeHandler用于将某个类型的数据映射到表的某一列上,以完成MyBatis列跟某个属性的映射

内置typeHandler

MyBatis内置了很多typeHandler,这写typeHandler通过org.apache.ibatis.type.TypeHandlerRegistry进行注册,比如对于日期型数据的typeHandler,

- 上传下载文件rz,sz命令

bitcarter

linux命令rz

刚开始使用rz上传和sz下载命令:

因为我们是通过secureCRT终端工具进行使用的所以会有上传下载这样的需求:

我遇到的问题:

sz下载A文件10M左右,没有问题

但是将这个文件A再传到另一天服务器上时就出现传不上去,甚至出现乱码,死掉现象,具体问题

解决方法:

上传命令改为;rz -ybe

下载命令改为:sz -be filename

如果还是有问题:

那就是文

- 通过ngx-lua来统计nginx上的虚拟主机性能数据

ronin47

ngx-lua 统计 解禁ip

介绍

以前我们为nginx做统计,都是通过对日志的分析来完成.比较麻烦,现在基于ngx_lua插件,开发了实时统计站点状态的脚本,解放生产力.项目主页: https://github.com/skyeydemon/ngx-lua-stats 功能

支持分不同虚拟主机统计, 同一个虚拟主机下可以分不同的location统计.

可以统计与query-times request-time

- java-68-把数组排成最小的数。一个正整数数组,将它们连接起来排成一个数,输出能排出的所有数字中最小的。例如输入数组{32, 321},则输出32132

bylijinnan

java

import java.util.Arrays;

import java.util.Comparator;

public class MinNumFromIntArray {

/**

* Q68输入一个正整数数组,将它们连接起来排成一个数,输出能排出的所有数字中最小的一个。

* 例如输入数组{32, 321},则输出这两个能排成的最小数字32132。请给出解决问题

- Oracle基本操作

ccii

Oracle SQL总结Oracle SQL语法Oracle基本操作Oracle SQL

一、表操作

1. 常用数据类型

NUMBER(p,s):可变长度的数字。p表示整数加小数的最大位数,s为最大小数位数。支持最大精度为38位

NVARCHAR2(size):变长字符串,最大长度为4000字节(以字符数为单位)

VARCHAR2(size):变长字符串,最大长度为4000字节(以字节数为单位)

CHAR(size):定长字符串,最大长度为2000字节,最小为1字节,默认

- [强人工智能]实现强人工智能的路线图

comsci

人工智能

1:创建一个用于记录拓扑网络连接的矩阵数据表

2:自动构造或者人工复制一个包含10万个连接(1000*1000)的流程图

3:将这个流程图导入到矩阵数据表中

4:在矩阵的每个有意义的节点中嵌入一段简单的

- 给Tomcat,Apache配置gzip压缩(HTTP压缩)功能

cwqcwqmax9

apache

背景:

HTTP 压缩可以大大提高浏览网站的速度,它的原理是,在客户端请求网页后,从服务器端将网页文件压缩,再下载到客户端,由客户端的浏览器负责解压缩并浏览。相对于普通的浏览过程HTML ,CSS,Javascript , Text ,它可以节省40%左右的流量。更为重要的是,它可以对动态生成的,包括CGI、PHP , JSP , ASP , Servlet,SHTML等输出的网页也能进行压缩,

- SpringMVC and Struts2

dashuaifu

struts2springMVC

SpringMVC VS Struts2

1:

spring3开发效率高于struts

2:

spring3 mvc可以认为已经100%零配置

3:

struts2是类级别的拦截, 一个类对应一个request上下文,

springmvc是方法级别的拦截,一个方法对应一个request上下文,而方法同时又跟一个url对应

所以说从架构本身上 spring3 mvc就容易实现r

- windows常用命令行命令

dcj3sjt126com

windowscmdcommand

在windows系统中,点击开始-运行,可以直接输入命令行,快速打开一些原本需要多次点击图标才能打开的界面,如常用的输入cmd打开dos命令行,输入taskmgr打开任务管理器。此处列出了网上搜集到的一些常用命令。winver 检查windows版本 wmimgmt.msc 打开windows管理体系结构(wmi) wupdmgr windows更新程序 wscrip

- 再看知名应用背后的第三方开源项目

dcj3sjt126com

ios

知名应用程序的设计和技术一直都是开发者需要学习的,同样这些应用所使用的开源框架也是不可忽视的一部分。此前《

iOS第三方开源库的吐槽和备忘》中作者ibireme列举了国内多款知名应用所使用的开源框架,并对其中一些框架进行了分析,同样国外开发者

@iOSCowboy也在博客中给我们列出了国外多款知名应用使用的开源框架。另外txx's blog中详细介绍了

Facebook Paper使用的第三

- Objective-c单例模式的正确写法

jsntghf

单例iosiPhone

一般情况下,可能我们写的单例模式是这样的:

#import <Foundation/Foundation.h>

@interface Downloader : NSObject

+ (instancetype)sharedDownloader;

@end

#import "Downloader.h"

@implementation

- jquery easyui datagrid 加载成功,选中某一行

hae

jqueryeasyuidatagrid数据加载

1.首先你需要设置datagrid的onLoadSuccess

$(

'#dg'

).datagrid({onLoadSuccess :

function

(data){

$(

'#dg'

).datagrid(

'selectRow'

,3);

}});

2.onL

- jQuery用户数字打分评价效果

ini

JavaScripthtmljqueryWebcss

效果体验:http://hovertree.com/texiao/jquery/5.htmHTML文件代码:

<!DOCTYPE html>

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<title>jQuery用户数字打分评分代码 - HoverTree</

- mybatis的paramType

kerryg

DAOsql

MyBatis传多个参数:

1、采用#{0},#{1}获得参数:

Dao层函数方法:

public User selectUser(String name,String area);

对应的Mapper.xml

<select id="selectUser" result

- centos 7安装mysql5.5

MrLee23

centos

首先centos7 已经不支持mysql,因为收费了你懂得,所以内部集成了mariadb,而安装mysql的话会和mariadb的文件冲突,所以需要先卸载掉mariadb,以下为卸载mariadb,安装mysql的步骤。

#列出所有被安装的rpm package rpm -qa | grep mariadb

#卸载

rpm -e mariadb-libs-5.

- 利用thrift来实现消息群发

qifeifei

thrift

Thrift项目一般用来做内部项目接偶用的,还有能跨不同语言的功能,非常方便,一般前端系统和后台server线上都是3个节点,然后前端通过获取client来访问后台server,那么如果是多太server,就是有一个负载均衡的方法,然后最后访问其中一个节点。那么换个思路,能不能发送给所有节点的server呢,如果能就

- 实现一个sizeof获取Java对象大小

teasp

javaHotSpot内存对象大小sizeof

由于Java的设计者不想让程序员管理和了解内存的使用,我们想要知道一个对象在内存中的大小变得比较困难了。本文提供了可以获取对象的大小的方法,但是由于各个虚拟机在内存使用上可能存在不同,因此该方法不能在各虚拟机上都适用,而是仅在hotspot 32位虚拟机上,或者其它内存管理方式与hotspot 32位虚拟机相同的虚拟机上 适用。

- SVN错误及处理

xiangqian0505

SVN提交文件时服务器强行关闭

在SVN服务控制台打开资源库“SVN无法读取current” ---摘自网络 写道 SVN无法读取current修复方法 Can't read file : End of file found

文件:repository/db/txn_current、repository/db/current

其中current记录当前最新版本号,txn_current记录版本库中版本