Deploying a Jewel Ceph cluster with ceph-deploy

A quick note first: a Ceph cluster can be deployed quickly with ceph-deploy, or manually, one step at a time.

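To save trouble, I recommend ceph-deploy. The manual procedure has too many steps, so I won't cover it here; if you are interested, the steps are in the official documentation.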

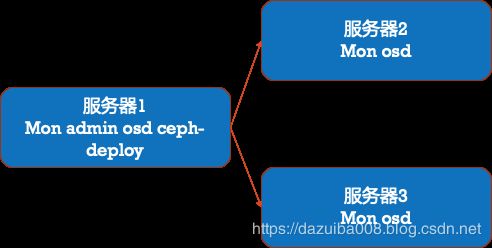

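Node plan: there are currently 3 servers. I am installing Jewel, which has been updated to 10.2.11. Jewel does not need a ceph-mgr daemon (it only became mandatory in Luminous), so no mgr is installed here.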

192.168.183.11 mon admin osd ceph-deploy

192.168.183.12 mon osd

192.168.183.13 mon osd

Each machine has 4 gigabit NICs, so I split them between the public network and the cluster network (see the configuration further down). Here I only list the NIC IPs, in the order eth0 to eth3:

Server 1: 192.168.183.11, 192.168.183.14, 192.168.183.15, 192.168.183.16

Server 2: 192.168.183.12, 192.168.183.17, 192.168.183.18, 192.168.183.19

Server 3: 192.168.183.13, 192.168.183.61, 192.168.183.62, 192.168.183.63

Add the following entries to /etc/hosts on every server:

192.168.183.11 ceph01

192.168.183.12 ceph02

192.168.183.13 ceph03

The admin node needs passwordless SSH login to the other nodes. The firewall and SELinux are already disabled on my machines.
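One common way to get passwordless login is key-based SSH. The sketch below only prints the commands so they can be reviewed first; the `root` user and the `~/.ssh/id_rsa` key path are assumptions, not something the original setup states, and `ssh_setup_commands` is just an illustrative helper name.

```shell
#!/bin/sh
# Sketch: print (not run) the commands that set up key-based SSH from the
# admin node to every node. The root user and key path are assumptions.
nodes="ceph01 ceph02 ceph03"

ssh_setup_commands() {
    # Generate a key pair once, with an empty passphrase.
    echo "ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa"
    # Copy the public key to each node (asks for the password one last time).
    for node in $nodes; do
        echo "ssh-copy-id root@${node}"
    done
}

ssh_setup_commands
```

Run the printed commands on the admin node (ceph01), then confirm that `ssh ceph02 hostname` returns without a password prompt.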

yum configuration. I use the Aliyun mirrors for everything:

epel.repo

[epel]

name=Extra Packages for Enterprise Linux 7 - $basearch

baseurl=http://mirrors.aliyun.com/epel/7/$basearch

failovermethod=priority

enabled=1

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

[epel-debuginfo]

name=Extra Packages for Enterprise Linux 7 - $basearch - Debug

baseurl=http://mirrors.aliyun.com/epel/7/$basearch/debug

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=0

[epel-source]

name=Extra Packages for Enterprise Linux 7 - $basearch - Source

baseurl=http://mirrors.aliyun.com/epel/7/SRPMS

failovermethod=priority

enabled=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7

gpgcheck=0

ceph.repo

[ceph]

name=Ceph packages for $basearch

baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/$basearch

enabled=1

priority=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch

enabled=1

priority=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/SRPMS

enabled=0

priority=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

If Ceph was installed before, clean it up first. Here I clean up my previous installation:

ceph-deploy purge ceph01 ceph02 ceph03

ceph-deploy purgedata ceph01 ceph02 ceph03

ceph-deploy forgetkeys

Change into the cluster configuration directory created earlier and delete the old files:

cd /opt/ceph-cluster/

rm -f ceph*

Deploy the cluster. For now I install only one monitor, on ceph01. The following command generates the configuration file and the monitor key:

ceph-deploy new ceph01

Install Ceph on every node

ceph-deploy install ceph01 ceph02 ceph03

Initialize the monitor

ceph-deploy --ceph-conf ceph.conf --overwrite-conf mon create-initial

Add the other monitors

ceph-deploy mon add --address 192.168.183.12 ceph02

ceph-deploy mon add --address 192.168.183.13 ceph03

After being added, the new monitors need to synchronize. Check the quorum status with:

ceph quorum_status --format json-pretty

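Push the current configuration file to the listed nodes, overwriting their /etc/ceph/ceph.conf (the admin subcommand also copies the admin keyring):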

ceph-deploy --overwrite-conf admin ceph01 ceph02 ceph03

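Edit ceph.conf. Note that although I added the monitors above using 192.168.183.12 and 192.168.183.13, they actually listen on port 6789 at 192.168.183.15, 192.168.183.18 and 192.168.183.62. So do not write 192.168.183.11, 192.168.183.12, 192.168.183.13 in mon_host; write the IPs the monitor services actually run on.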

cat ceph.conf

[global]

fsid = c72e69ab-3c3c-4f76-a714-86d746ffdf41

mon_initial_members = ceph01, ceph02, ceph03

mon_host = 192.168.183.15,192.168.183.18,192.168.183.62

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

public network = 192.168.183.15/32,192.168.183.16/32,192.168.183.18/32,192.168.183.19/32,192.168.183.62/32,192.168.183.63/32

cluster network = 192.168.183.11/32,192.168.183.14/32,192.168.183.12/32,192.168.183.17/32,192.168.183.13/32,192.168.183.61/32
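Because the public network here is written as a list of /32 host entries, every address in mon_host has to appear in that list. A minimal sketch of that sanity check follows; it only does a literal string match against /32 entries (it is not a general CIDR calculator), and `check_mon_hosts` is a hypothetical helper name.

```shell
#!/bin/sh
# Values copied from the ceph.conf above.
mon_host="192.168.183.15,192.168.183.18,192.168.183.62"
public_network="192.168.183.15/32,192.168.183.16/32,192.168.183.18/32,192.168.183.19/32,192.168.183.62/32,192.168.183.63/32"

# Print "ok" or "MISSING" for each monitor address, depending on whether
# it appears as a /32 entry in the given network list.
check_mon_hosts() {
    for ip in $(echo "$1" | tr ',' ' '); do
        case ",$2," in
            *",$ip/32,"*) echo "$ip ok" ;;
            *)            echo "$ip MISSING" ;;
        esac
    done
}

check_mon_hosts "$mon_host" "$public_network"
```

Passing 192.168.183.11 as the first argument reports it as MISSING, which is the mistake the note above warns about.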

Create the OSDs

12 SATA disks per server

ceph-deploy osd create --fs-type xfs ceph01:/dev/sdb ceph01:/dev/sdc ceph01:/dev/sdd ceph01:/dev/sde ceph01:/dev/sdf ceph01:/dev/sdg ceph01:/dev/sdh ceph01:/dev/sdi ceph01:/dev/sdj ceph01:/dev/sdk ceph01:/dev/sdl ceph01:/dev/sdm

ceph-deploy osd create --fs-type xfs ceph02:/dev/sdb ceph02:/dev/sdc ceph02:/dev/sdd ceph02:/dev/sde ceph02:/dev/sdf ceph02:/dev/sdg ceph02:/dev/sdh ceph02:/dev/sdi ceph02:/dev/sdj ceph02:/dev/sdk ceph02:/dev/sdl ceph02:/dev/sdm

ceph-deploy osd create --fs-type xfs ceph03:/dev/sdb ceph03:/dev/sdc ceph03:/dev/sdd ceph03:/dev/sde ceph03:/dev/sdf ceph03:/dev/sdg ceph03:/dev/sdh ceph03:/dev/sdi ceph03:/dev/sdj ceph03:/dev/sdk ceph03:/dev/sdl ceph03:/dev/sdm
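The three long commands above differ only in the host name, so the argument list can be generated instead of typed. This is a sketch under the assumption that the data disks are exactly sdb through sdm on every host, as in the commands above; `osd_args` is a hypothetical helper, and the loop only prints the commands.

```shell
#!/bin/sh
# Sketch: build the HOST:DISK arguments for the 12 SATA disks (sdb..sdm)
# of one host instead of typing them by hand.
osd_args() {
    h=$1
    args=""
    for letter in b c d e f g h i j k l m; do
        args="$args $h:/dev/sd$letter"
    done
    # Strip the leading space before printing.
    echo "${args# }"
}

# Print the commands for review; remove the "echo" to actually run them.
for host in ceph01 ceph02 ceph03; do
    echo ceph-deploy osd create --fs-type xfs $(osd_args "$host")
done
```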

3 SSDs, one per server

ceph-deploy osd create --fs-type xfs ceph01:/dev/dfa

ceph-deploy osd create --fs-type xfs ceph02:/dev/dfa

ceph-deploy osd create --fs-type xfs ceph03:/dev/dfa

Check the journal. Each OSD's journal sits in a partition on the OSD's own disk; I am leaving that unchanged for now.

ceph --admin-daemon /var/run/ceph/ceph-osd.0.asok config show | grep osd_journal

"osd_journal": "\/var\/lib\/ceph\/osd\/ceph-0\/journal",

"osd_journal_size": "5120",

I also plan to use a Ceph cache tier; see the Ceph cache tier configuration documentation for background.

crushmap-skymobi.txt is my CRUSH map:

[root@ceph01 ceph-cluster]# cat crushmap-skymobi.txt

# begin crush map

tunable choose_local_tries 0

tunable choose_local_fallback_tries 0

tunable choose_total_tries 50

tunable chooseleaf_descend_once 1

tunable chooseleaf_vary_r 1

tunable straw_calc_version 1

# devices

device 0 osd.0

device 1 osd.1

device 2 osd.2

device 3 osd.3

device 4 osd.4

device 5 osd.5

device 6 osd.6

device 7 osd.7

device 8 osd.8

device 9 osd.9

device 10 osd.10

device 11 osd.11

device 12 osd.12

device 13 osd.13

device 14 osd.14

device 15 osd.15

device 16 osd.16

device 17 osd.17

device 18 osd.18

device 19 osd.19

device 20 osd.20

device 21 osd.21

device 22 osd.22

device 23 osd.23

device 24 osd.24

device 25 osd.25

device 26 osd.26

device 27 osd.27

device 28 osd.28

device 29 osd.29

device 30 osd.30

device 31 osd.31

device 32 osd.32

device 33 osd.33

device 34 osd.34

device 35 osd.35

device 36 osd.36

device 37 osd.37

device 38 osd.38

# types

type 0 osd

type 1 host

type 2 chassis

type 3 rack

type 4 row

type 5 pdu

type 6 pod

type 7 room

type 8 datacenter

type 9 region

type 10 root

type 11 skymobi

# buckets

skymobi ceph01-sata {

id -2 # do not change unnecessarily

# weight 44.664

alg straw

hash 0 # rjenkins1

item osd.0 weight 3.631

item osd.1 weight 3.631

item osd.2 weight 3.631

item osd.3 weight 3.631

item osd.4 weight 3.631

item osd.5 weight 3.631

item osd.6 weight 3.631

item osd.7 weight 3.631

item osd.8 weight 3.631

item osd.9 weight 3.631

item osd.10 weight 3.631

item osd.11 weight 3.631

}

skymobi ceph01-ssd {

id -3 # do not change unnecessarily

alg straw

hash 0 # rjenkins1

item osd.36 weight 1.086

}

skymobi ceph02-sata {

id -4 # do not change unnecessarily

# weight 44.664

alg straw

hash 0 # rjenkins1

item osd.12 weight 3.631

item osd.13 weight 3.631

item osd.14 weight 3.631

item osd.15 weight 3.631

item osd.16 weight 3.631

item osd.17 weight 3.631

item osd.18 weight 3.631

item osd.19 weight 3.631

item osd.20 weight 3.631

item osd.21 weight 3.631

item osd.22 weight 3.631

item osd.23 weight 3.631

}

skymobi ceph02-ssd {

id -5 # do not change unnecessarily

alg straw

hash 0 # rjenkins1

item osd.37 weight 1.086

}

skymobi ceph03-sata {

id -6 # do not change unnecessarily

# weight 44.664

alg straw

hash 0 # rjenkins1

item osd.24 weight 3.631

item osd.25 weight 3.631

item osd.26 weight 3.631

item osd.27 weight 3.631

item osd.28 weight 3.631

item osd.29 weight 3.631

item osd.30 weight 3.631

item osd.31 weight 3.631

item osd.32 weight 3.631

item osd.33 weight 3.631

item osd.34 weight 3.631

item osd.35 weight 3.631

}

skymobi ceph03-ssd {

id -7 # do not change unnecessarily

alg straw

hash 0 # rjenkins1

item osd.38 weight 1.086

}

root ssd {

id -1 # do not change unnecessarily

# weight 133.991

alg straw

hash 0 # rjenkins1

item ceph01-ssd weight 1.086

item ceph02-ssd weight 1.086

item ceph03-ssd weight 1.086

}

root sata {

id -8 # do not change unnecessarily

# weight 133.991

alg straw

hash 0 # rjenkins1

item ceph01-sata weight 43.572

item ceph02-sata weight 43.572

item ceph03-sata weight 43.572

}

# rules

rule ssd_ruleset {

ruleset 0

type replicated

min_size 1

max_size 10

step take ssd

step chooseleaf firstn 0 type skymobi

step emit

}

rule sata_ruleset {

ruleset 1

type replicated

min_size 1

max_size 10

step take sata

step chooseleaf firstn 0 type skymobi

step emit

}

# end crush map
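The weight given to a bucket in its parent is normally the sum of the weights of the bucket's own items. A small sketch of that arithmetic with awk follows; `sum_weights` is a hypothetical helper, not part of Ceph. Note that the `# weight` comments in the listing above (44.664, 133.991) do not match these sums. They look like leftovers from before the map was hand-edited, and as comments they have no effect on the compiled map.

```shell
#!/bin/sh
# Sum the "item NAME weight W" lines of one bucket, read from stdin.
sum_weights() {
    awk '$1 == "item" && $3 == "weight" { total += $4 }
         END { printf "%.3f\n", total }'
}

# The 12 SATA OSDs of one host, as in the ceph01-sata bucket.
for i in 0 1 2 3 4 5 6 7 8 9 10 11; do
    echo "item osd.$i weight 3.631"
done | sum_weights

# The three SSD host buckets under "root ssd".
printf 'item ceph0%d-ssd weight 1.086\n' 1 2 3 | sum_weights
```

The first sum is 43.572, the weight each `-sata` bucket carries under `root sata`; the second is 3.258 for `root ssd`.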

Create the cache tier

crushtool -c crushmap-skymobi.txt -o crushmap-skymobi.map

ceph osd setcrushmap -i crushmap-skymobi.map

ceph osd pool create ssd-pool 256 256 ssd_ruleset

ceph osd pool create sata-pool 2048 2048 sata_ruleset

ceph osd pool set sata-pool size 2

ceph osd pool set ssd-pool size 2

ceph osd tier add sata-pool ssd-pool

ceph osd tier cache-mode ssd-pool writeback

ceph osd tier set-overlay sata-pool ssd-pool

ceph osd pool set ssd-pool hit_set_type bloom

ceph osd pool set ssd-pool hit_set_count 12

ceph osd pool set ssd-pool hit_set_period 14400

ceph osd pool set ssd-pool target_max_bytes 900000000000

ceph osd pool set ssd-pool min_read_recency_for_promote 1

ceph osd pool set ssd-pool min_write_recency_for_promote 1

ceph osd pool set ssd-pool cache_target_dirty_ratio 0.4

ceph osd pool set ssd-pool cache_target_dirty_high_ratio 0.6

ceph osd pool set ssd-pool cache_target_full_ratio 0.8
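The three ratio settings are relative to target_max_bytes, so it helps to see them as absolute sizes. A rough sketch of the arithmetic, assuming a shell with 64-bit integer arithmetic; `threshold` is a hypothetical helper.

```shell
#!/bin/sh
# Value passed to "ceph osd pool set ssd-pool target_max_bytes" above.
target_max_bytes=900000000000

# Bytes corresponding to a ratio given in percent (integer arithmetic).
threshold() {
    echo $(( target_max_bytes * $1 / 100 ))
}

echo "cache_target_dirty_ratio 0.4      -> $(threshold 40) bytes"
echo "cache_target_dirty_high_ratio 0.6 -> $(threshold 60) bytes"
echo "cache_target_full_ratio 0.8       -> $(threshold 80) bytes"
```

With these values the tiering agent starts flushing dirty objects to sata-pool at about 360 GB of dirty data, flushes more aggressively from about 540 GB, and begins evicting clean objects once the cache holds about 720 GB.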

The cluster is now fully created. Check its state:

[root@ceph01 ceph-cluster]# ceph osd pool ls    # list the pools

ssd-pool

sata-pool

[root@ceph01 ceph-cluster]# ceph -s    # show the cluster status

cluster 3ffa23e7-0a9f-4486-b39b-ccfc868343a8

health HEALTH_OK

monmap e3: 3 mons at {ceph01=192.168.183.15:6789/0,ceph02=192.168.183.18:6789/0,ceph03=192.168.183.62:6789/0}

election epoch 6, quorum 0,1,2 ceph01,ceph02,ceph03

osdmap e214: 39 osds: 39 up, 39 in

flags sortbitwise,require_jewel_osds

pgmap v24185: 2304 pgs, 2 pools, 18280 MB data, 7455 objects

41099 MB used, 133 TB / 133 TB avail

2304 active+clean
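The PG count in this output can be cross-checked against the pool creation commands. The sketch below uses only numbers that appear earlier (pg_num 256 and 2048, size 2, 36 SATA OSDs and 3 SSD OSDs in the CRUSH map); `pgs_per_osd` is a hypothetical helper and rounds down.

```shell
#!/bin/sh
# PG replicas per OSD = pg_num * replica size / number of OSDs
# (integer division, so the result is rounded down).
pgs_per_osd() {
    echo $(( $1 * $2 / $3 ))
}

echo "total PGs:          $(( 256 + 2048 ))"
echo "sata-pool per OSD:  $(pgs_per_osd 2048 2 36)"
echo "ssd-pool per OSD:   $(pgs_per_osd 256 2 3)"
```

The total of 2304 matches the pgmap line, and the per-OSD figures come out at roughly 113 for the SATA OSDs and 170 for the SSD OSDs.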

Reference: https://docs.ceph.com/docs/master/start/quick-ceph-deploy/