Kylin 2.5.0 Installation: A Detailed Tutorial

Kylin Installation Guide

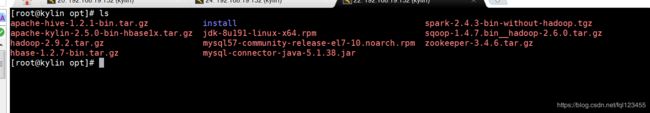

Package versions

- OS: CentOS 7

- jdk:jdk-8u191-linux-x64

- Hadoop:hadoop-2.9.2.tar

- hbase:hbase-1.2.7-bin.tar

- hive: apache-hive-1.2.1-bin.tar

- Kylin:apache-kylin-2.4.0-bin-hbase1x.tar

- spark:spark-2.4.3-bin-without-hadoop.tgz

- zookeeper:zookeeper-3.4.6.tar

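- mysql: mysql57-community-release-el7-10.noarch (replaces Derby as the store for Hive metadata)

- sqoop: sqoop-1.4.7.bin__hadoop-2.6.0.tar (imports data into Hive)

Install the operating system

(Omitted.)

Operating system configuration

Configure the network

# Run "ip a" to view the server's current network settings

vi /etc/sysconfig/network-scripts/ifcfg-ens33

# Set ONBOOT=yes in the configuration file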

systemctl restart network
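The ONBOOT change can also be made without opening an editor. The following is a minimal sketch, assuming the interface file is the ifcfg-ens33 shown above; `set_onboot` is a hypothetical helper, and the demo runs against a temporary file rather than the real one.

```shell
# set_onboot FILE: force ONBOOT=yes in an ifcfg-style file.
# (Hypothetical helper, not part of CentOS.)
set_onboot() {
    local file="$1"
    if grep -q '^ONBOOT=' "$file"; then
        # Rewrite the existing ONBOOT line in place (GNU sed).
        sed -i 's/^ONBOOT=.*/ONBOOT=yes/' "$file"
    else
        # No ONBOOT line yet: append one.
        echo 'ONBOOT=yes' >> "$file"
    fi
}

# Demo on a throwaway file so nothing on the system is modified.
demo_cfg="$(mktemp)"
printf 'TYPE=Ethernet\nNAME=ens33\nONBOOT=no\n' > "$demo_cfg"
set_onboot "$demo_cfg"
grep '^ONBOOT=' "$demo_cfg"
```

On the real host the call would be `set_onboot /etc/sysconfig/network-scripts/ifcfg-ens33`, followed by the network restart.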

Disable the firewall

[root@localhost ~]# systemctl stop firewalld

[root@localhost ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Change the server hostname

[root@localhost ~]# vi /etc/hostname

Set a custom hostname

Map the hostname to its IP address

[root@localhost ~]# vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.19.134 Kylin2

~

[root@localhost ~]# ping Kylin2

PING Kylin2 (192.168.19.134) 56(84) bytes of data.

64 bytes from Kylin2 (192.168.19.134): icmp_seq=1 ttl=64 time=0.020 ms

64 bytes from Kylin2 (192.168.19.134): icmp_seq=2 ttl=64 time=0.025 ms
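The hosts mapping can be added idempotently so that re-running the setup does not duplicate the line. This is a minimal sketch; `add_host_entry` is a hypothetical helper, and the demo writes to a temporary file instead of /etc/hosts.

```shell
# add_host_entry IP NAME [FILE]: append "IP NAME" unless NAME is already mapped.
# (Hypothetical helper; FILE defaults to /etc/hosts.)
add_host_entry() {
    local ip="$1" name="$2" file="${3:-/etc/hosts}"
    # -w matches NAME as a whole word; -i because hostnames are case-insensitive.
    if grep -qiw -- "$name" "$file"; then
        echo "$name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo on a throwaway file.
demo_hosts="$(mktemp)"
printf '127.0.0.1 localhost\n' > "$demo_hosts"
add_host_entry 192.168.19.134 Kylin2 "$demo_hosts"
add_host_entry 192.168.19.134 Kylin2 "$demo_hosts"   # second call adds nothing
cat "$demo_hosts"
```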

Configure passwordless SSH (Secure Shell) login

[root@localhost ~]# ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

Generating public/private rsa key pair.

Created directory '/root/.ssh'.

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:tIejCLlCW+iQUwWzOWFqZde2smsz8UKrA2sKgrdVoSs root@localhost

The key's randomart image is:

+---[RSA 2048]----+

| =+... |

| oo*. o |

|..= o o |

|.o.o o + o |

|+oo.. + S . |

|=ooo B . o |

|=oE = * |

|o= = B . |

|+ ..+ + |

+----[SHA256]-----+

[root@localhost ~]# cd .ssh/

[root@localhost .ssh]# ll

total 8

-rw-------. 1 root root 1675 Jan 14 09:16 id_rsa

-rw-r--r--. 1 root root 396 Jan 14 09:16 id_rsa.pub

[root@localhost .ssh]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[root@localhost .ssh]# ll

total 12

-rw-r--r--. 1 root root 396 Jan 14 09:16 authorized_keys

-rw-------. 1 root root 1675 Jan 14 09:16 id_rsa

-rw-r--r--. 1 root root 396 Jan 14 09:16 id_rsa.pub

[root@localhost .ssh]# chmod 0600 ~/.ssh/authorized_keys

[root@localhost .ssh]# ssh Kylin2

The authenticity of host 'kylin2 (192.168.19.134)' can't be established.

ECDSA key fingerprint is SHA256:h0KM6u7P3rzKiEPjNWO7H6FNRXtvRRpWgBs2aHJu2VU.

ECDSA key fingerprint is MD5:33:7d:02:f1:7c:61:86:74:f2:32:d0:a9:c7:42:46:bd.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'kylin2,192.168.19.134' (ECDSA) to the list of known hosts.

Last login: Tue Jan 14 09:11:34 2020 from 192.168.19.1
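If the login still asks for a password, file modes are a common cause: with StrictModes enabled (the sshd default), keys are rejected when the .ssh directory or authorized_keys is writable by other users. The sketch below tightens both modes. `fix_ssh_perms` is a hypothetical helper, and the demo uses a temporary directory as a stand-in for ~/.ssh.

```shell
# fix_ssh_perms DIR: restrict DIR to its owner and authorized_keys to owner read/write.
# (Hypothetical helper.)
fix_ssh_perms() {
    local ssh_dir="$1"
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir/authorized_keys"
}

# Demo: start from deliberately loose modes on a throwaway directory.
demo_ssh="$(mktemp -d)"
touch "$demo_ssh/authorized_keys"
chmod 755 "$demo_ssh"
chmod 664 "$demo_ssh/authorized_keys"
fix_ssh_perms "$demo_ssh"
stat -c '%a %n' "$demo_ssh" "$demo_ssh/authorized_keys"
```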

Install the required software

Upload the files

Install the JDK

[root@Kylin2 opt]# rpm -ivh jdk-8u191-linux-x64.rpm

warning: jdk-8u191-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:jdk1.8-2000:1.8.0_191-fcs ################################# [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

[root@Kylin2 opt]# java -version

java version "1.8.0_191"

Java(TM) SE Runtime Environment (build 1.8.0_191-b12)

Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode)

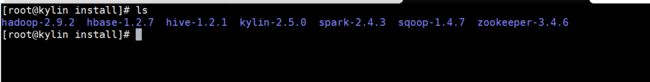

Extract the packages

[root@Kylin2 opt]# tar -zxf hadoop-2.9.2.tar.gz -C install/

[root@Kylin2 opt]# tar -zxf hbase-1.2.7-bin.tar.gz -C install/

[root@Kylin2 opt]# tar -zxf apache-hive-1.2.1-bin.tar.gz -C install/

[root@Kylin2 opt]# tar -zxf apache-kylin-2.4.0-bin-hbase1x.tar.gz -C install/

[root@Kylin2 opt]# tar -zxf zookeeper-3.4.6.tar -C install/

[root@Kylin2 opt]# tar -zxf sqoop-1.4.7.bin__hadoop-2.6.0.ta -C install/

[root@Kylin2 opt]# tar -zxf spark-2.4.3-bin-without-hadoop.tgz -C install/
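The environment variables configured next use shortened directory names such as /opt/install/hive-1.2.1, while the archives unpack into longer names. The guide does not show a rename step, so the sketch below assumes one is needed. The source directory names are guesses based on the archive names, and both function names are hypothetical.

```shell
# rename_if_present BASE SRC DST: rename BASE/SRC to BASE/DST when SRC exists
# and DST is not already taken. (Hypothetical helper.)
rename_if_present() {
    local base="$1" src="$2" dst="$3"
    if [ -d "$base/$src" ] && [ ! -e "$base/$dst" ]; then
        mv "$base/$src" "$base/$dst"
    fi
}

# shorten_install_dirs BASE: apply the renames assumed by the *_HOME variables.
# The left-hand names are assumptions about what each archive unpacks to.
shorten_install_dirs() {
    local base="$1"
    rename_if_present "$base" apache-hive-1.2.1-bin hive-1.2.1
    rename_if_present "$base" sqoop-1.4.7.bin__hadoop-2.6.0 sqoop-1.4.7
    rename_if_present "$base" spark-2.4.3-bin-without-hadoop spark-2.4.3
}

# Demo on a throwaway directory tree.
demo_install="$(mktemp -d)"
mkdir "$demo_install/apache-hive-1.2.1-bin" "$demo_install/spark-2.4.3-bin-without-hadoop"
shorten_install_dirs "$demo_install"
ls "$demo_install"
```

On the real host the call would be `shorten_install_dirs /opt/install`. The Kylin directory is not handled here because its target name depends on which release was actually unpacked.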

Configure environment variables

[root@Kylin ~]# vi .bashrc

HBASE_MANAGES_ZK=false

JAVA_HOME=/usr/java/latest

HADOOP_HOME=/opt/install/hadoop-2.9.2

HIVE_HOME=/opt/install/hive-1.2.1

HBASE_HOME=/opt/install/hbase-1.2.7

KYLIN_HOME=/opt/install/kylin-2.5.0

SPARK_HOME=/opt/install/spark-2.4.3

SQOOP_HOME=/opt/install/sqoop-1.4.7

CLASSPATH=.

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HIVE_HOME/bin:$HBASE_HOME/bin:$KYLIN_HOME/bin:$SPARK_HOME/bin:$SQOOP_HOME/bin

export HADOOP_HOME

export HIVE_HOME

export HBASE_HOME

export KYLIN_HOME

export SPARK_HOME

export SQOOP_HOME

export CLASSPATH

export HBASE_MANAGES_ZK

[root@Kylin2 ~]# source .bashrc

[root@Kylin ~]# vi /etc/profile

fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

##JAVA_HOME

export JAVA_HOME=/usr/java/latest

export PATH=$PATH:$JAVA_HOME/bin

##HADOOP_HOME

export HADOOP_HOME=/opt/install/hadoop-2.9.2

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

##HBASE_HOME

export HBASE_HOME=/opt/install/hbase-1.2.7

export PATH=$PATH:$HBASE_HOME/bin

##HIVE_HOME

export HIVE_HOME=/opt/install/hive-1.2.1

export PATH=$PATH:$HIVE_HOME/bin

##KYLIN_HOME

export KYLIN_HOME=/opt/install/kylin-2.5.0

export PATH=$PATH:$KYLIN_HOME/bin

##SPARK_HOME

export SPARK_HOME=/opt/install/spark-2.4.3

export PATH=$PATH:$SPARK_HOME/bin

##SQOOP_HOME

export SQOOP_HOME=/opt/install/sqoop-1.4.7

export PATH=$PATH:$SQOOP_HOME/bin

[root@Kylin2 ~]# source /etc/profile
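After sourcing the profile, it is worth confirming that each *_HOME variable points at a directory that exists, since a mismatched path only shows up later as a confusing startup failure. This is a minimal sketch; `check_home_vars` is a hypothetical helper, and the demo uses a temporary directory.

```shell
# check_home_vars NAME...: report whether each named variable holds an existing
# directory. Returns non-zero if any is missing. (Hypothetical helper.)
check_home_vars() {
    local var dir status=0
    for var in "$@"; do
        # Look up the value of the variable whose name is stored in $var.
        eval "dir=\${$var:-}"
        if [ -n "$dir" ] && [ -d "$dir" ]; then
            echo "ok       $var=$dir"
        else
            echo "MISSING  $var=${dir:-unset}"
            status=1
        fi
    done
    return "$status"
}

# Demo with a variable that points at a real (temporary) directory.
DEMO_HOME="$(mktemp -d)"
check_home_vars DEMO_HOME
```

On the real host: `check_home_vars JAVA_HOME HADOOP_HOME HIVE_HOME HBASE_HOME KYLIN_HOME SPARK_HOME SQOOP_HOME`.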

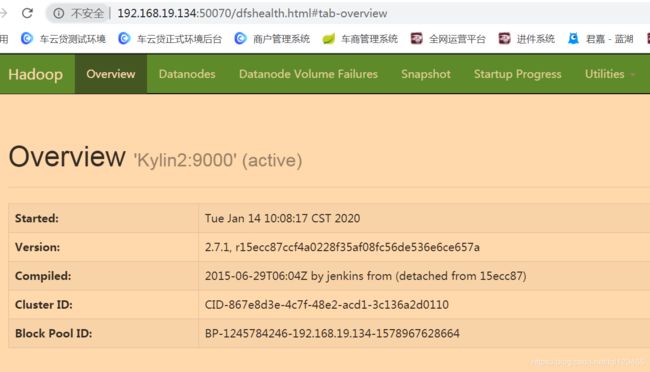

Install Hadoop

- Edit the configuration files

[root@Kylin hadoop]# pwd

/opt/install/hadoop-2.9.2/etc/hadoop

[root@Kylin hadoop]# vim hadoop-env.sh

# The java implementation to use.

export JAVA_HOME=/usr/java/latest

[root@Kylin hadoop]# vim core-site.xml

<!-- NameNode access endpoint -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://kylin:9000</value>

</property>

<!-- Base working directory for HDFS -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/install/hadoop-2.9.2/hadoop-${user.name}</value>

</property>

</configuration>

[root@Kylin hadoop]# vim hdfs-site.xml

<!-- Block replication factor -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<!-- Host that runs the Secondary NameNode -->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>kylin:50090</value>

</property>

<!-- Maximum number of concurrent file operations per DataNode -->

<property>

<name>dfs.datanode.max.xcievers</name>

<value>4096</value>

</property>

<!-- Number of DataNode handler threads (parallel request handling) -->

<property>

<name>dfs.datanode.handler.count</name>

<value>6</value>

</property>

[root@Kylin hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@Kylin hadoop]# vim mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

~

[root@Kylin hadoop]# vim yarn-site.xml

<!-- Auxiliary service that implements the MapReduce shuffle -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Host that runs the ResourceManager -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>kylin</value>

</property>

<!-- Disable the physical memory check -->

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<!-- Disable the virtual memory check -->

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<!-- JobHistory server settings -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>kylin:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>kylin:19888</value>

</property>

- Format the NameNode

[root@Kylin hadoop-2.9.2]# bin/hdfs namenode -format

- Start HDFS

[root@Kylin hadoop-2.9.2]# sbin/start-dfs.sh

Starting namenodes on [Kylin]

Kylin: starting namenode, logging to /opt/install/hadoop-2.9.2/logs/hadoop-root-namenode-Kylin.out

The authenticity of host 'localhost (::1)' can't be established.

ECDSA key fingerprint is SHA256:h0KM6u7P3rzKiEPjNWO7H6FNRXtvRRpWgBs2aHJu2VU.

ECDSA key fingerprint is MD5:33:7d:02:f1:7c:61:86:74:f2:32:d0:a9:c7:42:46:bd.

Are you sure you want to continue connecting (yes/no)? yes

localhost: Warning: Permanently added 'localhost' (ECDSA) to the list of known hosts.

localhost: starting datanode, logging to /opt/install/hadoop-2.7.1/logs/hadoop-root-datanode-Kylin2.out

Starting secondary namenodes [0.0.0.0]

The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.

ECDSA key fingerprint is SHA256:h0KM6u7P3rzKiEPjNWO7H6FNRXtvRRpWgBs2aHJu2VU.

ECDSA key fingerprint is MD5:33:7d:02:f1:7c:61:86:74:f2:32:d0:a9:c7:42:46:bd.

Are you sure you want to continue connecting (yes/no)? yes

0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.

0.0.0.0: starting secondarynamenode, logging to /opt/install/hadoop-2.9.2/logs/hadoop-root-secondarynamenode-Kylin.out

- Start YARN

[root@Kylin hadoop-2.9.2]# sbin/start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/install/hadoop-2.9.2/logs/yarn-root-resourcemanager-Kylin.out

localhost: starting nodemanager, logging to /opt/install/hadoop-2.9.2/logs/yarn-root-nodemanager-Kylin.out

- Start the history server

[root@kylin sbin]# mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /opt/install/hadoop-2.9.2/logs/mapred-root-historyserver-kylin.out

- Verify

[root@kylin sbin]# jps

84563 NodeManager

109427 Jps

84197 SecondaryNameNode

84421 ResourceManager

83801 NameNode

83976 DataNode

109196 JobHistoryServer
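Comparing the jps listing against the expected daemons by eye is error-prone, so the check can be scripted. The sketch below reads jps output on standard input and prints any expected daemon that is absent. `missing_daemons` is a hypothetical helper, and the sample listing is a shortened copy of the output above.

```shell
# missing_daemons "NAME1 NAME2 ...": read jps output on stdin and print each
# expected daemon that is not listed. (Hypothetical helper.)
missing_daemons() {
    local expected="$1" listing name
    listing="$(cat)"
    for name in $expected; do
        # jps prints "<pid> <MainClass>", so compare against the second field exactly.
        if ! printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
            echo "$name"
        fi
    done
}

# Demo: a listing in which the history server has not been started yet.
sample='84563 NodeManager
109427 Jps
84197 SecondaryNameNode
84421 ResourceManager
83801 NameNode
83976 DataNode'
printf '%s\n' "$sample" |
    missing_daemons "NameNode DataNode SecondaryNameNode ResourceManager NodeManager JobHistoryServer"
```

On a live host the input would come from `jps` itself, for example `jps | missing_daemons "NameNode DataNode"`.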

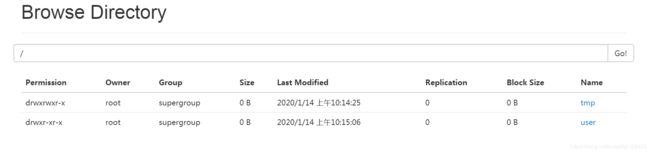

Install Hive

- Create directories on HDFS

[root@Kylin ~]# $HADOOP_HOME/bin/hadoop fs -mkdir /tmp

[root@Kylin ~]# $HADOOP_HOME/bin/hadoop fs -mkdir -p /user/hive/warehouse

[root@Kylin ~]# $HADOOP_HOME/bin/hadoop fs -chmod g+w /tmp

[root@Kylin ~]# $HADOOP_HOME/bin/hadoop fs -chmod g+w /user/hive/warehouse

- Edit the configuration file

[root@Kylin conf]# vim hive-env.sh

# Set HADOOP_HOME to point to a specific hadoop install directory

HADOOP_HOME=/opt/install/hadoop-2.9.2

# Hive Configuration Directory can be controlled by:

export HIVE_CONF_DIR=/opt/install/hive-1.2.1/conf

Switch the metastore from Derby to MySQL

- Install MySQL

1. [root@Kylin opt]# wget -c http://dev.mysql.com/get/mysql57-community-release-el7-10.noarch.rpm

2. [root@Kylin opt]# yum -y install mysql57-community-release-el7-10.noarch.rpm

3. [root@Kylin opt]# yum -y install mysql-community-server

4. [root@Kylin opt]# systemctl start mysqld.service # start the MySQL service

5. Set the MySQL administrator password

5.1 Look up the temporary password

[root@Kylin opt]# grep "password" /var/log/mysqld.log

A temporary password is generated for root@localhost: Z>juyDor2f#L

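5.2 Log in with the temporary password (single-quote it, because it can contain shell metacharacters such as > and #)

[root@Kylin opt]# mysql -uroot -p'Z>juyDor2f#L'

5.3 Change the password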

mysql> set global validate_password_policy=0;

mysql> set global validate_password_length=1;

mysql> ALTER USER 'root'@'localhost' IDENTIFIED BY '123456';

mysql> exit;

5.4 Restart the MySQL service

[root@Kylin opt]# systemctl restart mysqld.service

6. Enable remote access to MySQL

6.1 mysql> set global validate_password_policy=0;

6.2 mysql> set global validate_password_length=1;

6.3 mysql> GRANT ALL PRIVILEGES ON *.* TO root@"%" IDENTIFIED BY "123456";

6.4 mysql> flush privileges;

7. [root@Kylin opt]# systemctl stop firewalld

- Edit the configuration file hive_home/conf/hive-site.xml

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://kylin:3306/hive_mysql?createDatabaseIfNotExist=true&amp;useSSL=false</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

<property>

<name>hive.exec.local.scratchdir</name>

<value>/opt/install/hive-1.2.1/tmp/${user.name}</value>

<description>Local scratch space for Hive jobs</description>

</property>

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/install/hive-1.2.1/tmp/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/opt/install/hive-1.2.1/tmp/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>

- Upload the MySQL JDBC driver JAR to hive/lib
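Because hive-site.xml is XML, a literal & in the JDBC URL has to be written as the entity &amp;amp; or the file will not parse. The sketch below escapes a URL before it is pasted into a value element. `xml_escape` is a hypothetical helper, and the URL is the connection string used in this guide.

```shell
# xml_escape: escape &, < and > read from stdin for use inside an XML element.
# (Hypothetical helper.)
xml_escape() {
    # The ampersand is handled first so the entities added afterwards are not re-escaped.
    sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

jdbc_url='jdbc:mysql://kylin:3306/hive_mysql?createDatabaseIfNotExist=true&useSSL=false'
escaped_url="$(printf '%s\n' "$jdbc_url" | xml_escape)"
printf '<value>%s</value>\n' "$escaped_url"
```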

Install Sqoop

- Configure

[root@kylin conf]# vim sqoop-env.sh

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/opt/install/hadoop-2.9.2

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/opt/install/hadoop-2.9.2

#set the path to where bin/hbase is available

export HBASE_HOME=/opt/install/hbase-1.2.7

#Set the path to where bin/hive is available

export HIVE_HOME=/opt/install/hive-1.2.1

#Set the path for where zookeper config dir is

export ZOOCFGDIR=/opt/install/zookeeper-3.4.6

- Copy the MySQL connector JAR (mysql-connect.jar) into sqoop_home/lib

- Test that Sqoop works

[root@kylin sqoop-1.4.7]# bin/sqoop list-databases --connect jdbc:mysql://kylin:3306 --username root --password 123456

Warning: /opt/install/sqoop-1.4.7/../hcatalog does not exist! HCatalog jobs will fail.

Please set $HCAT_HOME to the root of your HCatalog installation.

Warning: /opt/install/sqoop-1.4.7/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /opt/install/sqoop-1.4.7/../zookeeper does not exist! Accumulo imports will fail.

For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

information_schema

hive_mysql

mysql

performance_schema

sys

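- Copy every file under Hive's lib directory into Sqoop's lib directory; otherwise data cannot be imported into Hive

- Copy hive-site.xml from hive/conf into sqoop/conf; otherwise Sqoop cannot see the databases in Hive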
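The two copy steps above can be sketched as one function. `copy_hive_into_sqoop` is a hypothetical helper, and the demo uses temporary directories with placeholder files in place of the real Hive and Sqoop installations.

```shell
# copy_hive_into_sqoop HIVE_HOME SQOOP_HOME: copy Hive's libraries and
# hive-site.xml into the Sqoop installation. (Hypothetical helper.)
copy_hive_into_sqoop() {
    local hive_home="$1" sqoop_home="$2"
    # "lib/." copies the directory's contents, including any subdirectories.
    cp -r "$hive_home/lib/." "$sqoop_home/lib/"
    cp "$hive_home/conf/hive-site.xml" "$sqoop_home/conf/"
}

# Demo on throwaway directories; the JAR is an empty placeholder file.
demo_hive="$(mktemp -d)"
demo_sqoop="$(mktemp -d)"
mkdir -p "$demo_hive/lib" "$demo_hive/conf" "$demo_sqoop/lib" "$demo_sqoop/conf"
touch "$demo_hive/lib/hive-exec-1.2.1.jar" "$demo_hive/conf/hive-site.xml"
copy_hive_into_sqoop "$demo_hive" "$demo_sqoop"
ls "$demo_sqoop/lib" "$demo_sqoop/conf"
```

On the real host the call would be `copy_hive_into_sqoop "$HIVE_HOME" "$SQOOP_HOME"`.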

Install ZooKeeper

- Configure

[root@kylin zookeeper-3.4.6]# pwd

/opt/install/zookeeper-3.4.6

[root@kylin conf]# cp zoo_sample.cfg zoo.cfg

[root@kylin conf]# vi zoo.cfg

# Data directory

dataDir=/root/zkdata

- Start the service

[root@kylin zookeeper-3.4.6]# bin/zkServer.sh start conf/zoo.cfg

- Verify the service

[root@kylin zookeeper-3.4.6]# jps

2548 QuorumPeerMain # ZooKeeper Java process

2597 Jps

[root@kylin zookeeper-3.4.6]# bin/zkServer.sh status conf/zoo.cfg

JMX enabled by default

Using config: conf/zoo.cfg

Mode: standalone # single-node mode

Install HBase

Make sure the ZooKeeper service is running

[root@kylin hbase-1.2.7]# jps

84563 NodeManager

84197 SecondaryNameNode

84421 ResourceManager

83801 NameNode

83976 DataNode

114906 Jps

109196 JobHistoryServer

60878 QuorumPeerMain # zk

- Edit hbase-env.sh

[root@Kylin conf]# vim hbase-env.sh

# The java implementation to use. Java 1.7+ required.

export JAVA_HOME=/usr/java/latest

- Edit hbase-site.xml

[root@Kylin conf]# vim hbase-site.xml

<property>

<name>hbase.rootdir</name>

<value>hdfs://kylin:9000/hbase</value>

</property>

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<property>

<name>hbase.zookeeper.quorum</name>

<value>kylin</value>

</property>

<property>

<name>hbase.zookeeper.property.clientPort</name>

<value>2181</value>

</property>

- Start HBase

[root@Kylin hbase-1.2.7]# bin/start-hbase.sh

[root@kylin hbase-1.2.7]# jps

84563 NodeManager

116322 Jps

84197 SecondaryNameNode

84421 ResourceManager

61157 HMaster # master node

83801 NameNode

83976 DataNode

61325 HRegionServer # storage node

109196 JobHistoryServer

60878 QuorumPeerMain

Install Spark (on YARN)

- Configure

[root@kylin spark-2.4.3]# vi conf/spark-env.sh

HADOOP_CONF_DIR=/opt/install/hadoop-2.9.2/etc/hadoop

YARN_CONF_DIR=/opt/install/hadoop-2.9.2/etc/hadoop

SPARK_EXECUTOR_CORES=4

SPARK_EXECUTOR_MEMORY=1g

SPARK_DRIVER_MEMORY=1g

LD_LIBRARY_PATH=/opt/install/hadoop-2.9.2/lib/native

SPARK_DIST_CLASSPATH=$(hadoop classpath)

export HADOOP_CONF_DIR

export YARN_CONF_DIR

export SPARK_EXECUTOR_CORES

export SPARK_DRIVER_MEMORY

export SPARK_EXECUTOR_MEMORY

export LD_LIBRARY_PATH

export SPARK_DIST_CLASSPATH

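Note: unlike standalone mode, there is no need to run start-all.sh here, because job execution is handed over to YARN.

[root@kylin spark-2.4.3]# ./bin/spark-shell --master yarn --deploy-mode client --executor-cores 4 --num-executors 2

# --master yarn: connect to the YARN cluster

# --deploy-mode client: how the driver runs; for spark-shell it must be client

# --executor-cores 4: each executor process uses at most 4 cores

# --num-executors 2: allocate 2 executor processes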

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

19/09/25 00:14:40 WARN yarn.Client: Neither spark.yarn.jars nor spark.yarn.archive is set, falling back to uploading libraries under SPARK_HOME.

19/09/25 00:14:43 WARN hdfs.DataStreamer: Caught exception

Spark context Web UI available at http://centos:4040

Spark context available as 'sc' (master = yarn, app id = application_1569341195065_0001).

Spark session available as 'spark'.

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/___/ .__/\_,_/_/ /_/\_\ version 2.4.3

/_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_191)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

Install Kylin

- Configure kylin.properties according to your needs

kylin.env.hadoop-conf-dir=/opt/install/hadoop-2.9.2/etc/hadoop

kylin.engine.spark-conf.spark.master=yarn

kylin.engine.spark-conf.spark.submit.deployMode=cluster

kylin.engine.spark-conf.spark.yarn.queue=default

kylin.engine.spark-conf.spark.driver.memory=2G

kylin.engine.spark-conf.spark.executor.memory=2G

kylin.engine.spark-conf.spark.yarn.executor.memoryOverhead=1024

kylin.engine.spark-conf.spark.executor.instances=2

kylin.engine.spark-conf.spark.executor.cores=1

kylin.engine.spark-conf.spark.shuffle.service.enabled=false

kylin.engine.spark-conf.spark.network.timeout=600

kylin.engine.spark-conf.spark.eventLog.enabled=true

kylin.engine.spark-conf.spark.hadoop.dfs.replication=2

kylin.engine.spark-conf.spark.hadoop.mapreduce.output.fileoutputformat.compress=true

kylin.engine.spark-conf.spark.hadoop.mapreduce.output.fileoutputformat.compress.codec=org.apache.hadoop.io.compress.DefaultCodec

kylin.engine.spark-conf.spark.io.compression.codec=org.apache.spark.io.SnappyCompressionCodec

kylin.engine.spark-conf.spark.eventLog.dir=hdfs:///kylin/spark-history

kylin.engine.spark-conf.spark.history.fs.logDirectory=hdfs:///kylin/spark-history
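The event-log settings above point Spark at hdfs:///kylin/spark-history. The sketch below creates that directory ahead of time, assuming it does not exist yet and that group write access is enough (mirroring the Hive directories created earlier). `ensure_hdfs_dir` and the `HADOOP_CMD` override are hypothetical; the override exists only so the commands can be previewed without a running cluster.

```shell
# ensure_hdfs_dir DIR: create DIR on HDFS and make it group-writable.
# HADOOP_CMD is a hypothetical override; it defaults to the real hadoop CLI.
ensure_hdfs_dir() {
    local dir="$1"
    local hadoop_cmd="${HADOOP_CMD:-hadoop}"
    "$hadoop_cmd" fs -mkdir -p "$dir" &&
        "$hadoop_cmd" fs -chmod g+w "$dir"
}

# Preview only: with HADOOP_CMD=echo the commands are printed, not executed.
preview="$(HADOOP_CMD=echo ensure_hdfs_dir /kylin/spark-history)"
printf '%s\n' "$preview"
```

On the real host, with HDFS running, the call would be `ensure_hdfs_dir /kylin/spark-history`.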

You can run bin/check-env.sh first. With the environment variables described above in place, the check normally passes.

Once it passes, start Kylin directly.

[root@Kylin kylin-2.4.0]# bin/kylin.sh start

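Web access:

http://HOST_IP:7070/kylin/login (replace HOST_IP with the server's IP address)

Username: ADMIN

Password: KYLIN

Installation complete.

Note: errors that may occur during the check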

- “Failed to create $WORKING_DIR. Please make sure the user has right to access $WORKING_DIR”

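This happens because the directories created for Hive were not given sufficient permissions; granting permissions on the directory again resolves it.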

- Failed to create $SPARK_HISTORYLOG_DIR. Please make sure the user has right to access $SPARK_HISTORY

This error does not normally occur on CentOS. It is caused by a problem in how get-properties.sh executes, and the fix is to edit that script as follows.

## Original file

if [ $# != 1 ]

then

echo 'invalid input'

exit -1

fi

IFS=$'\n'

result=

for i in `cat ${KYLIN_HOME}/conf/kylin.properties | grep -w "^$1" | grep -v '^#' | awk -F= '{ n = index($0,"="); print substr($0,n+1)}' | cut -c 1-`

do

:

result=$i

done

echo $result

## Modified file

if [ $# != 1 ]

then

echo 'invalid input'

exit -1

fi

#IFS=$'\n'

result=`cat ${KYLIN_HOME}/conf/kylin.properties | grep -w "^$1" | grep -v '^#' | awk -F= '{ n = index($0,"="); print substr($0,n+1)}' | cut -c 1-`

#for i in `cat ${KYLIN_HOME}/conf/kylin.properties | grep -w "^$1" | grep -v '^#' | awk -F= '{ n = index($0,"="); print substr($0,n+1)}' | cut -c 1-`

#do

# :

# result=$i

#done

echo $result
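The modified script drops the IFS assignment and the for loop and captures the pipeline output directly. The sketch below shows the same lookup as a standalone function so it can be tried outside Kylin. `get_prop` and the sample properties file are illustrative and are not part of the Kylin distribution.

```shell
# get_prop KEY FILE: print the value of KEY from a .properties file.
# (Illustrative helper that mirrors the pipeline in the modified script.)
get_prop() {
    local key="$1" file="$2"
    # Drop comment lines, keep lines that start with KEY as a whole word,
    # then print everything after the first "=".
    grep -v '^#' "$file" |
        grep -w "^$key" |
        awk '{ n = index($0, "="); print substr($0, n + 1) }'
}

# Demo on a throwaway properties file.
demo_props="$(mktemp)"
cat > "$demo_props" <<'EOF'
# kylin.env.hadoop-conf-dir=/ignored/commented/out
kylin.env.hadoop-conf-dir=/opt/install/hadoop-2.9.2/etc/hadoop
kylin.engine.spark-conf.spark.eventLog.dir=hdfs:///kylin/spark-history
EOF
get_prop kylin.engine.spark-conf.spark.eventLog.dir "$demo_props"
```

Using `index` rather than a field split keeps values that themselves contain "=" intact.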