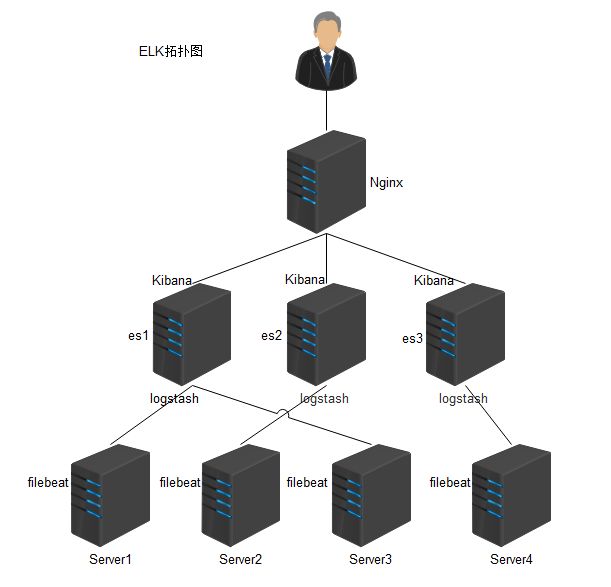

Deploying Filebeat + Logstash + Elasticsearch Cluster + Kibana


Nov 4, 2017 | ELK

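Contents

- 1. Environment setup
- 2. Configuration
  - 2.1. Configuring Filebeat
  - 2.2. Configuring Logstash
  - 2.3. Configuring the Elasticsearch cluster
  - 2.4. Configuring Kibana
  - 2.5. Configuring Nginx
  - 2.6. Starting the services
    - 2.6.1. Logstash startup log
    - 2.6.2. Filebeat startup log
    - 2.6.3. Elasticsearch startup log
  - 2.7. Checking the Elasticsearch cluster status
    - 2.7.1. Listing the nodes in the cluster
    - 2.7.2. Cluster health check
    - 2.7.3. Listing Elasticsearch indices
    - 2.7.4. Querying the local node
    - 2.7.5. Node status
    - 2.7.6. Getting cluster health
    - 2.7.7. Cluster state
    - 2.7.8. Kibana status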

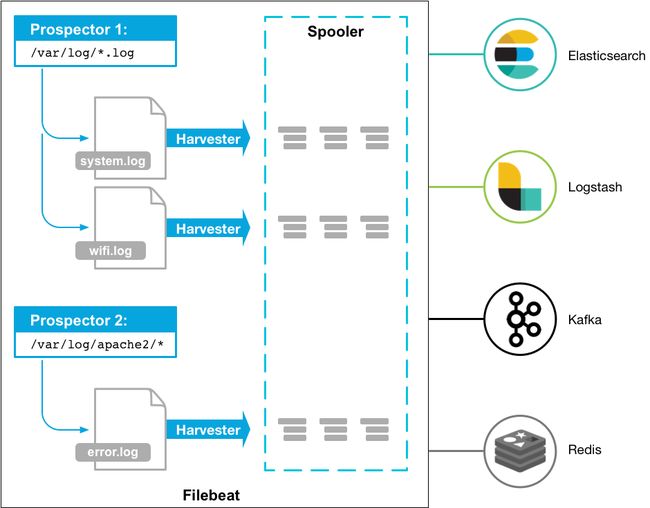

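ELK consists of three components: Elasticsearch, Logstash, and Kibana. This setup adds Filebeat, a lightweight log collection agent. Filebeat uses few resources, so it is well suited to collecting logs on every server and shipping them to Logstash. It is also the shipper Elastic officially recommends.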

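Filebeat is part of the Beats family, which includes these four tools:

- Packetbeat (collects network traffic data)

- Topbeat (collects system-, process-, and filesystem-level data such as CPU and memory usage; in the 5.x series it has been superseded by Metricbeat)

- Filebeat (collects file data)

- Winlogbeat (collects Windows event log data)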

Official documentation:

Filebeat:

https://www.elastic.co/cn/products/beats/filebeat

https://www.elastic.co/guide/en/beats/filebeat/5.6/index.html

Logstash:

https://www.elastic.co/cn/products/logstash

https://www.elastic.co/guide/en/logstash/5.6/index.html

Elasticsearch:

https://www.elastic.co/cn/products/elasticsearch

https://www.elastic.co/guide/en/elasticsearch/reference/5.6/index.html

Elasticsearch Chinese community:

https://elasticsearch.cn/

Environment:

CentOS 7.3

JDK 1.8.0_144

Filebeat 5.6.1

Logstash 5.6.1

Elasticsearch 5.6.1

Kibana 5.6.1

Software downloads:

filebeat-5.6.1-x86_64.rpm

kibana-5.6.1-x86_64.rpm

logstash-5.6.1.rpm

elasticsearch-5.6.1.rpm

Environment setup

```
# Environment setup (installation)
# Every machine that runs Logstash or Elasticsearch needs a JDK (Filebeat and Kibana do not)
[root@DevEVN-21 ~]# curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.6.1-x86_64.rpm
[root@DevEVN-21 ~]# rpm -ivh filebeat-5.6.1-x86_64.rpm
[root@DevEVN-21 ~]# rpm -qa | grep -i filebeat
filebeat-5.6.1-1.x86_64
[root@DevEVN-21 ~]#

[root@ELK-test software]# wget https://artifacts.elastic.co/downloads/logstash/logstash-5.6.1.rpm
[root@ELK-test software]# wget 'http://download.oracle.com/otn-pub/java/jdk/8u144-b01/090f390dda5b47b9b721c7dfaa008135/jdk-8u144-linux-x64.tar.gz?AuthParam=1505893074_6e8dfac4ee1e712087b65acabe8b2506' -O jdk-8u144-linux-x64.tar.gz
[root@ELK-test software]# tar -zxf jdk-8u144-linux-x64.tar.gz -C /usr/local/
[root@ELK-test ~]#
[root@ELK-test ~]# cat /etc/profile.d/java.sh
#!/bin/bash
export JAVA_HOME='/usr/local/jdk1.8.0_144'
export PATH=$JAVA_HOME/bin:$PATH
[root@ELK-test ~]# source /etc/profile.d/java.sh
[root@ELK-test ~]# java -version
java version "1.8.0_144"
Java(TM) SE Runtime Environment (build 1.8.0_144-b01)
Java HotSpot(TM) 64-Bit Server VM (build 25.144-b01, mixed mode)
[root@ELK-test ~]#
[root@ELK-test software]# rpm -ivh logstash-5.6.1.rpm
[root@ELK-test software]# rpm -qa | grep logstash
logstash-5.6.1-1.noarch
[root@ELK-test software]#
[root@ELK-test software]# wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.6.1.rpm
[root@ELK-test software]# yum install elasticsearch-5.6.1.rpm
[root@ELK-test software]# rpm -qa | grep elasticsearch
elasticsearch-5.6.1-1.noarch
[root@ELK-test software]#
[root@ELK-test software]# wget https://artifacts.elastic.co/downloads/kibana/kibana-5.6.1-x86_64.rpm
[root@ELK-test software]# rpm -ivh kibana-5.6.1-x86_64.rpm
[root@ELK-test software]# rpm -qa | grep kibana
kibana-5.6.1-1.x86_64
[root@ELK-test software]#
[root@ELK-test ~]# ll /data/ELK_data/*
/data/ELK_data/elasticsearch:
total 8
drwxr-xr-x. 3 elasticsearch elasticsearch 4096 Sep 22 17:25 data
drwxr-xr-x. 2 elasticsearch elasticsearch 4096 Oct 19 19:54 logs

/data/ELK_data/kibana:
total 8
drwxr-xr-x. 2 kibana kibana 4096 Sep 22 16:50 data
drwxr-xr-x. 2 kibana kibana 4096 Sep 22 16:50 logs

/data/ELK_data/logstash:
total 8
drwxr-xr-x. 4 logstash logstash 4096 Oct 19 19:51 data_192.168.1.21
drwxr-xr-x. 2 logstash logstash 4096 Oct 19 17:41 logs
[root@ELK-test ~]#
```
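Every package above reports version 5.6.1. Kibana is meant to run against an Elasticsearch node of the same version, and mixed versions after a partial upgrade are a common source of trouble. A quick consistency check of the `rpm -qa` lines can therefore be useful. The Python sketch below assumes the `name-version-release.arch` naming shown in the transcript. `parse_rpm` and `versions_consistent` are hypothetical helpers, not part of any Elastic tooling.

```python
import re

# Matches rpm -qa entries such as "logstash-5.6.1-1.noarch" (assumed naming scheme)
RPM_RE = re.compile(
    r"^(?P<name>[a-z]+)-(?P<version>\d+\.\d+\.\d+)-(?P<release>\d+)\.(?P<arch>\w+)$"
)


def parse_rpm(line):
    """Split one rpm -qa entry into (name, version)."""
    m = RPM_RE.match(line.strip())
    if m is None:
        raise ValueError(f"unrecognised rpm name: {line!r}")
    return m.group("name"), m.group("version")


def versions_consistent(lines):
    """Return (all_same_version, {package: version}) for rpm -qa lines."""
    versions = dict(parse_rpm(line) for line in lines if line.strip())
    return len(set(versions.values())) == 1, versions


installed = [
    "filebeat-5.6.1-1.x86_64",
    "logstash-5.6.1-1.noarch",
    "elasticsearch-5.6.1-1.noarch",
    "kibana-5.6.1-1.x86_64",
]
ok, found = versions_consistent(installed)
print(ok, found)
```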

Configuration

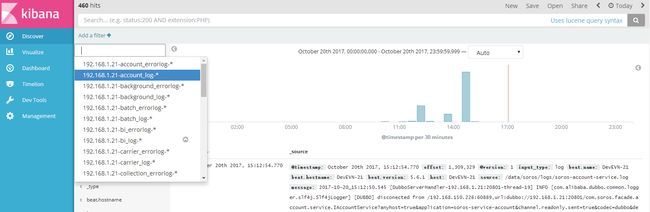

Configuring Filebeat

```
# Configure Filebeat
[root@DevEVN-21 ~]# grep -iv '#' /etc/filebeat/filebeat.yml | grep -iv '^$'
filebeat.prospectors:
- input_type: log   # input type: log (default) or stdin
  # paths: the logs to watch. Paths are expanded with Go's glob function and directories are not
  # searched recursively. A pattern such as /var/log/*.log reads every .log file in that directory,
  # but they then all share one document_type, which is hard to separate in Logstash and clutters
  # Kibana, so list each file separately.
  paths:
    - /data/soros/logs/soros-account-service-error.log   # file for Filebeat to read (absolute path)
  document_type: 192.168.1.21-account_errorlog   # document type field in Elasticsearch; also usable to classify logs
- input_type: log
  paths:
    - /data/soros/logs/soros-account-service.log
  document_type: 192.168.1.21-account_log
output.logstash:
  hosts: ["192.168.1.41:5044"]   # Logstash address and port; several Logstash hosts may be listed
[root@DevEVN-21 ~]#
```
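Listing each file as its own prospector avoids the wildcard problem. The trade-off is that the entries get repetitive once a host has many logs; the Filebeat startup log below reports 41 enabled prospectors. A small generator can render them. The sketch below emits plain text in the layout shown above. `prospector_yaml` and its `(path, suffix)` input format are hypothetical, chosen to reproduce the `<ip>-<suffix>` document_type naming used here.

```python
def prospector_yaml(host_ip, logs):
    """Render one Filebeat 5.x prospector per log file.

    logs: list of (path, suffix) pairs; document_type becomes "<host_ip>-<suffix>".
    """
    lines = ["filebeat.prospectors:"]
    for path, suffix in logs:
        lines += [
            "- input_type: log",
            "  paths:",
            f"    - {path}",
            f"  document_type: {host_ip}-{suffix}",
        ]
    return "\n".join(lines) + "\n"


print(prospector_yaml("192.168.1.21", [
    ("/data/soros/logs/soros-account-service-error.log", "account_errorlog"),
    ("/data/soros/logs/soros-account-service.log", "account_log"),
]))
```

The `output.logstash` section is left out on purpose, so the generated text can be pasted above it.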

References: Filebeat advanced configuration

filebeat.yml configuration explained

ELK + Filebeat centralized logging solution explained

Configuring Logstash

```
[root@ELK-test ~]# grep -iv '#' /etc/logstash/logstash.yml | grep -iv '^$'
path.data: /data/ELK_data/logstash/data
path.config: /etc/logstash/conf.d
http.host: "0.0.0.0"
path.logs: /data/ELK_data/logstash/logs
[root@ELK-test ~]#

# Configure Logstash
[root@ELK-test ~]# grep -iv '#' /etc/logstash/conf.d/logstash_192.168.1.21.conf | grep -iv '^$'
# Port on which Logstash listens for Filebeat
input {
  beats {
    port => 5044
    codec => multiline {
      pattern => "^%{YEAR}[/-]%{MONTHNUM}[/-]%{MONTHDAY}[/_]%{TIME} "
      negate => true
      what => previous
    }
  }
}
# pattern: matches the first line of a multi-line log event
# negate: inverts the match; with negate => true, the lines that do NOT match pattern are the ones merged
# what: merge those lines into the previous event (previous) or the next one (next)
# max_lines: maximum number of lines merged into one event (not set here; default 500).
#   Flushing a pending event after a period without new data is a separate option, auto_flush_interval
# output: route each Filebeat document_type to its own Elasticsearch index
#   (for testing, stdout { codec => rubydebug } prints events to the console instead)
output {
  # program logs
  if [type] == '192.168.1.21-account_errorlog' {   # the type set by document_type in the Filebeat config
    elasticsearch {   # send to Elasticsearch
      hosts => ["192.168.1.41:9200","192.168.1.42:9200","192.168.1.43:9200"]   # Elasticsearch hosts
      index => "%{type}-%{+YYYY.MM.dd}"
      document_type => "log"   # _type of the documents written to Elasticsearch; can also classify logs
      template_overwrite => true   # when true, a template with the same name replaces the existing one
    }
  }
  if [type] == '192.168.1.21-account_log' {
    elasticsearch {
      hosts => ["192.168.1.41:9200","192.168.1.42:9200","192.168.1.43:9200"]
      index => "%{type}-%{+YYYY.MM.dd}"
      document_type => "log"
      template_overwrite => true
    }
  }
}
[root@ELK-test ~]#
```

The Logstash configuration on the other machines is the same as above.
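To see what `negate => true` with `what => previous` does, here is a small Python model of the codec's grouping logic. It is a sketch, not Logstash's implementation. The regex `START` is a hand-written approximation of the grok pattern (grok's YEAR, MONTHNUM, MONTHDAY and TIME definitions are stricter), `merge_multiline` is a hypothetical helper, and the sample lines are made up.

The model also makes one behaviour of the pattern visible. Because of the `[/_]` class between the date and the time, a line such as `2017-10-19 16:35:09 ...`, with a space in that position, is not treated as the start of a new event. It gets appended to the previous one instead.

```python
import re

# Hand-written approximation of
# ^%{YEAR}[/-]%{MONTHNUM}[/-]%{MONTHDAY}[/_]%{TIME}  (note the trailing space)
START = re.compile(r"^\d{2,4}[/-]\d{1,2}[/-]\d{1,2}[/_]\d{1,2}:\d{2}:\d{2}(?:[.,]\d+)? ")


def merge_multiline(lines, pattern=START, negate=True):
    """Group lines into events like codec => multiline { what => previous }."""
    events = []
    for line in lines:
        matched = pattern.search(line) is not None
        # negate => true: a line that does NOT match joins the previous event.
        # negate => false: a line that DOES match joins the previous event.
        joins_previous = (not matched) if negate else matched
        if joins_previous and events:
            events[-1] += "\n" + line
        else:
            events.append(line)
    return events


sample = [
    "2017-10-19_16:35:07.048 ERROR request failed",
    "java.lang.IllegalStateException: boom",
    "\tat com.example.Demo.run(Demo.java:42)",
    "2017-10-19_16:35:08.001 INFO recovered",
]
for event in merge_multiline(sample):
    print(repr(event))
```

If the real logs separate date and time with a space, the separator class in the pattern would need to allow it.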

References: Elasticsearch default mapping: adding a default mapping for indices

Logstash multiline merging & log timestamp field parsing
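The Logstash output writes to `index => "%{type}-%{+YYYY.MM.dd}"`. Logstash renders the `%{+...}` date from the event's `@timestamp` in UTC, not local time. Without a date filter that parses the log line's own time, `@timestamp` is roughly when Filebeat read and shipped the line. On servers running at +08:00, events between 00:00 and 08:00 local time therefore land in the previous day's index. A Python sketch of the naming rule (`index_for` is a hypothetical helper):

```python
from datetime import datetime, timedelta, timezone


def index_for(event_type, timestamp):
    """Approximate index => "%{type}-%{+YYYY.MM.dd}" (date taken in UTC)."""
    utc = timestamp.astimezone(timezone.utc)
    return f"{event_type}-{utc:%Y.%m.%d}"


cst = timezone(timedelta(hours=8))  # the servers in this setup log in +08:00
print(index_for("192.168.1.21-account_log", datetime(2017, 10, 19, 7, 30, tzinfo=cst)))
# 192.168.1.21-account_log-2017.10.18
```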

Configuring the Elasticsearch cluster

```
# Julend_es1 node
[root@ELK-test ~]# grep -iv '#' /etc/elasticsearch/elasticsearch.yml | grep -iv '^$'
cluster.name: "Julend_ES-cluster"
node.name: Julend_es1
node.data: true
http.enabled: true
path.data: /data/ELK_data/elasticsearch/data
path.logs: /data/ELK_data/elasticsearch/logs
network.host: 0.0.0.0
http.port: 9200
http.cors.enabled: true
http.cors.allow-origin: "*"
discovery.zen.ping.unicast.hosts: ["192.168.1.41", "192.168.1.42", "192.168.1.43"]
discovery.zen.minimum_master_nodes: 3
gateway.recover_after_nodes: 2
[root@ELK-test ~]#
```

```
# Julend_es2 node
[root@ELK-test2 ~]# grep -iv '#' /etc/elasticsearch/elasticsearch.yml | grep -iv '^$'
cluster.name: "Julend_ES-cluster"
node.name: Julend_es2
node.data: true
http.enabled: true
path.data: /data/ELK_data/elasticsearch/data
path.logs: /data/ELK_data/elasticsearch/logs
network.host: 0.0.0.0
http.port: 9200
http.cors.enabled: true
http.cors.allow-origin: "*"
discovery.zen.ping.unicast.hosts: ["192.168.1.41", "192.168.1.42", "192.168.1.43"]
discovery.zen.minimum_master_nodes: 3
gateway.recover_after_nodes: 2
[root@ELK-test2 ~]#
```

```
# Julend_es3 node
[root@ELK-test3 ~]# grep -iv '#' /etc/elasticsearch/elasticsearch.yml | grep -iv '^$'
cluster.name: "Julend_ES-cluster"
node.name: Julend_es3
node.data: true
http.enabled: true
path.data: /data/ELK_data/elasticsearch/data
path.logs: /data/ELK_data/elasticsearch/logs
network.host: 0.0.0.0
http.port: 9200
http.cors.enabled: true
http.cors.allow-origin: "*"
discovery.zen.ping.unicast.hosts: ["192.168.1.41", "192.168.1.42", "192.168.1.43"]
discovery.zen.minimum_master_nodes: 3
gateway.recover_after_nodes: 2
[root@ELK-test3 ~]#
```
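For zen discovery, the Elasticsearch 5.x reference recommends setting `discovery.zen.minimum_master_nodes` to a quorum of the master-eligible nodes: (N / 2) + 1, using integer division. With the three master-eligible nodes here, that is 2. The value 3 used above means all three nodes must be reachable before a master can be elected. That matches the "but needed [3]" warnings in the startup log below, and it would block master election if any one node went down. A Python sketch of the arithmetic (both helpers are hypothetical):

```python
def minimum_master_nodes(master_eligible):
    """Recommended discovery.zen.minimum_master_nodes: a strict majority."""
    return master_eligible // 2 + 1


def survives_loss(master_eligible, setting, lost=1):
    """True if the remaining master-eligible nodes can still elect a master."""
    return master_eligible - lost >= setting


print(minimum_master_nodes(3))  # 2
print(survives_loss(3, 3))      # False (the value configured above)
print(survives_loss(3, 2))      # True
```

A majority, rather than all nodes, is what prevents split brain: two disjoint groups cannot both contain more than half of the master-eligible nodes.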

References: http://blog.csdn.net/shudaqi2010/article/details/53941389

http://blog.chinaunix.net/uid-532511-id-4854331.html

https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html

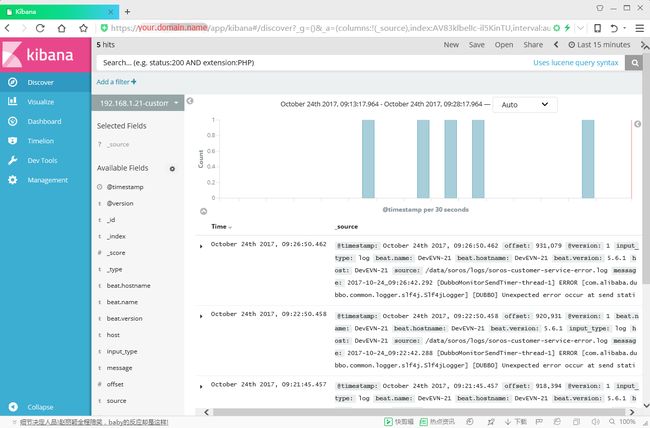

Configuring Kibana

```
# Kibana is only a web GUI; all data is stored in Elasticsearch, so every Kibana instance shows the same data
# kibana1 configuration:
[root@ELK-test ~]# grep -iv '#' /etc/kibana/kibana.yml | grep -iv '^$'
server.port: 5601
server.host: "192.168.1.41"
server.name: "Julend_Kibana"
elasticsearch.url: "http://192.168.1.42:9200"
[root@ELK-test ~]#
# kibana2 configuration:
[root@ELK-test2 ~]# grep -iv '#' /etc/kibana/kibana.yml | grep -iv '^$'
server.port: 5601
server.host: "192.168.1.42"
elasticsearch.url: "http://192.168.1.42:9200"
[root@ELK-test2 ~]#
# kibana3 configuration:
[root@ELK-test3 ~]# grep -iv '#' /etc/kibana/kibana.yml | grep -iv '^$'
server.port: 5601
server.host: "192.168.1.43"
elasticsearch.url: "http://192.168.1.43:9200"
[root@ELK-test3 ~]#
```

References: Basic Kibana usage

ELK: Lucene query syntax used by Kibana

ELK Kibana queries and filters

Lucene query syntax explained, for Kibana search statements

Configuring Nginx

```
# Nginx configuration
[root@nginx ~]# cat /etc/nginx/conf.d/192.168.1.41-43.conf
server {
    listen 443;
    server_name domain_name;
    ssl on;
    include conf.d/ssl_conf;
    access_log /var/log/kibana/access.log main;
    error_log /var/log/kibana/error.log;
    location / {
        auth_basic "Restricted";
        auth_basic_user_file /etc/nginx/conf.d/.site_kibana_pass;
        proxy_pass http://testelk;   # must match the upstream name below
    }
}
server {
    listen 80;
    server_name domain_name;
    rewrite ^/(.*)$ https://domain_name permanent;
}
upstream testelk {
    ip_hash;
    server 192.168.1.41:5601 max_fails=4 fail_timeout=20s weight=4;
    server 192.168.1.42:5601 max_fails=4 fail_timeout=20s weight=4;
    server 192.168.1.43:5601 max_fails=4 fail_timeout=20s weight=4;
}
[root@nginx ~]#
[root@nginx ~]# nginx -t
[root@nginx ~]# nginx -s reload
```
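The file named in `auth_basic_user_file` (`/etc/nginx/conf.d/.site_kibana_pass`) holds `user:hash` lines. The usual tool for creating it is `htpasswd` from httpd-tools. nginx's auth_basic documentation also lists RFC 2307-style `{SSHA}` (salted SHA-1) hashes as a supported format, and Python's standard library can produce those. A sketch follows; the user name and password are placeholders, and SHA-1 is a weak hash, so treat this as a convenience rather than a recommendation.

```python
import base64
import hashlib
import os


def ssha_entry(user, password, salt=None):
    """Build a 'user:{SSHA}...' line: base64(sha1(password + salt) + salt)."""
    salt = os.urandom(8) if salt is None else salt
    digest = hashlib.sha1(password.encode() + salt).digest()
    return f"{user}:{{SSHA}}" + base64.b64encode(digest + salt).decode()


def check_ssha(entry, password):
    """Verify a password against a line produced by ssha_entry."""
    _, hashed = entry.split(":", 1)
    raw = base64.b64decode(hashed[len("{SSHA}"):])
    digest, salt = raw[:20], raw[20:]  # SHA-1 digests are 20 bytes; the rest is salt
    return hashlib.sha1(password.encode() + salt).digest() == digest


line = ssha_entry("kibana_user", "change-me")
print(line)
```

Append the printed line to the password file, then run `nginx -t` and `nginx -s reload` as above.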

Starting the services

```
[root@DevEVN-21 ~]# systemctl enable filebeat
[root@DevEVN-21 ~]# systemctl start filebeat

[root@ELK-test ~]# /usr/share/logstash/bin/logstash --path.settings /etc/logstash/ -f /etc/logstash/conf.d/logstash_192.168.1.21.conf --path.data /data/ELK_data/logstash/data_192.168.1.21
Sending Logstash's logs to /data/ELK_data/logstash/logs which is now configured via log4j2.properties
...
[root@ELK-test ~]#

# Start Elasticsearch on all three machines
[root@ELK-test ~]# systemctl enable elasticsearch
[root@ELK-test ~]# systemctl start elasticsearch
[root@ELK-test ~]# systemctl enable kibana
[root@ELK-test ~]# systemctl start kibana
```
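The components depend on each other's ports:

- Filebeat sends to Logstash on 5044.
- Logstash and Kibana talk to Elasticsearch on 9200.
- The Elasticsearch nodes talk to each other on 9300.
- Nginx proxies to Kibana on 5601.

It can therefore help to confirm that a port accepts connections before starting the next piece. Below is a minimal standard-library sketch. `wait_for_port` is a hypothetical helper, and a successful connect only proves that something is listening, not that the service is healthy.

```python
import socket
import time


def wait_for_port(host, port, timeout=60.0, interval=1.0):
    """Return True once host:port accepts a TCP connection, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable or timed out
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, call `wait_for_port("192.168.1.41", 9200)` before starting Logstash, or `wait_for_port("192.168.1.41", 5044)` before starting Filebeat.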

Logstash startup log

```
[root@localhost ~]# tail -f /data/ELK_data/logstash/logs/logstash-plain.log
[2017-10-19T16:35:07,048][INFO ][logstash.outputs.elasticsearch] Running health check to see if an Elasticsearch connection is working {:healthcheck_url=>http://localhost:9200/, :path=>"/"}
[2017-10-19T16:35:07,146][WARN ][logstash.outputs.elasticsearch] Restored connection to ES instance {:url=>"http://localhost:9200/"}
[2017-10-19T16:35:07,148][INFO ][logstash.outputs.elasticsearch] Using mapping template from {:path=>nil}
[2017-10-19T16:35:07,188][INFO ][logstash.outputs.elasticsearch] Attempting to install template {:manage_template=>{"template"=>"logstash-*", "version"=>50001, "settings"=>{"index.refresh_interval"=>"5s"}, "mappings"=>{"_default_"=>{"_all"=>{"enabled"=>true, "norms"=>false}, "dynamic_templates"=>[{"message_field"=>{"path_match"=>"message", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false}}}, {"string_fields"=>{"match"=>"*", "match_mapping_type"=>"string", "mapping"=>{"type"=>"text", "norms"=>false, "fields"=>{"keyword"=>{"type"=>"keyword", "ignore_above"=>256}}}}}], "properties"=>{"@timestamp"=>{"type"=>"date", "include_in_all"=>false}, "@version"=>{"type"=>"keyword", "include_in_all"=>false}, "geoip"=>{"dynamic"=>true, "properties"=>{"ip"=>{"type"=>"ip"}, "location"=>{"type"=>"geo_point"}, "latitude"=>{"type"=>"half_float"}, "longitude"=>{"type"=>"half_float"}}}}}}}}
[2017-10-19T16:35:07,194][INFO ][logstash.outputs.elasticsearch] New Elasticsearch output {:class=>"LogStash::Outputs::ElasticSearch", :hosts=>["//localhost:9200"]}
[2017-10-19T16:35:07,197][INFO ][logstash.pipeline ] Starting pipeline {"id"=>"main", "pipeline.workers"=>2, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>5, "pipeline.max_inflight"=>250}
[2017-10-19T16:35:07,582][INFO ][logstash.inputs.beats ] Beats inputs: Starting input listener {:address=>"0.0.0.0:5044"}
[2017-10-19T16:35:07,615][INFO ][logstash.pipeline ] Pipeline main started
[2017-10-19T16:35:07,623][INFO ][org.logstash.beats.Server] Starting server on port: 5044
[2017-10-19T16:35:07,702][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
```

Filebeat startup log

```
[root@DevEVN-21 ~]# tail -f /var/log/filebeat/filebeat
2017-10-19T15:29:38+08:00 INFO Prospector with previous states loaded: 1
2017-10-19T15:29:38+08:00 INFO Starting prospector of type: log; id: 14402121705362619298
2017-10-19T15:29:38+08:00 INFO Prospector with previous states loaded: 4
2017-10-19T15:29:38+08:00 INFO Starting prospector of type: log; id: 3085250400820318742
2017-10-19T15:29:38+08:00 INFO Prospector with previous states loaded: 10
2017-10-19T15:29:38+08:00 INFO Starting prospector of type: log; id: 9380947956116938860
2017-10-19T15:29:38+08:00 INFO Prospector with previous states loaded: 6
2017-10-19T15:29:38+08:00 INFO Starting prospector of type: log; id: 8452203097583608250
2017-10-19T15:29:38+08:00 INFO Loading and starting Prospectors completed. Enabled prospectors: 41
2017-10-19T15:29:48+08:00 INFO Harvester started for file: /data/soros/logs/soros-batch.log
2017-10-19T15:29:48+08:00 INFO Harvester started for file: /data/soros/logs/soros-marketing-service-error.log
2017-10-19T15:29:48+08:00 INFO Harvester started for file: /data/soros/logs/soros-marketing-service.log
2017-10-19T15:29:48+08:00 INFO Harvester started for file: /data/soros/logs/soros-order-service.log
2017-10-19T15:29:58+08:00 INFO Harvester started for file: /data/soros/logs/soros-pay-service.log
2017-10-19T15:29:58+08:00 INFO Harvester started for file: /data/soros/logs/soros-risk-service.log
2017-10-19T15:30:08+08:00 INFO Non-zero metrics in the last 30s: filebeat.harvester.open_files=8 filebeat.harvester.running=8 filebeat.harvester.started=8 libbeat.logstash.call_count.PublishEvents=6 libbeat.logstash.publish.read_bytes=108 libbeat.logstash.publish.write_bytes=125904 libbeat.logstash.published_and_acked_events=4469 libbeat.publisher.published_events=4469 publish.events=4535 registrar.states.current=58 registrar.states.update=4535 registrar.writes=6
2017-10-19T15:30:38+08:00 INFO Non-zero metrics in the last 30s: filebeat.harvester.open_files=12 filebeat.harvester.running=12 filebeat.harvester.started=12 libbeat.logstash.call_count.PublishEvents=7 libbeat.logstash.publish.read_bytes=54 libbeat.logstash.publish.write_bytes=127592 libbeat.logstash.published_and_acked_events=4581 libbeat.publisher.published_events=4581 publish.events=4593 registrar.states.update=4593 registrar.writes=7
...
[root@DevEVN-21 ~]#
```
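The periodic "Non-zero metrics" lines are space-separated `key=value` counters. One rough signal that Logstash is acknowledging what Filebeat sends is that `libbeat.logstash.published_and_acked_events` keeps pace with `libbeat.publisher.published_events`; both are 4469 in the first interval above. The sketch below assumes the Filebeat 5.x log format shown here. `parse_metrics` is a hypothetical helper, and the sample is an excerpt of the 15:30:08 line.

```python
import re

METRICS_RE = re.compile(r"Non-zero metrics in the last \d+s: (?P<pairs>.*)$")


def parse_metrics(line):
    """Return the key=value counters of a 'Non-zero metrics' line as ints ({} otherwise)."""
    m = METRICS_RE.search(line)
    if m is None:
        return {}
    metrics = {}
    for pair in m.group("pairs").split():
        key, _, value = pair.partition("=")
        metrics[key] = int(value)
    return metrics


line = ("2017-10-19T15:30:08+08:00 INFO Non-zero metrics in the last 30s: "
        "filebeat.harvester.open_files=8 libbeat.logstash.published_and_acked_events=4469 "
        "libbeat.publisher.published_events=4469 publish.events=4535")
m = parse_metrics(line)
print(m["libbeat.logstash.published_and_acked_events"] == m["libbeat.publisher.published_events"])  # True
```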

Elasticsearch startup log

```
[root@ELK-test logs]# tail -f Julend_ES-cluster.log
[2017-10-19T17:45:58,104][INFO ][o.e.n.Node ] [Julend_es1] initializing ...
[2017-10-19T17:45:58,180][INFO ][o.e.e.NodeEnvironment ] [Julend_es1] using [1] data paths, mounts [[/data (/dev/sdb1)]], net usable_space [270.3gb], net total_space [295.1gb], spins? [possibly], types [ext4]
[2017-10-19T17:45:58,180][INFO ][o.e.e.NodeEnvironment ] [Julend_es1] heap size [1.9gb], compressed ordinary object pointers [true]
[2017-10-19T17:45:58,857][INFO ][o.e.n.Node ] [Julend_es1] node name [Julend_es1], node ID [mXtk8dQeQh-amui-d5pVoA]
[2017-10-19T17:45:58,857][INFO ][o.e.n.Node ] [Julend_es1] version[5.6.1], pid[8287], build[667b497/2017-09-14T19:22:05.189Z], OS[Linux/3.10.0-514.el7.x86_64/amd64], JVM[Oracle Corporation/Java HotSpot(TM) 64-Bit Server VM/1.8.0_144/25.144-b01]
[2017-10-19T17:45:58,857][INFO ][o.e.n.Node ] [Julend_es1] JVM arguments [-Xms2g, -Xmx2g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -Djdk.io.permissionsUseCanonicalPath=true, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Dlog4j.skipJansi=true, -XX:+HeapDumpOnOutOfMemoryError, -Des.path.home=/usr/share/elasticsearch]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [aggs-matrix-stats]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [ingest-common]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [lang-expression]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [lang-groovy]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [lang-mustache]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [lang-painless]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [parent-join]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [percolator]
[2017-10-19T17:45:59,707][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [reindex]
[2017-10-19T17:45:59,708][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [transport-netty3]
[2017-10-19T17:45:59,708][INFO ][o.e.p.PluginsService ] [Julend_es1] loaded module [transport-netty4]
[2017-10-19T17:45:59,708][INFO ][o.e.p.PluginsService ] [Julend_es1] no plugins loaded
[2017-10-19T17:46:01,565][INFO ][o.e.d.DiscoveryModule ] [Julend_es1] using discovery type [zen]
[2017-10-19T17:46:02,716][INFO ][o.e.n.Node ] [Julend_es1] initialized
[2017-10-19T17:46:02,716][INFO ][o.e.n.Node ] [Julend_es1] starting ...
[2017-10-19T17:46:02,854][INFO ][o.e.t.TransportService ] [Julend_es1] publish_address {192.168.1.41:9300}, bound_addresses {[::]:9300}
[2017-10-19T17:46:02,863][INFO ][o.e.b.BootstrapChecks ] [Julend_es1] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks
[2017-10-19T17:46:05,946][WARN ][o.e.d.z.ZenDiscovery ] [Julend_es1] failed to connect to master [{Julend_es3}{Go1e5drSSum7gbrKpIef8g}{KF8fUy32Q-WhSJDBCrdVrQ}{192.168.1.43}{192.168.1.43:9300}], retrying...
org.elasticsearch.transport.ConnectTransportException: [Julend_es3][192.168.1.43:9300] connect_timeout[30s]
	at org.elasticsearch.transport.netty4.Netty4Transport.connectToChannels(Netty4Transport.java:361) ~[?:?]
	at org.elasticsearch.transport.TcpTransport.openConnection(TcpTransport.java:561) ~[elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.transport.TcpTransport.connectToNode(TcpTransport.java:464) ~[elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.transport.TransportService.connectToNode(TransportService.java:332) ~[elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.transport.TransportService.connectToNode(TransportService.java:319) ~[elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.discovery.zen.ZenDiscovery.joinElectedMaster(ZenDiscovery.java:458) [elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.discovery.zen.ZenDiscovery.innerJoinCluster(ZenDiscovery.java:410) [elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.discovery.zen.ZenDiscovery.access$4100(ZenDiscovery.java:82) [elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.discovery.zen.ZenDiscovery$JoinThreadControl$1.run(ZenDiscovery.java:1188) [elasticsearch-5.6.1.jar:5.6.1]
	at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) [elasticsearch-5.6.1.jar:5.6.1]
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_144]
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_144]
	at java.lang.Thread.run(Thread.java:748) [?:1.8.0_144]
Caused by: io.netty.channel.AbstractChannel$AnnotatedConnectException: Connection refused: 192.168.1.43/192.168.1.43:9300
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) ~[?:?]
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) ~[?:?]
	at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:352) ~[?:?]
	at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:340) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:632) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysPlain(NioEventLoop.java:544) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:498) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458) ~[?:?]
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858) ~[?:?]
	... 1 more
Caused by: java.net.ConnectException: Connection refused
	at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) ~[?:?]
	at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) ~[?:?]
	at io.netty.channel.socket.nio.NioSocketChannel.doFinishConnect(NioSocketChannel.java:352) ~[?:?]
	at io.netty.channel.nio.AbstractNioChannel$AbstractNioUnsafe.finishConnect(AbstractNioChannel.java:340) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:632) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.processSelectedKeysPlain(NioEventLoop.java:544) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:498) ~[?:?]
	at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:458) ~[?:?]
	at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:858) ~[?:?]
	... 1 more
[2017-10-19T17:46:08,968][WARN ][o.e.d.z.ZenDiscovery ] [Julend_es1] not enough master nodes discovered during pinging (found [[Candidate{node={Julend_es2}{VQf-ph7NRTeiEVHqAT4pRw}{v2HyA4hTT0miTzlFmi1vZg}{192.168.1.42}{192.168.1.42:9300}, clusterStateVersion=-1}, Candidate{node={Julend_es1}{mXtk8dQeQh-amui-d5pVoA}{1f1jfhuQQCyor5YXnm8oAg}{192.168.1.41}{192.168.1.41:9300}, clusterStateVersion=-1}]], but needed [3]), pinging again
[2017-10-19T17:46:11,971][WARN ][o.e.d.z.ZenDiscovery ] [Julend_es1] not enough master nodes discovered during pinging (found [[Candidate{node={Julend_es2}{VQf-ph7NRTeiEVHqAT4pRw}{v2HyA4hTT0miTzlFmi1vZg}{192.168.1.42}{192.168.1.42:9300}, clusterStateVersion=-1}, Candidate{node={Julend_es1}{mXtk8dQeQh-amui-d5pVoA}{1f1jfhuQQCyor5YXnm8oAg}{192.168.1.41}{192.168.1.41:9300}, clusterStateVersion=-1}]], but needed [3]), pinging again
[2017-10-19T17:46:15,025][INFO ][o.e.c.s.ClusterService ] [Julend_es1] detected_master {Julend_es3}{Go1e5drSSum7gbrKpIef8g}{60Fb2YJZQ0Ct2uL7PcsuRQ}{192.168.1.43}{192.168.1.43:9300}, added {{Julend_es2}{VQf-ph7NRTeiEVHqAT4pRw}{v2HyA4hTT0miTzlFmi1vZg}{192.168.1.42}{192.168.1.42:9300},{Julend_es3}{Go1e5drSSum7gbrKpIef8g}{60Fb2YJZQ0Ct2uL7PcsuRQ}{192.168.1.43}{192.168.1.43:9300},}, reason: zen-disco-receive(from master [master {Julend_es3}{Go1e5drSSum7gbrKpIef8g}{60Fb2YJZQ0Ct2uL7PcsuRQ}{192.168.1.43}{192.168.1.43:9300} committed version [1]])
[2017-10-19T17:46:15,056][INFO ][o.e.h.n.Netty4HttpServerTransport] [Julend_es1] publish_address {192.168.1.41:9200}, bound_addresses {[::]:9200}
[2017-10-19T17:46:15,056][INFO ][o.e.n.Node ] [Julend_es1] started
...
[root@ELK-test logs]#
```
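When a node has trouble joining the cluster, the useful entries are the WARN and ERROR lines; the stack traces between them are continuation lines. The sketch below filters a log in the `[timestamp][LEVEL ][logger ] [node] message` layout shown above. The regex is an assumption based on these lines, not an official format specification. `problems` is a hypothetical helper, and the sample lines are excerpts from the log above.

```python
import re

# Layout of the lines above: [timestamp][LEVEL ][logger ] [node] message
LINE_RE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\[(?P<level>[A-Z]+)\s*\]\[(?P<logger>[^\]]+)\]\s*"
    r"\[(?P<node>[^\]]+)\]\s*(?P<msg>.*)$"
)


def problems(lines, levels=("WARN", "ERROR")):
    """Yield (timestamp, node, message) for matching levels; stack-trace lines never match."""
    for line in lines:
        m = LINE_RE.match(line)
        if m and m.group("level") in levels:
            yield m.group("ts"), m.group("node"), m.group("msg")


log = [
    "[2017-10-19T17:46:02,716][INFO ][o.e.n.Node ] [Julend_es1] starting ...",
    "[2017-10-19T17:46:08,968][WARN ][o.e.d.z.ZenDiscovery ] [Julend_es1] "
    "not enough master nodes discovered during pinging",
    "\tat java.lang.Thread.run(Thread.java:748) [?:1.8.0_144]",
]
for ts, node, msg in problems(log):
    print(ts, node, msg)
```

On a node, the same function can be fed a file object, e.g. `problems(open("Julend_ES-cluster.log"))`.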

Checking the Elasticsearch cluster status

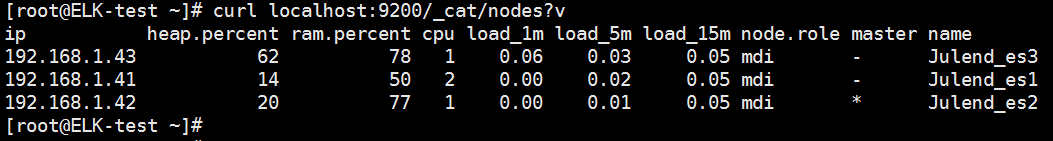

Listing the nodes in the cluster

`curl 'localhost:9200/_cat/nodes?v'`

Cluster health check

`curl 'localhost:9200/_cat/health?v'`
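The `?v` flag adds a header row to `_cat` output. For scripts, the `_cat` APIs also accept `format=json`, which avoids column parsing entirely. When only the text output is at hand, a header-driven split works as long as no value contains spaces. A sketch follows; `parse_cat` is hypothetical.

```python
def parse_cat(text):
    """Turn `_cat/...?v` text (header row, then data rows) into a list of dicts.

    Assumes whitespace-separated columns and no spaces inside values.
    """
    rows = [line.split() for line in text.strip().splitlines() if line.strip()]
    if not rows:
        return []
    header, body = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in body]
```

For example, feed it the output of `curl -s 'localhost:9200/_cat/health?v'` and read the `status` column.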

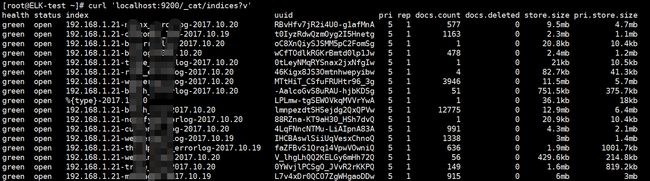

Listing Elasticsearch indices

`curl 'localhost:9200/_cat/indices?v'`

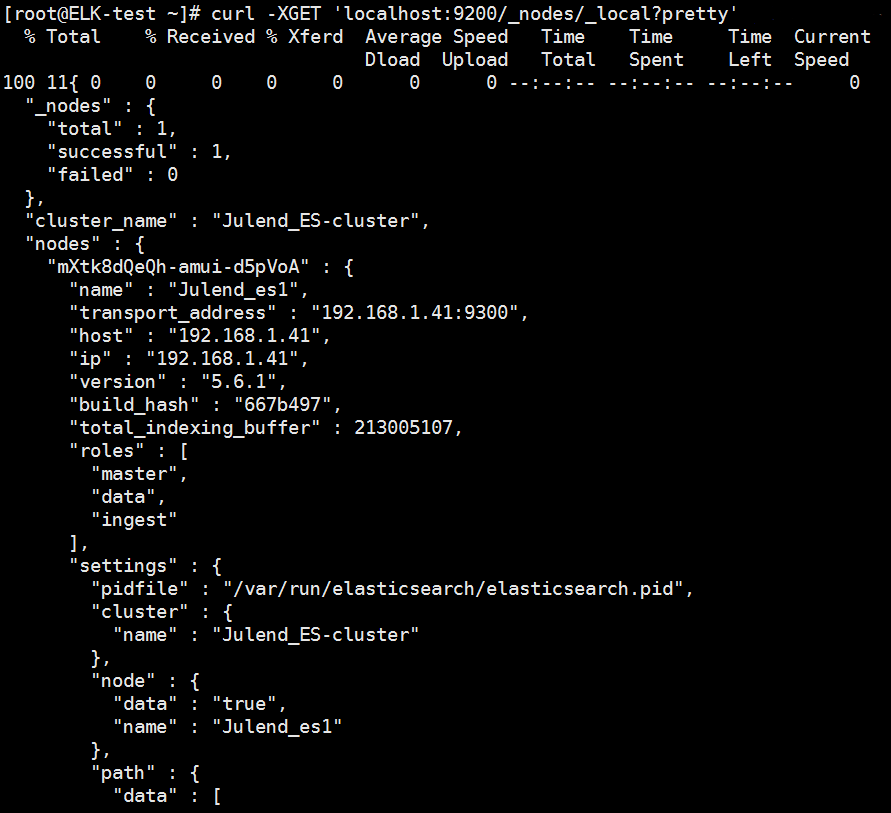

Querying the local node

`curl -XGET 'localhost:9200/_nodes/_local?pretty'`

Local node query 1

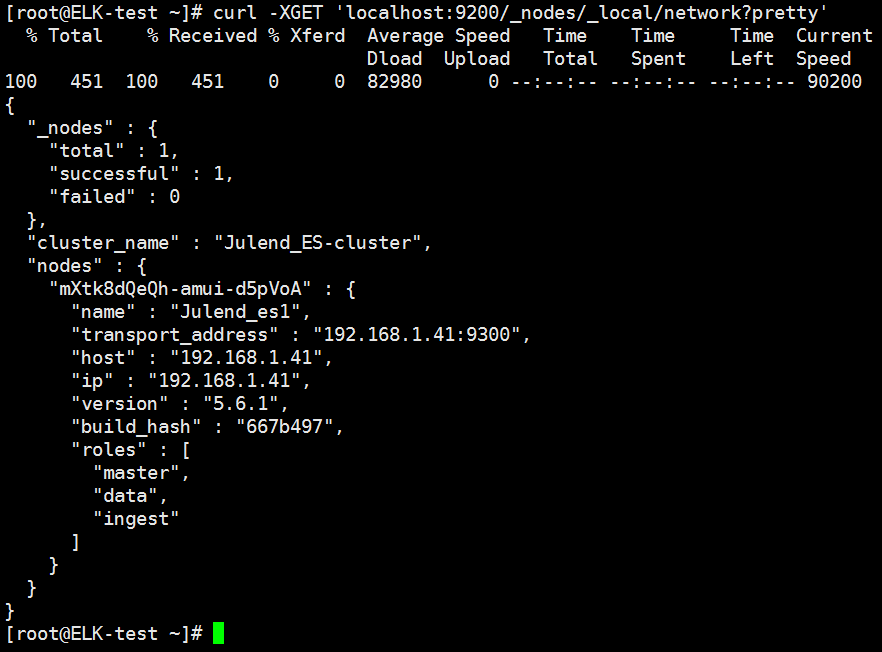

`curl -XGET 'localhost:9200/_nodes/_local/network?pretty'`

Local node query 2

Node status

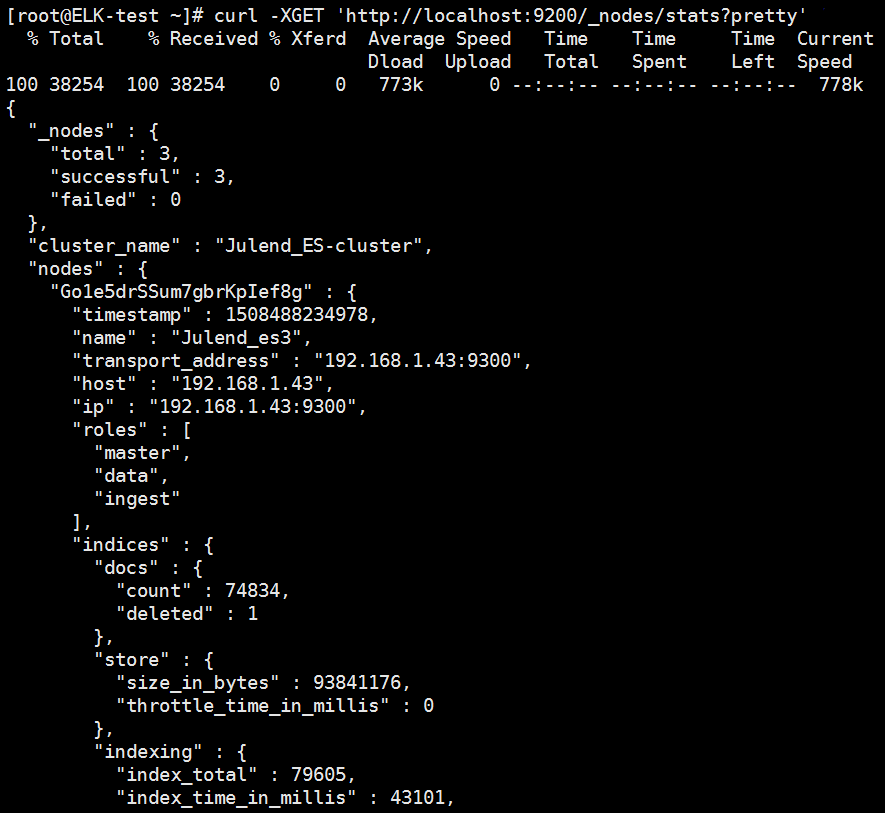

`curl -XGET 'http://localhost:9200/_nodes/stats?pretty'`

Node status 1

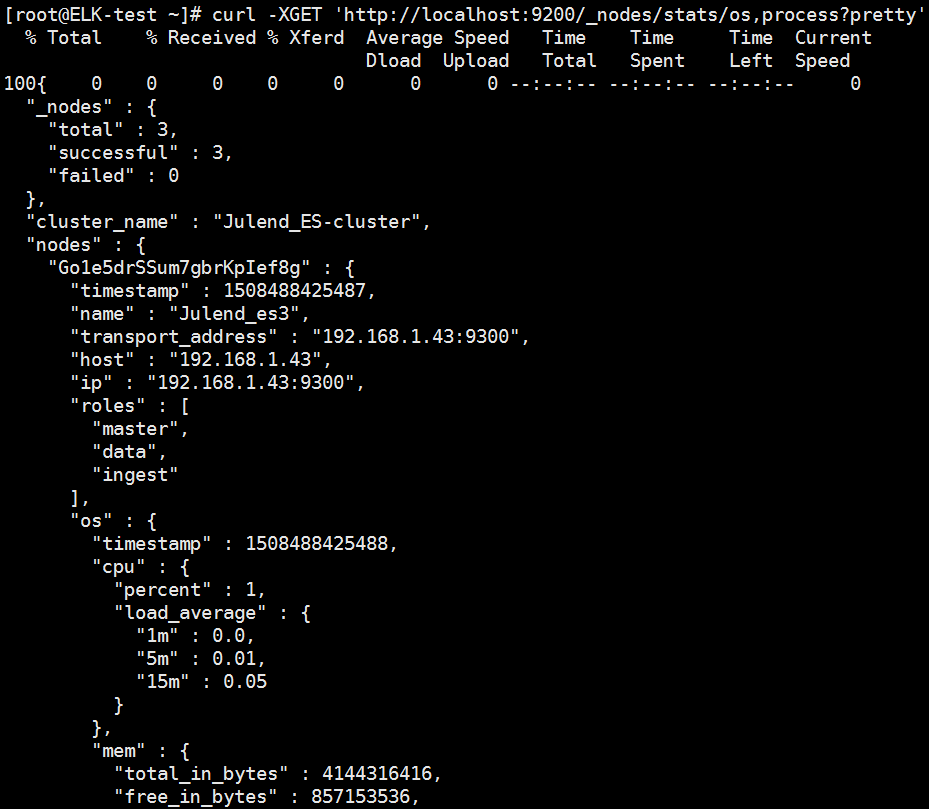

`curl -XGET 'http://localhost:9200/_nodes/stats/os,process?pretty'`

Node status 2

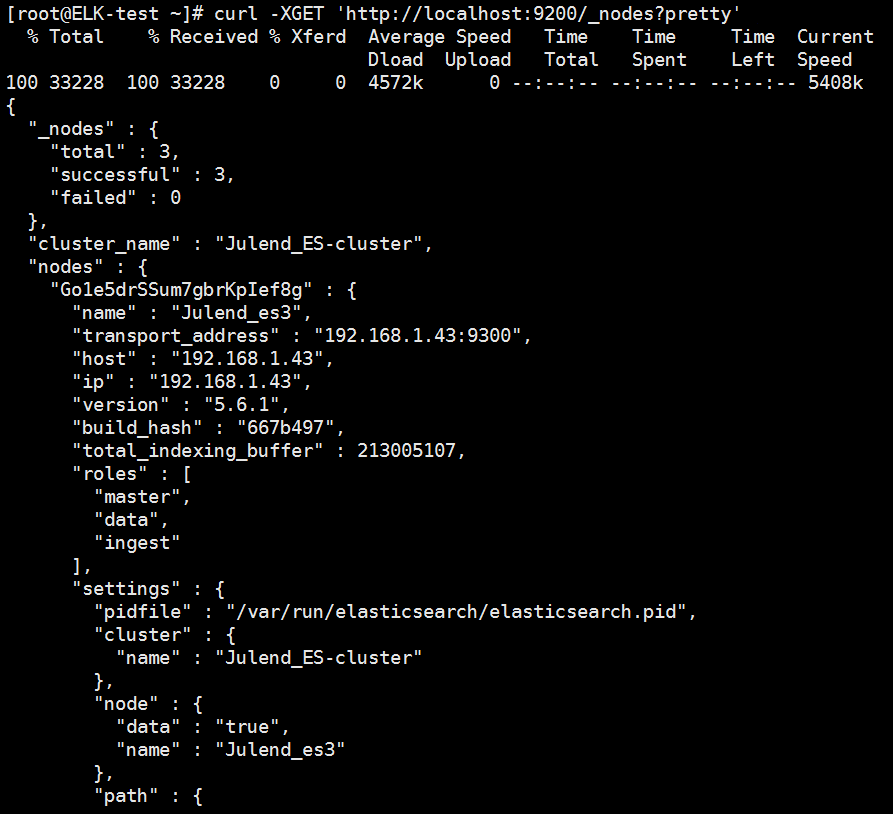

`curl -XGET 'http://localhost:9200/_nodes?pretty'`

Node status 3

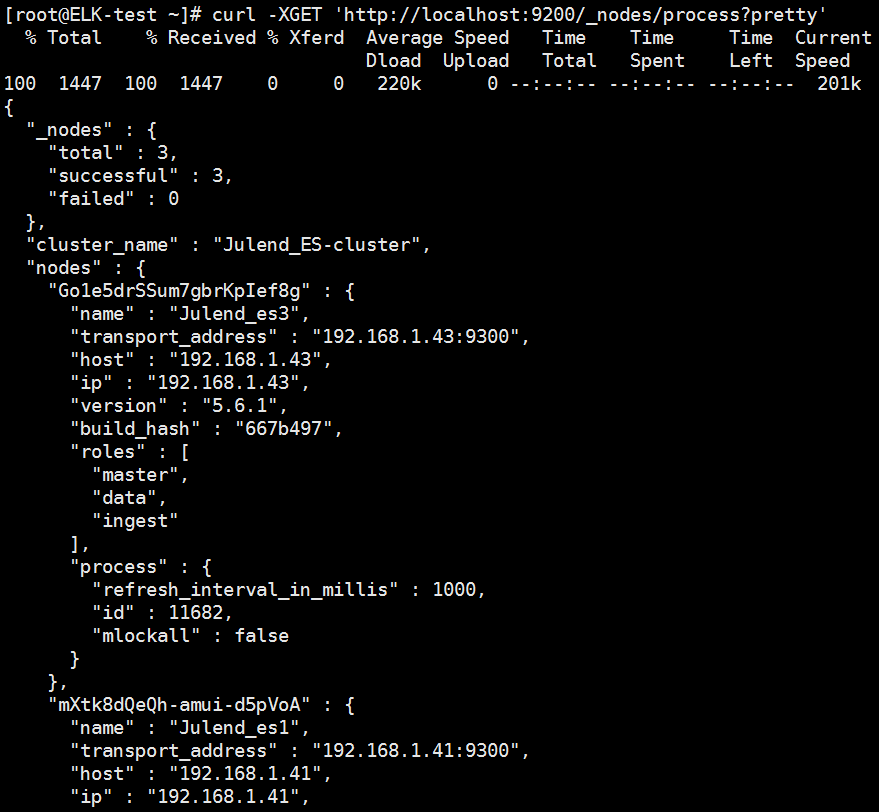

`curl -XGET 'http://localhost:9200/_nodes/process?pretty'`

Node status 4

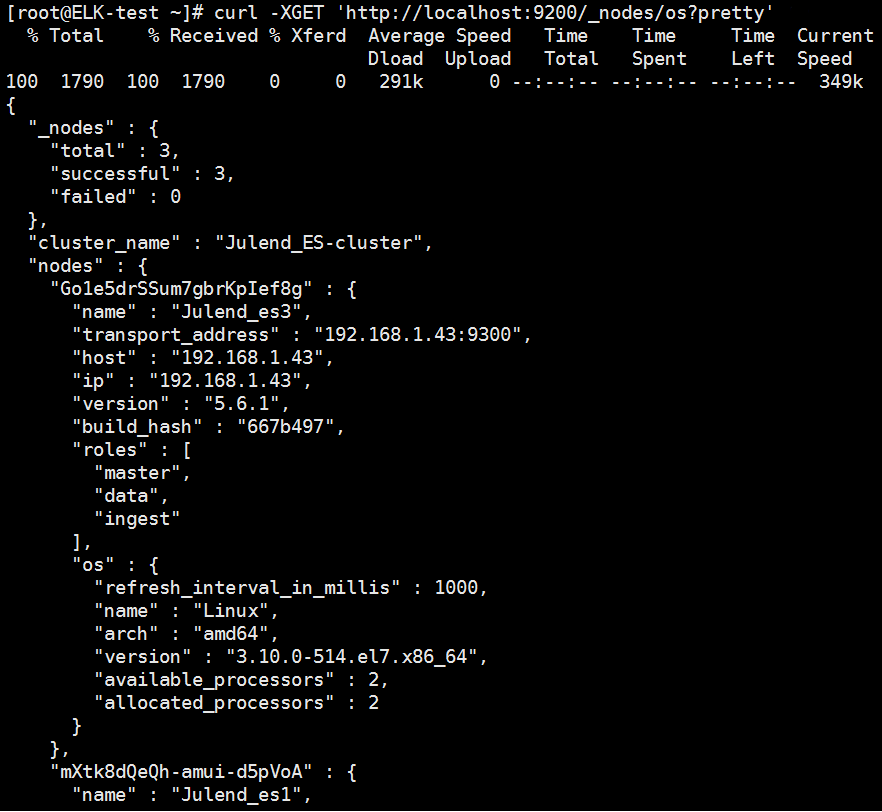

`curl -XGET 'http://localhost:9200/_nodes/os?pretty'`

Node status 5

`curl -XGET 'http://localhost:9200/_nodes/settings?pretty'`

Node status 6

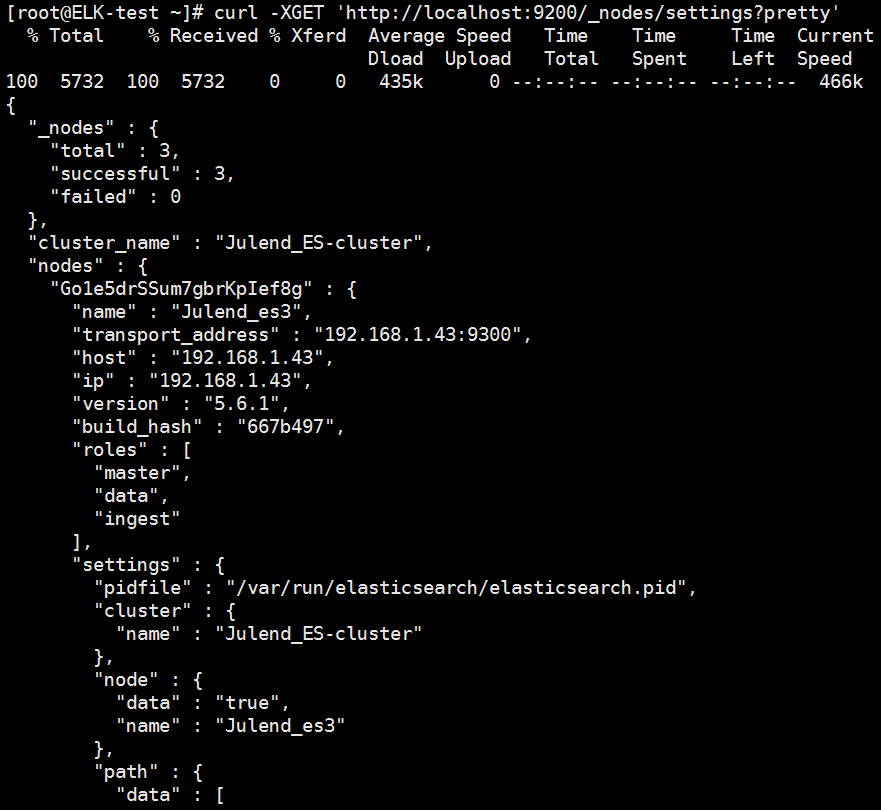

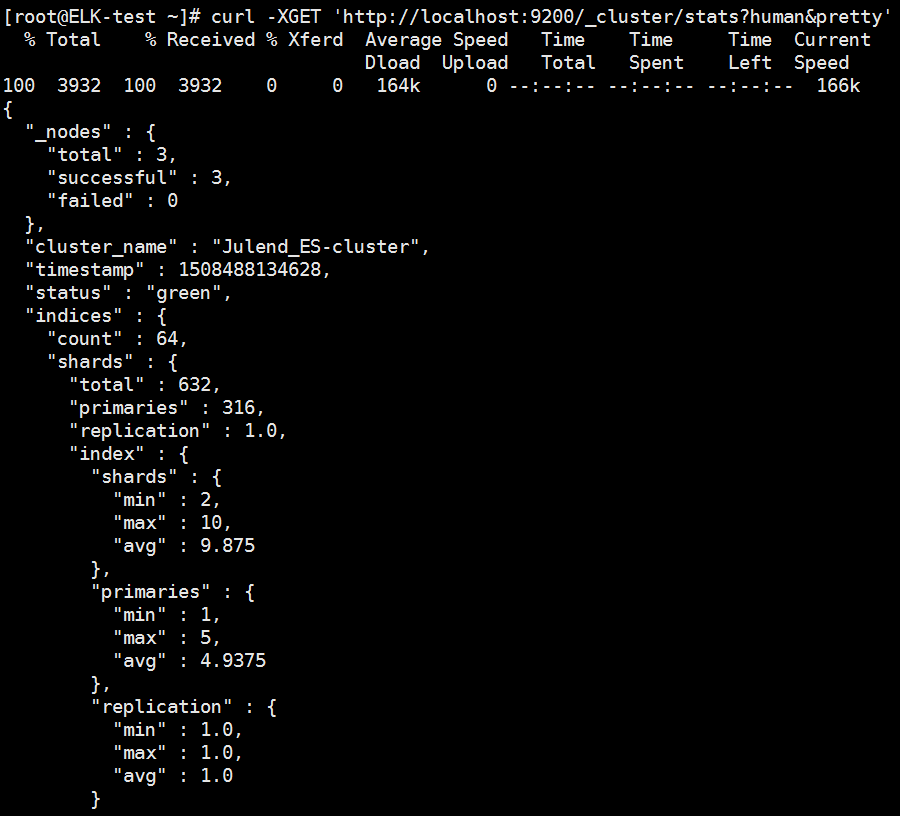

Getting cluster health

`curl -XGET 'http://localhost:9200/_cluster/health?pretty=true'`
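Scripts that start services, such as Logstash, usually want to wait until the cluster is at least yellow. `_cluster/health` accepts `wait_for_status` and `timeout` parameters, so Elasticsearch itself does the waiting. Below is a standard-library sketch; `cluster_health`, `summarize` and `MEANING` are hypothetical names. The error handling is hedged: when the wait times out, the response may carry a non-200 status, which `urlopen` raises as `HTTPError`, so the body is read from the error object instead.

```python
import json
from urllib.error import HTTPError
from urllib.request import urlopen

MEANING = {
    "green": "all primary and replica shards allocated",
    "yellow": "all primaries allocated, some replicas not",
    "red": "at least one primary shard not allocated",
}


def cluster_health(base="http://localhost:9200", wait_for="yellow", timeout="30s"):
    """GET /_cluster/health, letting Elasticsearch wait for `wait_for` up to `timeout`."""
    url = f"{base}/_cluster/health?wait_for_status={wait_for}&timeout={timeout}"
    try:
        with urlopen(url) as resp:
            return json.load(resp)
    except HTTPError as err:
        # A timed-out wait may come back as an HTTP error; its body should still be
        # the health document (with "timed_out": true). Other errors will not parse.
        return json.load(err)


def summarize(health):
    """One-line summary of a /_cluster/health document."""
    status = health["status"]
    return (f"{health['cluster_name']}: {status} ({MEANING[status]}), "
            f"{health['number_of_nodes']} nodes, "
            f"{health['unassigned_shards']} unassigned shards")
```

On a cluster node, `print(summarize(cluster_health()))` gives a one-line status.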

Cluster state

`curl -XGET 'http://localhost:9200/_cluster/state?pretty'`

Cluster state 1

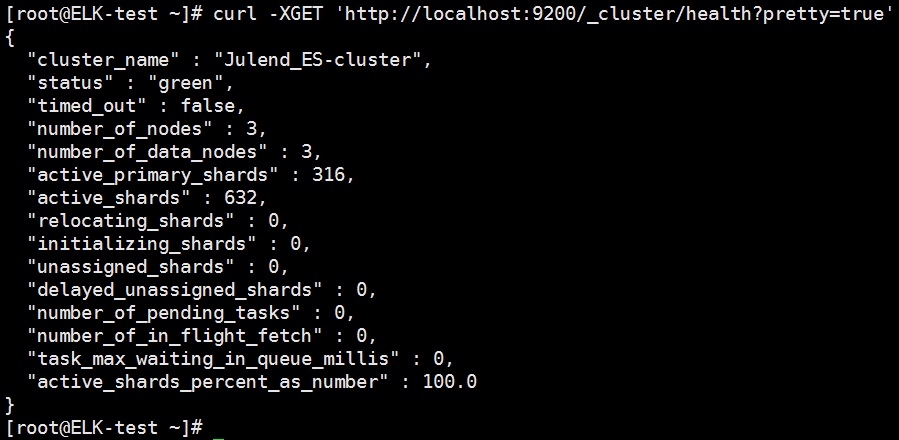

`curl -XGET 'http://localhost:9200/_cluster/stats?human&pretty'`

Cluster state 2

For details see: http://www.elasticsearch.org/guide/en/elasticsearch/reference/current/cluster-nodes-stats.html

http://www.elasticsearch.org/guide/en/elasticsearch/reference/current/cluster-nodes-info.html

```
[root@ELK-test ~]# ls /data/ELK_data/elasticsearch/logs/
Julend_ES-cluster-2017-10-17.log  Julend_ES-cluster_deprecation.log            Julend_ES-cluster_index_search_slowlog.log
Julend_ES-cluster-2017-10-18.log  Julend_ES-cluster_index_indexing_slowlog.log  Julend_ES-cluster.log
[root@ELK-test ~]#
```

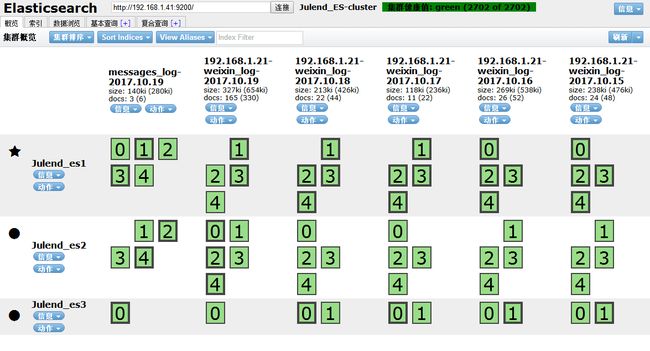

Installation of elasticsearch-head is omitted here.

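After a third Elasticsearch node joins a cluster that previously had two, the existing two nodes automatically relocate shards to the new node.

The Elasticsearch cluster starts rebalancing:

elasticsearch_cluster_status

Once rebalancing completes, shards are spread almost evenly across the nodes. The cluster can then tolerate losing one node, provided two conditions hold. First, every index must have at least one replica. Second, discovery.zen.minimum_master_nodes must let the remaining two nodes elect a master; that requires 2, and with the 3 configured above, master election stops.

elasticsearch_cluster_status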

Kibana status

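This work is licensed under the Creative Commons Attribution 2.5 China Mainland License. Reposting is welcome, but please credit Jack Wang Blog as the source and keep the article intact. The author reserves all related rights.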


Copyright © 2019 Jack Wang Blog. Powered by Hexo. Theme by Cho.