Cluster planning

| 系统版本 | 主机名 | IP | 用途 |

|---|---|---|---|

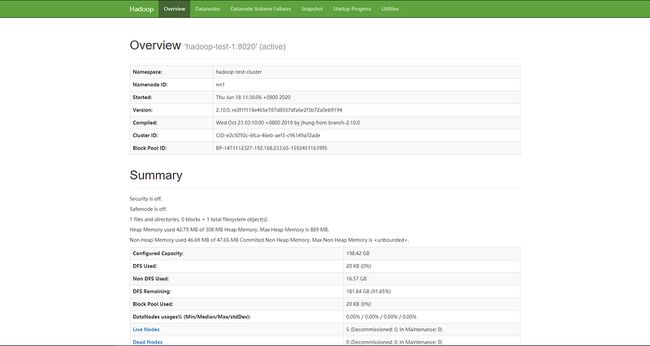

| CentOS-7.7 | hadoop-test-1 | 192.168.233.65 | namenode datanode DFSZKFailoverController hive hmaster resourcemanager NodeManager |

| CentOS-7.7 | hadoop-test-2 | 192.168.233.94 | namenode datanode DFSZKFailoverController hmaster resourcemanager NodeManager |

| CentOS-7.7 | hadoop-test-3 | 192.168.233.17 | datanode zookeeper NodeManager |

| CentOS-7.7 | hadoop-test-4 | 192.168.233.238 | datanode zookeeper NodeManager |

| CentOS-7.7 | hadoop-test-5 | 192.168.233.157 | datanode zookeeper NodeManager |
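The commands in this guide address nodes by hostname (for example `ssh hadoop-test-$i`), so every node has to resolve those names. The source does not show how that was set up. One common approach, sketched here purely as an assumption, is a set of `/etc/hosts` entries built from the plan above; `cluster_hosts` is a hypothetical helper name.

```shell
# Print /etc/hosts lines for the five nodes in the cluster plan.
# Assumption: name resolution goes through /etc/hosts; the source does not say.
cluster_hosts() {
  cat <<'EOF'
192.168.233.65 hadoop-test-1
192.168.233.94 hadoop-test-2
192.168.233.17 hadoop-test-3
192.168.233.238 hadoop-test-4
192.168.233.157 hadoop-test-5
EOF
}

# Review the output first, then append it as root on each node, e.g.:
#   cluster_hosts >> /etc/hosts
cluster_hosts
```

Printing the lines instead of writing them directly keeps the step reviewable before anything under `/etc` changes.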

Hadoop installation

$ wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-2.10.0/hadoop-2.10.0.tar.gz

$ tar -zxvf hadoop-2.10.0.tar.gz -C /data

$ mv /data/hadoop-2.10.0 /data/hadoop

$ for i in {1..5};do ssh hadoop-test-$i "mkdir /data/hdfs -p";done

$ for i in {1..5};do ssh hadoop-test-$i "chown -R hadoop:hadoop /data/hdfs";done

$ su hadoop

$ cd /data/hadoop/etc/hadoop

$ vim hdfs-site.xml

Set the following properties (each row is one `<property>` entry with a `<name>` and a `<value>`):

| Property | Value |
|---|---|
| dfs.block.size | 67108864 |
| dfs.replication | 3 |
| dfs.nameservices | hadoop-test-cluster |
| dfs.namenode.name.dir | /data/hdfs/nn |
| dfs.datanode.data.dir | /data/hdfs/dn |
| dfs.ha.namenodes.hadoop-test-cluster | nn1,nn2 |
| dfs.namenode.rpc-address.hadoop-test-cluster.nn1 | 192.168.233.65:8020 |
| dfs.namenode.rpc-address.hadoop-test-cluster.nn2 | 192.168.233.94:8020 |
| dfs.webhdfs.enabled | true |
| dfs.journalnode.http-address | 0.0.0.0:8480 |
| dfs.journalnode.rpc-address | 0.0.0.0:8481 |
| dfs.namenode.http-address.hadoop-test-cluster.nn1 | 192.168.233.65:50070 |
| dfs.namenode.http-address.hadoop-test-cluster.nn2 | 192.168.233.94:50070 |
| dfs.namenode.shared.edits.dir | qjournal://192.168.233.17:8481;192.168.233.238:8481;192.168.233.157:8481/mtr-test-cluster |
| dfs.client.failover.proxy.provider.hadoop-test-cluster | org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider |
| dfs.ha.automatic-failover.enabled | true |
| dfs.ha.fencing.methods | sshfence |
| dfs.ha.fencing.ssh.private-key-files | /home/hadoop/.ssh/id_rsa |
| dfs.journalnode.edits.dir | /data/hdfs/journal |
| ha.zookeeper.quorum | 192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181 |
| dfs.datanode.hdfs-blocks-metadata.enabled | true |
| dfs.block.local-path-access.user | impala |
| dfs.client.file-block-storage-locations.timeout.millis | 60000 |
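Each property name and value listed above corresponds to one `<property>` element inside the `<configuration>` root of hdfs-site.xml. As a rough sketch of that mapping, the snippet below prints a fragment for four of the pairs; `prop` and `hdfs_site_fragment` are hypothetical helper names, and the values used here contain no characters that would need XML escaping.

```shell
# Emit one Hadoop <property> element for a name/value pair.
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Wrap a few of the hdfs-site.xml pairs listed above in <configuration>.
hdfs_site_fragment() {
  echo '<?xml version="1.0"?>'
  echo '<configuration>'
  prop dfs.nameservices hadoop-test-cluster
  prop dfs.ha.namenodes.hadoop-test-cluster nn1,nn2
  prop dfs.namenode.rpc-address.hadoop-test-cluster.nn1 192.168.233.65:8020
  prop dfs.namenode.rpc-address.hadoop-test-cluster.nn2 192.168.233.94:8020
  echo '</configuration>'
}

hdfs_site_fragment
```

The same pattern applies to core-site.xml, mapred-site.xml, and yarn-site.xml.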

core-site.xml

$ vim core-site.xml

| Property | Value | Description |
|---|---|---|
| fs.defaultFS | hdfs://hadoop-test-cluster | |
| hadoop.tmp.dir | /data/hdfs/tmp | |
| hadoop.logfile.size | 10000000 | Maximum size of each log file, in bytes |
| hadoop.logfile.count | 10 | Maximum number of log files |
| ha.zookeeper.quorum | 192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181 | |
| dfs.client.read.shortcircuit | true | |
| dfs.client.read.shortcircuit.skip.checksum | false | |
| dfs.datanode.hdfs-blocks-metadata.enabled | true | |
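The same three hosts (hadoop-test-3 to hadoop-test-5) appear in `ha.zookeeper.quorum` and in the `qjournal://` URI of `dfs.namenode.shared.edits.dir`, with different ports and separators. A small sketch for deriving both strings from one host list, which may help avoid typos; `join_hosts` and `ZK_NODES` are hypothetical names introduced only for this illustration.

```shell
# join_hosts SEP PORT HOST...
# Join HOST:PORT items with SEP.
join_hosts() {
  sep=$1; port=$2; shift 2
  out=
  for h in "$@"; do
    out="${out:+$out$sep}$h:$port"
  done
  printf '%s\n' "$out"
}

ZK_NODES="192.168.233.17 192.168.233.238 192.168.233.157"

# ha.zookeeper.quorum: comma-separated, ZooKeeper client port 2181.
join_hosts , 2181 $ZK_NODES

# dfs.namenode.shared.edits.dir: semicolon-separated, JournalNode RPC port 8481,
# followed by the journal ID used in hdfs-site.xml.
printf 'qjournal://%s/mtr-test-cluster\n' "$(join_hosts ';' 8481 $ZK_NODES)"
```

The two printed lines should match the values configured for these two properties.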

mapred-site.xml

$ vim mapred-site.xml

| Property | Value |
|---|---|
| mapreduce.framework.name | yarn |
| mapreduce.jobhistory.address | 192.168.233.65:10020 |
| mapreduce.jobhistory.webapp.address | 192.168.233.65:19888 |

yarn-site.xml

$ vim yarn-site.xml

| Property | Value | Description |
|---|---|---|
| yarn.log-aggregation-enable | true | |
| yarn.log-aggregation.retain-seconds | 259200 | |
| yarn.resourcemanager.connect.retry-interval.ms | 2000 | |
| yarn.nodemanager.aux-services | mapreduce_shuffle | |
| yarn.resourcemanager.ha.enabled | true | |
| yarn.resourcemanager.ha.rm-ids | rm1,rm2 | |
| ha.zookeeper.quorum | 192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181 | |
| yarn.resourcemanager.ha.automatic-failover.enabled | true | |
| yarn.resourcemanager.hostname.rm1 | 192.168.233.65 | |
| yarn.resourcemanager.hostname.rm2 | 192.168.233.94 | |
| yarn.resourcemanager.ha.id | rm1 | If we want to launch more than one RM in single node, we need this configuration |
| yarn.resourcemanager.zk-address | 192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181 | |
| yarn.resourcemanager.recovery.enabled | true | |
| yarn.resourcemanager.zk-state-store.address | 192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181 | |
| yarn.resourcemanager.store.class | org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore | |
| yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms | 5000 | |
| yarn.resourcemanager.address.rm1 | 192.168.233.65:8132 | |
| yarn.resourcemanager.scheduler.address.rm1 | 192.168.233.65:8130 | |
| yarn.resourcemanager.webapp.address.rm1 | 192.168.233.65:8188 | |
| yarn.resourcemanager.resource-tracker.address.rm1 | 192.168.233.65:8131 | |
| yarn.resourcemanager.admin.address.rm1 | 192.168.233.65:8033 | |
| yarn.resourcemanager.ha.admin.address.rm1 | 192.168.233.65:23142 | |
| yarn.resourcemanager.address.rm2 | 192.168.233.94:8132 | |
| yarn.resourcemanager.scheduler.address.rm2 | 192.168.233.94:8130 | |
| yarn.resourcemanager.webapp.address.rm2 | 192.168.233.94:8188 | |
| yarn.resourcemanager.resource-tracker.address.rm2 | 192.168.233.94:8131 | |
| yarn.resourcemanager.admin.address.rm2 | 192.168.233.94:8033 | |
| yarn.resourcemanager.ha.admin.address.rm2 | 192.168.233.94:23142 | |
| yarn.resourcemanager.am.max-attempts | 4 | |
| yarn.nodemanager.resource.memory-mb | 4096 | Memory available on each node, in MB |
| yarn.nodemanager.resource.cpu-vcores | 2 | CPU available on each node, in vcores |
| yarn.client.failover-proxy-provider | | |
| yarn.resourcemanager.ha.automatic-failover.zk-base-path | /yarn-leader-election | |
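The per-ResourceManager address properties above follow one pattern: the same six keys, each suffixed with the RM ID and pointing at that RM's host. The sketch below prints them as `name=value` lines for checking against yarn-site.xml; `rm_addresses` is a hypothetical helper, and the ports are the ones used in this guide rather than the Hadoop defaults.

```shell
# rm_addresses RM_ID HOST
# Print the six per-RM address properties for one ResourceManager.
rm_addresses() {
  id=$1; host=$2
  for pair in address:8132 scheduler.address:8130 webapp.address:8188 \
              resource-tracker.address:8131 admin.address:8033 \
              ha.admin.address:23142; do
    key=${pair%%:*}    # text before the colon
    port=${pair##*:}   # text after the colon
    printf 'yarn.resourcemanager.%s.%s=%s:%s\n' "$key" "$id" "$host" "$port"
  done
}

rm_addresses rm1 192.168.233.65
rm_addresses rm2 192.168.233.94
```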

Configure the DataNodes in the slaves file

$ vim slaves

hadoop-test-1

hadoop-test-2

hadoop-test-3

hadoop-test-4

hadoop-test-5

Edit hadoop-env.sh

$ vim hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_231

export HADOOP_LOG_DIR=/data/hdfs/logs

export HADOOP_SECURE_DN_LOG_DIR=/data/hdfs/logs

export HADOOP_PRIVILEGED_NFS_LOG_DIR=/data/hdfs/logs

export HADOOP_MAPRED_LOG_DIR=/data/hdfs/logs

export YARN_LOG_DIR=/data/hdfs/logs

Copy /data/hadoop to /data/ on every node
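The source states this copy step without showing a command. A minimal sketch, assuming the copy is run from hadoop-test-1 and that passwordless SSH to the other nodes already works (the earlier `ssh hadoop-test-$i` loops rely on the same thing). `run`, `sync_hadoop`, and `DRY_RUN` are hypothetical names; by default the script only prints what it would do.

```shell
# Copy /data/hadoop from this node (assumed to be hadoop-test-1) to the others.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0 to run them.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

sync_hadoop() {
  for i in 2 3 4 5; do
    run scp -r /data/hadoop "hadoop-test-$i:/data/"
  done
}

sync_hadoop
```

Whichever copy tool is used, the target directory should end up owned by the `hadoop` user so the daemons can read it.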

Configure YARN on hadoop-test-2

$ vim yarn-site.xml

yarn.resourcemanager.ha.id

rm2

If we want to launch more than one RM in single node, we need this configuration
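Because the configuration directory is copied from hadoop-test-1, `yarn.resourcemanager.ha.id` is the one value that has to differ on hadoop-test-2, where it should be `rm2`. Instead of editing by hand, the change could be scripted roughly as follows. This is a sketch that assumes `<name>` and `<value>` sit on consecutive lines; `set_rm_id_rm2` is a hypothetical helper, and the demonstration runs on a throwaway file, not the real yarn-site.xml.

```shell
# Print FILE with yarn.resourcemanager.ha.id changed from rm1 to rm2.
# Only the line right after the matching <name> line is modified.
set_rm_id_rm2() {
  sed '/<name>yarn\.resourcemanager\.ha\.id<\/name>/{
n
s/rm1/rm2/
}' "$1"
}

# Demonstration on a temporary file.
demo=$(mktemp)
cat > "$demo" <<'EOF'
<property>
  <name>yarn.resourcemanager.ha.id</name>
  <value>rm1</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname.rm1</name>
  <value>192.168.233.65</value>
</property>
EOF
set_rm_id_rm2 "$demo"
rm -f "$demo"
```

The function writes to standard output, so the result can be reviewed before it replaces the real file.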

Configure .bashrc on all nodes

$ vim ~/.bashrc

#hadoop

export HADOOP_HOME=/data/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

$ source ~/.bashrc

Initialization

$ hdfs zkfc -formatZK

20/06/18 11:29:37 INFO tools.DFSZKFailoverController: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting DFSZKFailoverController

...

...

...

20/06/18 11:29:38 INFO ha.ActiveStandbyElector: Session connected.

20/06/18 11:29:38 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/hadoop-test-cluster in ZK.

20/06/18 11:29:38 INFO zookeeper.ZooKeeper: Session: 0x300002f96aa0000 closed

20/06/18 11:29:38 INFO zookeeper.ClientCnxn: EventThread shut down for session: 0x300002f96aa0000

20/06/18 11:29:38 INFO tools.DFSZKFailoverController: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DFSZKFailoverController at hadoop-test-1/192.168.233.65

************************************************************/

Start the JournalNode on each JournalNode host

$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /data/hdfs/logs/hadoop-hadoop-journalnode-hadoop-test-3.out

$ jps

9140 Jps

9078 JournalNode

4830 QuorumPeerMain

On the primary NameNode, format the NameNode and JournalNodes

$ hadoop namenode -format

DEPRECATED: Use of this script to execute hdfs command is deprecated.

Instead use the hdfs command for it.

20/06/18 11:32:42 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

...

...

...

20/06/18 11:32:44 INFO common.Storage: Storage directory /data/hdfs/nn has been successfully formatted.

20/06/18 11:32:44 INFO namenode.FSImageFormatProtobuf: Saving image file /data/hdfs/nn/current/fsimage.ckpt_0000000000000000000 using no compression

20/06/18 11:32:44 INFO namenode.FSImageFormatProtobuf: Image file /data/hdfs/nn/current/fsimage.ckpt_0000000000000000000 of size 325 bytes saved in 0 seconds .

20/06/18 11:32:44 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

20/06/18 11:32:44 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid = 0 when meet shutdown.

20/06/18 11:32:44 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop-test-1/192.168.233.65

************************************************************/

Check ZooKeeper

$ zkCli.sh -server hadoop-test-4:2181

...

...

...

2020-06-18 11:34:25,075 [myid:hadoop-test-4:2181] - INFO [main-SendThread(hadoop-test-4:2181):ClientCnxn$SendThread@959] - Socket connection established, initiating session, client: /192.168.233.157:52598, server: hadoop-test-4/192.168.233.238:2181

2020-06-18 11:34:25,094 [myid:hadoop-test-4:2181] - INFO [main-SendThread(hadoop-test-4:2181):ClientCnxn$SendThread@1394] - Session establishment complete on server hadoop-test-4/192.168.233.238:2181, sessionid = 0x200002f8ee50001, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: hadoop-test-4:2181(CONNECTED) 0] ls /

[hadoop-ha, zookeeper]

[zk: hadoop-test-4:2181(CONNECTED) 1] ls /hadoop-ha

On the primary NameNode host, start the primary NameNode

$ hadoop-daemon.sh start namenode

starting namenode, logging to /data/hdfs/logs/hadoop-hadoop-namenode-hadoop-test-1.out

$ jps

10864 NameNode

10951 Jps

On the standby NameNode, copy the primary NameNode's data and start it

$ hdfs namenode -bootstrapStandby

20/06/18 11:37:08 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

...

...

...

20/06/18 11:37:09 INFO namenode.FSEditLog: Edit logging is async:true

20/06/18 11:37:09 INFO namenode.TransferFsImage: Opening connection to http://192.168.233.65:50070/imagetransfer?getimage=1&txid=0&storageInfo=-63:2055238485:1592451163995:CID-e2c9292c-6fca-46eb-aef3-c96149a72ade&bootstrapstandby=true

20/06/18 11:37:09 INFO common.Util: Combined time for fsimage download and fsync to all disks took 0.00s. The fsimage download took 0.00s at 0.00 KB/s. Synchronous (fsync) write to disk of /data/hdfs/nn/current/fsimage.ckpt_0000000000000000000 took 0.00s.

20/06/18 11:37:09 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 325 bytes.

20/06/18 11:37:09 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop-test-2/192.168.233.94

************************************************************/

$ hadoop-daemon.sh start namenode

starting namenode, logging to /data/hdfs/logs/hadoop-hadoop-namenode-hadoop-test-2.out

Run on both the primary and standby NameNode hosts

$ hadoop-daemon.sh start zkfc

starting zkfc, logging to /data/hdfs/logs/hadoop-hadoop-zkfc-hadoop-test-1.out

$ hadoop-daemon.sh start zkfc

starting zkfc, logging to /data/hdfs/logs/hadoop-hadoop-zkfc-hadoop-test-2.out

On the primary NameNode, start the DataNodes

$ hadoop-daemons.sh start datanode

On the primary NameNode (primary ResourceManager), start YARN

$ start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /data/hdfs/logs/yarn-hadoop-resourcemanager-hadoop-test-1.out

hadoop-test-1: starting nodemanager, logging to /data/hdfs/logs/yarn-hadoop-nodemanager-hadoop-test-1.out

hadoop-test-4: starting nodemanager, logging to /data/hdfs/logs/yarn-hadoop-nodemanager-hadoop-test-4.out

hadoop-test-2: starting nodemanager, logging to /data/hdfs/logs/yarn-hadoop-nodemanager-hadoop-test-2.out

hadoop-test-3: starting nodemanager, logging to /data/hdfs/logs/yarn-hadoop-nodemanager-hadoop-test-3.out

hadoop-test-5: starting nodemanager, logging to /data/hdfs/logs/yarn-hadoop-nodemanager-hadoop-test-5.out

On the standby NameNode (standby ResourceManager), start the standby ResourceManager

$ start-yarn.sh

Check processes
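Besides listing processes, it can be useful to ask each NameNode and ResourceManager which HA role it currently holds. The sketch below wraps `hdfs haadmin -getServiceState` and `yarn rmadmin -getServiceState` for the IDs configured earlier (`nn1`, `nn2`, `rm1`, `rm2`); `ha_states` is a hypothetical wrapper name, and the script skips the query when the Hadoop commands are not on `PATH`.

```shell
# Print the HA state (active or standby) reported for each NameNode and RM.
ha_states() {
  for nn in nn1 nn2; do
    printf '%s: %s\n' "$nn" "$(hdfs haadmin -getServiceState "$nn")"
  done
  for rm in rm1 rm2; do
    printf '%s: %s\n' "$rm" "$(yarn rmadmin -getServiceState "$rm")"
  done
}

# Only query when the Hadoop CLI is available, i.e. on a cluster node.
if command -v hdfs >/dev/null 2>&1 && command -v yarn >/dev/null 2>&1; then
  ha_states
else
  echo "hdfs/yarn not found on PATH; run this on a cluster node"
fi
```

With automatic failover enabled, one ID of each pair would normally report `active` and the other `standby`.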

$ for i in {1..5};do ssh hadoop-test-$i "jps" && echo ---;done

10864 NameNode

12753 NodeManager

17058 Jps

12628 ResourceManager

11381 DataNode

11146 DFSZKFailoverController

---

9201 NameNode

14997 ResourceManager

15701 Jps

9431 DFSZKFailoverController

9623 DataNode

10701 NodeManager

---

14353 Jps

9078 JournalNode

9639 DataNode

4830 QuorumPeerMain

10574 NodeManager

---

9616 DataNode

10547 NodeManager

14343 Jps

4808 QuorumPeerMain

9115 JournalNode

---

9826 DataNode

10758 NodeManager

4807 QuorumPeerMain

9255 JournalNode

14538 Jps

WordCount demo
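Before running the demo, it may help to confirm that each node runs the daemons expected from the cluster plan, rather than reading the `jps` listing by eye. A minimal sketch: `check_daemons` is a hypothetical helper that reads `jps` output on standard input, and the sample input is the output recorded above for hadoop-test-3.

```shell
# check_daemons "EXPECTED..."
# Read jps output on stdin and report any expected daemon name that is missing.
check_daemons() {
  expected=$1
  seen=$(awk '{print $2}')
  missing=
  for d in $expected; do
    echo "$seen" | grep -qx "$d" || missing="$missing $d"
  done
  if [ -n "$missing" ]; then
    echo "missing:$missing"
    return 1
  fi
  echo "ok"
}

# Sample input: the jps output recorded for hadoop-test-3.
check_daemons "JournalNode DataNode QuorumPeerMain NodeManager" <<'EOF'
14353 Jps
9078 JournalNode
9639 DataNode
4830 QuorumPeerMain
10574 NodeManager
EOF
```

On a live cluster the input would come from the node itself, for example `ssh hadoop-test-3 jps | check_daemons "JournalNode DataNode QuorumPeerMain NodeManager"`.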

$ cd /data/hadoop/

$ ll LICENSE.txt

-rw-r--r-- 1 hadoop hadoop 106210 6月 18 09:26 LICENSE.txt

$ hadoop fs -mkdir /input

$ hadoop fs -put LICENSE.txt /input

$ hadoop jar share/hadoop/

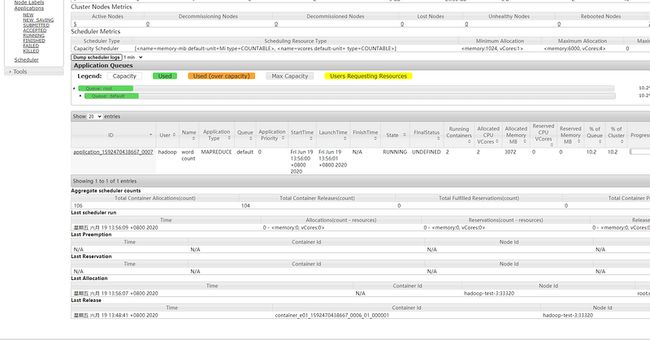

common/ hdfs/ httpfs/ kms/ mapreduce/ tools/ yarn/ $ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.10.0.jar wordcount /input /output

20/06/19 13:55:59 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

20/06/19 13:56:00 INFO input.FileInputFormat: Total input files to process : 1

20/06/19 13:56:00 INFO mapreduce.JobSubmitter: number of splits:1

20/06/19 13:56:00 INFO Configuration.deprecation: yarn.resourcemanager.zk-address is deprecated. Instead, use hadoop.zk.address

20/06/19 13:56:00 INFO Configuration.deprecation: yarn.resourcemanager.system-metrics-publisher.enabled is deprecated. Instead, use yarn.system-metrics-publisher.enabled

20/06/19 13:56:00 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1592470438667_0007

20/06/19 13:56:00 INFO conf.Configuration: resource-types.xml not found

20/06/19 13:56:00 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

20/06/19 13:56:00 INFO resource.ResourceUtils: Adding resource type - name = memory-mb, units = Mi, type = COUNTABLE

20/06/19 13:56:00 INFO resource.ResourceUtils: Adding resource type - name = vcores, units = , type = COUNTABLE

20/06/19 13:56:00 INFO impl.YarnClientImpl: Submitted application application_1592470438667_0007

20/06/19 13:56:01 INFO mapreduce.Job: The url to track the job: http://hadoop-test-2:8188/proxy/application_1592470438667_0007/

20/06/19 13:56:01 INFO mapreduce.Job: Running job: job_1592470438667_0007

20/06/19 13:56:08 INFO mapreduce.Job: Job job_1592470438667_0007 running in uber mode : false

20/06/19 13:56:08 INFO mapreduce.Job: map 0% reduce 0%

20/06/19 13:56:12 INFO mapreduce.Job: map 100% reduce 0%

20/06/19 13:56:17 INFO mapreduce.Job: map 100% reduce 100%

20/06/19 13:56:18 INFO mapreduce.Job: Job job_1592470438667_0007 completed successfully

20/06/19 13:56:18 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=36735

FILE: Number of bytes written=496235

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=106319

HDFS: Number of bytes written=27714

HDFS: Number of read operations=6

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Data-local map tasks=1

Total time spent by all maps in occupied slots (ms)=2442

Total time spent by all reduces in occupied slots (ms)=2678

Total time spent by all map tasks (ms)=2442

Total time spent by all reduce tasks (ms)=2678

Total vcore-milliseconds taken by all map tasks=2442

Total vcore-milliseconds taken by all reduce tasks=2678

Total megabyte-milliseconds taken by all map tasks=2500608

Total megabyte-milliseconds taken by all reduce tasks=2742272

Map-Reduce Framework

Map input records=1975

Map output records=15433

Map output bytes=166257

Map output materialized bytes=36735

Input split bytes=109

Combine input records=15433

Combine output records=2332

Reduce input groups=2332

Reduce shuffle bytes=36735

Reduce input records=2332

Reduce output records=2332

Spilled Records=4664

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=140

CPU time spent (ms)=1740

Physical memory (bytes) snapshot=510885888

Virtual memory (bytes) snapshot=4263968768

Total committed heap usage (bytes)=330301440

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=106210

File Output Format Counters

Bytes Written=27714

$ hdfs dfs -ls /input

Found 1 items

-rw-r--r-- 3 hadoop supergroup 106210 2020-06-19 13:55 /input/LICENSE.txt

$ hdfs dfs -ls /output

Found 2 items

-rw-r--r-- 3 hadoop supergroup 0 2020-06-19 13:56 /output/_SUCCESS

-rw-r--r-- 3 hadoop supergroup 27714 2020-06-19 13:56 /output/part-r-00000
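The listing shows that the job wrote its result to `/output/part-r-00000`. WordCount emits one `word<TAB>count` pair per line, so the most frequent words can be pulled out with standard tools. A small sketch: `top_words` is a hypothetical helper, and the sample input is made up for illustration, not taken from the real job output.

```shell
# top_words N
# Read "word<TAB>count" lines on stdin and print the N most frequent words,
# highest count first.
top_words() {
  sort -t "$(printf '\t')" -k2,2nr | head -n "$1"
}

# Hypothetical sample input, NOT the real WordCount result:
printf 'the\t120\nhadoop\t7\nlicense\t45\n' | top_words 2
```

Against the cluster, the input would come from HDFS, for example `hdfs dfs -cat /output/part-r-00000 | top_words 10`.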