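Problem when creating a table in Pinot

Background: I was creating a realtime table.

The schema and table config are as follows. Schema: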

{

"dimensionFieldSpecs": [

{

"dataType": "STRING",

"name": "FA0010000000"

},

{

"dataType": "STRING",

"name": "FA0010010000"

},

{

"dataType": "STRING",

"name": "FA0010020000"

},

{

"dataType": "DOUBLE",

"name": "FA0050000000",

"defaultNullValue": 99

},

{

"dataType": "STRING",

"name": "FA0010030000"

},

{

"dataType": "STRING",

"name": "FA0010050000"

},

{

"dataType": "STRING",

"name": "FA0020030000"

},

{

"dataType": "STRING",

"name": "FA0010040000"

},

{

"dataType": "STRING",

"name": "FA0020010000"

},{

"dataType": "STRING",

"name": "FA0020020000"

},{

"dataType": "STRING",

"name": "FA0020000000"

},

{

"dataType": "STRING",

"name": "FA0030010000"

},

{

"dataType": "STRING",

"name": "FA0070000000"

},

{

"dataType": "STRING",

"name": "FA0070010000"

},

{

"dataType": "STRING",

"name": "FA0070020000"

},

{

"dataType": "STRING",

"name": "FA0070030000"

},

{

"dataType": "STRING",

"name": "FA0070180000",

"singleValueField": false

}

],

"timeFieldSpec": {

"incomingGranularitySpec": {

"timeType": "DAYS",

"dataType": "INT",

"name": "time_day"

}

},

"schemaName": "BASIC_20"

}

The table config is as follows:

{

"tableName": "BASIC_20",

"tableType": "REALTIME",

"segmentsConfig": {

"timeColumnName": "time_day",

"timeType": "DAYS",

"segmentPushType": "APPEND",

"segmentAssignmentStrategy": "BalanceNumSegmentAssignmentStrategy",

"schemaName": "BASIC_20",

"replicasPerPartition": "2"

},

"tenants": {},

"tableIndexConfig": {

"invertedIndexColumns": ["FA0010000000","FA0010010000"],

"loadMode": "MMAP",

"streamConfigs": {

"streamType": "kafka",

"stream.kafka.consumer.type": "LowLevel",

"stream.kafka.topic.name": "BASIC_20",

"stream.kafka.decoder.class.name": "org.apache.pinot.plugin.stream.kafka.KafkaJSONMessageDecoder",

"stream.kafka.hlc.zk.connect.string": "192.168.0.110:2181",

"stream.kafka.consumer.factory.class.name": "org.apache.pinot.plugin.stream.kafka20.KafkaConsumerFactory",

"stream.kafka.broker.list": "192.168.0.110:6667"

}

},

"metadata": {

"customConfigs": {}

}

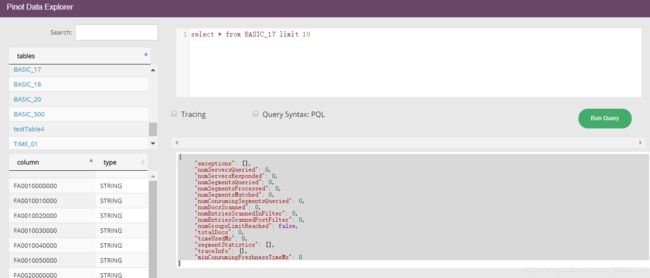

}创建后显示界面出现如下情况

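By contrast, the response for a table that had been working normally contained an additional resultTable field, so I went through the Pinot logs.

The server log contained the following error: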

2020/02/25 12:30:56.693 ERROR [HelixStateTransitionHandler] [HelixTaskExecutor-message_handle_thread] Exception while executing a state transition task BASIC_16__0__0__20200225T0430Z

java.lang.reflect.InvocationTargetException: null

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_172]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_172]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_172]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_172]

at org.apache.helix.messaging.handling.HelixStateTransitionHandler.invoke(HelixStateTransitionHandler.java:404) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.helix.messaging.handling.HelixStateTransitionHandler.handleMessage(HelixStateTransitionHandler.java:331) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.helix.messaging.handling.HelixTask.call(HelixTask.java:97) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.helix.messaging.handling.HelixTask.call(HelixTask.java:49) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) ~[?:1.8.0_172]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) ~[?:1.8.0_172]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) ~[?:1.8.0_172]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_172]

Caused by: java.lang.OutOfMemoryError: Direct buffer memory

at java.nio.Bits.reserveMemory(Bits.java:694) ~[?:1.8.0_172]

at java.nio.DirectByteBuffer.<init>(DirectByteBuffer.java:123) ~[?:1.8.0_172]

at java.nio.ByteBuffer.allocateDirect(ByteBuffer.java:311) ~[?:1.8.0_172]

at org.apache.pinot.core.segment.memory.PinotByteBuffer.allocateDirect(PinotByteBuffer.java:41) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.segment.memory.PinotDataBuffer.allocateDirect(PinotDataBuffer.java:116) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.io.writer.impl.DirectMemoryManager.allocateInternal(DirectMemoryManager.java:53) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.io.readerwriter.RealtimeIndexOffHeapMemoryManager.allocate(RealtimeIndexOffHeapMemoryManager.java:79) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.io.readerwriter.impl.FixedByteSingleColumnMultiValueReaderWriter.addHeaderBuffers(FixedByteSingleColumnMultiValueReaderWriter.java:144) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.io.readerwriter.impl.FixedByteSingleColumnMultiValueReaderWriter.<init>(FixedByteSingleColumnMultiValueReaderWriter.java:135) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.indexsegment.mutable.MutableSegmentImpl.<init>(MutableSegmentImpl.java:254) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.data.manager.realtime.LLRealtimeSegmentDataManager.<init>(LLRealtimeSegmentDataManager.java:1124) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.core.data.manager.realtime.RealtimeTableDataManager.addSegment(RealtimeTableDataManager.java:245) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.server.starter.helix.HelixInstanceDataManager.addRealtimeSegment(HelixInstanceDataManager.java:128) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.server.starter.helix.SegmentOnlineOfflineStateModelFactory$SegmentOnlineOfflineStateModel.onBecomeOnlineFromOffline(SegmentOnlineOfflineStateModelFactory.java:164) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

at org.apache.pinot.server.starter.helix.SegmentOnlineOfflineStateModelFactory$SegmentOnlineOfflineStateModel.onBecomeConsumingFromOffline(SegmentOnlineOfflineStateModelFactory.java:88) ~[pinot-all-0.3.0-SNAPSHOT-jar-with-dependencies.jar:0.3.0-SNAPSHOT-${buildNumber}]

... 12 more

2020/02/25 12:30:56.911 ERROR [StateModel] [HelixTaskExecutor-message_handle_thread] Default rollback method invoked on error. Error Code: ERROR

2020/02/25 12:30:57.089 ERROR [HelixTask] [HelixTaskExecutor-message_handle_thread] Message execution failed. msgId: ecdb0910-0e3e-4a53-8b68-228402a4f57e, errorMsg: java.lang.reflect.InvocationTargetException

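2020/02/25 12:30:57.231 ERROR [HelixStateTransitionHandler] [HelixTaskExecutor-message_handle_thread] Skip internal error. errCode: ERROR, errMsg: null

Following the stack trace into the source code shows that, for a realtime table, Pinot adds a segment as soon as the table is created, and allocates off-heap (direct) memory for it through DirectMemoryManager. The size of that allocation appears in the log: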

2020/02/25 12:30:56.051 INFO [FixedByteSingleColumnMultiValueReaderWriter] [HelixTaskExecutor-message_handle_thread] Allocating header buffer of size 60000000 for: BASIC_16__0__0__20200225T0430Z:FA0070180000.mv.fwd

This 60000000-byte header buffer, requested for the multi-value column FA0070180000, is what caused server.log to report:

Caused by: java.lang.OutOfMemoryError: Direct buffer memory
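
The failure can be reproduced outside Pinot. The following is a minimal sketch, not Pinot code: the class name DirectAllocationProbe and the method canAllocateDirect are hypothetical names chosen for illustration. It requests a direct buffer of the same size as the one in the log and reports whether the JVM could reserve it.

```java
import java.nio.ByteBuffer;

final class DirectAllocationProbe {

    /** Tries to allocate a direct (off-heap) buffer; returns false if the JVM refuses. */
    static boolean canAllocateDirect(int bytes) {
        try {
            ByteBuffer buffer = ByteBuffer.allocateDirect(bytes);
            return buffer.capacity() == bytes;
        } catch (OutOfMemoryError e) {
            // On Java 8 the message is "Direct buffer memory", as in the server log;
            // newer JDKs word it differently.
            System.err.println("Allocation failed: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        int headerBufferSize = 60_000_000; // size taken from the server log
        System.out.println("60000000-byte direct buffer allocated: "
                + canAllocateDirect(headerBufferSize));
    }
}
```

Running it with a deliberately small limit, for example java -XX:MaxDirectMemorySize=32M DirectAllocationProbe, should make the allocation fail, while a limit comfortably above 60000000 bytes should let it succeed.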

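-XX:MaxDirectMemorySize=size sets the maximum total size of New I/O (java.nio) direct-buffer allocations. The size may carry a k/K, m/M, or g/G suffix. If the option is not set, the default value is 0, which means the JVM chooses the maximum size for NIO direct-buffer allocations automatically (on HotSpot this automatic limit is typically tied to the maximum heap size).

In other words, the off-heap (direct) memory available under the Java options used to start the Pinot server was not enough, so the start-server.sh script needs to be modified.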
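
To make the unit suffixes concrete, here is a small sketch that converts a size string to bytes and compares it with the 60000000-byte header buffer from the log. The class DirectMemorySize and the method parseBytes are hypothetical helpers written for this note; they are not part of the JVM or of Pinot, and they only mimic the k/m/g convention described above.

```java
final class DirectMemorySize {

    /** Converts a JVM-style size such as "512k", "1024M" or "1g" to a byte count. */
    static long parseBytes(String size) {
        String s = size.trim();
        if (s.isEmpty()) {
            throw new IllegalArgumentException("empty size");
        }
        char unit = Character.toLowerCase(s.charAt(s.length() - 1));
        long multiplier;
        switch (unit) {
            case 'k': multiplier = 1024L; break;
            case 'm': multiplier = 1024L * 1024; break;
            case 'g': multiplier = 1024L * 1024 * 1024; break;
            default: return Long.parseLong(s); // no suffix: value is already in bytes
        }
        return Long.parseLong(s.substring(0, s.length() - 1)) * multiplier;
    }

    public static void main(String[] args) {
        long limit = parseBytes("1024M");
        long headerBuffer = 60_000_000L; // size reported in the server log
        System.out.println("limit in bytes: " + limit);
        // Upper bound only: it ignores every other direct allocation the server makes.
        System.out.println("header buffers of this size that fit: " + limit / headerBuffer);
    }
}
```

With a 1024M limit this works out to 1073741824 bytes, so at most 17 buffers of this size fit before any other direct allocation is counted. The number of consuming segments and multi-value columns on one server therefore matters when choosing the limit.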

Original script:

if [ -z "$JAVA_OPTS" ] ; then

ALL_JAVA_OPTS="-Xms1G -Xmx1G -Dlog4j2.configurationFile=conf/pinot-server-log4j2.xml"

Modified script:

if [ -z "$JAVA_OPTS" ] ; then

ALL_JAVA_OPTS="-Xms1G -Xmx1G -XX:MaxDirectMemorySize=1024M -Dlog4j2.configurationFile=conf/pinot-server-log4j2.xml"
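
One way to check that a JVM actually picked up the new option is the standard java.lang.management API. The sketch below is an illustration under assumptions: the class name DirectPoolReport and its methods are made up for this note, and the code inspects the JVM it runs in, so checking a live Pinot server would mean reading the same MXBeans over JMX instead.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;
import java.nio.ByteBuffer;

final class DirectPoolReport {

    /** Returns the bytes currently used by the JVM's "direct" buffer pool, or -1 if not found. */
    static long directMemoryUsed() {
        for (BufferPoolMXBean pool : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                return pool.getMemoryUsed();
            }
        }
        return -1L;
    }

    /** Returns the -XX:MaxDirectMemorySize argument this JVM was started with, or null if absent. */
    static String maxDirectMemoryFlag() {
        for (String arg : ManagementFactory.getRuntimeMXBean().getInputArguments()) {
            if (arg.startsWith("-XX:MaxDirectMemorySize=")) {
                return arg;
            }
        }
        return null;
    }

    public static void main(String[] args) {
        // Hold a reference so the buffer stays allocated while the pool is read.
        ByteBuffer buffer = ByteBuffer.allocateDirect(1024 * 1024);
        System.out.println("flag: " + maxDirectMemoryFlag());
        System.out.println("direct pool used: " + directMemoryUsed() + " bytes");
        System.out.println("buffer capacity: " + buffer.capacity());
    }
}
```

If the flag line prints null, the option did not reach the JVM, which in the case of start-server.sh may simply mean that JAVA_OPTS was already set in the environment and the default branch shown above was skipped.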