WebRTC Audio

Overall WebRTC voice architecture

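As Figure 1 shows, apart from libjingle, which handles P2P data transport, the audio processing framework consists mainly of VOE (Voice Engine) and the Channel adaptation layer.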

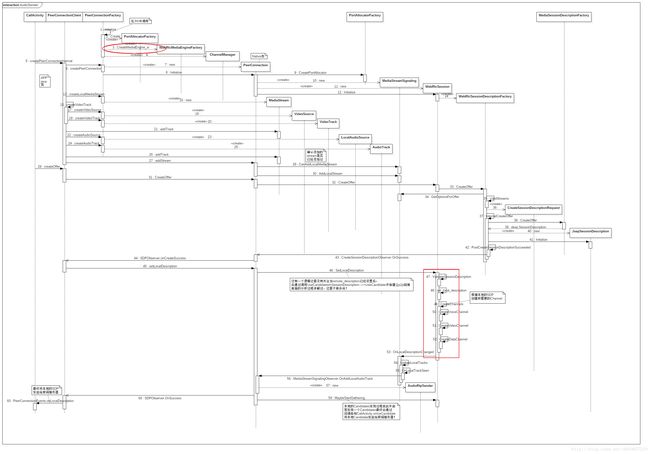

Figure 2: Sequence diagram for creating the data communication channels

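The figure above shows the complete process on the local side. VOE is created starting from CreateMediaEngine_w, and the Channel adaptation layer is created from SetLocalDescription according to the SDP. These two processes are analyzed below.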

VOE creation

/*src\talk\app\webrtc\peerconnectionfactory.cc*/

bool PeerConnectionFactory::Initialize() {

......

default_allocator_factory_ = PortAllocatorFactory::Create(worker_thread_);

.....

cricket::MediaEngineInterface* media_engine =

worker_thread_->Invoke<cricket::MediaEngineInterface*>(rtc::Bind(

&PeerConnectionFactory::CreateMediaEngine_w, this));

// Invoke with rtc::Bind effectively runs CreateMediaEngine_w on the worker_thread_ thread

.....

channel_manager_.reset(

new cricket::ChannelManager(media_engine, worker_thread_));

......

}

cricket::MediaEngineInterface* PeerConnectionFactory::CreateMediaEngine_w() {

ASSERT(worker_thread_ == rtc::Thread::Current());

return cricket::WebRtcMediaEngineFactory::Create(

default_adm_.get(), video_encoder_factory_.get(),

video_decoder_factory_.get());

}

MediaEngineInterface* WebRtcMediaEngineFactory::Create(

webrtc::AudioDeviceModule* adm,

WebRtcVideoEncoderFactory* encoder_factory,

WebRtcVideoDecoderFactory* decoder_factory) {

return CreateWebRtcMediaEngine(adm, encoder_factory, decoder_factory);

}

// CreateWebRtcMediaEngine actually creates a WebRtcMediaEngine2, which in turn inherits from the

// CompositeMediaEngine class template; it is implemented in webrtcmediaengine.cc

namespace cricket {

class WebRtcMediaEngine2

: public CompositeMediaEngine<WebRtcVoiceEngine, WebRtcVideoEngine2> {

public:

WebRtcMediaEngine2(webrtc::AudioDeviceModule* adm,

WebRtcVideoEncoderFactory* encoder_factory,

WebRtcVideoDecoderFactory* decoder_factory) {

voice_.SetAudioDeviceModule(adm);

video_.SetExternalDecoderFactory(decoder_factory);

video_.SetExternalEncoderFactory(encoder_factory);

}

};

} // namespace cricket

template<class VOICE, class VIDEO>

class CompositeMediaEngine : public MediaEngineInterface {

public:

virtual ~CompositeMediaEngine() {}

virtual bool Init(rtc::Thread* worker_thread) {

if (!voice_.Init(worker_thread)) // voice_ here is the WebRtcVoiceEngine

return false;

video_.Init(); // video_ is the WebRtcVideoEngine2, analyzed later

return true;

}

......

}
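The composite pattern used by CompositeMediaEngine can be sketched in isolation. The following is a minimal illustration, not the WebRTC implementation: `FakeVoiceEngine`, `FakeVideoEngine`, and `CompositeEngineSketch` are hypothetical names, and the worker-thread argument is left out for brevity.

```cpp
// Hypothetical stand-ins for WebRtcVoiceEngine and WebRtcVideoEngine2.
struct FakeVoiceEngine {
  bool init_ok = true;       // lets a caller simulate a failing Init()
  bool initialized = false;
  bool Init() {
    initialized = init_ok;
    return init_ok;
  }
};

struct FakeVideoEngine {
  bool initialized = false;
  void Init() { initialized = true; }
};

// Simplified composite: holds one voice and one video engine by value and
// initializes them in order, stopping early if the voice engine fails.
template <class VOICE, class VIDEO>
class CompositeEngineSketch {
 public:
  bool Init() {
    if (!voice_.Init()) return false;  // video is left untouched on failure
    video_.Init();
    return true;
  }
  VOICE& voice() { return voice_; }
  VIDEO& video() { return video_; }

 private:
  VOICE voice_;
  VIDEO video_;
};
```

Because the engine types are template parameters, the composite binds to its concrete engines at compile time, which is presumably why WebRtcMediaEngine2 only needs to name the two engine classes.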

WebRtcVoiceEngine::WebRtcVoiceEngine()

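: voe_wrapper_(new VoEWrapper()), // proxy for the underlying Voice Engine; upper layers go through this class for anything that touches the lower layer

tracing_(new VoETraceWrapper()), // tracing/debugging helper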

adm_(NULL),

log_filter_(SeverityToFilter(kDefaultLogSeverity)),

is_dumping_aec_(false) {

Construct();

}

Next, look at the classes and methods involved in constructing WebRtcVoiceEngine:

// VoEWrapper is actually a proxy for VoiceEngine (implemented in voice_engine_impl.cc)

/* webrtcvoe.h */

class VoEWrapper {

public:

VoEWrapper()

: engine_(webrtc::VoiceEngine::Create()), processing_(engine_),

base_(engine_), codec_(engine_), dtmf_(engine_),

hw_(engine_), neteq_(engine_), network_(engine_),

rtp_(engine_), sync_(engine_), volume_(engine_) {

}

/*webrtcvoiceengine.cc*/

void WebRtcVoiceEngine::Construct() {

......

// Register the engine observer callback so that low-level errors are reported to WebRtcVoiceEngine

if (voe_wrapper_->base()->RegisterVoiceEngineObserver(*this) == -1) {

LOG_RTCERR0(RegisterVoiceEngineObserver);

}

....

// Load our audio codec list.

ConstructCodecs(); // Following the kCodecPrefs table, ordered from highest to lowest audio quality, fetch the best available codecs from the lower layer

.....

options_ = GetDefaultEngineOptions(); // Set the default audio options: echo cancellation, noise suppression, automatic gain control, whether to dump, and so on

}

// WebRtcVoiceEngine initialization function

bool WebRtcVoiceEngine::Init(rtc::Thread* worker_thread) {

......

bool res = InitInternal();

......

}

bool WebRtcVoiceEngine::InitInternal() {

......

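// Initialize the underlying AudioDeviceModule. In the WebRTC demo, adm_ is NULL here.

// voe_wrapper_ is a proxy for VoiceEngine, which is implemented in voice_engine_impl.cc.

// VoiceEngineImpl inherits from VoiceEngine, and Create() actually constructs a VoiceEngineImpl

// (its Init is implemented in voe_base_impl.cc)

// and returns the object to VoEWrapper.

// So voe_wrapper_->base() is actually the VoiceEngineImpl object; its Init is analyzed below.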

if (voe_wrapper_->base()->Init(adm_) == -1) { //voe_wrapper_->base()

......

}

......

}

/*voe_base_impl.cc*/

int VoEBaseImpl::Init(AudioDeviceModule* external_adm,

AudioProcessing* audioproc) {

......

if (external_adm == nullptr) { // as noted above, the demo passes null here

#if !defined(WEBRTC_INCLUDE_INTERNAL_AUDIO_DEVICE)

return -1;

#else

// Create the internal ADM implementation.

// Create the local AudioDeviceModuleImpl object

// On Android, capture and playback are implemented through AudioRecord and AudioTrack

shared_->set_audio_device(AudioDeviceModuleImpl::Create(

VoEId(shared_->instance_id(), -1), shared_->audio_device_layer()));

if (shared_->audio_device() == nullptr) {

shared_->SetLastError(VE_NO_MEMORY, kTraceCritical,

"Init() failed to create the ADM");

return -1;

}

#endif // WEBRTC_INCLUDE_INTERNAL_AUDIO_DEVICE

} else {

// Use the already existing external ADM implementation.

shared_->set_audio_device(external_adm);

LOG_F(LS_INFO)

<< "An external ADM implementation will be used in VoiceEngine";

}

// Register the ADM to the process thread, which will drive the error

// callback mechanism

if (shared_->process_thread()) {

shared_->process_thread()->RegisterModule(shared_->audio_device());

}

bool available = false;

// --------------------

// Reinitialize the ADM

// Register an event observer on the audio device

if (shared_->audio_device()->RegisterEventObserver(this) != 0) {

shared_->SetLastError(

VE_AUDIO_DEVICE_MODULE_ERROR, kTraceWarning,

"Init() failed to register event observer for the ADM");

}

// Register the AudioTransport implementation with the audio device; it carries the audio data

if (shared_->audio_device()->RegisterAudioCallback(this) != 0) {

shared_->SetLastError(

VE_AUDIO_DEVICE_MODULE_ERROR, kTraceWarning,

"Init() failed to register audio callback for the ADM");

}

// Initialize the audio device

if (shared_->audio_device()->Init() != 0) {

shared_->SetLastError(VE_AUDIO_DEVICE_MODULE_ERROR, kTraceError,

"Init() failed to initialize the ADM");

return -1;

}

......

}

AudioDeviceModule* AudioDeviceModuleImpl::Create(const int32_t id,

const AudioLayer audioLayer){

......

RefCountImpl<AudioDeviceModuleImpl>* audioDevice =

new RefCountImpl<AudioDeviceModuleImpl>(id, audioLayer);

// Check whether the platform is supported

if (audioDevice->CheckPlatform() == -1)

{

delete audioDevice;

return NULL;

}

// Choose the implementation for the current platform. On Android this goes through JNI (audio_record_jni.cc, audio_track_jni.cc)

// to the Java-layer org/webrtc/voiceengine/WebRtcAudioRecord.java

// and org/webrtc/voiceengine/WebRtcAudioTrack.java, which implement audio capture and playback

if (audioDevice->CreatePlatformSpecificObjects() == -1)

{

delete audioDevice;

return NULL;

}

// Attach the shared audio buffer; audio data is passed on through AudioTransport

if (audioDevice->AttachAudioBuffer() == -1)

{

delete audioDevice;

return NULL;

}

......

}

Channel creation

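In the Figure 2 sequence diagram, SetLocalDescription calls CreateChannels to create the channels the session needs according to the SDP. This opens the transport channels for audio, video, and user data. The creation of the audio channel is examined in detail below; the others are similar.

The related class diagram is as follows: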

/* webrtcsession.cc */

bool WebRtcSession::CreateChannels(const SessionDescription* desc) {

// Creating the media channels and transport proxies.

// Create the VoiceChannel according to the SDP

const cricket::ContentInfo* voice = cricket::GetFirstAudioContent(desc);

if (voice && !voice->rejected && !voice_channel_) {

if (!CreateVoiceChannel(voice)) {

LOG(LS_ERROR) << "Failed to create voice channel.";

return false;

}

}

// Create the VideoChannel according to the SDP

const cricket::ContentInfo* video = cricket::GetFirstVideoContent(desc);

if (video && !video->rejected && !video_channel_) {

if (!CreateVideoChannel(video)) {

LOG(LS_ERROR) << "Failed to create video channel.";

return false;

}

}

// Create the DataChannel according to the SDP

const cricket::ContentInfo* data = cricket::GetFirstDataContent(desc);

if (data_channel_type_ != cricket::DCT_NONE &&

data && !data->rejected && !data_channel_) {

if (!CreateDataChannel(data)) {

LOG(LS_ERROR) << "Failed to create data channel.";

return false;

}

}

......

return true;

}

// The following focuses on the creation of the VoiceChannel

bool WebRtcSession::CreateVoiceChannel(const cricket::ContentInfo* content) {

// channel_manager_ is the ChannelManager created in Initialize() in peerconnectionfactory.cc

// media_controller_ is the MediaController created when WebRtcSession is initialized; it wraps the Call object so that the Call can be shared

// transport_controller() returns the TransportController created in the constructor of BaseSession, the base class of WebRtcSession

// BaseSession, the base class of WebRtcSession, handles the interaction with libjingle

voice_channel_.reset(channel_manager_->CreateVoiceChannel(

media_controller_.get(), transport_controller(), content->name, true,

audio_options_));

if (!voice_channel_) {

return false;

}

......

return true;

}

/* webrtc\src\talk\session\media\channelmanager.cc*/

VoiceChannel* ChannelManager::CreateVoiceChannel(

webrtc::MediaControllerInterface* media_controller,

TransportController* transport_controller,

const std::string& content_name,

bool rtcp,

const AudioOptions& options) {

// Invoke with Bind effectively runs ChannelManager::CreateVoiceChannel_w on worker_thread_

return worker_thread_->Invoke<VoiceChannel*>(

Bind(&ChannelManager::CreateVoiceChannel_w, this, media_controller,

transport_controller, content_name, rtcp, options));

}

VoiceChannel* ChannelManager::CreateVoiceChannel_w(

webrtc::MediaControllerInterface* media_controller,

TransportController* transport_controller,

const std::string& content_name,

bool rtcp,

const AudioOptions& options) {

......

// media_engine_ here is the WebRtcMediaEngine2 created in peerconnectionfactory.cc

// This ends up calling WebRtcVoiceEngine::CreateChannel

VoiceMediaChannel* media_channel =

media_engine_->CreateChannel(media_controller->call_w(), options);

if (!media_channel)

return nullptr;

// VoiceChannel inherits from BaseChannel; it receives data from libjingle and sends data to the remote side through libjingle

VoiceChannel* voice_channel =

new VoiceChannel(worker_thread_, media_engine_.get(), media_channel,

transport_controller, content_name, rtcp);

if (!voice_channel->Init()) {

delete voice_channel;

return nullptr;

}

voice_channels_.push_back(voice_channel);

return voice_channel;

}

VoiceMediaChannel* WebRtcVoiceEngine::CreateChannel(webrtc::Call* call,

const AudioOptions& options) {

WebRtcVoiceMediaChannel* ch =

new WebRtcVoiceMediaChannel(this, options, call);

if (!ch->valid()) {

delete ch;

return nullptr;

}

return ch;

}

WebRtcVoiceMediaChannel::WebRtcVoiceMediaChannel(WebRtcVoiceEngine* engine,

const AudioOptions& options,

webrtc::Call* call)

: engine_(engine),

voe_channel_(engine->CreateMediaVoiceChannel()), // calls WebRtcVoiceEngine::CreateMediaVoiceChannel()

...... {

// Register this WebRtcVoiceMediaChannel with WebRtcVoiceEngine, which keeps it in its ChannelList

engine->RegisterChannel(this);

......

// Register this WebRtcVoiceMediaChannel with the channel created above. WebRtcVoiceMediaChannel acts as a bridge: the underlying

// channel sends and receives the data stream through the registered Transport

ConfigureSendChannel(voe_channel());

SetOptions(options);

}

int WebRtcVoiceEngine::CreateVoiceChannel(VoEWrapper* voice_engine_wrapper) {

// VoEWrapper wraps VoiceEngine and effectively acts as its proxy.

// As the implementation in voice_engine_impl.cc shows, VoiceEngine is in turn a wrapper around VoiceEngineImpl,

// so voice_engine_wrapper->base() yields the VoiceEngineImpl object

return voice_engine_wrapper->base()->CreateChannel(voe_config_);

}

/* voe_base_impl.cc */

int VoEBaseImpl::CreateChannel() {

.....

// Create the Channel object through ChannelManager

voe::ChannelOwner channel_owner = shared_->channel_manager().CreateChannel();

return InitializeChannel(&channel_owner);

}

/* android\webrtc\src\webrtc\voice_engine\channel_manager.cc*/

ChannelOwner ChannelManager::CreateChannel() {

return CreateChannelInternal(config_);

}

ChannelOwner ChannelManager::CreateChannelInternal(const Config& config) {

Channel* channel;

// Create a new Channel object

Channel::CreateChannel(channel, ++last_channel_id_, instance_id_,

event_log_.get(), config);

ChannelOwner channel_owner(channel);

CriticalSectionScoped crit(lock_.get());

// ChannelManager keeps track of every newly created channel

channels_.push_back(channel_owner);

// Return the wrapping ChannelOwner

return channel_owner;

}
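The bookkeeping done by the voice-engine ChannelManager (allocate an id, wrap the channel in an owner, store it under a lock, hand the owner back) can be sketched as follows. This is a simplified illustration under stated assumptions: `ChannelSketch`, `ChannelOwnerSketch`, and `ChannelManagerSketch` are hypothetical names, `std::shared_ptr` stands in for the reference-counted ChannelOwner, and the starting id of -1 is an assumption rather than something shown in the listing.

```cpp
#include <cstddef>
#include <memory>
#include <mutex>
#include <vector>

// Hypothetical stand-in for voe::Channel.
struct ChannelSketch {
  explicit ChannelSketch(int channel_id) : id(channel_id) {}
  int id;
};

// std::shared_ptr plays the role of the reference-counted ChannelOwner.
using ChannelOwnerSketch = std::shared_ptr<ChannelSketch>;

class ChannelManagerSketch {
 public:
  // Creates a channel with the next id, records it, and returns its owner.
  ChannelOwnerSketch CreateChannel() {
    std::lock_guard<std::mutex> lock(lock_);
    ChannelOwnerSketch owner =
        std::make_shared<ChannelSketch>(++last_channel_id_);
    channels_.push_back(owner);
    return owner;
  }

  // Looks a channel up by id; returns an empty owner if it is unknown.
  ChannelOwnerSketch GetChannel(int id) {
    std::lock_guard<std::mutex> lock(lock_);
    for (const ChannelOwnerSketch& owner : channels_) {
      if (owner->id == id) return owner;
    }
    return nullptr;
  }

 private:
  std::mutex lock_;
  int last_channel_id_ = -1;  // assumed starting value; first channel gets 0
  std::vector<ChannelOwnerSketch> channels_;
};
```

Returning an owner rather than a raw pointer means a caller such as TransmitMixer::EncodeAndSend can keep the channel alive while it uses it, even if the manager drops its own reference in the meantime.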

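Voice sending flow

Capture

In the Android WebRTC demo, audio is captured through the system AudioRecord class. As described for VoEBaseImpl::Init, a data transport callback is registered with the AudioDeviceModule, as follows: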

int VoEBaseImpl::Init(AudioDeviceModule* external_adm,

AudioProcessing* audioproc) {

......

// Register the AudioTransport implementation

if (shared_->audio_device()->RegisterAudioCallback(this) != 0) {

shared_->SetLastError(

VE_AUDIO_DEVICE_MODULE_ERROR, kTraceWarning,

"Init() failed to register audio callback for the ADM");

}

......

}

int32_t AudioDeviceModuleImpl::RegisterAudioCallback(AudioTransport* audioCallback)

{

CriticalSectionScoped lock(&_critSectAudioCb);

// The VoEBaseImpl implementation is ultimately registered with the device's AudioDeviceBuffer

_audioDeviceBuffer.RegisterAudioCallback(audioCallback);

return 0;

}

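In summary, audio data follows the flow below, and the AudioTransport callback implemented by VoEBaseImpl ultimately receives the captured data or supplies the data to play: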

nativeDataIsRecorded(org/webrtc/voiceengine/WebRtcAudioRecord.java)--->

(audio_record_jni.cc)AudioRecordJni::DataIsRecorded-->OnDataIsRecorded-->

AudioDeviceBuffer.DeliverRecordedData--->

AudioTransport.RecordedDataIsAvailable--->

(voe_base_impl.cc)VoEBaseImpl::RecordedDataIsAvailable
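The callback registration behind this chain can be sketched as below. It is a minimal illustration, not the WebRTC code: `AudioTransportSketch`, `AudioDeviceBufferSketch`, and `VoiceBaseSketch` are hypothetical names, and the callback signature is reduced to three parameters.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Reduced, hypothetical version of the AudioTransport interface.
class AudioTransportSketch {
 public:
  virtual ~AudioTransportSketch() = default;
  virtual int32_t RecordedDataIsAvailable(const int16_t* samples,
                                          size_t num_samples,
                                          uint32_t sample_rate_hz) = 0;
};

// Plays the role of AudioDeviceBuffer: it only knows the interface.
class AudioDeviceBufferSketch {
 public:
  void RegisterAudioCallback(AudioTransportSketch* callback) {
    callback_ = callback;
  }
  // Forwards captured PCM to the registered callback; -1 if none is set.
  int32_t DeliverRecordedData(const std::vector<int16_t>& pcm,
                              uint32_t sample_rate_hz) {
    if (callback_ == nullptr) return -1;
    return callback_->RecordedDataIsAvailable(pcm.data(), pcm.size(),
                                              sample_rate_hz);
  }

 private:
  AudioTransportSketch* callback_ = nullptr;
};

// Plays the role of VoEBaseImpl: the consumer of captured audio.
class VoiceBaseSketch : public AudioTransportSketch {
 public:
  int32_t RecordedDataIsAvailable(const int16_t* /*samples*/,
                                  size_t num_samples,
                                  uint32_t sample_rate_hz) override {
    total_samples_ += num_samples;
    last_sample_rate_hz_ = sample_rate_hz;
    return 0;
  }
  size_t total_samples() const { return total_samples_; }
  uint32_t last_sample_rate_hz() const { return last_sample_rate_hz_; }

 private:
  size_t total_samples_ = 0;
  uint32_t last_sample_rate_hz_ = 0;
};
```

The device side depends only on the interface, so the platform-specific capture code (JNI on Android) never needs to know about the voice engine.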

Processing

/* voe_base_impl.cc */

int32_t VoEBaseImpl::RecordedDataIsAvailable(

const void* audioSamples, size_t nSamples, size_t nBytesPerSample,

uint8_t nChannels, uint32_t samplesPerSec, uint32_t totalDelayMS,

int32_t clockDrift, uint32_t micLevel, bool keyPressed,

uint32_t& newMicLevel) {

newMicLevel = static_cast<uint32_t>(ProcessRecordedDataWithAPM(

nullptr, 0, audioSamples, samplesPerSec, nChannels, nSamples,

totalDelayMS, clockDrift, micLevel, keyPressed));

return 0;

}

// Data obtained from the Java layer is passed straight to ProcessRecordedDataWithAPM

int VoEBaseImpl::ProcessRecordedDataWithAPM(

const int voe_channels[], int number_of_voe_channels,

const void* audio_data, uint32_t sample_rate, uint8_t number_of_channels,

size_t number_of_frames, uint32_t audio_delay_milliseconds,

int32_t clock_drift, uint32_t volume, bool key_pressed) {

......

// Adjust the volume

if (volume != 0) {

// Scale from ADM to VoE level range

if (shared_->audio_device()->MaxMicrophoneVolume(&max_volume) == 0) {

if (max_volume) {

voe_mic_level = static_cast<uint16_t>(

(volume * kMaxVolumeLevel + static_cast<int>(max_volume / 2)) /

max_volume);

}

}

// We learned that on certain systems (e.g Linux) the voe_mic_level

// can be greater than the maxVolumeLevel therefore

// we are going to cap the voe_mic_level to the maxVolumeLevel

// and change the maxVolume to volume if it turns out that

// the voe_mic_level is indeed greater than the maxVolumeLevel.

if (voe_mic_level > kMaxVolumeLevel) {

voe_mic_level = kMaxVolumeLevel;

max_volume = volume;

}

}

// A series of processing steps is applied to the audio here, such as recording to file, resampling, echo cancellation, and AGC

shared_->transmit_mixer()->PrepareDemux(

audio_data, number_of_frames, number_of_channels, sample_rate,

static_cast<uint16_t>(audio_delay_milliseconds), clock_drift,

voe_mic_level, key_pressed);

// Copy the audio frame to each sending channel and perform

// channel-dependent operations (file mixing, mute, etc.), encode and

// packetize+transmit the RTP packet. When |number_of_voe_channels| == 0,

// do the operations on all the existing VoE channels; otherwise the

// operations will be done on specific channels.

if (number_of_voe_channels == 0) {

shared_->transmit_mixer()->DemuxAndMix();

shared_->transmit_mixer()->EncodeAndSend();

} else {

shared_->transmit_mixer()->DemuxAndMix(voe_channels,

number_of_voe_channels);

shared_->transmit_mixer()->EncodeAndSend(voe_channels,

number_of_voe_channels);

}

......

}

// Return 0 to indicate no change on the volume.

return 0;

}
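The microphone-level scaling inside ProcessRecordedDataWithAPM is a rounded integer rescale from the device range to the VoE range, followed by a cap. A worked sketch follows; the function name `ScaleMicLevelSketch` is hypothetical, and the value 255 for the VoE maximum is an assumption, since the listing does not show the definition of kMaxVolumeLevel.

```cpp
#include <cstdint>

// Assumed VoE maximum level; the listing does not show kMaxVolumeLevel.
constexpr uint32_t kMaxVolumeLevelSketch = 255;

// Maps a device volume in [0, max_volume] onto [0, kMaxVolumeLevelSketch].
// Adding max_volume / 2 before dividing rounds to nearest instead of
// truncating. Returns 0 when no volume or no device maximum is available.
uint32_t ScaleMicLevelSketch(uint32_t volume, uint32_t max_volume) {
  if (volume == 0 || max_volume == 0) return 0;
  uint32_t level =
      (volume * kMaxVolumeLevelSketch + max_volume / 2) / max_volume;
  // Some systems report a volume above their own maximum, so cap the result.
  if (level > kMaxVolumeLevelSketch) level = kMaxVolumeLevelSketch;
  return level;
}
```

For example, with a device maximum of 100, a volume of 50 maps to (50 * 255 + 50) / 100 = 128, and a volume of 150 would give 383, which is capped to 255.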

Encoding

// shared_->transmit_mixer()->EncodeAndSend

// encodes the data; after encoding, packetization and sending are triggered

void TransmitMixer::EncodeAndSend(const int voe_channels[],

int number_of_voe_channels) {

for (int i = 0; i < number_of_voe_channels; ++i) {

voe::ChannelOwner ch = _channelManagerPtr->GetChannel(voe_channels[i]);

voe::Channel* channel_ptr = ch.channel();

if (channel_ptr && channel_ptr->Sending()) // check whether this channel is currently sending

channel_ptr->EncodeAndSend();

}

}

uint32_t

Channel::EncodeAndSend(){

......

// Encode and compress the audio data

if (audio_coding_->Add10MsData((AudioFrame&)_audioFrame) < 0)

{

WEBRTC_TRACE(kTraceError, kTraceVoice, VoEId(_instanceId,_channelId),

"Channel::EncodeAndSend() ACM encoding failed");

return 0xFFFFFFFF;

}

......

}

int AudioCodingModuleImpl::Add10MsData(const AudioFrame& audio_frame) {

InputData input_data;

CriticalSectionScoped lock(acm_crit_sect_.get());

// Pre-encoding processing: resample if needed and wrap the data in InputData

int r = Add10MsDataInternal(audio_frame, &input_data);

// Start encoding

return r < 0 ? r : Encode(input_data);

}

int32_t AudioCodingModuleImpl::Encode(const InputData& input_data){

......

// Get the encoder currently in use from the CodecManager

AudioEncoder* audio_encoder = codec_manager_.CurrentEncoder();

......

// Start encoding

encode_buffer_.SetSize(audio_encoder->MaxEncodedBytes());

encoded_info = audio_encoder->Encode(

rtp_timestamp, input_data.audio, input_data.length_per_channel,

encode_buffer_.size(), encode_buffer_.data());

encode_buffer_.SetSize(encoded_info.encoded_bytes);

......

{

CriticalSectionScoped lock(callback_crit_sect_.get());

if (packetization_callback_) {

// Trigger sending. packetization_callback_ is implemented by Channel, which inherits from AudioPacketizationCallback.

// Channel registers itself in Init() by calling audio_coding_->RegisterTransportCallback(this)

packetization_callback_->SendData(

frame_type, encoded_info.payload_type, encoded_info.encoded_timestamp,

encode_buffer_.data(), encode_buffer_.size(),

my_fragmentation.fragmentationVectorSize > 0 ? &my_fragmentation

: nullptr);

}

if (vad_callback_) {

// Voice activity detection (VAD) callback

vad_callback_->InFrameType(frame_type);

}

}

}

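Packetization

After encoding, the audio data triggers the packetization and sending flow through AudioPacketizationCallback.SendData, which Channel implements.

SendData is implemented as follows: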

/* android\webrtc\src\webrtc\voice_engine\channel.cc*/

int32_t

Channel::SendData(FrameType frameType,

uint8_t payloadType,

uint32_t timeStamp,

const uint8_t* payloadData,

size_t payloadSize,

const RTPFragmentationHeader* fragmentation){

......

// RTP packetization and sending

if (_rtpRtcpModule->SendOutgoingData((FrameType&)frameType,

payloadType,

timeStamp,

// Leaving the time when this frame was

// received from the capture device as

// undefined for voice for now.

-1,

payloadData,

payloadSize,

fragmentation) == -1)

{

_engineStatisticsPtr->SetLastError(

VE_RTP_RTCP_MODULE_ERROR, kTraceWarning,

"Channel::SendData() failed to send data to RTP/RTCP module");

return -1;

}

......

}

/* android\webrtc\src\webrtc\modules\rtp_rtcp\source\rtp_rtcp_impl.cc*/

// RTPSender ultimately performs the RTP packetization and sending

int32_t ModuleRtpRtcpImpl::SendOutgoingData(

FrameType frame_type,

int8_t payload_type,

uint32_t time_stamp,

int64_t capture_time_ms,

const uint8_t* payload_data,

size_t payload_size,

const RTPFragmentationHeader* fragmentation,

const RTPVideoHeader* rtp_video_hdr) {

rtcp_sender_.SetLastRtpTime(time_stamp, capture_time_ms);

if (rtcp_sender_.TimeToSendRTCPReport(kVideoFrameKey == frame_type)) {

rtcp_sender_.SendRTCP(GetFeedbackState(), kRtcpReport);

}

return rtp_sender_.SendOutgoingData(

frame_type, payload_type, time_stamp, capture_time_ms, payload_data,

payload_size, fragmentation, rtp_video_hdr);

}

/*android\webrtc\src\webrtc\modules\rtp_rtcp\source\rtp_sender.cc*/

int32_t RTPSender::SendOutgoingData(FrameType frame_type,

int8_t payload_type,

uint32_t capture_timestamp,

int64_t capture_time_ms,

const uint8_t* payload_data,

size_t payload_size,

const RTPFragmentationHeader* fragmentation,

const RTPVideoHeader* rtp_hdr) {

......

// Determine whether audio or video is being sent

if (CheckPayloadType(payload_type, &video_type) != 0) {

LOG(LS_ERROR) << "Don't send data with unknown payload type.";

return -1;

}

// For audio: audio_ is an RTPSenderAudio, implemented in android\webrtc\src\webrtc\modules\rtp_rtcp\source\rtp_sender_audio.cc

ret_val = audio_->SendAudio(frame_type, payload_type, capture_timestamp,

payload_data, payload_size, fragmentation);

// For video

ret_val =

video_->SendVideo(video_type, frame_type, payload_type,

capture_timestamp, capture_time_ms, payload_data,

payload_size, fragmentation, rtp_hdr);

}

/*android\webrtc\src\webrtc\modules\rtp_rtcp\source\rtp_sender_audio.cc*/

int32_t RTPSenderAudio::SendAudio(

const FrameType frameType,

const int8_t payloadType,

const uint32_t captureTimeStamp,

const uint8_t* payloadData,

const size_t dataSize,

const RTPFragmentationHeader* fragmentation) {

......

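// Packetize the encoded audio data according to the protocol. The full flow is fairly complex and is not analyzed here; see the source code for details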

......

// Send

return _rtpSender->SendToNetwork(dataBuffer, payloadSize, rtpHeaderLength,

-1, kAllowRetransmission,

RtpPacketSender::kHighPriority);

}
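Although the real packetization in RTPSenderAudio is more involved, the core of it is prepending an RTP header to the encoded payload. The sketch below builds only the 12-byte fixed header defined in RFC 3550 (no CSRCs, no header extension, no padding). `BuildRtpPacketSketch` is a hypothetical helper, not a WebRTC function.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Serializes the RFC 3550 fixed header followed by the payload.
// Layout: V(2) P(1) X(1) CC(4) | M(1) PT(7) | sequence number (16) |
//         timestamp (32) | SSRC (32), all multi-byte fields big-endian.
std::vector<uint8_t> BuildRtpPacketSketch(uint8_t payload_type, bool marker,
                                          uint16_t sequence_number,
                                          uint32_t timestamp, uint32_t ssrc,
                                          const std::vector<uint8_t>& payload) {
  std::vector<uint8_t> packet;
  packet.reserve(12 + payload.size());
  packet.push_back(0x80);  // version 2, no padding, no extension, CC = 0
  packet.push_back(
      static_cast<uint8_t>((marker ? 0x80 : 0x00) | (payload_type & 0x7F)));
  packet.push_back(static_cast<uint8_t>(sequence_number >> 8));
  packet.push_back(static_cast<uint8_t>(sequence_number & 0xFF));
  for (int shift = 24; shift >= 0; shift -= 8) {
    packet.push_back(static_cast<uint8_t>((timestamp >> shift) & 0xFF));
  }
  for (int shift = 24; shift >= 0; shift -= 8) {
    packet.push_back(static_cast<uint8_t>((ssrc >> shift) & 0xFF));
  }
  packet.insert(packet.end(), payload.begin(), payload.end());
  return packet;
}
```

For audio, the timestamp advances by the number of samples per frame, so the receiver can reconstruct timing independently of packet arrival times.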

Sending

As the flow above shows, once RTP packetization is complete, RTPSender carries out the sending, as follows:

int32_t RTPSender::SendToNetwork(uint8_t* buffer,

size_t payload_length,

size_t rtp_header_length,

int64_t capture_time_ms,

StorageType storage,

RtpPacketSender::Priority priority){

......

// After some timing and retransmission handling, send the data directly

bool sent = SendPacketToNetwork(buffer, length);

.....

// Update the statistics

UpdateRtpStats(buffer, length, rtp_header, false, false);

......

}

bool RTPSender::SendPacketToNetwork(const uint8_t *packet, size_t size) {

int bytes_sent = -1;

if (transport_) {

bytes_sent =

// transport_ here is actually the Channel; Channel inherits from Transport

/*

In the Channel constructor:

Channel::Channel(int32_t channelId,

uint32_t instanceId,

RtcEventLog* const event_log,

const Config& config){

RtpRtcp::Configuration configuration;

configuration.audio = true;

configuration.outgoing_transport = this; // set the Transport

configuration.audio_messages = this;

configuration.receive_statistics = rtp_receive_statistics_.get();

configuration.bandwidth_callback = rtcp_observer_.get();

_rtpRtcpModule.reset(RtpRtcp::CreateRtpRtcp(configuration));

}

// The ModuleRtpRtcpImpl constructor passes these parameters on to RTPSender

ModuleRtpRtcpImpl::ModuleRtpRtcpImpl(const Configuration& configuration)

: rtp_sender_(configuration.audio,

configuration.clock,

configuration.outgoing_transport,

configuration.audio_messages,

configuration.paced_sender,

configuration.transport_sequence_number_allocator,

configuration.transport_feedback_callback,

configuration.send_bitrate_observer,

configuration.send_frame_count_observer,

configuration.send_side_delay_observer),

rtcp_sender_(configuration.audio,

configuration.clock,

configuration.receive_statistics,

configuration.rtcp_packet_type_counter_observer),

rtcp_receiver_(configuration.clock,

configuration.receiver_only,

configuration.rtcp_packet_type_counter_observer,

configuration.bandwidth_callback,

configuration.intra_frame_callback,

configuration.transport_feedback_callback,

this)......)

*/

transport_->SendRtp(packet, size) ? static_cast<int>(size) : -1;

}

......

return true;

}

The analysis above shows that the final sending step is implemented by SendRtp in Channel:

bool

Channel::SendRtp(const uint8_t *data, size_t len){

......

// _transportPtr here is registered through int32_t Channel::RegisterExternalTransport(Transport& transport)

// Recalling the Channel creation flow analyzed earlier, in webrtcvoiceengine.cc

// the WebRtcVoiceMediaChannel constructor calls ConfigureSendChannel(voe_channel())

/*

void WebRtcVoiceMediaChannel::ConfigureSendChannel(int channel) {

// VoENetworkImpl looks up the Channel through its ChannelOwner and registers the Transport on it

if (engine()->voe()->network()->RegisterExternalTransport(

channel, *this) == -1) {

LOG_RTCERR2(RegisterExternalTransport, channel, this);

}

// Enable RTCP (for quality stats and feedback messages)

EnableRtcp(channel);

// Reset all recv codecs; they will be enabled via SetRecvCodecs.

ResetRecvCodecs(channel);

// Set RTP header extension for the new channel.

SetChannelSendRtpHeaderExtensions(channel, send_extensions_);

}

*/

if (!_transportPtr->SendRtp(bufferToSendPtr, bufferLength)) {

std::string transport_name =

_externalTransport ? "external transport" : "WebRtc sockets";

WEBRTC_TRACE(kTraceError, kTraceVoice,

VoEId(_instanceId,_channelId),

"Channel::SendPacket() RTP transmission using %s failed",

transport_name.c_str());

return false;

}

......

}

The analysis above shows that the Transport registered in Channel is actually WebRtcVoiceMediaChannel:

/*android\webrtc\src\talk\media\webrtc\webrtcvoiceengine.h*/

class WebRtcVoiceMediaChannel : public VoiceMediaChannel,

public webrtc::Transport {

......

// implements Transport interface

bool SendRtp(const uint8_t* data, size_t len) override {

rtc::Buffer packet(reinterpret_cast<const uint8_t*>(data), len,

kMaxRtpPacketLen);

return VoiceMediaChannel::SendPacket(&packet);

}

......

}

/*android\webrtc\src\talk\media\base\mediachannel.h*/

class VoiceMediaChannel : public MediaChannel {

......

// Base method to send packet using NetworkInterface.

bool SendPacket(rtc::Buffer* packet) {

return DoSendPacket(packet, false);

}

bool SendRtcp(rtc::Buffer* packet) {

return DoSendPacket(packet, true);

}

// Sets the abstract interface class for sending RTP/RTCP data.

virtual void SetInterface(NetworkInterface *iface) {

rtc::CritScope cs(&network_interface_crit_);

network_interface_ = iface;

}

private:

bool DoSendPacket(rtc::Buffer* packet, bool rtcp) {

rtc::CritScope cs(&network_interface_crit_);

if (!network_interface_)

return false;

// network_interface_ is set through SetInterface;

// it is implemented by BaseChannel (android\webrtc\src\talk\session\media\channel.h), which calls SetInterface in BaseChannel::Init() to register itself

return (!rtcp) ? network_interface_->SendPacket(packet) :

network_interface_->SendRtcp(packet);

}

......

}

/*android\webrtc\src\talk\session\media\channel.h*/

class BaseChannel

: public rtc::MessageHandler, public sigslot::has_slots<>,

public MediaChannel::NetworkInterface,

public ConnectionStatsGetter {

}

/*android\webrtc\src\talk\session\media\channel.cc*/

bool BaseChannel::Init() {

......

// Set the TransportChannel for BaseChannel

if (!SetTransport(content_name())) {

return false;

}

// Both RTP and RTCP channels are set, we can call SetInterface on

// media channel and it can set network options.

media_channel_->SetInterface(this);

return true;

}

bool BaseChannel::SendPacket(rtc::Buffer* packet,

rtc::DiffServCodePoint dscp) {

return SendPacket(false, packet, dscp);

}

bool BaseChannel::SendPacket(bool rtcp, rtc::Buffer* packet,

rtc::DiffServCodePoint dscp){

......

// Get the TransportChannel used to send the data; it is set by SetTransport, which Init() calls

TransportChannel* channel = (!rtcp || rtcp_mux_filter_.IsActive()) ?

transport_channel_ : rtcp_transport_channel_;

if (!channel || !channel->writable()) {

return false;

}

......

//

int ret =

channel->SendPacket(packet->data<char>(), packet->size(), options,

(secure() && secure_dtls()) ? PF_SRTP_BYPASS : 0);

}

bool BaseChannel::SetTransport(const std::string& transport_name) {

return worker_thread_->Invoke<bool>(

Bind(&BaseChannel::SetTransport_w, this, transport_name)); // effectively calls SetTransport_w on the worker_thread_ thread

}

bool BaseChannel::SetTransport_w(const std::string& transport_name) {

......

// First create the corresponding TransportChannel through TransportController

// (TransportChannelImpl inherits from TransportChannel and P2PTransportChannel inherits from TransportChannelImpl, so the concrete implementation is P2PTransportChannel)

set_transport_channel(transport_controller_->CreateTransportChannel_w(

transport_name, cricket::ICE_CANDIDATE_COMPONENT_RTP));

if (!transport_channel()) {

return false;

}

......

}

void BaseChannel::set_transport_channel(TransportChannel* new_tc) {

TransportChannel* old_tc = transport_channel_;

if (old_tc) { // first disconnect the event listeners from old_tc

DisconnectFromTransportChannel(old_tc);

// Destroy the unused channel to free system resources

transport_controller_->DestroyTransportChannel_w(

transport_name_, cricket::ICE_CANDIDATE_COMPONENT_RTP);

}

transport_channel_ = new_tc;

if (new_tc) { // connect the event listeners

ConnectToTransportChannel(new_tc);

for (const auto& pair : socket_options_) {

new_tc->SetOption(pair.first, pair.second);

}

}

// Notify the corresponding MediaChannel that the TransportChannel has been set

SetReadyToSend(false, new_tc && new_tc->writable());

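}

P2PTransportChannel's SendPacket involves libjingle's P2P implementation, which is not analyzed further here.

Together with Figure 1, the analysis above gives a good picture of the whole WebRTC audio framework.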
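The layering on the sending side (voice-engine Channel, then WebRtcVoiceMediaChannel as Transport, then BaseChannel as NetworkInterface) can be condensed into a small sketch. All class names below are hypothetical stand-ins, and the final network hop is replaced by a vector that records what would have been sent.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

using PacketSketch = std::vector<uint8_t>;

// Stand-in for MediaChannel::NetworkInterface.
class NetworkInterfaceSketch {
 public:
  virtual ~NetworkInterfaceSketch() = default;
  virtual bool SendPacket(const PacketSketch& packet) = 0;
};

// Stand-in for webrtc::Transport.
class TransportSketch {
 public:
  virtual ~TransportSketch() = default;
  virtual bool SendRtp(const uint8_t* data, size_t len) = 0;
};

// Plays BaseChannel: refuses to send until the transport is writable.
class BaseChannelSketch : public NetworkInterfaceSketch {
 public:
  void set_writable(bool writable) { writable_ = writable; }
  bool SendPacket(const PacketSketch& packet) override {
    if (!writable_) return false;
    sent_.push_back(packet);  // the real code hands off to TransportChannel
    return true;
  }
  const std::vector<PacketSketch>& sent() const { return sent_; }

 private:
  bool writable_ = false;
  std::vector<PacketSketch> sent_;
};

// Plays WebRtcVoiceMediaChannel: bridges Transport to NetworkInterface.
class VoiceMediaChannelSketch : public TransportSketch {
 public:
  void SetInterface(NetworkInterfaceSketch* iface) { iface_ = iface; }
  bool SendRtp(const uint8_t* data, size_t len) override {
    if (iface_ == nullptr) return false;
    return iface_->SendPacket(PacketSketch(data, data + len));
  }

 private:
  NetworkInterfaceSketch* iface_ = nullptr;
};

// Plays the voice-engine Channel: only knows the registered Transport.
class VoeChannelSketch {
 public:
  void RegisterExternalTransport(TransportSketch* transport) {
    transport_ = transport;
  }
  bool SendRtp(const PacketSketch& packet) {
    return transport_ != nullptr &&
           transport_->SendRtp(packet.data(), packet.size());
  }

 private:
  TransportSketch* transport_ = nullptr;
};
```

Each layer holds only an interface pointer to the next, which keeps the voice engine independent of libjingle; the cost is that a packet is silently dropped if any link in the chain has not been registered yet.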

Voice receiving and playback flow

Receiving

As the yellow arrows in Figure 1 show, network data is passed from libjingle into BaseChannel.

// VoiceChannel::Init() calls BaseChannel::Init()

//--->BaseChannel::Init()

//--->bool BaseChannel::SetTransport(const std::string& transport_name)

//--->bool BaseChannel::SetTransport_w(const std::string& transport_name)

//--->void BaseChannel::set_transport_channel(TransportChannel* new_tc)

//--->void BaseChannel::ConnectToTransportChannel(TransportChannel* tc)

/*

TransportChannel fires the SignalReadPacket signal for every packet it receives;

classes communicate through signals and slots

*/

void BaseChannel::ConnectToTransportChannel(TransportChannel* tc) {

ASSERT(worker_thread_ == rtc::Thread::Current());

tc->SignalWritableState.connect(this, &BaseChannel::OnWritableState);

// Every packet libjingle receives triggers BaseChannel::OnChannelRead

tc->SignalReadPacket.connect(this, &BaseChannel::OnChannelRead);

tc->SignalReadyToSend.connect(this, &BaseChannel::OnReadyToSend);

}

void BaseChannel::OnChannelRead(TransportChannel* channel,

const char* data, size_t len,

const rtc::PacketTime& packet_time,

int flags) {

// OnChannelRead gets called from P2PSocket; now pass data to MediaEngine

ASSERT(worker_thread_ == rtc::Thread::Current());

// When using RTCP multiplexing we might get RTCP packets on the RTP

// transport. We feed RTP traffic into the demuxer to determine if it is RTCP.

bool rtcp = PacketIsRtcp(channel, data, len);

rtc::Buffer packet(data, len);

HandlePacket(rtcp, &packet, packet_time);

}

void BaseChannel::HandlePacket(bool rtcp, rtc::Buffer* packet,

const rtc::PacketTime& packet_time){

......

if (!rtcp) {

//rtp packet

media_channel_->OnPacketReceived(packet, packet_time);

} else {

// rtcp packet; media_channel_ here is clearly the WebRtcVoiceMediaChannel

media_channel_->OnRtcpReceived(packet, packet_time);

}

......

}
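OnChannelRead has to decide whether a packet arriving on a multiplexed transport is RTP or RTCP before HandlePacket dispatches it. The listing does not show how PacketIsRtcp does this, so the sketch below is an assumption: it uses the payload-type range commonly applied with RTCP multiplexing (RFC 5761), where the second byte with the marker bit masked off falls in 64 to 95 for RTCP. The names `LooksLikeRtcpSketch`, `PacketCountersSketch`, and `HandlePacketSketch` are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>

// Heuristic for rtcp-mux: RTCP packet types 192..223 appear as 64..95 once
// the top bit of the second byte is masked off. RTP payload types used with
// rtcp-mux are expected to stay outside that range.
bool LooksLikeRtcpSketch(const uint8_t* data, size_t len) {
  if (len < 2) return false;
  const int type = data[1] & 0x7F;
  return type >= 64 && type < 96;
}

struct PacketCountersSketch {
  int rtp = 0;
  int rtcp = 0;
};

// Mirrors the shape of HandlePacket: classify, then dispatch.
void HandlePacketSketch(const uint8_t* data, size_t len,
                        PacketCountersSketch* counters) {
  if (LooksLikeRtcpSketch(data, len)) {
    ++counters->rtcp;  // would go to OnRtcpReceived
  } else {
    ++counters->rtp;   // would go to OnPacketReceived
  }
}
```

A sender report (packet type 200) masks to 72 and is classified as RTCP, while an RTP packet with payload type 111 stays at 111 with or without the marker bit and is classified as RTP.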

Depacketization

/*android\webrtc\src\talk\media\webrtc\webrtcvoiceengine.cc*/

void WebRtcVoiceMediaChannel::OnPacketReceived(

rtc::Buffer* packet, const rtc::PacketTime& packet_time) {

RTC_DCHECK(thread_checker_.CalledOnValidThread());

// Forward packet to Call as well.

const webrtc::PacketTime webrtc_packet_time(packet_time.timestamp,

packet_time.not_before);

// Via PacketReceiver::DeliveryStatus Call::DeliverPacket

// --->PacketReceiver::DeliveryStatus Call::DeliverRtp

// --->for audio, bool AudioReceiveStream::DeliverRtp is called; it estimates delay and the remote bitrate and updates the related state

// for video, bool VideoReceiveStream::DeliverRtp is called

call_->Receiver()->DeliverPacket(webrtc::MediaType::AUDIO,

reinterpret_cast<const uint8_t*>(packet->data()), packet->size(),

webrtc_packet_time);

// Pick which channel to send this packet to. If this packet doesn't match

// any multiplexed streams, just send it to the default channel. Otherwise,

// send it to the specific decoder instance for that stream.

int which_channel =

GetReceiveChannelNum(ParseSsrc(packet->data(), packet->size(), false));

if (which_channel == -1) {

which_channel = voe_channel();

}

// Pass it off to the decoder.

// Start depacketizing and decoding

engine()->voe()->network()->ReceivedRTPPacket(

which_channel, packet->data(), packet->size(),

webrtc::PacketTime(packet_time.timestamp, packet_time.not_before));

}

/*android\webrtc\src\webrtc\audio\audio_receive_stream.cc*/

bool AudioReceiveStream::DeliverRtp(const uint8_t* packet,

size_t length,

const PacketTime& packet_time) {

......

// Parse the packet header

if (!rtp_header_parser_->Parse(packet, length, &header)) {

return false;

}

......

// Estimate delay and bitrate

remote_bitrate_estimator_->IncomingPacket(arrival_time_ms, payload_size,

header, false);

}

/*android\webrtc\src\webrtc\voice_engine\voe_network_impl.cc*/

int VoENetworkImpl::ReceivedRTPPacket(int channel,

const void* data,

size_t length,

const PacketTime& packet_time){

......

// Following the earlier analysis, channelPtr here is the Channel from android\webrtc\src\webrtc\voice_engine\Channel.cc

return channelPtr->ReceivedRTPPacket((const int8_t*)data, length,

packet_time);

}

/*android\webrtc\src\webrtc\voice_engine\Channel.cc*/

int32_t Channel::ReceivedRTPPacket(const int8_t* data, size_t length,

const PacketTime& packet_time){

......

const uint8_t* received_packet = reinterpret_cast<const uint8_t*>(data);

RTPHeader header;

// Parse the packet header

if (!rtp_header_parser_->Parse(received_packet, length, &header)) {

WEBRTC_TRACE(webrtc::kTraceDebug, webrtc::kTraceVoice, _channelId,

"Incoming packet: invalid RTP header");

return -1;

}

......

// Start depacketizing and decoding

return ReceivePacket(received_packet, length, header, in_order) ? 0 : -1;

}

bool Channel::ReceivePacket(const uint8_t* packet,

size_t packet_length,

const RTPHeader& header,

bool in_order){

......

const uint8_t* payload = packet + header.headerLength;

......

// Hand the payload on towards the decoder

return rtp_receiver_->IncomingRtpPacket(header, payload, payload_length,

payload_specific, in_order);

}
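Channel::ReceivePacket locates the payload at packet + header.headerLength, so the header parser must account for the variable parts of the RTP header. The sketch below parses the RFC 3550 fixed header, the CSRC list, and the length of an optional header extension. `RtpHeaderSketch`, `ReadBe32Sketch`, and `ParseRtpHeaderSketch` are hypothetical names, and padding is deliberately ignored.

```cpp
#include <cstddef>
#include <cstdint>

struct RtpHeaderSketch {
  bool marker = false;
  uint8_t payload_type = 0;
  uint16_t sequence_number = 0;
  uint32_t timestamp = 0;
  uint32_t ssrc = 0;
  size_t header_length = 0;  // offset of the first payload byte
};

// Reads a 32-bit big-endian value.
uint32_t ReadBe32Sketch(const uint8_t* p) {
  return (static_cast<uint32_t>(p[0]) << 24) |
         (static_cast<uint32_t>(p[1]) << 16) |
         (static_cast<uint32_t>(p[2]) << 8) | static_cast<uint32_t>(p[3]);
}

// Returns false if the buffer is too short or the version is not 2.
bool ParseRtpHeaderSketch(const uint8_t* packet, size_t length,
                          RtpHeaderSketch* header) {
  const size_t kFixedHeaderSize = 12;
  if (length < kFixedHeaderSize) return false;
  if ((packet[0] >> 6) != 2) return false;

  const bool has_extension = (packet[0] & 0x10) != 0;
  const size_t csrc_count = packet[0] & 0x0F;
  size_t header_length = kFixedHeaderSize + 4 * csrc_count;
  if (length < header_length) return false;

  if (has_extension) {
    // Extension: 16-bit profile id, 16-bit length in 32-bit words, data.
    if (length < header_length + 4) return false;
    const size_t extension_words =
        (static_cast<size_t>(packet[header_length + 2]) << 8) |
        packet[header_length + 3];
    header_length += 4 + 4 * extension_words;
    if (length < header_length) return false;
  }

  header->marker = (packet[1] & 0x80) != 0;
  header->payload_type = static_cast<uint8_t>(packet[1] & 0x7F);
  header->sequence_number =
      static_cast<uint16_t>((packet[2] << 8) | packet[3]);
  header->timestamp = ReadBe32Sketch(packet + 4);
  header->ssrc = ReadBe32Sketch(packet + 8);
  header->header_length = header_length;
  return true;
}
```

With the header length known, the payload pointer and payload length follow directly as packet + header_length and length - header_length.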

Decoding

/*android\webrtc\src\webrtc\modules\rtp_rtcp\source\rtp_receiver_impl.cc*/

bool RtpReceiverImpl::IncomingRtpPacket(

const RTPHeader& rtp_header,

const uint8_t* payload,

size_t payload_length,

PayloadUnion payload_specific,

bool in_order){

// Trigger our callbacks.

CheckSSRCChanged(rtp_header);

......

// Pass the data to the decoder through a callback

int32_t ret_val = rtp_media_receiver_->ParseRtpPacket(

&webrtc_rtp_header, payload_specific, is_red, payload, payload_length,

clock_->TimeInMilliseconds(), is_first_packet_in_frame);

}

/*android\webrtc\src\webrtc\modules\rtp_rtcp\source\rtp_receiver_audio.cc*/

int32_t RTPReceiverAudio::ParseRtpPacket(WebRtcRTPHeader* rtp_header,

const PayloadUnion& specific_payload,

bool is_red,

const uint8_t* payload,

size_t payload_length,

int64_t timestamp_ms,

bool is_first_packet) {

......

return ParseAudioCodecSpecific(rtp_header,

payload,

payload_length,

specific_payload.Audio,

is_red);

}

int32_t RTPReceiverAudio::ParseAudioCodecSpecific(

WebRtcRTPHeader* rtp_header,

const uint8_t* payload_data,

size_t payload_length,

const AudioPayload& audio_specific,

bool is_red) {

// Handle DTMF (telephone events)

bool telephone_event_packet =

TelephoneEventPayloadType(rtp_header->header.payloadType);

if (telephone_event_packet) {

CriticalSectionScoped lock(crit_sect_.get());

// RFC 4733 2.3

// 0 1 2 3

// 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1 2 3 4 5 6 7 8 9 0 1

// +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

// | event |E|R| volume | duration |

// +-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+-+

//

if (payload_length % 4 != 0) {

return -1;

}

size_t number_of_events = payload_length / 4;

// sanity

if (number_of_events >= MAX_NUMBER_OF_PARALLEL_TELEPHONE_EVENTS) {

number_of_events = MAX_NUMBER_OF_PARALLEL_TELEPHONE_EVENTS;

}

for (size_t n = 0; n < number_of_events; ++n) {

bool end = (payload_data[(4 * n) + 1] & 0x80) ? true : false;

......

PCM data is then obtained through int NetEqImpl::GetAudio.
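As an aside on the telephone-event branch of ParseAudioCodecSpecific shown earlier: the RFC 4733 layout in its comment (event, E bit, R bit, volume, duration) can be decoded as in the sketch below. `ToyTelephoneEvent` and `ParseToyTelephoneEvents` are hypothetical names, and the sketch omits the event-count cap and the reporting logic of the real function.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One 4-byte RFC 4733 telephone-event block (hypothetical helper type).
struct ToyTelephoneEvent {
  uint8_t event;      // DTMF digit code, e.g. 0-9 for digits "0"-"9".
  bool end;           // E bit: set on the final packet(s) of the event.
  uint8_t volume;     // 6-bit power level, in -dBm0.
  uint16_t duration;  // Duration in RTP timestamp units.
};

// Decodes every 4-byte block in the payload. Returns false if the payload
// length is not a multiple of 4, mirroring the check in the original code.
inline bool ParseToyTelephoneEvents(const uint8_t* payload, size_t length,
                                    std::vector<ToyTelephoneEvent>* events) {
  assert(events != nullptr);
  if (payload == nullptr || length % 4 != 0) return false;
  events->clear();
  for (size_t n = 0; n < length / 4; ++n) {
    const uint8_t* p = payload + 4 * n;
    ToyTelephoneEvent e;
    e.event = p[0];
    e.end = (p[1] & 0x80) != 0;  // Same mask as in the original loop.
    e.volume = p[1] & 0x3F;      // Low 6 bits; bit 6 is reserved (R).
    e.duration = static_cast<uint16_t>((p[2] << 8) | p[3]);
    events->push_back(e);
  }
  return true;
}
```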

int NetEqImpl::GetAudio(size_t max_length, int16_t* output_audio,

size_t* samples_per_channel, int* num_channels,

NetEqOutputType* type){

......

int error = GetAudioInternal(max_length, output_audio, samples_per_channel,

num_channels);

......

}

int NetEqImpl::GetAudioInternal(size_t max_length,

int16_t* output,

size_t* samples_per_channel,

int* num_channels){

......

// Decode

int decode_return_value = Decode(&packet_list, &operation,

&length, &speech_type);

......

}

int NetEqImpl::Decode(PacketList* packet_list, Operations* operation,

int* decoded_length,

AudioDecoder::SpeechType* speech_type){

......

// Get the currently active decoder

AudioDecoder* decoder = decoder_database_->GetActiveDecoder();

......

// Start decoding

if (*operation == kCodecInternalCng) {

RTC_DCHECK(packet_list->empty());

return_value = DecodeCng(decoder, decoded_length, speech_type);

} else {

return_value = DecodeLoop(packet_list, *operation, decoder,

decoded_length, speech_type);

}

......

}

What GetAudio ultimately returns is PCM data.
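The pattern behind GetAudio is that packets are inserted as they arrive while the playout side pulls one frame at a time. The toy buffer below illustrates only that pattern, under strong simplifying assumptions: packets already carry PCM (no decoder), a missing packet is replaced by silence, and sequence-number wraparound is ignored. `ToyJitterBuffer` is a hypothetical class; NetEq itself is far more elaborate.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

// Toy pull-model buffer: network side inserts, playout side pulls.
class ToyJitterBuffer {
 public:
  // Stores a frame under its sequence number; arrival order does not matter.
  void InsertPacket(uint16_t seq, std::vector<int16_t> pcm) {
    packets_[seq] = std::move(pcm);
  }

  // Returns the frame for the next expected sequence number, or silence of
  // `frame_size` samples if that packet has not arrived.
  std::vector<int16_t> GetAudio(size_t frame_size) {
    assert(frame_size > 0);
    std::vector<int16_t> out(frame_size, 0);
    auto it = packets_.find(next_seq_);
    if (it != packets_.end()) {
      out = it->second;
      out.resize(frame_size, 0);  // Pad or truncate to the requested size.
      packets_.erase(it);
    }
    ++next_seq_;
    return out;
  }

 private:
  std::map<uint16_t, std::vector<int16_t>> packets_;
  uint16_t next_seq_ = 0;
};
```

Because the caller always receives a full frame, the playout thread never blocks on the network, which is the property the playback path in the next section relies on.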

Playback

org/webrtc/voiceengine/WebRtcAudioTrack.java

The AudioTrackThread playback thread continuously pulls PCM data from native code and writes it to the AudioTrack for playback.

nativeGetPlayoutData(WebRtcAudioTrack.java)-->

void JNICALL AudioTrackJni::GetPlayoutData(audio_track_jni.cc)-->

void AudioTrackJni::OnGetPlayoutData(size_t length)(audio_track_jni.cc)

void AudioTrackJni::OnGetPlayoutData(size_t length) {

......

// Pull decoded data (in 16-bit PCM format) from jitter buffer.

// Fetch the data

int samples = audio_device_buffer_->RequestPlayoutData(frames_per_buffer_);

if (samples <= 0) {

ALOGE("AudioDeviceBuffer::RequestPlayoutData failed!");

return;

}

RTC_DCHECK_EQ(static_cast<size_t>(samples), frames_per_buffer_);

// Copy decoded data into common byte buffer to ensure that it can be

// written to the Java based audio track.

// Copy into the buffer shared with Java

samples = audio_device_buffer_->GetPlayoutData(direct_buffer_address_);

......

}

int32_t AudioDeviceBuffer::RequestPlayoutData(size_t nSamples){

......

/**/

if (_ptrCbAudioTransport)

{

uint32_t res(0);

int64_t elapsed_time_ms = -1;

int64_t ntp_time_ms = -1;

res = _ptrCbAudioTransport->NeedMorePlayData(_playSamples,

playBytesPerSample,

playChannels,

playSampleRate,

&_playBuffer[0],

nSamplesOut,

&elapsed_time_ms,

&ntp_time_ms);

if (res != 0)

{

WEBRTC_TRACE(kTraceError, kTraceAudioDevice, _id, "NeedMorePlayData() failed");

}

}

......

}
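RequestPlayoutData above is a callback hand-off: the device buffer holds a pointer to a registered transport and asks it for samples whenever the playout thread needs more. A minimal sketch of that pattern follows. All class names are hypothetical toys, and the callback signature is deliberately reduced compared with the real NeedMorePlayData.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Reduced stand-in for the AudioTransport callback interface.
class ToyAudioTransport {
 public:
  virtual ~ToyAudioTransport() = default;
  // Fills `out` with frames * channels samples and returns the number of
  // frames written per channel.
  virtual size_t NeedMorePlayData(size_t frames, size_t channels,
                                  int16_t* out) = 0;
};

// Reduced stand-in for the device buffer that owns the playout buffer.
class ToyDeviceBuffer {
 public:
  void RegisterAudioCallback(ToyAudioTransport* transport) {
    transport_ = transport;
  }

  // Returns the number of frames obtained, or -1 if nothing is registered.
  int RequestPlayoutData(size_t frames, size_t channels) {
    if (transport_ == nullptr) return -1;
    buffer_.assign(frames * channels, 0);
    const size_t got =
        transport_->NeedMorePlayData(frames, channels, buffer_.data());
    assert(got <= frames);
    return static_cast<int>(got);
  }

  const std::vector<int16_t>& buffer() const { return buffer_; }

 private:
  ToyAudioTransport* transport_ = nullptr;
  std::vector<int16_t> buffer_;
};

// Example source that fills every sample with a constant value.
class ToyConstantSource : public ToyAudioTransport {
 public:
  explicit ToyConstantSource(int16_t value) : value_(value) {}
  size_t NeedMorePlayData(size_t frames, size_t channels,
                          int16_t* out) override {
    for (size_t i = 0; i < frames * channels; ++i) out[i] = value_;
    return frames;
  }

 private:
  int16_t value_;
};
```

The device side never needs to know who produces the audio; it only depends on the interface, which is why the voice engine can be plugged in by registration.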

AudioTransport is implemented by VoEBaseImpl; for the registration process, see the analysis earlier in this document.

/*android\webrtc\src\webrtc\voice_engine\voe_base_impl.cc*/

int32_t VoEBaseImpl::NeedMorePlayData(size_t nSamples,

size_t nBytesPerSample,

uint8_t nChannels, uint32_t samplesPerSec,

void* audioSamples, size_t& nSamplesOut,

int64_t* elapsed_time_ms,

int64_t* ntp_time_ms) {

GetPlayoutData(static_cast<int>(samplesPerSec), static_cast<int>(nChannels),

nSamples, true, audioSamples,

elapsed_time_ms, ntp_time_ms);

nSamplesOut = audioFrame_.samples_per_channel_;

return 0;

}

void VoEBaseImpl::GetPlayoutData(int sample_rate, int number_of_channels,

size_t number_of_frames, bool feed_data_to_apm,

void* audio_data, int64_t* elapsed_time_ms,

int64_t* ntp_time_ms){

// Fetch the data

shared_->output_mixer()->MixActiveChannels();

// Mixing and resampling

// Additional operations on the combined signal

shared_->output_mixer()->DoOperationsOnCombinedSignal(feed_data_to_apm);

// Retrieve the final output mix (resampled to match the ADM)

shared_->output_mixer()->GetMixedAudio(sample_rate, number_of_channels,

&audioFrame_);

// Copy the PCM data

memcpy(audio_data, audioFrame_.data_,

sizeof(int16_t) * number_of_frames * number_of_channels);

}

shared_->output_mixer()->MixActiveChannels() obtains PCM data from the decoder through the channel.

/*android\webrtc\src\webrtc\voice_engine\output_mixer.cc*/

int32_t

OutputMixer::MixActiveChannels()

{

return _mixerModule.Process();

}

/*android\webrtc\src\webrtc\modules\audio_conference_mixer\source\audio_conference_mixer_impl.cc*/

int32_t AudioConferenceMixerImpl::Process() {

......

UpdateToMix(&mixList, &rampOutList, &mixedParticipantsMap,

&remainingParticipantsAllowedToMix);

......

}

void AudioConferenceMixerImpl::UpdateToMix(

AudioFrameList* mixList,

AudioFrameList* rampOutList,

std::map<int, MixerParticipant*>* mixParticipantList,

size_t* maxAudioFrameCounter){

......

for (MixerParticipantList::const_iterator participant =

_participantList.begin(); participant != _participantList.end();

++participant) {

......

// Get PCM data from the MixerParticipant, which is implemented by Channel

//

if((*participant)->GetAudioFrame(_id, audioFrame) != 0) {

WEBRTC_TRACE(kTraceWarning, kTraceAudioMixerServer, _id,

"failed to GetAudioFrame() from participant");

_audioFramePool->PushMemory(audioFrame);

continue;

......

}

}

......

}
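Once each participant has delivered a frame, the frames are combined into one output signal. The sketch below shows the simplest possible version of that step: sample-wise addition with saturation to the 16-bit range. `MixToyFrames` is a hypothetical helper; the real AudioConferenceMixerImpl also selects which participants to mix and ramps frames in and out, none of which is modeled here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Sums equally sized PCM frames sample by sample and clamps the result to
// the int16_t range. Assumes all frames have the same length.
inline std::vector<int16_t> MixToyFrames(
    const std::vector<std::vector<int16_t>>& frames) {
  if (frames.empty()) return {};
  const size_t samples = frames[0].size();

  // Accumulate in 32 bits so intermediate sums cannot overflow.
  std::vector<int32_t> sum(samples, 0);
  for (const auto& frame : frames) {
    assert(frame.size() == samples);
    for (size_t i = 0; i < samples; ++i) sum[i] += frame[i];
  }

  std::vector<int16_t> mixed(samples);
  for (size_t i = 0; i < samples; ++i) {
    const int32_t clamped =
        std::min<int32_t>(32767, std::max<int32_t>(-32768, sum[i]));
    mixed[i] = static_cast<int16_t>(clamped);
  }
  return mixed;
}
```

Clamping only after all frames are summed keeps the result independent of the order in which participants are visited.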

/*android\webrtc\src\webrtc\voice_engine\channel.cc*/

int32_t Channel::GetAudioFrame(int32_t id, AudioFrame* audioFrame){

......

// Get decoded PCM data from the AudioCodingModule

if (audio_coding_->PlayoutData10Ms(audioFrame->sample_rate_hz_,

audioFrame) == -1)

{

WEBRTC_TRACE(kTraceError, kTraceVoice,

VoEId(_instanceId,_channelId),

"Channel::GetAudioFrame() PlayoutData10Ms() failed!");

// In all likelihood, the audio in this frame is garbage. We return an

// error so that the audio mixer module doesn't add it to the mix. As

// a result, it won't be played out and the actions skipped here are

// irrelevant.

return -1;

}

......

}

/*android\webrtc\src\webrtc\modules\audio_coding\main\acm2\audio_coding_module_impl.cc*/

int AudioCodingModuleImpl::PlayoutData10Ms(int desired_freq_hz,

AudioFrame* audio_frame) {

// GetAudio always returns 10 ms, at the requested sample rate.

if (receiver_.GetAudio(desired_freq_hz, audio_frame) != 0) {

WEBRTC_TRACE(webrtc::kTraceError, webrtc::kTraceAudioCoding, id_,

"PlayoutData failed, RecOut Failed");

return -1;

}

audio_frame->id_ = id_;

return 0;

}
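The comment "GetAudio always returns 10 ms" fixes the frame size for the whole playout path, and the same arithmetic determines how many bytes GetPlayoutData copies with memcpy. A small worked sketch, with hypothetical helper names:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Number of samples per channel in a 10 ms frame.
inline size_t SamplesPer10Ms(int sample_rate_hz) {
  assert(sample_rate_hz > 0 && sample_rate_hz % 100 == 0);
  return static_cast<size_t>(sample_rate_hz / 100);
}

// Size in bytes of an interleaved 16-bit PCM frame of 10 ms, matching the
// sizeof(int16_t) * frames * channels expression used for the copy.
inline size_t BytesPer10Ms(int sample_rate_hz, size_t channels) {
  return sizeof(int16_t) * SamplesPer10Ms(sample_rate_hz) * channels;
}
```

For example, 48 kHz stereo gives 480 samples per channel and 1920 bytes per frame, while 16 kHz mono gives 160 samples and 320 bytes.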

/*android\webrtc\src\webrtc\modules\audio_coding\main\acm2\acm_receiver.cc*/

int AcmReceiver::GetAudio(int desired_freq_hz, AudioFrame* audio_frame){

......

// As the earlier decoding analysis shows, compressed audio is taken from the buffer here, decoded by the decoder, and then delivered.

if (neteq_->GetAudio(AudioFrame::kMaxDataSizeSamples,

audio_buffer_.get(),

&samples_per_channel,

&num_channels,

&type) != NetEq::kOK) {

LOG(LERROR) << "AcmReceiver::GetAudio - NetEq Failed.";

return -1;

}

......

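}

As the analysis above shows, WebRTC's overall layering is very clear. Combining Figure 1 with the relevant code makes the whole framework easy to understand.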