Bert+Bilstm+Crf 命名实体识别(NER) Keras实战

0.引言

最近做了一个命名实体识别(NER)的任务,开始用的是keras中的Embedding层+bilstm+crf,但是模型训练精度太低了,没有实用意义。后来考虑用bert来embedding,因为bert毕竟是Google花了大精力训练的模型,还是很强大的。其实网上相关tensorflow,pytorch的代码很多,但是用Keras的感觉没有一个是简单明了的,所以我在写这个网络的时候遇到不少麻烦,幸运的是最后还是实现了这个网络,正确率有99%+。作为总结,把自己的经验分享给大家,尽量做到简单明了可实现,代码粗陋,适合新手学习,也欢迎大家的宝贵意见。

1.前期准备

keras中要用bert和crf,我用到了两个封装好的函数包:keras_bert和keras_contrib,安装命令:

pip install git+https://www.github.com/keras-team/keras-contrib.git

pip install keras-bert

此外还要用到bert训练好的模型chinese_L-12_H-768_A-12,下载网址:

https://storage.googleapis.com/bert_models/2018_11_03/chinese_L-12_H-768_A-12.zip

2.任务与整体思路

NER的任务是根据自己的需要,识别机构名或者人名地名等,本次的任务就用识别公司名作为简单的例子,整体的思路就是首先使用下载的bert模型把语料样本embedding,然后输入bilstm+crf的网络训练模型。我们知道进入bilstm网络的张量应该是一个3D张量,所以任务的难点就是使用bert来embedding语料的过程,而且要值得注意的是语料样本embedding以后的squence要和标签的squence一致。这个会在后面用例子详细介绍。为了便于初学者理解语料的embedding和网络的输入,请看下图:

3.训练样本向量化

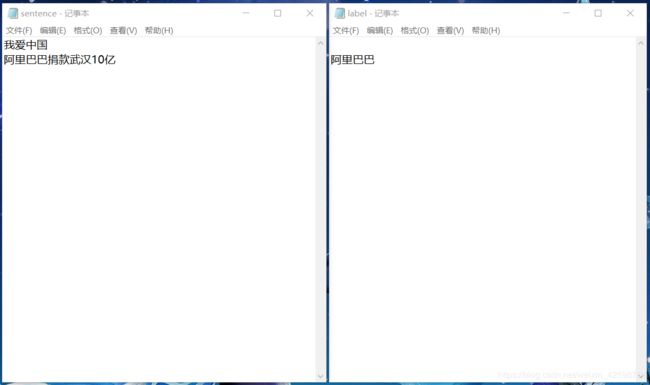

把语料向量化是这个任务的关键,在这里直观的用例子来解释向量化的过程,后面读者再结合代码,就能有比较好的理解。首先,我是把语料的样本和标签放到了不同的文档里,sentence放的是语料,label是语料的标签也就是标注的公司名。(没有公司名的就空行)

对语料的向量化:使用extract_embedding函数直接把sentence中的语料embedding成一个3D张量。【extract_embedding()处理样本太多的时候会很慢,bert_service的方法见我的另一篇博客:bert_serving 安装使用教程】

对标签的向量化:根据语料tokenize的结果进行标注。例如语料tokenizer以后的向量是:

‘[CLS]’, ‘我’, ‘爱’, ‘中’, ‘国’, ‘[SEP]’

‘[CLS]’, ‘阿’, ‘里’, ‘巴’, ‘巴’, ‘捐’, ‘款’, ‘武’, ‘汉’, ‘10’, ‘亿’, ‘[SEP]’

那对应的标注向量就是

[ [ [0],[0],[0],[0],[0],[0] ] , [ [0],[1],[2],[2],[2],[0],[0],[0],[0],[0],[0],[0] ] ]

1:代表公司名的首个字

2:代表公司名的非首个字

注:

1.bert的tokenize以后句子的首尾会多出’[CLS]‘和’[SEP]’

2.语料和标签向量化的时候要padding,否则squence长度不统一,无法统一成一个张量输入网络

4.代码

import

import numpy as np

import os

from keras_bert import extract_embeddings

from keras_bert import load_trained_model_from_checkpoint

import codecs

from keras_bert import Tokenizer

from keras.models import Sequential

from keras.layers import Embedding, Bidirectional, LSTM, TimeDistributed,Dense

from keras_contrib.layers import CRF

from keras.callbacks import Callback

from sklearn.metrics import confusion_matrix, f1_score, precision_score, recall_score

声明文件路径

pretrained_path = './chinese_L-12_H-768_A-12'

config_path = os.path.join(pretrained_path, 'bert_config.json')

checkpoint_path = os.path.join(pretrained_path, 'bert_model.ckpt')

vocab_path = os.path.join(pretrained_path, 'vocab.txt')

sentence_path=r'./sentence'

label_path=r'./label'

test_path=r'./test'

载入bert模型字典

#模型字典

token_dict = {}

with codecs.open(vocab_path, 'r', 'utf8') as reader:

for line in reader:

token = line.strip()

token_dict[token] = len(token_dict)

tokenizer = Tokenizer(token_dict)

样本向量化

#语料样本向量化

#pad_num:自定义语料squence长度

def pre_y(sentence,label,pad_num):

y=np.zeros((len(sentence),pad_num))

for i in range(len(sentence)):

sen=sentence[i]

tokens = tokenizer.tokenize(sen)

lab=label[i]

lab_y = np.zeros(len(tokens))

if lab!='':

top = None

#label只有一项

if ',' not in lab:

tokens_lab = tokenizer.tokenize(lab)

num_lab=len(tokens_lab)-2

for j in range(len(tokens)):

if j+num_lab-1<len(tokens):

if (tokens[j] == tokens_lab[1]) & (tokens[j + num_lab - 1] == tokens_lab[1 + num_lab - 1]):

top = j

if top==None:

print('error:' + sen)

print(tokens,tokens_lab)

break

lab_y[top] = 1 # B-COM

for g in range(top + 1, top + num_lab):

lab_y[g] = 2 # I-COM

#label有多项

else:

t=[]

n=[]

for u in lab.split(','):

tokens_lab = tokenizer.tokenize(u)

num_lab = len(tokens_lab) - 2

for j in range(len(tokens)):

if j + num_lab - 1 < len(tokens):

if (tokens[j] == tokens_lab[1]) & (tokens[j + num_lab - 1] == tokens_lab[1 + num_lab - 1]):

t.append(j)

n.append(num_lab)

if len(t)==0:

print('error:' + sen)

break

for t_num in range(len(t)):

lab_y[t[t_num]] = 1 # B-COM

for g in range(t[t_num]+1,t[t_num]+n[t_num]):

lab_y[g] = 2 # I-COM

y[i]=np.lib.pad(lab_y, (0,pad_num-len(tokens)), 'constant', constant_values=(0,0))

return y.reshape((len(sentence),pad_num,1))

#语料标签向量化

#pad_num:自定义语料squence长度

def pre_x(sentence,pad_num):

x = extract_embeddings(pretrained_path, sentence)

#padding

x_train = np.zeros((len(sentence), pad_num, x[0].shape[1]))

for i in range(len(sentence)):

for j in range(len(x[i])):

if len(x[i]) > pad_num:

print('error:超出范围!'+str(len(x[i]))+str(sentence[i]))

break

x_train[i][j] = x[i][j]

return x_train

class Metrics(Callback):

def on_train_begin(self, logs={}):

self.val_f1s = []

self.val_recalls = []

self.val_precisions = []

def on_epoch_end(self, epoch, logs={}):

val_predict=(np.asarray(self.model.predict(self.validation_data[0]))).round()

val_targ = self.validation_data[1]

# _val_f1 = f1_score(val_targ, val_predict,average='micro')

_val_recall = recall_score(val_targ, val_predict,average=None)

# _val_precision = precision_score(val_targ, val_predict,average=None)

# self.val_f1s.append(_val_f1)

self.val_recalls.append(_val_recall)

# self.val_precisions.append(_val_precision)

# print('-val_f1: %.4f --val_precision: %.4f --val_recall: %.4f'%(_val_f1, _val_precision, _val_recall))

print("— val_recall: %f " % _val_recall)

return

模型训练

EMBED_DIM = 200

BiRNN_UNITS = 200

chunk_tags=3#O,B-COM,I-COM

with open(sentence_path, encoding="utf-8", errors='ignore') as sentence_file_object:

sentence = sentence_file_object.read()

with open(label_path, encoding="utf-8", errors='ignore') as label_path_file_object:

label = label_path_file_object.read()

with open(test_path, encoding="utf-8", errors='ignore') as test_path_file_object:

test = test_path_file_object.read()

s = sentence.split('\n')

l = label.split('\n')

test_s=test.split('\n')

y=pre_y(s,l,128)

x=pre_x(s,128)

test_x=pre_x(test_s,128)

model = Sequential()

model.add(Bidirectional(LSTM(BiRNN_UNITS // 2, return_sequences=True)))

crf = CRF(chunk_tags, sparse_target=True)

model.add(crf)

model.compile('adam', loss=crf.loss_function, metrics=[crf.accuracy])

model.fit(x[:1000], y[:1000],batch_size=6,epochs=6, validation_data=(x[1000:], y[1000:]))#前1000个样本作为训练集

pre = model.predict(test_x)

最近很多人找我要源码,最后把整个项目分享给大家,至于数据涉及到商业因素,不方便公开,我用了10条样本供参考。记得收藏点赞!感谢大家支持!

获取项目代码戳这里

提取码:aylx