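RtspServer: implementation and usage

Build environment: Ubuntu 16.04, 64-bit

Cross-compilation toolchain: arm-hisiv500-linux-gcc

I recently needed to implement an RTSP server on the hi3519 so that it can stream video.

PS1: These are working notes; the work is not finished yet.

Many open-source RtspServer implementations can be found online, and each needs performance testing. Some are open source in name only (EasyIPCamera, for example, publishes only the demo source, not the SDK source, and the SDK is encrypted as well).

PS2: The performance tests showed fairly high latency for all of them, so I plan to write my own.

PS3: Multi-channel streaming is now implemented and is provided as a library with an API. Click to download RtspServerForHisiv500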

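Contents

- 1. Modifying open-source code

- 1.1 PHZ76/RtspServer

- 1.1.1 Code changes

- 1.1.2 Test code

- 1.1.2.1 Header file

- 1.1.2.2 Test code

- 1.2 live555

- 1.2.1 Cross-compiling

- 1.2.2 Testing live555MediaServer

- 2. My implementation

- 2.1 Sample code

- 2.2 Performance testing

- 2.3 Multicast extension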

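1. Modifying open-source code

1.1 PHZ76/RtspServer

Source download: PHZ76/RtspServer

1.1.1 Code changes

The upstream build produces the executables rtsp_server, rtsp_pusher and rtsp_h264_file directly. I first reorganized the source tree and the Makefile so that a static library, libAFRtsp.a, is built for use in my own project.

The source tree looks like this: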

jerry@ubuntu:~/work/RtspServer$ tree

.

├── example

│ ├── rtsp_h264_file.cpp

│ ├── rtsp_pusher.cpp

│ └── rtsp_server.cpp

├── inc

│ ├── net

│ │ ├── Acceptor.h

│ │ ├── BufferReader.h

│ │ ├── BufferWriter.h

│ │ ├── Channel.h

│ │ ├── EpollTaskScheduler.h

│ │ ├── EventLoop.h

│ │ ├── Logger.h

│ │ ├── log.h

│ │ ├── MemoryManager.h

│ │ ├── NetInterface.h

│ │ ├── Pipe.h

│ │ ├── RingBuffer.h

│ │ ├── SelectTaskScheduler.h

│ │ ├── Socket.h

│ │ ├── SocketUtil.h

│ │ ├── TaskScheduler.h

│ │ ├── TcpConnection.h

│ │ ├── TcpServer.h

│ │ ├── TcpSocket.h

│ │ ├── ThreadSafeQueue.h

│ │ ├── Timer.h

│ │ └── Timestamp.h

│ └── xop

│ ├── AACSource.h

│ ├── G711ASource.h

│ ├── H264Parser.h

│ ├── H264Source.h

│ ├── H265Source.h

│ ├── media.h

│ ├── MediaSession.h

│ ├── MediaSource.h

│ ├── RtpConnection.h

│ ├── rtp.h

│ ├── RtspConnection.h

│ ├── rtsp.h

│ ├── RtspMessage.h

│ ├── RtspPusher.h

│ └── RtspServer.h

├── LICENSE

├── Makefile

├── pic

│ └── 1.pic.JPG

├── README.md

├── src

│ ├── net

│ │ ├── Acceptor.cpp

│ │ ├── BufferReader.cpp

│ │ ├── BufferWriter.cpp

│ │ ├── EpollTaskScheduler.cpp

│ │ ├── EventLoop.cpp

│ │ ├── Logger.cpp

│ │ ├── MemoryManager.cpp

│ │ ├── NetInterface.cpp

│ │ ├── Pipe.cpp

│ │ ├── SelectTaskScheduler.cpp

│ │ ├── SocketUtil.cpp

│ │ ├── TaskScheduler.cpp

│ │ ├── TcpConnection.cpp

│ │ ├── TcpServer.cpp

│ │ ├── TcpSocket.cpp

│ │ ├── Timer.cpp

│ │ └── Timestamp.cpp

│ └── xop

│ ├── AACSource.cpp

│ ├── G711ASource.cpp

│ ├── H264Parser.cpp

│ ├── H264Source.cpp

│ ├── H265Source.cpp

│ ├── MediaSession.cpp

│ ├── RtpConnection.cpp

│ ├── RtspConnection.cpp

│ ├── RtspMessage.cpp

│ ├── RtspPusher.cpp

│ └── RtspServer.cpp

└── test.h264

8 directories, 73 files

The modified Makefile is as follows:

#CROSS :=

CROSS := arm-hisiv500-linux-

CC := $(CROSS)gcc

CXX := $(CROSS)g++

AR := $(CROSS)ar

LIB = AFRtsp

LIBDIR = lib

TARGET = ./$(LIBDIR)/lib$(LIB).a

OBJSDIR = objs

TARGET1 = rtsp_server

TARGET2 = rtsp_pusher

TARGET3 = rtsp_h264_file

RTSPLDFLAGS = -L./$(LIBDIR) -l$(LIB)

INCLUDE = -I./inc/net -I./inc/xop

CXXFLAGS = -std=c++11

LDFLAGS = -lpthread

#LDFLAGS = -lrt -pthread -lpthread -ldl -lm

ARFLAGS = -rc

SRC1 = $(notdir $(wildcard ./src/net/*.cpp))

OBJS1 = $(patsubst %.cpp,$(OBJSDIR)/%.o,$(SRC1))

SRC2 = $(notdir $(wildcard ./src/xop/*.cpp))

OBJS2 = $(patsubst %.cpp,$(OBJSDIR)/%.o,$(SRC2))

all: BUILD_DIR $(TARGET) $(TARGET1) $(TARGET2) $(TARGET3)

BUILD_DIR:

@-mkdir -p $(OBJSDIR) $(LIBDIR)

$(TARGET) : $(OBJS1) $(OBJS2)

$(AR) $(ARFLAGS) $@ $(OBJS1) $(OBJS2)

$(TARGET1) : ./example/rtsp_server.cpp

$(CXX) $< -o $@ $(RTSPLDFLAGS) $(CXXFLAGS) $(INCLUDE) $(LDFLAGS)

$(TARGET2) : ./example/rtsp_pusher.cpp

$(CXX) $< -o $@ $(RTSPLDFLAGS) $(CXXFLAGS) $(INCLUDE) $(LDFLAGS)

$(TARGET3) : ./example/rtsp_h264_file.cpp

$(CXX) $< -o $@ $(RTSPLDFLAGS) $(CXXFLAGS) $(INCLUDE) $(LDFLAGS)

$(OBJSDIR)/%.o : ./src/net/%.cpp

$(CXX) -c $< -o $@ $(CXXFLAGS) $(INCLUDE)

$(OBJSDIR)/%.o : ./src/xop/%.cpp

$(CXX) -c $< -o $@ $(CXXFLAGS) $(INCLUDE)

.PHONY : clean

clean :

-rm -rf $(OBJSDIR) $(LIBDIR) $(TARGET1) $(TARGET2) $(TARGET3)

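Usage:

After the build completes, the headers under inc and lib/libAFRtsp.a are all that a project needs. For sample code see rtsp_server.cpp, rtsp_pusher.cpp and rtsp_h264_file.cpp.

Usage report:

None yet; to be added later. If this library cannot meet the requirements, I will keep looking at other open-source libraries, build on live555, or write the whole thing myself.

Download the adjusted code: RtspServer implementation source code

PS:

During use, bool MediaSession::addMediaSource(MediaChannelId channelId, MediaSource* source) crashed with a segmentation fault at the call packets.emplace(id, tmpPkt);. I ended up making the following change: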

#if 0

if (packets.size() != 0)

{

memcpy(tmpPkt.data.get(), pkt.data.get(), pkt.size);

}

else

{

tmpPkt.data = pkt.data;

}

#else

memcpy(tmpPkt.data.get(), pkt.data.get(), pkt.size);

#endif

In theory, assigning a std::shared_ptr directly should be fine, and I do not know why it fails here.

With this change, both VLC and PotPlayer can receive the RTSP stream.
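The difference between the two branches can be shown in isolation. The sketch below is not the library's code and does not diagnose the crash. It uses a hypothetical `Packet` struct (the real xop type may differ) to show that assigning a `std::shared_ptr` makes both packets share one buffer, while `memcpy` gives the copy its own bytes. The buffer is created with an array deleter, which C++11 needs when `new[]` memory is held in a `std::shared_ptr<uint8_t>`.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <memory>

// Hypothetical stand-in for an RTP packet; not the xop definition.
struct Packet {
    std::shared_ptr<uint8_t> data;
    uint32_t size;

    explicit Packet(uint32_t capacity = 1600)
        // new[] memory needs an array deleter in a shared_ptr<uint8_t> (C++11).
        : data(new uint8_t[capacity], std::default_delete<uint8_t[]>()), size(0) {}
};

// Shallow copy: both packets point at the same buffer afterwards.
inline Packet shareBuffer(const Packet& src) {
    Packet dst(0);
    dst.data = src.data;  // dst's own (empty) buffer is released here
    dst.size = src.size;
    return dst;
}

// Deep copy: dst owns a separate buffer holding a copy of the bytes.
inline Packet copyBuffer(const Packet& src) {
    assert(src.data);     // the source must own a buffer
    Packet dst(src.size);
    std::memcpy(dst.data.get(), src.data.get(), src.size);
    dst.size = src.size;
    return dst;
}
```

With the shallow copy, a later write through either packet is visible through the other; with the deep copy it is not. Which behavior the sending path needs depends on whether the source buffer is reused while packets are still queued.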

PPS:

The xop::AVFrame data passed to pushFrame must not include the H264/H265 NALU start code (00 00 00 01 or 00 00 01); strip it before calling pushFrame.
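Since the start code can be either three or four bytes long, a small helper can measure it instead of assuming a fixed length. This is a minimal sketch; the function name `startCodeLength` is my own and is not part of the xop library.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Returns the length of the Annex B start code at the front of buf:
// 4 for 00 00 00 01, 3 for 00 00 01, 0 if buf does not begin with one.
inline std::size_t startCodeLength(const uint8_t* buf, std::size_t len) {
    assert(buf != nullptr || len == 0);
    if (len >= 4 && buf[0] == 0 && buf[1] == 0 && buf[2] == 0 && buf[3] == 1) {
        return 4;
    }
    if (len >= 3 && buf[0] == 0 && buf[1] == 0 && buf[2] == 1) {
        return 3;
    }
    return 0;
}
```

A caller would then copy from `buf + startCodeLength(buf, len)` and reduce the frame size by the same amount.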

1.1.2 Test code

1.1.2.1 Header file

#ifndef __AF_RTSP_H__

#define __AF_RTSP_H__

#ifdef __cplusplus

extern "C" {

#endif

int AF_RtspInit();

int AF_RtspExit();

int AF_RtspPush(int nCh, unsigned char *pBuffer, int nLength, int bKey);

#ifdef __cplusplus

}

#endif

#endif// __AF_RTSP_H__

1.1.2.2 Test code

#include <pthread.h>

#include <cstdio>

#include <cstring>

#include <iostream>

#include <string>

#include "xop/RtspServer.h"

#include "net/NetInterface.h"

#include "afrtsp.h"

typedef struct tag_AF_RTSP_INFO {

xop::MediaSessionId SessionId;// session handle

unsigned int nClients;// number of connected clients

char rtspUrl[64];

}AF_RTSP_INFO;

static pthread_t g_nThreadID;

static pthread_mutex_t g_Mutex;

static xop::RtspServer *g_pRtspServer = NULL;

static AF_RTSP_INFO g_RtspInfo[4] = { 0 };

static xop::EventLoop *g_pEventLoop = NULL;

static void *AF_RtspThread(void *p)

{

int clients = 0;

std::string rtspUrl;

std::string ip = xop::NetInterface::getLocalIPAddress(); // get the IP address of the network interface

g_pEventLoop = new xop::EventLoop();

g_pRtspServer = new xop::RtspServer(g_pEventLoop, ip, 554);// create an RTSP server

// "live" channel

xop::MediaSession *session = xop::MediaSession::createNew("live");

snprintf(g_RtspInfo[0].rtspUrl, sizeof(g_RtspInfo[0].rtspUrl), "rtsp://%s/%s", ip.c_str(), session->getRtspUrlSuffix().c_str());

rtspUrl = "rtsp://" + ip + "/" + session->getRtspUrlSuffix();

// Add audio/video sources to the media session; track0: h265, track1: aac

session->addMediaSource(xop::channel_0, xop::H265Source::createNew());

// session->addMediaSource(xop::channel_1, xop::AACSource::createNew(44100,2));

// Set the notification callback. Client connects and disconnects in this session are reported through it

session->setNotifyCallback([&clients, &rtspUrl](xop::MediaSessionId sessionId, uint32_t numClients) {

pthread_mutex_lock(&g_Mutex);

g_RtspInfo[0].nClients = clients = numClients; // current number of clients in this media session

std::cout << "[" << g_RtspInfo[0].rtspUrl << "]" << " Online: " << g_RtspInfo[0].nClients << std::endl;

pthread_mutex_unlock(&g_Mutex);

});

std::cout << "URL: " << g_RtspInfo[0].rtspUrl << std::endl;

g_RtspInfo[0].SessionId = g_pRtspServer->addMeidaSession(session);

g_pEventLoop->loop();

return NULL;

}

int AF_RtspInit()

{

if (g_pRtspServer != NULL)

return -1;

if (g_pEventLoop != NULL)

return -1;

pthread_mutex_init(&g_Mutex, NULL);

return pthread_create(&g_nThreadID, 0, AF_RtspThread, NULL);

}

int AF_RtspExit()

{

if (g_pRtspServer != NULL) {

pthread_join(g_nThreadID, 0);

// Destroy the server and its sessions here

}

// Destroy the mutex only after the worker thread has exited

pthread_mutex_destroy(&g_Mutex);

return 0;

}

int AF_RtspPush(int nCh, unsigned char *pBuffer, int nLength, int bKey)

{

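if (nCh != 0)// for now only the xop::MediaSession "live" channel is tested

return 0;

if (pBuffer == NULL || nLength <= 4)// need more than the 4-byte start code

return -1;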

pthread_mutex_lock(&g_Mutex);

if (g_RtspInfo[0].nClients > 0) {

xop::AVFrame videoFrame = { 0 };

videoFrame.type = bKey ? xop::VIDEO_FRAME_I : xop::VIDEO_FRAME_P;

videoFrame.size = nLength - 4; // drop the 4-byte start code (00 00 00 01)

videoFrame.timestamp = xop::H265Source::getTimeStamp();

videoFrame.buffer.reset(new uint8_t[videoFrame.size]);

memcpy(videoFrame.buffer.get(), pBuffer + 4, videoFrame.size);

bool bRet = g_pRtspServer->pushFrame(g_RtspInfo[0].SessionId, xop::channel_0, videoFrame);

if (!bRet) {

printf("pushFrame failed\n");

}

}

pthread_mutex_unlock(&g_Mutex);

return 0;

}

The code above is for testing only. If you have questions or spot mistakes, please leave a comment.

1.2 live555

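Source download: live555

1.2.1 Cross-compiling

Add a configuration file: the source directory contains many config.xxx files. My target is the hi3519, so I copied the closest match, config.armlinux, renamed the copy to config.hi3519,

and changed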

CROSS_COMPILE?= arm-elf-

to

CROSS_COMPILE?= arm-hisiv500-linux-

Generate the Makefiles: directories such as liveMedia and mediaServer contain no Makefile, only Makefile.head and Makefile.tail. The Makefiles are generated by running the genMakefiles script.

Run

./genMakefiles hi3519

which generates a Makefile in each directory.

Build:

Run

make

which fails with the following errors:

../liveMedia/libliveMedia.a(Locale.o): In function `Locale::~Locale()':

Locale.cpp:(.text+0x20): undefined reference to `uselocale'

Locale.cpp:(.text+0x28): undefined reference to `freelocale'

../liveMedia/libliveMedia.a(Locale.o): In function `Locale::Locale(char const*, LocaleCategory)':

Locale.cpp:(.text+0x80): undefined reference to `newlocale'

Locale.cpp:(.text+0x88): undefined reference to `uselocale'

collect2: error: ld returned 1 exit status

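The source shows that the locale calls in the Locale constructor and destructor are guarded by the macro LOCALE_NOT_USED, so I edited config.hi3519 again,

changing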

COMPILE_OPTS = $(INCLUDES) -I. -O2 -DSOCKLEN_T=socklen_t -DNO_SSTREAM=1 -D_LARGEFILE_SOURCE=1 -D_FILE_OFFSET_BITS=64

to

COMPILE_OPTS = $(INCLUDES) -I. -O2 -DSOCKLEN_T=socklen_t -DNO_SSTREAM=1 -D_LARGEFILE_SOURCE=1 -D_FILE_OFFSET_BITS=64 -DLOCALE_NOT_USED

Then run again

./genMakefiles hi3519

make clean && make

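The build completes and produces the static libraries libliveMedia.a, libgroupsock.a, libBasicUsageEnvironment.a and libUsageEnvironment.a, the executables live555MediaServer and live555ProxyServer, and the executables under testProgs.

1.2.2 Testing live555MediaServer

Copy live555MediaServer to the development board

and run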

/media # ./live555MediaServer

LIVE555 Media Server

version 0.92 (LIVE555 Streaming Media library version 2018.08.28).

Play streams from this server using the URL

rtsp://0.0.0.0/<filename>

where <filename> is a file present in the current directory.

Each file's type is inferred from its name suffix:

".264" => a H.264 Video Elementary Stream file

".265" => a H.265 Video Elementary Stream file

".aac" => an AAC Audio (ADTS format) file

".ac3" => an AC-3 Audio file

".amr" => an AMR Audio file

".dv" => a DV Video file

".m4e" => a MPEG-4 Video Elementary Stream file

".mkv" => a Matroska audio+video+(optional)subtitles file

".mp3" => a MPEG-1 or 2 Audio file

".mpg" => a MPEG-1 or 2 Program Stream (audio+video) file

".ogg" or ".ogv" or ".opus" => an Ogg audio and/or video file

".ts" => a MPEG Transport Stream file

(a ".tsx" index file - if present - provides server 'trick play' support)

".vob" => a VOB (MPEG-2 video with AC-3 audio) file

".wav" => a WAV Audio file

".webm" => a WebM audio(Vorbis)+video(VP8) file

See http://www.live555.com/mediaServer/ for additional documentation.

(We use port 8000 for optional RTSP-over-HTTP tunneling, or for HTTP live streaming (for indexed Transport Stream files only).)

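The output lists the supported file formats. I tested with an H.264 file: copy test1.264 to the directory on the board that holds live555MediaServer. The board's IP address is 192.168.1.21.

On the PC, open a network stream in VLC with the URL rtsp://192.168.1.21:554/test1.264. The following message is reported: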

Unable to determine our source address: This computer has an invalid IP address: 0.0.0.0

I traced this to the ourIPAddress function in GroupsockHelper.cpp failing to obtain the device's IP address. To get on with testing, I patched it directly,

changing

// Make sure we have a good address:

netAddressBits from = fromAddr.sin_addr.s_addr;

to

fromAddr.sin_addr.s_addr = our_inet_addr("192.168.1.21");

// Make sure we have a good address:

netAddressBits from = fromAddr.sin_addr.s_addr;

This hard-codes the device IP for testing only. After restarting live555MediaServer, VLC connects successfully.
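Instead of a hard-coded address, the device IP could be looked up at run time. The sketch below is not live555 code: it uses the POSIX-style `getifaddrs` call to return the first IPv4 address of an interface that is up and not loopback. The function name `firstNonLoopbackIPv4` is my own, and whether the C library shipped with the arm-hisiv500 toolchain provides `getifaddrs` is an assumption to check before relying on it.

```cpp
#include <arpa/inet.h>
#include <ifaddrs.h>
#include <net/if.h>
#include <netinet/in.h>
#include <string>
#include <sys/socket.h>
#include <sys/types.h>

// Returns the first IPv4 address of an interface that is up and not
// loopback, or an empty string if no such interface is found.
inline std::string firstNonLoopbackIPv4() {
    struct ifaddrs* list = nullptr;
    if (getifaddrs(&list) != 0) {
        return std::string();
    }

    std::string result;
    for (struct ifaddrs* it = list; it != nullptr; it = it->ifa_next) {
        if (it->ifa_addr == nullptr || it->ifa_addr->sa_family != AF_INET) {
            continue;  // skip entries without an IPv4 address
        }
        if (!(it->ifa_flags & IFF_UP) || (it->ifa_flags & IFF_LOOPBACK)) {
            continue;  // skip interfaces that are down or loopback
        }
        const struct sockaddr_in* sin =
            reinterpret_cast<const struct sockaddr_in*>(it->ifa_addr);
        char text[INET_ADDRSTRLEN] = {0};
        if (inet_ntop(AF_INET, &sin->sin_addr, text, sizeof(text)) != nullptr) {
            result = text;
            break;
        }
    }
    freeifaddrs(list);
    return result;
}
```

The returned string could then be passed to `our_inet_addr` in place of the literal "192.168.1.21", with a fallback for the empty-string case.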

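Reference material on live RTSP streaming with Live555:

【视频开发】【Live555】live555实现h264码流RTSP传输 (RTSP transport of an H264 stream with live555)

【视频开发】【Live555】通过live555实现H264 RTSP直播 (live H264 RTSP streaming with live555)

Latency in the live-stream test was fairly high, so I decided to write my own implementation.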

2. My implementation

2.1 Sample code

Header file:

#ifndef __AF_RTSP_H__

#define __AF_RTSP_H__

#ifdef __cplusplus

extern "C" {

#endif

#include "JLRtspAPI.h"

int AF_RtspInit();// initialize the service

int AF_RtspExit();// shut the service down

int AF_RtspPush(int nCh, JL_RtspFrame *pstFrame);// push one frame

int AF_RtspStopChannel(int nCh);// server-initiated close of a stream

#ifdef __cplusplus

}

#endif

#endif// __AF_RTSP_H__

Source:

#include <stdio.h>

#include <string.h>

#include "afrtsp.h"

#define AF_RTSP_PORT 554

static int g_RtspMapInfo[AF_Venc_MAX] = { 0 };

static int g_RtspChannelNum = 0;

static int AF_RtspCh2Id(int nCh)

{

int i = 0;

for (i = 0; i < g_RtspChannelNum; i++) {

if (g_RtspMapInfo[i] == nCh)

return i;

}

return -1;

}

static int AF_RtspMapInit()

{

int nNum = 0;

g_RtspMapInfo[nNum] = AF_Venc_Live;

nNum++;

#if __AF_INFRARED_CAM_ENABLE__

g_RtspMapInfo[nNum] = AF_Venc_Infrared;

nNum++;

#endif

g_RtspMapInfo[nNum] = AF_Venc_AI;

nNum++;

#if __AF_OPT_FLOW_VENC_ENABLE__

g_RtspMapInfo[nNum] = AF_Venc_OpticalFlow;

nNum++;

#endif

return nNum;

}

// Map channels to IDs

// An ID is a resource internal to the RTSP server and counts up from 0

/* e.g. nCh=0--->nId=0

nCh=3--->nId=1

nCh=4--->nId=2

which corresponds to JL_RtspChannelInfo:

nCh=0---> .id=0 .name="/live"

nCh=3---> .id=1 .name="/ch3"

nCh=4---> .id=2 .name="/ch4"

*/

int AF_RtspInit()

{

g_RtspChannelNum = AF_RtspMapInit();

JL_RtspChannelInfo stRtspInfo[g_RtspChannelNum];

int i = 0;

for (i = 0; i < g_RtspChannelNum; i++) {

switch (g_RtspMapInfo[i]) {

case AF_Venc_Live:

sprintf(stRtspInfo[i].name,"/live");

break;

case AF_Venc_Infrared:

sprintf(stRtspInfo[i].name,"/ch3");

break;

case AF_Venc_AI:

sprintf(stRtspInfo[i].name,"/ch4");

break;

case AF_Venc_OpticalFlow:

sprintf(stRtspInfo[i].name,"/ch5");

break;

default :

printf("g_RtspMapInfo Error!\n");

break;

}

stRtspInfo[i].id = i;

}

return JL_RtspStart(g_RtspChannelNum, AF_RTSP_PORT, stRtspInfo);

}

int AF_RtspExit()

{

return JL_RtspStop();

}

int AF_RtspPush(int nCh, JL_RtspFrame *pstFrame)

{

int nID = AF_RtspCh2Id(nCh);

if (nID < 0)

return -1;

// if (nCh == 0)

// JL_RtspFrameDump(pstFrame);

return JL_RtspSend(nID, pstFrame);

}

int AF_RtspStopChannel(int nCh)

{

int nID = AF_RtspCh2Id(nCh);

if (nID >= 0) {

return JL_RtspStopSession(nID);

}

return -1;

}

Calling the push interface on the hi3519v101:

static int AF_VencPushFrame(int nCh, unsigned char *pBuffer, int nLength, VENC_STREAM_S *pstStream)

{

JL_RtspFrame stFrame;

memset(&stFrame, 0, sizeof(stFrame));

stFrame.pBuffer = pBuffer;

stFrame.nLength = nLength;

AF_EncParam stEncParam;

AF_GetEncParam(nCh, &stEncParam);

int enRefType = 0;

switch (stEncParam.enEnc) {

case AF_Enc_H264:

stFrame.nCodec = JL_RtspCodecType_H264;

stFrame.nVINum = 0;

stFrame.nPBNum = 0;

enRefType = pstStream->stH264Info.enRefType;

break;

case AF_Enc_H265:

stFrame.nCodec = JL_RtspCodecType_H265;

stFrame.nVINum = 0;

stFrame.nPBNum = 0;

enRefType = pstStream->stH265Info.enRefType;

break;

case AF_Enc_H264P:

stFrame.nCodec = JL_RtspCodecType_H264P;

stFrame.nVINum = 1;

stFrame.nPBNum = 0;// PB frames are not used yet

enRefType = pstStream->stH264Info.enRefType;

break;

case AF_Enc_H265P:

stFrame.nCodec = JL_RtspCodecType_H265P;

stFrame.nVINum = 1;

stFrame.nPBNum = 0;// PB frames are not used yet

enRefType = pstStream->stH265Info.enRefType;

break;

}

switch (enRefType) {

case BASE_IDRSLICE:

stFrame.nFrame = JL_RtspFrameType_I;

break;

case BASE_PSLICE_REFTOIDR:

stFrame.nFrame = JL_RtspFrameType_VI;

break;

case BASE_PSLICE_REFBYBASE:

case BASE_PSLICE_REFBYENHANCE:

if ((pstStream->pstPack[0].DataType.enH264EType == H264E_NALU_SPS) || (pstStream->pstPack[0].DataType.enH265EType == H265E_NALU_VPS)) {

stFrame.nFrame = JL_RtspFrameType_I;

} else {

stFrame.nFrame = JL_RtspFrameType_P;

}

break;

case ENHANCE_PSLICE_REFBYENHANCE:

case ENHANCE_PSLICE_NOTFORREF:

default:

stFrame.nFrame = JL_RtspFrameType_PB;

break;

}

unsigned int nWidth, nHeight;

AF_Res2WH(stEncParam.enRes, &nWidth, &nHeight);

stFrame.nWidth = nWidth;

stFrame.nHeight = nHeight;

stFrame.nRealFps = stEncParam.nFps;

return AF_RtspPush(nCh, &stFrame);

}
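The function above takes the frame type from the HiSilicon stream structure. When only the raw buffer is available, the NAL unit type can be read from the first header byte that follows the start code. The sketch below is a generic illustration with function names of my own; treating SPS (H.264) or VPS (H.265) as the start of a key frame mirrors the check in the code above and is a heuristic, not a rule of the standards.

```cpp
#include <cstdint>

// H.264: nal_unit_type is the low 5 bits of the first NAL header byte.
inline int h264NalType(uint8_t firstHeaderByte) {
    return firstHeaderByte & 0x1F;
}

// H.265: nal_unit_type is the 6 bits after the forbidden_zero_bit.
inline int h265NalType(uint8_t firstHeaderByte) {
    return (firstHeaderByte >> 1) & 0x3F;
}

// H.264 types used here: 5 = IDR slice, 7 = SPS.
inline bool h264StartsKeyFrame(uint8_t firstHeaderByte) {
    const int type = h264NalType(firstHeaderByte);
    return type == 5 || type == 7;
}

// H.265 types used here: 19 and 20 = IDR slices, 32 = VPS.
inline bool h265StartsKeyFrame(uint8_t firstHeaderByte) {
    const int type = h265NalType(firstHeaderByte);
    return type == 19 || type == 20 || type == 32;
}
```

For example, the byte 0x67 is an H.264 SPS (type 7) and 0x40 is an H.265 VPS (type 32).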

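2.2 Performance testing

In initial tests, with 4 streams pushed at the same time (20 Mbps), latency is about 80 ms. The library hard-codes a 40M buffer internally and supports H264/H264+ and H265/H265+.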
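To put the buffer size in perspective, the sketch below converts a buffer size and a bitrate into seconds of buffered data. It rests on two assumptions of mine: that 20 Mbps is the combined rate of the 4 streams, and that 40M means 40 MiB. Neither is confirmed by the library.

```cpp
#include <cassert>
#include <cstdint>

// Seconds of stream data that a buffer of bufferBytes holds at bitsPerSecond.
inline double bufferSeconds(uint64_t bufferBytes, uint64_t bitsPerSecond) {
    assert(bitsPerSecond > 0);
    return static_cast<double>(bufferBytes) * 8.0 /
           static_cast<double>(bitsPerSecond);
}
```

Under those assumptions, `bufferSeconds(40ULL * 1024 * 1024, 20000000ULL)` is about 16.8 seconds, so the buffer is far larger than the roughly 80 ms of data in flight.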

Click to download RtspServerForHisiv500

2.3 Multicast extension

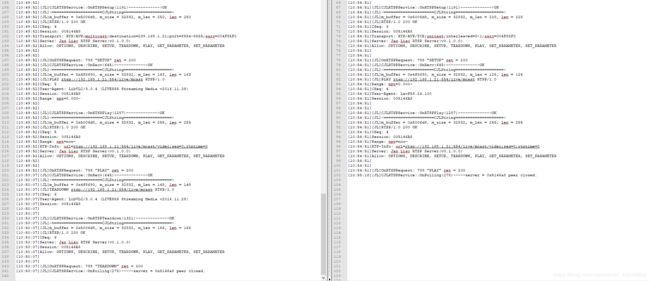

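Multicast support was added later. A comparison of VLC and PotPlayer follows:

The server advertises a multicast address, but PotPlayer still connects in unicast mode over TCP: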

c=IN IP4 239.168.1.21/127

...

Transport: RTP/AVP/TCP;unicast;interleaved=0-1
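Which mode a client asked for can be read from the value of its Transport header: the first token is the transport spec, and parameters such as unicast, multicast and interleaved follow, separated by semicolons. The sketch below is a simplified parser of my own, not the library's code. It expects the header value without the "Transport: " prefix and does not handle surrounding whitespace or multiple comma-separated transports.

```cpp
#include <string>

struct TransportInfo {
    bool overTcp;      // transport spec is RTP/AVP/TCP
    bool multicast;    // "multicast" parameter present
    bool interleaved;  // "interleaved=" parameter present
};

// Splits the header value on ';' and records the properties of interest.
inline TransportInfo parseTransport(const std::string& value) {
    TransportInfo info = {false, false, false};
    std::string::size_type pos = 0;
    bool first = true;
    while (pos <= value.size()) {
        std::string::size_type end = value.find(';', pos);
        if (end == std::string::npos) {
            end = value.size();
        }
        const std::string token = value.substr(pos, end - pos);
        if (first) {
            info.overTcp = (token == "RTP/AVP/TCP");
            first = false;
        } else if (token == "multicast") {
            info.multicast = true;
        } else if (token.compare(0, 12, "interleaved=") == 0) {
            info.interleaved = true;
        }
        pos = end + 1;
    }
    return info;
}
```

For the PotPlayer request shown above, this reports TCP with interleaving and no multicast, which matches the observed unicast behavior.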

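Note: only a static library is provided, for testing. The code is not open source, the protocol data carries my personal signature Jax.Liao, and commercial use is strictly prohibited. If you have questions, leave a comment; corrections are welcome.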