ELK Log Analysis Platform in Practice (Part 2): Collecting Data with Logstash

1. Introduction to Logstash

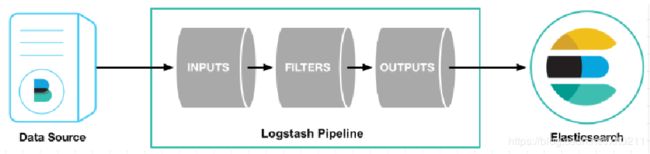

- Logstash is an open-source, server-side data processing pipeline.

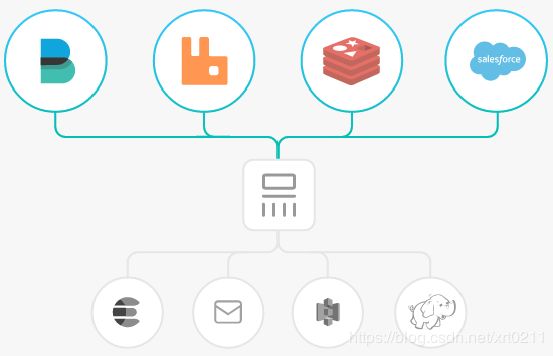

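- Logstash has more than 200 plugins. It can collect data from many sources at the same time, transform it, and send it to your favorite "stash" (in most cases Elasticsearch).

- A Logstash pipeline has two required elements, input and output, and one optional element, the filter.

Input: collect data of all shapes, sizes, and sources

- Logstash supports a variety of inputs that capture events from many common sources at the same time.

- It can easily ingest data from your logs, metrics, web applications, data stores, and various AWS services as a continuous stream.

- As data travels from the source to the stash, Logstash filters parse each event, identify named fields to build structure, and convert them to a common format so that the data can be analyzed more easily and quickly and deliver business value.

- Derive structure from unstructured data with Grok

- Decipher geographic coordinates from IP addresses

- Anonymize PII data and exclude sensitive fields completely

- Simplify overall processing, independent of the data source, format, or schema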

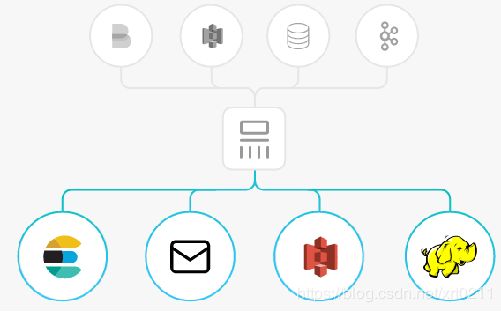

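Output: choose your stash and ship your data

- Elasticsearch is our preferred output and opens up endless possibilities for search and analytics, but it is not the only choice.

- Logstash offers many outputs, so you can route data wherever you want and flexibly unlock many downstream use cases.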
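
The input, filter, and output stages described above can be pictured with a small Python sketch. This is not how Logstash is implemented; `run_pipeline` and `add_length` are hypothetical names used only to show that the input and output are required while the filter list may be empty.

```python
from typing import Callable, Iterable

Event = dict  # in this sketch an event is just a set of named fields


def run_pipeline(
    source: Iterable[str],
    filters: list[Callable[[Event], Event]],
    sink: Callable[[Event], None],
) -> None:
    """Push every line from the input through the optional filters to the output."""
    for line in source:                # input: one event per line
        event: Event = {"message": line}
        for apply_filter in filters:   # filter: optional, the list may be empty
            event = apply_filter(event)
        sink(event)                    # output: required


def add_length(event: Event) -> Event:
    """Hypothetical filter that derives a new field from the message."""
    return {**event, "length": len(event["message"])}


collected: list[Event] = []
run_pipeline(["hello", "nishishui"], [add_length], collected.append)
print(collected)
```

Passing an empty list for `filters` gives the plain input-to-output pipeline used in the first experiments of the next section.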

2. Installing and Configuring Logstash

Lab environment: server3 acts as the Logstash server.

Software download:

https://elasticsearch.cn/download/

Installing Logstash:

[root@server3 ~]# ls

anaconda-ks.cfg jdk-8u181-linux-x64.rpm logstash-7.6.1.rpm

[root@server3 ~]# rpm -ivh logstash-7.6.1.rpm

[root@server3 ~]# rpm -ivh jdk-8u181-linux-x64.rpm

# Logstash runs on the JVM, so it needs a JDK. We install this JDK instead of using the OpenJDK that ships with the system.

Where the commands are installed:

[root@server3 ~]# cd /usr/share/logstash/bin

[root@server3 bin]# ls

benchmark.sh logstash logstash.lib.sh pqrepair

cpdump logstash.bat logstash-plugin ruby

dependencies-report logstash-keystore logstash-plugin.bat setup.bat

ingest-convert.sh logstash-keystore.bat pqcheck system-install

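From standard input to standard output:

[root@server3 bin]# ./logstash -e 'input { stdin { } } output { stdout {} }'

# -e passes the pipeline configuration as a string on the command line

Then type some content into the terminal: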

hello

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"message" => "hello",

"@timestamp" => 2020-06-13T18:36:48.974Z,

"host" => "server3",

"@version" => "1"

}

nishishui

{

"message" => "nishishui",

"@timestamp" => 2020-06-13T18:36:59.278Z,

"host" => "server3",

"@version" => "1"

}

### Whatever we type comes back as an event, so the pipeline works

Press Ctrl+C to exit.

3. The file output plugin

[root@server3 conf.d]# vim /etc/logstash/conf.d/test.conf

input {

stdin {}

}

output {

file {

path => "/tmp/logstash.txt"

codec => line { format => "custom format: %{message}"}

}

}

[root@server3 bin]# ./logstash -f /etc/logstash/conf.d/test.conf

######## -f loads the pipeline from a configuration file; this is the recommended way ######

... successful; now type some input:

xiaoxu

[INFO ] 2020-06-14 02:51:12.857 [[main]>worker0] file - Opening file {:path=>"/tmp/logstash.txt"}

c[INFO ] 2020-06-14 02:51:35.403 [[main]>worker0] file - Closing file /tmp/logstash.txt

ai

[INFO ] 2020-06-14 02:51:45.502 [[main]>worker0] file - Opening file {:path=>"/tmp/logstash.txt"}

[INFO ] 2020-06-14 02:51:55.535 [[main]>worker0] file - Closing file /tmp/logstash.txt

junjun

[INFO ] 2020-06-14 02:52:01.041 [[main]>worker0] file - Opening file {:path=>"/tmp/logstash.txt"}

[INFO ] 2020-06-14 02:52:15.617 [[main]>worker0] file - Closing file /tmp/logstash.txt

###### The log shows that the output goes to :path=>"/tmp/logstash.txt" #####

[root@server3 bin]# cat /tmp/logstash.txt

custom format: xiaoxu

custom format: ai

custom format: junjun

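## The events were saved to this file. In practice, the most common choice is to output to ES.

Typically we send the output to ES, ES analyzes it, and Kibana displays the result.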
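
The `format` string given to the line codec in test.conf contains the field reference `%{message}`. As a rough illustration of how such a reference is filled in, here is a minimal Python sketch. The `render` helper is hypothetical, and leaving unknown fields untouched is an assumption of this sketch.

```python
import re


def render(format_string: str, event: dict) -> str:
    """Replace each %{field} reference with the value of that field in the event."""

    def lookup(match: re.Match) -> str:
        field = match.group(1)
        # Assumption of this sketch: a reference to a missing field is left as-is.
        return str(event.get(field, match.group(0)))

    return re.sub(r"%\{([^}+]+)\}", lookup, format_string)


lines = [
    render("custom format: %{message}", {"message": text})
    for text in ["xiaoxu", "ai", "junjun"]
]
print("\n".join(lines))
```

The three rendered lines correspond to the content of /tmp/logstash.txt shown above.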

4. The elasticsearch output plugin

Output to ES:

[root@server3 conf.d]# pwd

/etc/logstash/conf.d

[root@server3 conf.d]# ls

test.conf

[root@server3 conf.d]# vim es.conf

input {

stdin {}

}

output {

stdout {}

elasticsearch {

hosts => "192.168.43.74:9200" # ES host and port to send events to

index => "logstash-%{+YYYY.MM.dd}" # custom index name

}

}

[root@server3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

我爱你

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"@timestamp" => 2020-06-13T19:09:42.837Z,

"host" => "server3",

"message" => "我爱你",

"@version" => "1"

}

君君

{

"@timestamp" => 2020-06-13T19:09:49.553Z,

"host" => "server3",

"message" => "君君",

"@version" => "1"

}
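
The index name `logstash-%{+YYYY.MM.dd}` in es.conf is filled in from each event's `@timestamp`, which is in UTC. The Python sketch below mimics that naming under this assumption; `index_name` is a hypothetical helper, and the second timestamp is an invented UTC+8 local time chosen to show that the index date can differ from the local calendar date.

```python
from datetime import datetime, timedelta, timezone


def index_name(prefix: str, timestamp: datetime) -> str:
    """Build a daily index name from the UTC date of the event timestamp."""
    utc = timestamp.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


# @timestamp of the first event shown above
event_time = datetime(2020, 6, 13, 19, 9, 42, 837000, tzinfo=timezone.utc)
print(index_name("logstash", event_time))

# The same instant written as a UTC+8 wall-clock time (already 14 June locally)
local_time = datetime(2020, 6, 14, 3, 9, 42, tzinfo=timezone(timedelta(hours=8)))
print(index_name("logstash", local_time))
```

Both calls give `logstash-2020.06.13`, so events typed shortly after local midnight in a UTC+8 zone still land in the previous day's index.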

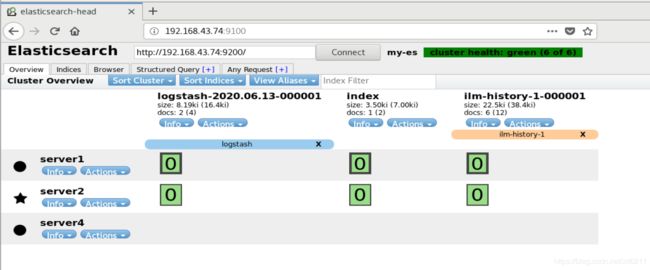

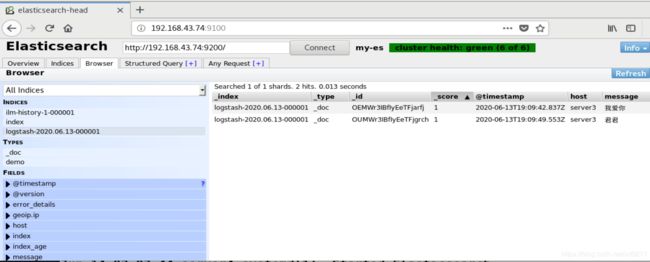

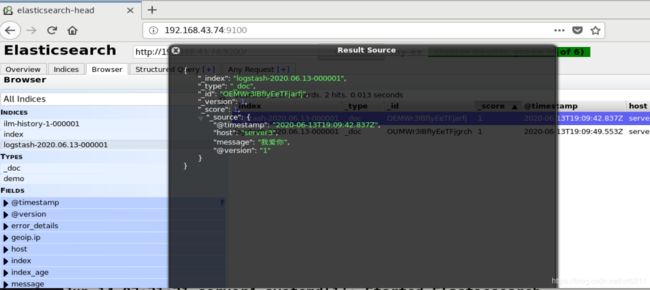

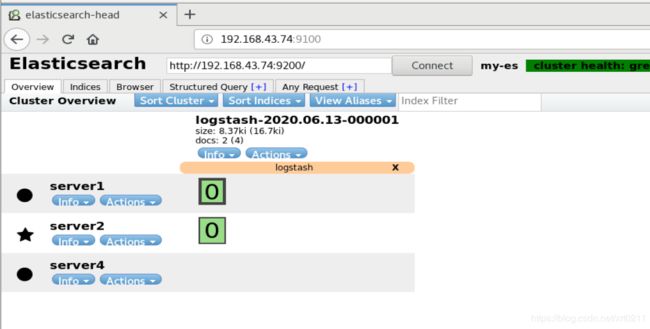

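Then check the result in the web UI.

Typing every event by hand is clearly inconvenient, so instead we can send the contents of a file to ES.

The file input plugin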

[root@server3 conf.d]# vim es.conf

input {

file {

path => "/etc/passwd" # read the contents of this file

start_position => "beginning" # "beginning" reads from the start of the file; "end" (the default) reads only newly appended content

}

}

output {

stdout {}

elasticsearch {

hosts => "192.168.43.74:9200"

index => "logstash-%{+YYYY.MM.dd}"

}

}

[root@server3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf # run

# Then check the result in the web UI:

We delete this logstash index.

Now that the logstash index is gone, we send the data again:

[root@server3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf

[INFO ] 2020-06-14 04:29:07.803 [[main]<file] observingtail - START, creating Discoverer, Watch with file and sincedb collections

[INFO ] 2020-06-14 04:29:08.571 [Api Webserver] agent - Successfully started Logstash API endpoint {:port=>9600}

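Logstash now just waits here and does not read the data again, which avoids duplicate data.

How does it do this?

In other words, how does Logstash tell apart devices, file names, and different versions of a file?

Logstash saves its reading progress in a sincedb file. The next time it starts, it reads this sincedb file and resumes from the last recorded position.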

[root@server3 file]# pwd

/usr/share/logstash/data/plugins/inputs/file

[root@server3 file]# l.

. .. .sincedb_c5068b7c2b1707f8939b283a2758a691

[root@server3 file]# cat .sincedb_c5068b7c2b1707f8939b283a2758a691

17657322 0 64768 1062 1592080148.519558 /etc/passwd

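Each record in the sincedb file has six fields:

- the inode number

- the major device number of the file system

- the minor device number of the file system

- the current byte offset within the file (the progress)

- the last activity timestamp (a floating-point number)

- the last known path that matched this record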
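
To make the six fields concrete, the following Python sketch splits the record shown above into named values. `SincedbRecord` and `parse_sincedb_line` are hypothetical names for this illustration; the field order is the one listed above.

```python
from typing import NamedTuple


class SincedbRecord(NamedTuple):
    inode: int
    major_device: int
    minor_device: int
    offset: int          # bytes of the file already read
    last_active: float   # Unix timestamp of the last activity
    path: str


def parse_sincedb_line(line: str) -> SincedbRecord:
    """Split one sincedb record into its six space-separated fields."""
    # Split at most 5 times so that a path containing spaces stays in one piece.
    inode, major, minor, offset, last_active, path = line.strip().split(" ", 5)
    return SincedbRecord(
        int(inode), int(major), int(minor), int(offset), float(last_active), path
    )


record = parse_sincedb_line("17657322 0 64768 1062 1592080148.519558 /etc/passwd")
print(record.offset, record.path)
```

For this record the offset is 1062, meaning the first 1062 bytes of /etc/passwd have already been read.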

To read the file again from the start, simply delete this sincedb file.

[root@server3 file]# rm -fr .sincedb_*

[root@server3 file]# ls .

./ ../

[root@server3 file]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf # leave it running and open another terminal to check the offset; Logstash keeps watching the file

[root@server3 file]# ls -a

. .. .sincedb_c5068b7c2b1707f8939b283a2758a691

[root@server3 file]# cat .sincedb_c5068b7c2b1707f8939b283a2758a691

17657322 0 64768 1062 1592081250.194284 /etc/passwd

#### The byte offset is now 1062

Now we add some new data:

[root@server3 file]# useradd xiaoxu

[root@server3 file]# useradd junjun

[root@server3 file]# cat .sincedb_c5068b7c2b1707f8939b283a2758a691

17905662 0 64768 1148 1592082652.3512042 /etc/passwd

The byte offset has increased.
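
The idea of resuming from a stored byte offset can be sketched in a few lines of Python. This is only an illustration of the technique, not the file input's actual code; `read_new_lines` is a hypothetical helper and the demo uses a temporary file rather than /etc/passwd.

```python
import os
import tempfile


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return the complete lines appended after `offset` and the new offset."""
    with open(path, "rb") as handle:
        handle.seek(offset)
        data = handle.read()
    # Consume only complete lines; a partial last line is left for the next call.
    complete = data[: data.rfind(b"\n") + 1]
    return complete.decode("utf-8").splitlines(), offset + len(complete)


with tempfile.TemporaryDirectory() as workdir:
    path = os.path.join(workdir, "passwd")
    with open(path, "wb") as handle:
        handle.write(b"root:x:0:0\n")
    first, offset = read_new_lines(path, 0)          # first pass reads everything

    with open(path, "ab") as handle:
        handle.write(b"xiaoxu:x:1001:1001\n")        # simulate a newly added user
    second, new_offset = read_new_lines(path, offset)  # second pass reads only the new line

print(first, offset)
print(second, new_offset)
```

The second call returns only the appended line, and the offset grows by the number of bytes read, which is the same effect as the jump from 1062 to 1148 above.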

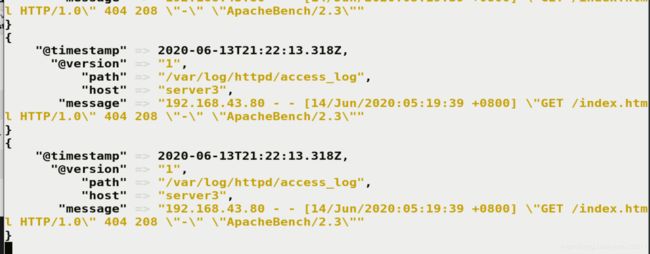

We can also change the file path to the path of one of our logs, for example /var/log/httpd/access_log.

[root@server3 ~]# vim /etc/logstash/conf.d/es.conf

input {

file {

path => "/var/log/httpd/access_log"

start_position => "beginning"

}

}

output {

stdout {}

elasticsearch {

hosts => "192.168.43.74:9200"

index => "apache-%{+YYYY.MM.dd}"

}

}

[root@server0 ~]# ab -c 1 -n 100 http://192.168.43.73/index.html # load test

[root@server3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/es.conf # import the log

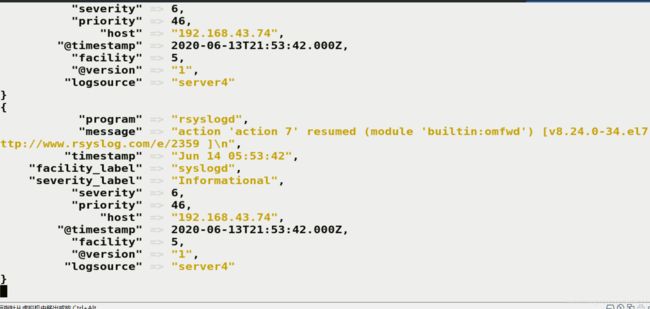

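This approach is tedious, though, because Logstash has to be installed on every machine. Instead, we can have Logstash act as a log server that receives remote logs directly, which gives us a central log collection server.

For example, suppose we want to gather the logs of these nodes on server3.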

[root@server3 conf.d]# vim syslog.conf

input {

syslog{

port => 514 # this is the default port, so the setting can be omitted

}

}

output {

stdout {}

elasticsearch {

hosts => "192.168.43.74:9200"

index => "syslog-%{+YYYY.MM.dd}"

}

}

[root@server3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/syslog.conf

[root@server4 _site]# vim /etc/rsyslog.conf

#*.* @@remote-host:514

#### end of the forwarding rule ###

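*.* @@192.168.43.73:514 # add this line at the end; *.* means all logs

[root@server4 _site]# systemctl restart rsyslog.service # then restart the service

server3 now collects the logs from server4. The other two nodes are configured in the same way.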

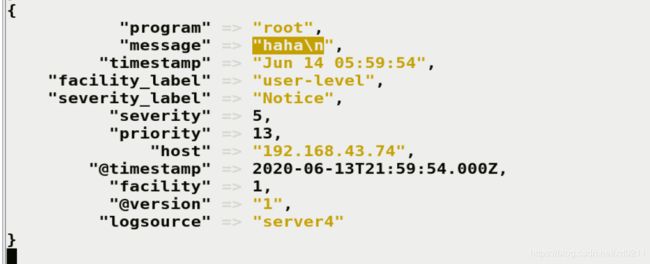

[root@server4 _site]# logger haha # generate a log entry
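
Every syslog message forwarded this way starts with a priority value that encodes a facility and a severity as `facility * 8 + severity`. The Python sketch below decodes that value. `decode_priority` is a hypothetical helper, only the first eight facilities are named, and the value 13 assumes that `logger` was run with its default priority of user.notice.

```python
FACILITIES = ["kern", "user", "mail", "daemon", "auth", "syslog", "lpr", "news"]
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]


def decode_priority(pri: int) -> tuple[str, str]:
    """Split a syslog priority value into (facility, severity) names."""
    facility, severity = divmod(pri, 8)
    # Facilities beyond the short table above are reported by number.
    name = FACILITIES[facility] if facility < len(FACILITIES) else f"facility{facility}"
    return name, SEVERITIES[severity]


# `logger haha` with the default priority user.notice is sent as <13>
print(decode_priority(13))
```

A filter such as `*.*` in rsyslog.conf matches every facility and every severity, which is why all logs of the node are forwarded.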

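We can also use the official plugins to collect logs without deploying Logstash.

The multiline codec plugin

With standard input, each line is submitted as soon as we press Enter. To enter several lines as a single event, we can use the multiline plugin.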

[root@server3 conf.d]# vim multline.conf

input {

stdin {

codec => multiline {

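pattern => "^EOF" # a line starting with EOF ends the event; the pattern is configurable

negate => true # lines that do NOT match the pattern are the ones merged

what => previous # merge into the previous line; "next" merges into the following line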

}

}

}

output {

stdout {}

}

# Run Logstash with this configuration file, then type:

wo

shi

shui

ni

da

ye

EOF # until a line starts with EOF, pressing Enter just continues to the next line of input

/usr/share/logstash/vendor/bundle/jruby/2.5.0/gems/awesome_print-1.7.0/lib/awesome_print/formatters/base_formatter.rb:31: warning: constant ::Fixnum is deprecated

{

"@timestamp" => 2020-06-13T22:06:20.260Z,

"message" => "wo\nwo\nshi\nshui\nni\nda\nye",

"@version" => "1",

"tags" => [

[0] "multiline"

],

"host" => "server3"

}

On its own this does not seem very useful.
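
The merging rule used in multline.conf (`negate => true`, `what => previous`) can be sketched in Python as follows. This is a simplified model of the behaviour, not the codec's code: `merge_multiline` is a hypothetical helper that handles only the `previous` mode.

```python
import re


def merge_multiline(lines, pattern, negate=True):
    """Group lines into events the way `what => previous` does.

    Returns (finished_events, pending_lines).
    """
    regex = re.compile(pattern)
    events, buffer = [], []
    for line in lines:
        matched = bool(regex.search(line))
        # With negate=True, a line that does NOT match belongs to the previous line.
        belongs_to_previous = matched != negate
        if not belongs_to_previous and buffer:
            events.append("\n".join(buffer))  # flush the event collected so far
            buffer = []
        buffer.append(line)
    return events, buffer


events, pending = merge_multiline(
    ["wo", "shi", "shui", "ni", "da", "ye", "EOF"], r"^EOF"
)
print(events)
print(pending)
```

In this model the typed lines are joined with `\n` into one event as soon as the `EOF` line arrives, and the `EOF` line itself becomes the start of the next pending event.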

[root@server4 _site]# ls /var/log/elasticsearch/

gc.log gc.log.08 gc.log.17 gc.log.26 my-es_audit.json

gc.log.00 gc.log.09 gc.log.18 gc.log.27 my-es_deprecation.json

gc.log.01 gc.log.10 gc.log.19 gc.log.28 my-es_deprecation.log

gc.log.02 gc.log.11 gc.log.20 gc.log.29 my-es_index_indexing_slowlog.json

gc.log.03 gc.log.12 gc.log.21 gc.log.30 my-es_index_indexing_slowlog.log

gc.log.04 gc.log.13 gc.log.22 gc.log.31 my-es_index_search_slowlog.json

gc.log.05 gc.log.14 gc.log.23 hs_err_pid20005.log my-es_index_search_slowlog.log

gc.log.06 gc.log.15 gc.log.24 my-es-2020-06-13-1.json.gz my-es.log

gc.log.07 gc.log.16 gc.log.25 my-es-2020-06-13-1.log.gz my-es_server.json

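Looking at the logs, we find that some of them have been compressed.

ES compresses its logs automatically once they exceed a certain size.

The grok filter plugin

With the grok plugin we can split a message into fields. For example, to extract an IP address we use the corresponding pattern. In production, grok is not necessarily the best choice: we can also output the data to Redis, process it with Python, and then store it in ES.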

[root@server3 file]# vim grok.conf

input {

stdin {}

}

filter {

grok {

match => { "message" => "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }

}

}

output {

stdout {}

}

[root@server3 conf.d]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/grok.conf
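
To see what the match expression in grok.conf extracts, here is a rough Python equivalent built from named groups. The regular expressions are simplified stand-ins for the grok patterns `IP`, `WORD`, `URIPATHPARAM`, and `NUMBER`, not their real definitions, and the sample line is an invented input in the expected layout.

```python
import re

# Simplified stand-ins for %{IP}, %{WORD}, %{URIPATHPARAM} and %{NUMBER}
GROK_LIKE = re.compile(
    r"(?P<client>\d{1,3}(?:\.\d{1,3}){3}) "
    r"(?P<method>\w+) "
    r"(?P<request>\S+) "
    r"(?P<bytes>\d+(?:\.\d+)?) "
    r"(?P<duration>\d+(?:\.\d+)?)"
)


def parse(line: str):
    """Return the named fields of a matching line, or None if it does not match."""
    match = GROK_LIKE.search(line)
    return match.groupdict() if match else None


fields = parse("55.3.244.1 GET /index.html 15824 0.043")
print(fields)
```

Typing a line of this shape into the running pipeline should add `client`, `method`, `request`, `bytes`, and `duration` fields to the event. A line that does not fit the pattern yields `None` in this sketch, whereas grok marks such events with a `_grokparsefailure` tag.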