Spring Boot + Kafka integration demo, with a look at the source code

Hi everyone, I'm 烤鸭.

Today I'll show how to integrate Kafka into a Spring Boot application.

1. Environment:

windows + kafka_2.11-2.3.0 + zookeeper-3.5.6 + springboot 2.3.0

2. Download and install ZooKeeper and Kafka

zookeeper:

https://mirror.bit.edu.cn/apache/zookeeper/zookeeper-3.5.8/apache-zookeeper-3.5.8-bin.tar.gz

Copy zoo_sample.cfg, rename the copy to zoo.cfg, and set the data and log directories:

dataDir=D:\\xxx\\env\\apache-zookeeper-3.5.6-bin\\data

dataLogDir=D:\\xxx\\env\\apache-zookeeper-3.5.6-bin\\log

Start ZooKeeper with zkServer.cmd.

kafka:

https://kafka.apache.org/downloads

Pick a release under Binary downloads, for example:

https://archive.apache.org/dist/kafka/2.3.0/kafka_2.12-2.3.0.tgz

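Edit config/server.properties. ZooKeeper runs on its default port 2181, so zookeeper.connect can stay as it is; only the log directory needs to be set: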

log.dirs=D:\\xxx\\env\\kafka\\logs

Start Kafka:

D:\xxx\env\kafka\bin\windows\kafka-server-start.bat D:\xxx\env\kafka\config\server.properties

3. Spring Boot integration

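pom.xml

<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
        <exclusions>
            <exclusion>
                <groupId>org.junit.vintage</groupId>
                <artifactId>junit-vintage-engine</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>org.springframework</groupId>
        <artifactId>spring-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
        <version>2.3.0.RELEASE</version>
        <scope>compile</scope>
    </dependency>
</dependencies>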

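application.yml

spring:
  kafka:
    # Address of the Kafka broker; for a cluster, list several addresses separated by commas
    bootstrap-servers: 127.0.0.1:9092
    # Producer
    producer:
      # Number of retries when a write fails. When the leader fails, a replica takes over
      # as leader, and writes may fail while that happens. With retries set to 0 the
      # producer never resends, so it cannot produce duplicates. With retries > 0 the
      # message is resent once the replica has become leader, so it is not lost.
      retries: 0
      # Upper bound, in bytes, on one batch of records for a partition (batch.size);
      # the producer accumulates records and sends them together
      batch-size: 16384
      # Total memory, in bytes, the producer may use to buffer records waiting to be
      # sent (buffer.memory); when it is exhausted, send() blocks
      buffer-memory: 33554432
      # Serializers for the message key and the message value
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      properties:
        linger.ms: 1
    # Consumer
    consumer:
      enable-auto-commit: false
      auto-commit-interval: 100ms
      key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      value-deserializer: org.apache.kafka.common.serialization.StringDeserializer
      properties:
        session.timeout.ms: 15000
      group-id: group
server:
  port: 8081

KafkaDemoController.java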

package com.mys.mys.demo.kafka.web;

import com.mys.mys.demo.kafka.service.KafkaSendService;
import org.apache.kafka.clients.admin.AdminClient;
import org.apache.kafka.clients.admin.AdminClientConfig;
import org.apache.kafka.clients.admin.NewTopic;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaAdmin;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;

@RestController
public class KafkaDemoController {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    @Autowired
    KafkaSendService kafkaSendService;

    @GetMapping("/message/send")
    public boolean send(@RequestParam String message) {
        // Topics are created automatically by default (consumer side: allow.auto.create.topics = true)
        //createTopic();
        kafkaTemplate.send("testTopic-xxx15", message);
        return true;
    }

    // Synchronous example
    @GetMapping("/message/sendSync")
    public boolean sendSync(@RequestParam String message) {
        kafkaSendService.sendSync("synctopic", message);
        return true;
    }

    // Asynchronous example
    @GetMapping("/message/sendAnsyc")
    public boolean sendAnsys(@RequestParam String message) {
        kafkaSendService.sendAnsyc("ansyctopic", message);
        return true;
    }

    /**
     * Creates the topic explicitly (written 2020/5/23 19:03).
     */
    private void createTopic() {
        Map<String, Object> configs = new HashMap<>();
        configs.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG, "127.0.0.1:9092");
        KafkaAdmin admin = new KafkaAdmin(configs);
        // 1 partition, replication factor 1
        NewTopic newTopic = new NewTopic("testTopic-xxx15", 1, (short) 1);
        // try-with-resources closes the client once the request has been handled
        try (AdminClient adminClient = AdminClient.create(admin.getConfigurationProperties())) {
            adminClient.createTopics(Arrays.asList(newTopic));
        }
    }
}

KafkaSendService.java

package com.mys.mys.demo.kafka.service;

import com.mys.mys.demo.kafka.handler.KafkaSendResultHandler;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.stereotype.Service;
import org.springframework.util.concurrent.ListenableFuture;
import org.springframework.util.concurrent.ListenableFutureCallback;

import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

@Service
public class KafkaSendService {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    @Autowired
    private KafkaSendResultHandler producerListener;

    /**
     * Asynchronous example
     */
    public void sendAnsyc(final String topic, final String message) {
        // Shared listener that handles the result of every send
        kafkaTemplate.setProducerListener(producerListener);
        ListenableFuture<SendResult<String, String>> future = kafkaTemplate.send(topic, message);
        // Business-specific handling goes into a callback of its own
        future.addCallback(new ListenableFutureCallback<SendResult<String, String>>() {
            @Override
            public void onSuccess(SendResult<String, String> result) {
                System.out.println("Message sent: " + result);
            }

            @Override
            public void onFailure(Throwable ex) {
                System.out.println("Message send failed: " + ex.getMessage());
            }
        });
    }

    /**
     * Synchronous example
     */
    public void sendSync(final String topic, final String message) {
        ProducerRecord<String, String> producerRecord = new ProducerRecord<>(topic, message);
        try {
            // Block for up to 10 seconds until the broker acknowledges the record
            kafkaTemplate.send(producerRecord).get(10, TimeUnit.SECONDS);
            System.out.println("Sent successfully");
        } catch (ExecutionException e) {
            System.out.println("Message send failed: " + e.getMessage());
        } catch (TimeoutException | InterruptedException e) {
            System.out.println("Message send failed: " + e.getMessage());
        }
    }
}

CustomerListener.java

package com.mys.mys.demo.kafka.consumer;

import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Component
public class CustomerListener {

    @KafkaListener(topics = "testTopic")
    public void onMessage(String message) {
        System.out.println("consumed=" + message);
    }

    @KafkaListener(topics = "testTopic-xxx14")
    public void onMessage1(String message) {
        System.out.println("consumed=" + message);
    }

    @KafkaListener(topics = "testTopic-xxx15")
    public void onMessage15(String message) {
        System.out.println("consumed=" + message);
    }
}

KafkaSendResultHandler.java (receives the results of asynchronous sends)

package com.mys.mys.demo.kafka.handler;

import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.kafka.support.ProducerListener;
import org.springframework.stereotype.Component;

@Component
public class KafkaSendResultHandler implements ProducerListener<String, String> {

    private static final Logger log = LoggerFactory.getLogger(KafkaSendResultHandler.class);

    @Override
    public void onSuccess(ProducerRecord<String, String> producerRecord, RecordMetadata recordMetadata) {
        log.info("Message send success : " + producerRecord.toString());
    }

    @Override
    public void onError(ProducerRecord<String, String> producerRecord, Exception exception) {
        // Log at error level and keep the exception so the cause is visible
        log.error("Message send error : " + producerRecord.toString(), exception);
    }
}

4. Results and a look at some of the source code

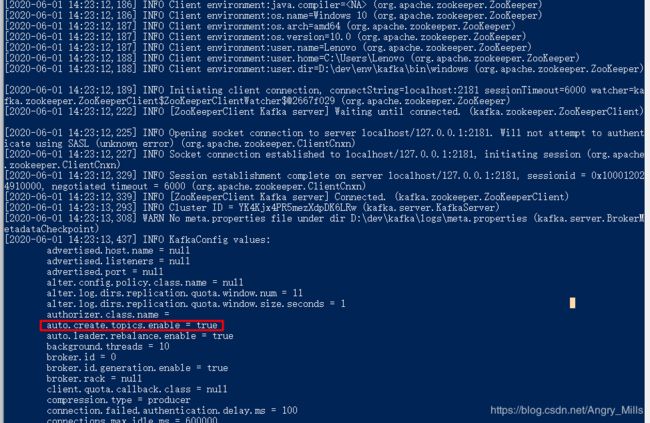

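The startup log shows which topics and partitions the consumers were assigned. If a topic does not exist in Kafka yet, it is created automatically when send is called. This is controlled by the following setting:

auto.create.topics.enable, which defaults to true.

Request URL: http://localhost:8081/message/send?message=1234

The consumer prints the received message to the console.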

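Take a look at the ProducerRecord class. The methods are omitted here; these are its fields:

public class ProducerRecord<K, V> {

    // Topic name
    private final String topic;

    // Partition number. If not specified, the partition is chosen from the hash of the key.
    // If there is no key either, records are spread across the partitions
    // (round-robin before Kafka 2.4, sticky batching since).
    private final Integer partition;

    // Record headers, for information other than the key and value;
    // they become read-only once the record has been sent
    private final Headers headers;

    // Key and value
    private final K key;
    private final V value;

    // Timestamp; if not set, the producer stamps the record with its current time
    private final Long timestamp;
}

Next, the Producer interface, in particular its send methods. Kafka lets the caller receive the result of a send either synchronously or asynchronously. Both rely on a Future; in the asynchronous case a callback is invoked when the send completes. Interceptors are supported as well.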

/**
 * The interface for the {@link KafkaProducer}
 * @see KafkaProducer
 * @see MockProducer
 */
public interface Producer<K, V> extends Closeable {

    /**
     * See {@link KafkaProducer#send(ProducerRecord)}
     */
    Future<RecordMetadata> send(ProducerRecord<K, V> record);

    /**
     * See {@link KafkaProducer#send(ProducerRecord, Callback)}
     */
    Future<RecordMetadata> send(ProducerRecord<K, V> record, Callback callback);
}
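The two styles can be tried out without a broker. The snippet below is a minimal sketch using only the JDK: FutureStyleDemo and its methods are hypothetical names, and CompletableFuture stands in for the Future that the real producer returns. It only illustrates the pattern (block on the Future for a synchronous send, attach a callback for an asynchronous one) and is not how KafkaProducer is implemented.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.BiConsumer;

// Hypothetical stand-in for a producer; not a Kafka or Spring class.
class FutureStyleDemo {

    // Pretends to send a message: the "result" is produced on another thread.
    // If a callback is given, it runs with (result, error) once the send finishes.
    static CompletableFuture<String> send(String topic, String message,
                                          BiConsumer<String, Throwable> callback) {
        CompletableFuture<String> future =
                CompletableFuture.supplyAsync(() -> topic + ":" + message);
        return callback == null ? future : future.whenComplete(callback);
    }

    // Synchronous style: block on the Future, with a timeout, until the result arrives.
    static String sendSync(String topic, String message) {
        try {
            return send(topic, message, null).get(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        } catch (ExecutionException | TimeoutException e) {
            throw new IllegalStateException("send failed", e);
        }
    }

    // Asynchronous style: return at once and record the outcome in the callback.
    static CompletableFuture<String> sendAsync(String topic, String message, StringBuilder sink) {
        return send(topic, message, (result, error) ->
                sink.append(error == null ? "ok " + result : "failed " + error.getMessage()));
    }

    public static void main(String[] args) {
        System.out.println(sendSync("synctopic", "hello"));
        StringBuilder sink = new StringBuilder();
        // join() is used here only so the demo can print the callback's outcome
        sendAsync("ansyctopic", "hi", sink).join();
        System.out.println(sink);
    }
}
```

In both cases the same future is created; the only difference is whether the caller waits on it or hands over a callback, which mirrors the sendSync and sendAnsyc methods of KafkaSendService.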

For a more detailed walkthrough, this article explains it well:

https://www.cnblogs.com/dingwpmz/p/12153036.html

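A quick summary:

The Producer's send method does not send data straight to the broker. It first checks whether the message exceeds the size limit and whether transactions are enabled, then appends the message to a buffer. If the current buffer is full, or a new buffer had to be created, it wakes up the Sender (the message-sending thread), which sends the buffered messages to the broker. The buffers are organized as queues: each topic-partition pair has its own double-ended queue (an ArrayDeque) whose elements are ProducerBatch objects, each representing one batch. In other words, Kafka sends messages in batches.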
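The buffering described in the summary can be modelled in a few lines. The following is a toy sketch under simplifying assumptions, not Kafka's actual accumulator: ToyAccumulator, append and drain are hypothetical names, a batch is a plain list of strings, and batch size is counted in messages, whereas Kafka counts bytes.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model of the producer-side buffer; class and method names are hypothetical.
class ToyAccumulator {
    private final int batchSize; // max messages per batch (Kafka counts bytes instead)
    // One double-ended queue of batches per topic-partition
    private final Map<String, Deque<List<String>>> queues = new HashMap<>();

    ToyAccumulator(int batchSize) {
        this.batchSize = batchSize;
    }

    // Appends a message to the newest batch of its topic-partition queue.
    // Returns true when the sender thread should be woken up: the batch
    // is now full, or a new batch had to be created.
    boolean append(String topic, int partition, String message) {
        Deque<List<String>> queue =
                queues.computeIfAbsent(topic + "-" + partition, k -> new ArrayDeque<>());
        List<String> last = queue.peekLast();
        boolean newBatch = false;
        if (last == null || last.size() >= batchSize) {
            last = new ArrayList<>();
            queue.addLast(last);
            newBatch = true;
        }
        last.add(message);
        return newBatch || last.size() >= batchSize;
    }

    // What the sender thread would do: take the oldest batch off the queue.
    // Returns an empty list when nothing is buffered for that topic-partition.
    List<String> drain(String topic, int partition) {
        Deque<List<String>> queue = queues.get(topic + "-" + partition);
        return queue == null || queue.isEmpty() ? new ArrayList<>() : queue.pollFirst();
    }

    public static void main(String[] args) {
        ToyAccumulator acc = new ToyAccumulator(3);
        for (String m : new String[] {"a", "b", "c", "d"}) {
            System.out.println(m + " -> wake sender: " + acc.append("testTopic", 0, m));
        }
        System.out.println(acc.drain("testTopic", 0));
    }
}
```

New batches go to the tail of the queue and the sender takes from the head, so the oldest batch for a partition is always sent first.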