Deploying Ceph Octopus on CentOS 7 with cephadm

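Guide:

1. Deploying a Ceph cluster from scratch

2. Quick start with Ceph block devices and CephFS

3. Quick start with Ceph object storage

4. Ceph storage cluster and configuration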

Ceph deployment (manual)

| hostname | host ip |
|---|---|
| ceph-admin | 10.10.128.174 |
| ceph-node1 | 10.10.128.175 |
| ceph-node2 | 10.10.128.176 |

Install Docker on all 3 nodes

Configure the Docker repo

[root@ceph-admin ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

If the yum-config-manager command is missing, install the yum-utils package first. Then install Docker:

[root@ceph-admin ~]# yum install docker-ce -y

Start Docker and enable it at boot

[root@ceph-admin ~]# systemctl start docker ; systemctl enable docker

Get the installer on all 3 nodes

Download the cephadm script

[root@ceph-admin ~]# curl --silent --remote-name --location https://github.com/ceph/ceph/raw/octopus/src/cephadm/cephadm

Make it executable

[root@ceph-admin ~]# chmod +x cephadm

Configure the Ceph repository for the release, selected by name

[root@ceph-admin ~]# ./cephadm add-repo --release octopus

Run the cephadm installer on all 3 nodes

Run the install script

[root@ceph-admin ~]# ./cephadm install

Verify that cephadm is now in PATH

[root@ceph-admin ~]# which cephadm

/usr/sbin/cephadm
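The Docker and cephadm steps above have to be repeated on each of the three nodes. The following is a minimal sketch of a helper that runs one command on every node over SSH. It assumes root SSH access from ceph-admin to all three hosts already works, which the original steps do not set up; the names run_on_all, NODES and DRY_RUN are hypothetical.

```shell
#!/usr/bin/env bash
# Hosts taken from the table at the top of this article.
NODES="ceph-admin ceph-node1 ceph-node2"

# run_on_all CMD...: run the same command on every node over SSH.
# With DRY_RUN=1 the ssh command lines are only printed, not executed.
# Assumption: root can already log in to every node without a prompt.
run_on_all() {
    local node
    for node in $NODES; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh root@${node} $*"
        else
            # -n keeps ssh from consuming the caller's stdin
            ssh -n "root@${node}" "$@" || return 1
        fi
    done
}
```

For example, DRY_RUN=1 run_on_all yum install -y docker-ce prints the three ssh commands so they can be checked before running them for real.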

Deploy the first mon of the cluster

[root@ceph-admin ~]# mkdir -p /etc/ceph

[root@ceph-admin ~]# cephadm bootstrap --mon-ip 10.10.128.174

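These two commands do the following for us:

- Create the mon
- Create an SSH key and add it to /root/.ssh/authorized_keys
- Write the minimal configuration needed for cluster communication to /etc/ceph/ceph.conf
- Write a copy of the client.admin administrative secret key to /etc/ceph/ceph.client.admin.keyring
- Write a copy of the public key to /etc/ceph/ceph.pub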
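A quick way to confirm that bootstrap wrote the files listed above is to check for them. This is only a sketch; the function name check_bootstrap_files and its optional root-prefix argument are hypothetical and exist so the check can be tried against a scratch directory.

```shell
#!/usr/bin/env bash
# check_bootstrap_files [ROOT]: confirm that the files bootstrap is expected
# to write exist and are non-empty. ROOT defaults to "" (the real filesystem);
# a prefix is only useful for trying the function on a scratch directory.
check_bootstrap_files() {
    local root="${1:-}" missing=0 f
    for f in /etc/ceph/ceph.conf \
             /etc/ceph/ceph.client.admin.keyring \
             /etc/ceph/ceph.pub; do
        if [ -s "${root}${f}" ]; then
            echo "ok      ${f}"
        else
            echo "missing ${f}"
            missing=1
        fi
    done
    # 0 when all three files are present, 1 otherwise
    return "$missing"
}
```

Calling check_bootstrap_files with no argument on ceph-admin checks the real /etc/ceph directory.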

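The cephadm shell command starts a bash shell in a container that has all the Ceph packages installed. By default, if configuration and keyring files are found in /etc/ceph on the host, they are passed into the container so that the shell works out of the box. When run on a MON host, cephadm shell uses the MON container's configuration instead of the default one. If --mount is given with a host path, that file or directory appears under /mnt inside the container.

For convenience, you can define an alias for the cephadm shell invocation: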

[root@ceph-admin ~]# alias ceph='cephadm shell -- ceph'

To make the alias permanent, add it to /etc/bashrc

alias ceph='cephadm shell -- ceph'
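If you prefer not to edit /etc/bashrc by hand, the line can be appended from the shell. The sketch below adds it only when it is not already there, so running it twice does not duplicate the alias. The function name persist_ceph_alias is hypothetical.

```shell
#!/usr/bin/env bash
# persist_ceph_alias [FILE]: append the alias to FILE (default /etc/bashrc)
# unless an identical line is already present.
persist_ceph_alias() {
    local file="${1:-/etc/bashrc}"
    local line="alias ceph='cephadm shell -- ceph'"
    # -x matches whole lines only, -F treats the pattern as a fixed string
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

The alias takes effect in new login shells; in the current shell, source the file again.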

Add new nodes to the cluster

To add a new node to the cluster, first push the cluster's SSH public key into the authorized_keys file on the new node

[root@ceph-admin ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-node1

[root@ceph-admin ~]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@ceph-node2

Tell Ceph that the new nodes are part of the cluster

[root@ceph-admin ~]# ceph orch host add ceph-node1

[root@ceph-admin ~]# ceph orch host add ceph-node2
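With more than a couple of nodes, the two steps (push the key, then register the host) are easier to run in a loop. A minimal sketch follows; add_nodes and DRY_RUN are hypothetical names. Note that shell aliases are not expanded inside scripts, so this assumes a real ceph binary is on PATH; otherwise replace ceph with cephadm shell -- ceph.

```shell
#!/usr/bin/env bash
# add_nodes NODE...: push the cluster SSH key to each node, then register it
# with the orchestrator. With DRY_RUN=1 the commands are printed instead of
# being executed.
add_nodes() {
    local node run=""
    if [ "${DRY_RUN:-0}" = "1" ]; then
        run="echo"
    fi
    for node in "$@"; do
        $run ssh-copy-id -f -i /etc/ceph/ceph.pub "root@${node}" || return 1
        $run ceph orch host add "${node}" || return 1
    done
}
```

For this cluster the call would be add_nodes ceph-node1 ceph-node2.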

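Add mons

A typical Ceph cluster has three or five mon daemons spread across different hosts. If the cluster has five or more nodes, deploying five monitors is recommended.

Once Ceph knows which IP subnet the monitors should use, it can deploy and scale mons automatically as the cluster grows (or shrinks). By default, Ceph assumes that additional mons should use the same subnet as the IP of the first mon.

If your Ceph mons (or the whole cluster) sit in a single subnet, cephadm by default adds up to 5 monitors automatically as new hosts are added to the cluster. No further steps are needed.

This setup has 3 nodes, so I change the default to 3 mons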

[root@ceph-admin ~]# ceph orch apply mon 3

Deploy the mons to specific nodes

[root@ceph-admin ~]# ceph orch apply mon ceph-admin,ceph-node1,ceph-node2

[root@ceph-admin ~]# ceph mon dump

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

epoch 3

fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

last_changed 2020-06-19T01:46:37.153347+0000

created 2020-06-19T01:42:58.010834+0000

min_mon_release 15 (octopus)

0: [v2:10.10.128.174:3300/0,v1:10.10.128.174:6789/0] mon.ceph-admin

1: [v2:10.10.128.175:3300/0,v1:10.10.128.175:6789/0] mon.ceph-node1

2: [v2:10.10.128.176:3300/0,v1:10.10.128.176:6789/0] mon.ceph-node2

dumped monmap epoch 3
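To check the result from a script instead of by eye, the monmap lines can be counted. This is a sketch that assumes the output layout shown above; count_mons is a hypothetical name.

```shell
#!/usr/bin/env bash
# count_mons: read `ceph mon dump` output on stdin and print how many
# monitors the monmap lists (lines such as "0: [v2:...] mon.ceph-admin").
# Other lines, including the INFO:cephadm lines, do not match the pattern.
count_mons() {
    grep -cE '^[0-9]+: .* mon\.'
}
```

Piping the output of ceph mon dump into count_mons should print 3 for the cluster in this article.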

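Deploy OSDs

A storage device is considered available only if it meets all of the following conditions:

- The device has no partitions.
- The device has no LVM state.
- The device is not mounted.
- The device does not contain a file system.
- The device does not contain a Ceph BlueStore OSD.
- The device is larger than 5 GB.

Ceph will not create an OSD on a device that is not available.

Pick a clean device and create an OSD on it with the following commands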

[root@ceph-admin ~]# ceph orch daemon add osd ceph-node2:/dev/vdb

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

Created osd(s) 0 on host 'ceph-node2'

[root@ceph-admin ~]# ceph orch daemon add osd ceph-admin:/dev/vdb

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

Created osd(s) 1 on host 'ceph-admin'

[root@ceph-admin ~]# ceph orch daemon add osd ceph-node1:/dev/vdb

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

Created osd(s) 2 on host 'ceph-node1'

List the OSDs

[root@ceph-admin ~]# ceph osd tree

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.29306 root default

-5 0.09769 host ceph-admin

1 hdd 0.09769 osd.1 up 1.00000 1.00000

-7 0.09769 host ceph-node1

2 hdd 0.09769 osd.2 up 1.00000 1.00000

-3 0.09769 host ceph-node2

0 hdd 0.09769 osd.0 up 1.00000 1.00000

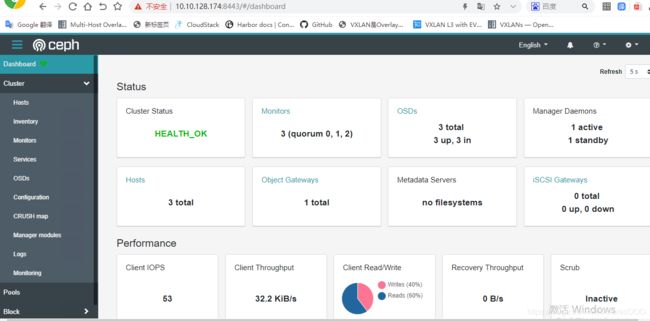

Check the cluster status

At this point the cluster should report HEALTH_OK

[root@ceph-admin ~]# ceph -s

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

cluster:

id: 23db6d22-b1ce-11ea-b263-1e00940000dc

health: HEALTH_OK

services:

mon: 3 daemons, quorum ceph-admin,ceph-node1,ceph-node2 (age 24m)

mgr: ceph-admin.zlwsks(active, since 83m), standbys: ceph-node2.lylyez

osd: 3 osds: 3 up (since 42s), 3 in (since 42s)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 297 GiB / 300 GiB avail

pgs: 1 active+clean
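Right after adding OSDs the cluster may need a little time to settle, so in a script it helps to poll rather than check once. The sketch below parses the health line from the status output shown above. The names health_of, wait_for_health_ok and CEPH_CMD are hypothetical, and the default retry count and delay are arbitrary.

```shell
#!/usr/bin/env bash
# Command used to reach the cluster. Shell aliases are not expanded inside
# scripts, so the alias defined earlier has to be spelled out here, e.g.
# CEPH_CMD="cephadm shell -- ceph".
CEPH_CMD="${CEPH_CMD:-ceph}"

# health_of: read `ceph -s` output on stdin and print the value of its
# "health:" line, for example HEALTH_OK.
health_of() {
    awk '$1 == "health:" { print $2; exit }'
}

# wait_for_health_ok [TRIES] [DELAY]: poll until the cluster reports
# HEALTH_OK. Returns 1 if it never does within TRIES attempts.
wait_for_health_ok() {
    local tries="${1:-30}" delay="${2:-10}" i=0
    while [ "$i" -lt "$tries" ]; do
        if [ "$($CEPH_CMD -s 2>/dev/null | health_of)" = "HEALTH_OK" ]; then
            return 0
        fi
        sleep "$delay"
        i=$((i + 1))
    done
    return 1
}
```

The ceph health command prints the same value directly, so parsing ceph -s is mainly useful when the full status is being captured anyway.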

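Deploy RGWs

Cephadm deploys radosgw as a collection of daemons that manage a particular realm and zone. (For more on realms and zones, see Multi-Site.)

Note that with cephadm, the radosgw daemons are configured through the monitor configuration database rather than through ceph.conf or the command line. If that configuration is not in place yet (usually in a section whose name starts with client.rgw.), the radosgw daemons start with default settings (for example, binding to port 80).

If no realm exists yet, create one first. The ceph-common package is installed here so that radosgw-admin is available on the host: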

[root@ceph-admin ~]# yum install ceph-common -y

[root@ceph-admin ~]# radosgw-admin realm create --rgw-realm=mytest --default

{

"id": "01784f23-a4cf-456b-b87a-b102c42b5699",

"name": "mytest",

"current_period": "97d080cb-a93f-441a-ae80-c1f59ee39c03",

"epoch": 1

}

Then create a new zonegroup

[root@ceph-admin ~]# radosgw-admin zonegroup create --rgw-zonegroup=myzg --master --default

{

"id": "1f02e57e-1b2c-4d93-ae39-0bb4e43421d4",

"name": "myzg",

"api_name": "myzg",

"is_master": "true",

"endpoints": [],

"hostnames": [],

"hostnames_s3website": [],

"master_zone": "",

"zones": [],

"placement_targets": [],

"default_placement": "",

"realm_id": "01784f23-a4cf-456b-b87a-b102c42b5699",

"sync_policy": {

"groups": []

}

}

Next, create a zone in the zonegroup

[root@ceph-admin ~]# radosgw-admin zone create --rgw-zonegroup=myzg --rgw-zone=myzone --master --default

{

"id": "3710dde5-55a6-4fed-a5a8-cc85f9e0997f",

"name": "myzone",

"domain_root": "myzone.rgw.meta:root",

"control_pool": "myzone.rgw.control",

"gc_pool": "myzone.rgw.log:gc",

"lc_pool": "myzone.rgw.log:lc",

"log_pool": "myzone.rgw.log",

"intent_log_pool": "myzone.rgw.log:intent",

"usage_log_pool": "myzone.rgw.log:usage",

"roles_pool": "myzone.rgw.meta:roles",

"reshard_pool": "myzone.rgw.log:reshard",

"user_keys_pool": "myzone.rgw.meta:users.keys",

"user_email_pool": "myzone.rgw.meta:users.email",

"user_swift_pool": "myzone.rgw.meta:users.swift",

"user_uid_pool": "myzone.rgw.meta:users.uid",

"otp_pool": "myzone.rgw.otp",

"system_key": {

"access_key": "",

"secret_key": ""

},

"placement_pools": [

{

"key": "default-placement",

"val": {

"index_pool": "myzone.rgw.buckets.index",

"storage_classes": {

"STANDARD": {

"data_pool": "myzone.rgw.buckets.data"

}

},

"data_extra_pool": "myzone.rgw.buckets.non-ec",

"index_type": 0

}

}

],

"realm_id": "01784f23-a4cf-456b-b87a-b102c42b5699"

}

Deploy a set of radosgw daemons for a specific realm and zone

[root@ceph-admin ~]# ceph orch apply rgw mytest myzone --placement="1 ceph-node1"

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

Scheduled rgw.mytest.myzone update...
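The four RGW commands above share the same realm, zonegroup and zone names, and a typo in one of them is easy to miss. As a sketch, the sequence can be generated from one set of names and reviewed before it is run. The function name rgw_setup_cmds is hypothetical; the commands it prints are the ones used in this section.

```shell
#!/usr/bin/env bash
# rgw_setup_cmds REALM ZONEGROUP ZONE HOST: print, in order, the commands
# this section runs, so they can be reviewed before being executed.
# Nothing is executed by this function.
rgw_setup_cmds() {
    local realm="$1" zonegroup="$2" zone="$3" host="$4"
    cat <<EOF
radosgw-admin realm create --rgw-realm=${realm} --default
radosgw-admin zonegroup create --rgw-zonegroup=${zonegroup} --master --default
radosgw-admin zone create --rgw-zonegroup=${zonegroup} --rgw-zone=${zone} --master --default
ceph orch apply rgw ${realm} ${zone} --placement="1 ${host}"
EOF
}
```

rgw_setup_cmds mytest myzg myzone ceph-node1 reproduces the commands used above.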

Common commands

- Show the current orchestrator mode and high-level status

[root@ceph-admin ~]# ceph orch status

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

Backend: cephadm

Available: True

- List the hosts in the cluster

[root@ceph-admin ~]# ceph orch host ls

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

HOST ADDR LABELS STATUS

ceph-admin ceph-admin

ceph-node1 ceph-node1

ceph-node2 ceph-node2

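- Add/remove hosts

ceph orch host add <hostname> [<addr>] [<labels>...]

ceph orch host rm <hostname>

Hosts can also be described in a YAML file and applied with ceph orch apply -i followed by the file name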

---
service_type: host
addr: node-00
hostname: node-00
labels:
- example1
- example2
---
service_type: host
addr: node-01
hostname: node-01
labels:
- grafana
---
service_type: host
addr: node-02
hostname: node-02
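For the three hosts in this article, a file in that layout can be generated rather than typed. This is a minimal sketch; host_spec is a hypothetical helper, and the file name hosts.yaml in the usage note is only an example.

```shell
#!/usr/bin/env bash
# host_spec HOSTNAME ADDR [LABEL...]: print one YAML document describing a
# host, in the same layout as the example above.
host_spec() {
    local hostname="$1" addr="$2" label
    shift 2
    printf '%s\n' '---' 'service_type: host' "addr: ${addr}" "hostname: ${hostname}"
    # The labels key is only written when at least one label was given.
    if [ "$#" -gt 0 ]; then
        printf '%s\n' 'labels:'
        for label in "$@"; do
            printf '%s\n' "- ${label}"
        done
    fi
}
```

Redirect the output of one host_spec call per host into a single file such as hosts.yaml, then pass that file to ceph orch apply -i.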

- Show discovered devices

[root@ceph-admin ~]# ceph orch device ls

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

HOST PATH TYPE SIZE DEVICE AVAIL REJECT REASONS

ceph-admin /dev/vda hdd 100G 83662236981f4c5787c2 False LVM detected, Insufficient space (<5GB) on vgs, locked

ceph-admin /dev/vdb hdd 100G f42c3ceb3b7c437fabd0 False LVM detected, Insufficient space (<5GB) on vgs, locked

ceph-node2 /dev/vda hdd 100G 7d763f9c09b9468c9aeb False LVM detected, Insufficient space (<5GB) on vgs, locked

ceph-node2 /dev/vdb hdd 100G 5c59861ba0b14c648ecb False LVM detected, Insufficient space (<5GB) on vgs, locked

ceph-node1 /dev/vda hdd 100G 4e37baa77dc24ea791c3 False locked, Insufficient space (<5GB) on vgs, LVM detected

ceph-node1 /dev/vdb hdd 100G ce3612013e9242028113 False locked, Insufficient space (<5GB) on vgs, LVM detected
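In the listing above every device is already in use, so AVAIL is False everywhere. On a fresh cluster, the rows with AVAIL set to True are the candidates for new OSDs. The sketch below picks them out; available_devices is a hypothetical name, and it assumes the column order shown above with a non-empty DEVICE column, which may differ in other Ceph releases.

```shell
#!/usr/bin/env bash
# available_devices: read `ceph orch device ls` output on stdin and print
# HOST:PATH for every row whose AVAIL column is True.
# Assumption: columns are HOST PATH TYPE SIZE DEVICE AVAIL ..., as in the
# listing above, and none of the first six columns is empty.
available_devices() {
    awk '$2 ~ /^\/dev\// && $6 == "True" { print $1 ":" $2 }'
}
```

Each printed value has the host:device form that ceph orch daemon add osd expects.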

- Create OSDs

ceph orch daemon add osd <host>:device1,device2

For example

[root@ceph-admin ~]# ceph orch daemon add osd ceph-node2:/dev/vdb

- Remove OSDs

ceph orch osd rm <osd_id>... [--replace] [--force]

For example

ceph orch osd rm 4

- Show service status

[root@ceph-admin ~]# ceph orch ls

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

NAME RUNNING REFRESHED AGE PLACEMENT IMAGE NAME IMAGE ID

alertmanager 1/1 10m ago 3h count:1 prom/alertmanager c876f5897d7b

crash 3/3 10m ago 3h * docker.io/ceph/ceph:v15 d72755c420bc

grafana 1/1 10m ago 3h count:1 ceph/ceph-grafana:latest 87a51ecf0b1c

mgr 2/2 10m ago 3h count:2 docker.io/ceph/ceph:v15 d72755c420bc

mon 3/3 10m ago 3h ceph-admin,ceph-node1,ceph-node2 docker.io/ceph/ceph:v15 d72755c420bc

node-exporter 3/3 10m ago 3h * prom/node-exporter 0e0218889c33

osd.all-available-devices 0/3 - - *

prometheus 1/1 10m ago 3h count:1 prom/prometheus:latest 39d1866a438a

rgw.mytest.myzone 1/1 10m ago 93m count:1 ceph-node1 docker.io/ceph/ceph:v15 d72755c420bc

- Show daemon status

[root@ceph-admin ~]# ceph orch ps

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

alertmanager.ceph-admin ceph-admin running (2h) 11m ago 3h 0.21.0 prom/alertmanager c876f5897d7b 1519fba800d1

crash.ceph-admin ceph-admin running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 96268d75560d

crash.ceph-node1 ceph-node1 running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 88b93a5fc13c

crash.ceph-node2 ceph-node2 running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc f28bf8e226a5

grafana.ceph-admin ceph-admin running (3h) 11m ago 3h 6.6.2 ceph/ceph-grafana:latest 87a51ecf0b1c ffdc94b51b4f

mgr.ceph-admin.zlwsks ceph-admin running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc f2f37c43ad33

mgr.ceph-node2.lylyez ceph-node2 running (2h) 11m ago 2h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 296c63eace2e

mon.ceph-admin ceph-admin running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 9b9fc8886759

mon.ceph-node1 ceph-node1 running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc b44f80941aa2

mon.ceph-node2 ceph-node2 running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 583fadcf6429

node-exporter.ceph-admin ceph-admin running (3h) 11m ago 3h 1.0.1 prom/node-exporter 0e0218889c33 712293a35a3d

node-exporter.ceph-node1 ceph-node1 running (2h) 11m ago 3h 1.0.1 prom/node-exporter 0e0218889c33 5488146a5ec9

node-exporter.ceph-node2 ceph-node2 running (3h) 11m ago 3h 1.0.1 prom/node-exporter 0e0218889c33 610e82d9a2a2

osd.0 ceph-node2 running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 1ad0eaa85618

osd.1 ceph-admin running (3h) 11m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 2efb75ec9216

osd.2 ceph-node1 running (2h) 11m ago 2h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc ceff74685794

prometheus.ceph-admin ceph-admin running (2h) 11m ago 3h 2.19.0 prom/prometheus:latest 39d1866a438a bc21536e7852

rgw.mytest.myzone.ceph-node1.xykzap ceph-node1 running (93m) 11m ago 93m 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc 40f483714868

Show the status of a specific daemon

ceph orch ps --daemon_type osd --daemon_id 0

For example

[root@ceph-admin ~]# ceph orch ps --daemon_type mon --daemon_id ceph-node1

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

NAME HOST STATUS REFRESHED AGE VERSION IMAGE NAME IMAGE ID CONTAINER ID

mon.ceph-node1 ceph-node1 running (3h) 12m ago 3h 15.2.3 docker.io/ceph/ceph:v15 d72755c420bc b44f80941aa2
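With many daemons, it is quicker to list only the ones that are not running. The following sketch filters the ceph orch ps output by its STATUS column; not_running is a hypothetical name, and the column order is assumed to match the output above.

```shell
#!/usr/bin/env bash
# not_running: read `ceph orch ps` output on stdin and print NAME, HOST and
# STATUS of every daemon whose STATUS column is not "running".
# The header row and the INFO:cephadm lines are skipped.
not_running() {
    awk '$1 != "NAME" && $1 !~ /^INFO:/ && NF >= 3 && $3 != "running" { print $1, $2, $3 }'
}
```

An empty result means every listed daemon reports running.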

- Start/stop/reload a service or daemon

ceph orch service {stop,start,reload}

ceph orch daemon {start,stop,reload}

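Access the dashboard

When bootstrap finishes, it prints a dashboard address of the form hostip:8443 together with a username and password. If you have forgotten the password, you can reset it for the admin user: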

[root@ceph-admin ~]# ceph dashboard ac-user-set-password admin 123qweASD

INFO:cephadm:Inferring fsid 23db6d22-b1ce-11ea-b263-1e00940000dc

INFO:cephadm:Using recent ceph image ceph/ceph:v15

{"username": "admin", "password": "$2b$12$fLsTok.XZk0OxS/QlI/ZouXMzv7lorZPPN0qg5WKof9P3tsuLy8Xm", "roles": ["administrator"], "name": null, "email": null, "lastUpdate": 1592545879, "enabled": true, "pwdExpirationDate": null, "pwdUpdateRequired": false}