Analysis of FFmpeg watermark overlay code

I have only just started learning this, so corrections are welcome.

The code:

#include <stdio.h>

#define __STDC_CONSTANT_MACROS

#ifdef _WIN32

#define snprintf _snprintf

//Windows

extern "C"

{

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libavfilter/avfiltergraph.h"

#include "libavfilter/avcodec.h"

#include "libavfilter/buffersink.h"

#include "libavfilter/buffersrc.h"

#include "libavutil/avutil.h"

#include "libswscale/swscale.h"

#include "SDL/SDL.h"

};

#else

//Linux...

#ifdef __cplusplus

extern "C"

{

#endif

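#include <libavcodec/avcodec.h>

#include <libavformat/avformat.h>

#include <libavfilter/avfiltergraph.h>

#include <libavfilter/avcodec.h>

#include <libavfilter/buffersink.h>

#include <libavfilter/buffersrc.h>

#include <libavutil/avutil.h>

#include <libswscale/swscale.h>

#include <SDL/SDL.h>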

#ifdef __cplusplus

};

#endif

#endif

//Enable SDL?

#define ENABLE_SDL 1

//Output YUV data?

#define ENABLE_YUVFILE 1

const char *filter_descr = "movie=my_logo.png[wm];[in][wm]overlay=5:5[out]";

static AVFormatContext *pFormatCtx;

static AVCodecContext *pCodecCtx;

AVFilterContext *buffersink_ctx;

AVFilterContext *buffersrc_ctx;

AVFilterGraph *filter_graph;

static int video_stream_index = -1;

static int open_input_file(const char *filename)

{

int ret;// return code of each FFmpeg call

AVCodec *dec;

if ((ret = avformat_open_input(&pFormatCtx, filename, NULL, NULL)) < 0) {

printf( "Cannot open input file\n");

return ret;

}

if ((ret = avformat_find_stream_info(pFormatCtx, NULL)) < 0) {

printf( "Cannot find stream information\n");

return ret;

}

/* select the video stream */

ret = av_find_best_stream(pFormatCtx, AVMEDIA_TYPE_VIDEO, -1, -1, &dec, 0);

if (ret < 0) {

printf( "Cannot find a video stream in the input file\n");

return ret;

}

video_stream_index = ret;

pCodecCtx = pFormatCtx->streams[video_stream_index]->codec;

/* init the video decoder */

if ((ret = avcodec_open2(pCodecCtx, dec, NULL)) < 0) {

printf( "Cannot open video decoder\n");

return ret;

}

return 0;

}

static int init_filters(const char *filters_descr)

{

char args[512];

int ret;

AVFilter *buffersrc = avfilter_get_by_name("buffer");

AVFilter *buffersink = avfilter_get_by_name("ffbuffersink");

AVFilterInOut *outputs = avfilter_inout_alloc();

AVFilterInOut *inputs = avfilter_inout_alloc();

enum PixelFormat pix_fmts[] = { PIX_FMT_YUV420P, PIX_FMT_NONE };

AVBufferSinkParams *buffersink_params;

filter_graph = avfilter_graph_alloc();

/* buffer video source: the decoded frames from the decoder will be inserted here. */

snprintf(args, sizeof(args),

"video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",

pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,

pCodecCtx->time_base.num, pCodecCtx->time_base.den,

pCodecCtx->sample_aspect_ratio.num, pCodecCtx->sample_aspect_ratio.den);

ret = avfilter_graph_create_filter(&buffersrc_ctx, buffersrc, "in",

args, NULL, filter_graph);

if (ret < 0) {

printf("Cannot create buffer source\n");

return ret;

}

/* buffer video sink: to terminate the filter chain. */

buffersink_params = av_buffersink_params_alloc();

buffersink_params->pixel_fmts = pix_fmts;

ret = avfilter_graph_create_filter(&buffersink_ctx, buffersink, "out",

NULL, buffersink_params, filter_graph);

av_free(buffersink_params);

if (ret < 0) {

printf("Cannot create buffer sink\n");

return ret;

}

/* Endpoints for the filter graph. */

outputs->name = av_strdup("in");

outputs->filter_ctx = buffersrc_ctx;

outputs->pad_idx = 0;

outputs->next = NULL;

inputs->name = av_strdup("out");

inputs->filter_ctx = buffersink_ctx;

inputs->pad_idx = 0;

inputs->next = NULL;

if ((ret = avfilter_graph_parse_ptr(filter_graph, filters_descr,

&inputs, &outputs, NULL)) < 0)

return ret;

if ((ret = avfilter_graph_config(filter_graph, NULL)) < 0)

return ret;

return 0;

}

int main(int argc, char* argv[])

{

int ret;

AVPacket packet;

AVFrame frame;

int got_frame;

av_register_all();

avfilter_register_all();

if ((ret = open_input_file("cuc_ieschool.flv")) < 0)

goto end;

if ((ret = init_filters(filter_descr)) < 0)

goto end;

#if ENABLE_YUVFILE

FILE *fp_yuv=fopen("test.yuv","wb+");

#endif

#if ENABLE_SDL

SDL_Surface *screen;

SDL_Overlay *bmp;

SDL_Rect rect;

if(SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {

printf( "Could not initialize SDL - %s\n", SDL_GetError());

return -1;

}

screen = SDL_SetVideoMode(pCodecCtx->width, pCodecCtx->height, 0, 0);

if(!screen) {

printf("SDL: could not set video mode - exiting\n");

return -1;

}

bmp = SDL_CreateYUVOverlay(pCodecCtx->width, pCodecCtx->height,SDL_YV12_OVERLAY, screen);

SDL_WM_SetCaption("Simplest FFmpeg Video Filter",NULL);

#endif

/* read all packets */

while (1) {

AVFilterBufferRef *picref;

ret = av_read_frame(pFormatCtx, &packet);

if (ret< 0)

break;

if (packet.stream_index == video_stream_index) {

avcodec_get_frame_defaults(&frame);

got_frame = 0;

ret = avcodec_decode_video2(pCodecCtx, &frame, &got_frame, &packet);

if (ret < 0) {

printf( "Error decoding video\n");

break;

}

if (got_frame) {

frame.pts = av_frame_get_best_effort_timestamp(&frame);

/* push the decoded frame into the filtergraph */

if (av_buffersrc_add_frame(buffersrc_ctx, &frame) < 0) {

printf( "Error while feeding the filtergraph\n");

break;

}

/* pull filtered pictures from the filtergraph */

while (1) {

ret = av_buffersink_get_buffer_ref(buffersink_ctx, &picref, 0);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)

break;

if (ret < 0)

goto end;

if (picref) {

#if ENABLE_YUVFILE

//Y, U, V

for(int i=0;i<picref->video->h;i++){

fwrite(picref->data[0]+picref->linesize[0]*i,1,picref->video->w,fp_yuv);

}

for(int i=0;i<picref->video->h/2;i++){

fwrite(picref->data[1]+picref->linesize[1]*i,1,picref->video->w/2,fp_yuv);

}

for(int i=0;i<picref->video->h/2;i++){

fwrite(picref->data[2]+picref->linesize[2]*i,1,picref->video->w/2,fp_yuv);

}

#endif

#if ENABLE_SDL

SDL_LockYUVOverlay(bmp);

int y_size=picref->video->w*picref->video->h;

memcpy(bmp->pixels[0],picref->data[0],y_size); //Y

memcpy(bmp->pixels[2],picref->data[1],y_size/4); //U

memcpy(bmp->pixels[1],picref->data[2],y_size/4); //V

bmp->pitches[0]=picref->linesize[0];

bmp->pitches[2]=picref->linesize[1];

bmp->pitches[1]=picref->linesize[2];

SDL_UnlockYUVOverlay(bmp);

rect.x = 0;

rect.y = 0;

rect.w = picref->video->w;

rect.h = picref->video->h;

SDL_DisplayYUVOverlay(bmp, &rect);

//Delay 40ms

SDL_Delay(40);

#endif

avfilter_unref_bufferp(&picref);

}

}

}

}

av_free_packet(&packet);

}

#if ENABLE_YUVFILE

fclose(fp_yuv);

#endif

end:

avfilter_graph_free(&filter_graph);

if (pCodecCtx)

avcodec_close(pCodecCtx);

avformat_close_input(&pFormatCtx);

if (ret < 0 && ret != AVERROR_EOF) {

char buf[1024];

av_strerror(ret, buf, sizeof(buf));

printf("Error occurred: %s\n", buf);

return -1;

}

return 0;

}

Section-by-section analysis:

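const char *filter_descr = "movie=my_logo.png[wm];[in][wm]overlay=5:5[out]"; has the same form as the -vf argument of the following command line (only the logo file name and the overlay offset differ):

ffmpeg -i inputvideo.avi -vf "movie=watermarklogo.png [watermark]; [in][watermark] overlay=10:10 [out]" outputvideo.flv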
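Since the graph description is just a string, the logo file and the overlay position do not have to be hard-coded. Below is a minimal sketch of building it at run time. The helper name `make_overlay_descr` is my own invention and not part of FFmpeg, and the sketch assumes the logo path contains no characters that need escaping in a filter description (such as `:` or `,`).

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper (not an FFmpeg function): builds a description of the form
//   movie=<logo>[wm];[in][wm]overlay=<x>:<y>[out]
// Returns an empty string if formatting fails or the result does not fit.
std::string make_overlay_descr(const std::string &logo, int x, int y)
{
    char buf[512];
    int n = std::snprintf(buf, sizeof(buf),
                          "movie=%s[wm];[in][wm]overlay=%d:%d[out]",
                          logo.c_str(), x, y);
    if (n < 0 || n >= static_cast<int>(sizeof(buf)))
        return std::string();   // error or truncated output
    return std::string(buf);
}
```

Calling `make_overlay_descr("my_logo.png", 5, 5)` gives the same string as `filter_descr` above, and the result's `c_str()` could then be passed to `init_filters`.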

static int open_input_file(const char *filename)// opens the input file and its video decoder

{

int ret;// return code of each FFmpeg call

AVCodec *dec;

if ((ret = avformat_open_input(&pFormatCtx, filename, NULL, NULL)) < 0) {// fails if the file cannot be opened

printf( "Cannot open input file\n");

return ret;

}

if ((ret = avformat_find_stream_info(pFormatCtx, NULL)) < 0) {// reads part of the audio/video data to obtain stream information

printf( "Cannot find stream information\n");

return ret;

}

/* select the video stream */

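// Returns the index of the best stream of the requested media type (AVMEDIA_TYPE_VIDEO here).

// The first -1 lets FFmpeg pick the stream automatically, the second -1 means there is no related stream,

// and the matching decoder is returned through dec.

ret = av_find_best_stream(pFormatCtx, AVMEDIA_TYPE_VIDEO, -1, -1, &dec, 0);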

if (ret < 0) {

printf( "Cannot find a video stream in the input file\n");

return ret;

}

video_stream_index = ret;

pCodecCtx = pFormatCtx->streams[video_stream_index]->codec;

/* init the video decoder */

if ((ret = avcodec_open2(pCodecCtx, dec, NULL)) < 0) {// opens the decoder, initializing the AVCodecContext for it

printf( "Cannot open video decoder\n");

return ret;

}

return 0;

}
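Every call in `open_input_file` reports failure as a negative return value, which the function simply passes up to `main`. For errors that come from the C library, FFmpeg's `AVERROR()` macro negates the errno value on platforms where errno constants are positive. On common platforms EINVAL is 22, so a return value of -22 means "invalid argument". The sketch below imitates that convention without linking FFmpeg. The names `my_averror` and `describe_error` are hypothetical stand-ins, and in a real program `av_strerror` is the function to use because it also knows FFmpeg's own codes such as AVERROR_EOF.

```cpp
#include <cerrno>
#include <cstring>
#include <string>

// Stand-in for FFmpeg's AVERROR(): negate a positive errno value.
// Assumption: errno constants are positive on this platform.
static int my_averror(int errnum)
{
    return -errnum;
}

// Turn a negative errno-style code back into text using the C library.
// FFmpeg-specific codes (for example AVERROR_EOF) are NOT handled here.
static std::string describe_error(int code)
{
    if (code >= 0)
        return "success";
    return std::string(std::strerror(-code));
}
```

With this, `describe_error(my_averror(EINVAL))` yields the C library's message for an invalid argument.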

static int init_filters(const char *filters_descr)// initializes the AVFilter-related structures

{

char args[512];

int ret;

AVFilter *buffersrc = avfilter_get_by_name("buffer");// look up the source (input) and sink (output) filters

AVFilter *buffersink = avfilter_get_by_name("ffbuffersink");

AVFilterInOut *outputs = avfilter_inout_alloc();// allocate the outputs endpoint

AVFilterInOut *inputs = avfilter_inout_alloc();// allocate the inputs endpoint

enum PixelFormat pix_fmts[] = { PIX_FMT_YUV420P, PIX_FMT_NONE };// pixel formats the sink accepts, terminated by PIX_FMT_NONE

AVBufferSinkParams *buffersink_params;// parameters for the buffer sink

filter_graph = avfilter_graph_alloc();// allocate the filter graph

/* buffer video source: the decoded frames from the decoder will be inserted here. */

snprintf(args, sizeof(args),// formats the arguments according to the format string and writes the result into args

"video_size=%dx%d:pix_fmt=%d:time_base=%d/%d:pixel_aspect=%d/%d",

pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,

pCodecCtx->time_base.num, pCodecCtx->time_base.den,

pCodecCtx->sample_aspect_ratio.num, pCodecCtx->sample_aspect_ratio.den);

ret = avfilter_graph_create_filter(&buffersrc_ctx, buffersrc, "in",// creates a filter instance and adds it to the filter graph

args, NULL, filter_graph);

if (ret < 0) {

printf("Cannot create buffer source\n");

return ret;

}

/* buffer video sink: to terminate the filter chain. */

buffersink_params = av_buffersink_params_alloc();

buffersink_params->pixel_fmts = pix_fmts;

ret = avfilter_graph_create_filter(&buffersink_ctx, buffersink, "out",

NULL, buffersink_params, filter_graph);

av_free(buffersink_params);

if (ret < 0) {

printf("Cannot create buffer sink\n");

return ret;

}

/* Endpoints for the filter graph. */

outputs->name = av_strdup("in");

outputs->filter_ctx = buffersrc_ctx;

outputs->pad_idx = 0;

outputs->next = NULL;

inputs->name = av_strdup("out");

inputs->filter_ctx = buffersink_ctx;

inputs->pad_idx = 0;

inputs->next = NULL;

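if ((ret = avfilter_graph_parse_ptr(filter_graph, filters_descr,// when I tried a text watermark here, this returned -22 (AVERROR(EINVAL)) and ffmpeg reported No such filter: 'drawtext', which suggests the FFmpeg build did not include that filter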

&inputs, &outputs, NULL)) < 0)

return ret;

if ((ret = avfilter_graph_config(filter_graph, NULL)) < 0)// checks the validity of the graph and configures all its links and formats

return ret;

return 0;

}

The main function:

int main(int argc, char* argv[])

{

int ret;

AVPacket packet;// a packet of compressed data read from the file

AVFrame frame;// a decoded frame

int got_frame;

av_register_all();// registers all muxers, demuxers and codecs; they can only be used after this call

avfilter_register_all();// registers all filters

if ((ret = open_input_file("cuc_ieschool.flv")) < 0)

goto end;

if ((ret = init_filters(filter_descr)) < 0)

goto end;

#if ENABLE_YUVFILE // write the filtered frames to a raw YUV file

FILE *fp_yuv=fopen("test.yuv","wb+");

#endif

#if ENABLE_SDL // display the filtered frames with SDL

SDL_Surface *screen;

SDL_Overlay *bmp;

SDL_Rect rect;

if(SDL_Init(SDL_INIT_VIDEO | SDL_INIT_AUDIO | SDL_INIT_TIMER)) {

printf( "Could not initialize SDL - %s\n", SDL_GetError());

return -1;

}

screen = SDL_SetVideoMode(pCodecCtx->width, pCodecCtx->height, 0, 0);

if(!screen) {

printf("SDL: could not set video mode - exiting\n");

return -1;

}

bmp = SDL_CreateYUVOverlay(pCodecCtx->width, pCodecCtx->height,SDL_YV12_OVERLAY, screen);

SDL_WM_SetCaption("Simplest FFmpeg Video Filter",NULL);

#endif

/* read all packets */

while (1) {

AVFilterBufferRef *picref;// in this API, frames handled by filters are represented by AVFilterBufferRef; the frame's properties are copied into picref as well

ret = av_read_frame(pFormatCtx, &packet);

if (ret< 0)

break;

if (packet.stream_index == video_stream_index) {

avcodec_get_frame_defaults(&frame);

got_frame = 0;

ret = avcodec_decode_video2(pCodecCtx, &frame, &got_frame, &packet);

if (ret < 0) {

printf( "Error decoding video\n");

break;

}

if (got_frame) {

frame.pts = av_frame_get_best_effort_timestamp(&frame);

/* push the decoded frame into the filtergraph */

if (av_buffersrc_add_frame(buffersrc_ctx, &frame) < 0) {

printf( "Error while feeding the filtergraph\n");

break;

}

/* pull filtered pictures from the filtergraph */

while (1) {

ret = av_buffersink_get_buffer_ref(buffersink_ctx, &picref, 0);// the caller pulls a filtered frame from the sink; the frame is stored in picref

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)

break;

if (ret < 0)

goto end;

if (picref) {

#if ENABLE_YUVFILE

//Y, U, V

for(int i=0;i<picref->video->h;i++){

fwrite(picref->data[0]+picref->linesize[0]*i,1,picref->video->w,fp_yuv);

}

for(int i=0;i<picref->video->h/2;i++){

fwrite(picref->data[1]+picref->linesize[1]*i,1,picref->video->w/2,fp_yuv);

}

for(int i=0;i<picref->video->h/2;i++){

fwrite(picref->data[2]+picref->linesize[2]*i,1,picref->video->w/2,fp_yuv);

}

#endif

#if ENABLE_SDL

SDL_LockYUVOverlay(bmp);

int y_size=picref->video->w*picref->video->h;

memcpy(bmp->pixels[0],picref->data[0],y_size); //Y

memcpy(bmp->pixels[2],picref->data[1],y_size/4); //U

memcpy(bmp->pixels[1],picref->data[2],y_size/4); //V

bmp->pitches[0]=picref->linesize[0];

bmp->pitches[2]=picref->linesize[1];

bmp->pitches[1]=picref->linesize[2];

SDL_UnlockYUVOverlay(bmp);

rect.x = 0;

rect.y = 0;

rect.w = picref->video->w;

rect.h = picref->video->h;

SDL_DisplayYUVOverlay(bmp, &rect);

//Delay 40ms

SDL_Delay(40);

#endif

avfilter_unref_bufferp(&picref);

}

}

}

}

av_free_packet(&packet);

}

#if ENABLE_YUVFILE

fclose(fp_yuv);

#endif

end:

avfilter_graph_free(&filter_graph);// free the filter graph

if (pCodecCtx)

avcodec_close(pCodecCtx);

avformat_close_input(&pFormatCtx);// close the input and free the format context

if (ret < 0 && ret != AVERROR_EOF) {

char buf[1024];

av_strerror(ret, buf, sizeof(buf));

printf("Error occurred: %s\n", buf);

return -1;

}

return 0;

}
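One detail in `main` that is easy to miss: each plane of the filtered picture is stored with a stride (`linesize`) that may be larger than the visible width, which is why the YUV file is written row by row. The SDL branch instead copies each plane with a single `memcpy`, which as far as I can tell is only correct when the stride equals the width. The sketch below shows the row-by-row packing on its own, without FFmpeg. The function names `append_plane` and `pack_yuv420p` are hypothetical, and it assumes YUV420P data with even width and height.

```cpp
#include <stddef.h>
#include <stdint.h>
#include <vector>

// Appends a width x height plane to `out`, skipping the padding at the end
// of each row. Rows in `data` start `linesize` bytes apart.
static void append_plane(std::vector<uint8_t> &out, const uint8_t *data,
                         int linesize, int width, int height)
{
    for (int row = 0; row < height; ++row) {
        const uint8_t *src = data + static_cast<size_t>(row) * linesize;
        out.insert(out.end(), src, src + width);
    }
}

// Packs a YUV420P picture tightly as Y, then U, then V.
// Assumption: width and height are even, so each chroma plane is
// (width / 2) x (height / 2).
static std::vector<uint8_t> pack_yuv420p(const uint8_t *const data[3],
                                         const int linesize[3],
                                         int width, int height)
{
    std::vector<uint8_t> out;
    out.reserve(static_cast<size_t>(width) * height * 3 / 2);
    append_plane(out, data[0], linesize[0], width, height);          // Y
    append_plane(out, data[1], linesize[1], width / 2, height / 2);  // U
    append_plane(out, data[2], linesize[2], width / 2, height / 2);  // V
    return out;
}
```

The packed buffer has exactly `width * height * 3 / 2` bytes, so it could be written to the YUV file with one `fwrite`, or copied plane by plane into a display buffer whose pitch equals the width.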

How to build and run:

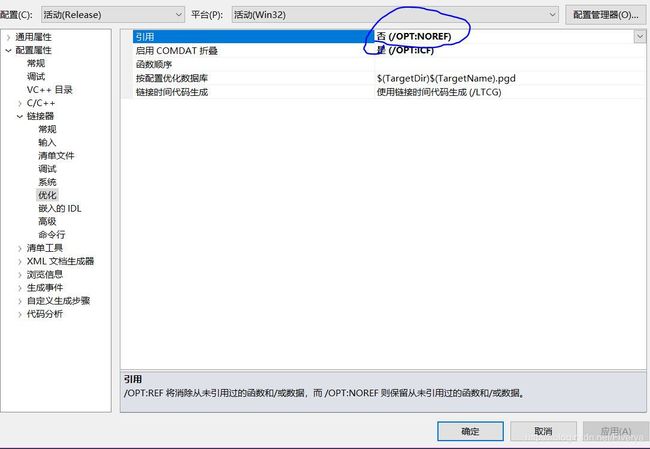

VS2010打开SLN文件,设置如下:

然后编译运行即可。