How to set up a highly available Kubernetes cluster with v1.15.0

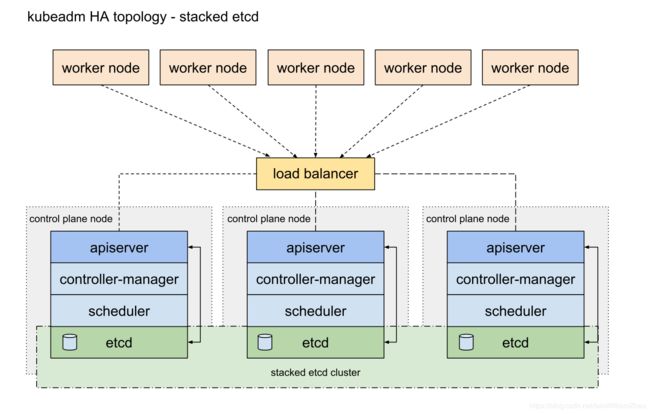

Kubernetes is a widely used platform for hosting cloud-native applications, and a Kubernetes cluster can be built with several topologies. If you are going to deploy many applications into one cluster in a production environment, you will probably want a highly available (HA) cluster. In this article, I describe how to set up an HA cluster with stacked etcd, where the etcd members run locally on the master nodes. Here is the diagram:

environment

There should be at least 3 master nodes, one load balancer node for the API servers, and as many worker nodes as you need (if you want to persist data to file storage, you may also need a node for an NFS server).
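Why at least 3 masters? With stacked etcd, every master runs an etcd member, and etcd only accepts writes while a majority of its members, floor(n/2) + 1, is healthy. The small sketch below just does that arithmetic; the function names are illustrative, not part of any tool.

```shell
#!/usr/bin/env bash
# Quorum size and failure tolerance of an etcd cluster with n members
# (with stacked etcd, n is the number of master nodes).
etcd_quorum() {
  local n="$1"
  echo $(( n / 2 + 1 ))
}

etcd_fault_tolerance() {
  local n="$1"
  echo $(( n - (n / 2 + 1) ))
}

for n in 1 2 3 4 5; do
  echo "masters=$n quorum=$(etcd_quorum "$n") tolerates=$(etcd_fault_tolerance "$n")"
done
```

Three masters survive the loss of one; a fourth master raises the quorum to 3 without tolerating any extra failure, which is why odd counts (3, 5) are the usual choice.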

1: Red Hat Linux:

cat /proc/version

Linux version 3.10.0-957.21.3.el7.x86_64 (gcc version 4.8.5 20150623 (Red Hat 4.8.5-36) (GCC) ) #1 SMP Fri Jun 14 02:54:29 EDT 2019

2: Docker version:

docker version

Client:

Version: 18.09.6

API version: 1.39

Go version: go1.10.8

Git commit: 481bc77156

Built: Sat May 4 02:34:58 2019

OS/Arch: linux/amd64

Experimental: false

Server: Docker Engine - Community

Engine:

Version: 18.09.6

API version: 1.39 (minimum version 1.12)

Go version: go1.10.8

Git commit: 481bc77

Built: Sat May 4 02:02:43 2019

OS/Arch: linux/amd64

Experimental: false

3: Kubernetes version:

kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.0",

GitCommit:"e8462b5b5dc2584fdcd18e6bcfe9f1e4d970a529", GitTreeState:"clean", BuildDate:"2019-06-19T16:37:41Z",

GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

kubectl version

Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.0", GitCommit:"e8462b5b5dc2584fdcd18e6bcfe9f1e4d970a529",

GitTreeState:"clean", BuildDate:"2019-06-19T16:40:16Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.0", GitCommit:"e8462b5b5dc2584fdcd18e6bcfe9f1e4d970a529",

GitTreeState:"clean", BuildDate:"2019-06-19T16:32:14Z", GoVersion:"go1.12.5", Compiler:"gc", Platform:"linux/amd64"}

kubelet --version

Kubernetes v1.15.0

prepare the environment

All of the steps below must be executed on every master node and worker node.

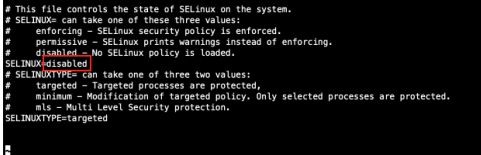

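disable SELinux

setenforce 0

vi /etc/sysconfig/selinux

set SELINUX=disabled (or SELINUX=permissive) so that the setting survives a reboot; setenforce 0 only switches the running system to permissive mode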
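If you prefer not to edit the file by hand, the boot-time setting can be changed with sed. This is a minimal sketch, assuming RHEL/CentOS 7, where /etc/sysconfig/selinux is a symlink to /etc/selinux/config; it edits the target file directly because `sed -i` on the symlink would replace the link with a regular file. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Set the SELinux mode applied at boot (enforcing|permissive|disabled).
# "setenforce 0" only changes the running mode; this makes the change persistent.
set_selinux_boot_mode() {
  local mode="$1" file="${2:-/etc/selinux/config}"
  case "$mode" in
    enforcing|permissive|disabled) ;;
    *) echo "unknown SELinux mode: $mode" >&2; return 1 ;;
  esac
  # Only the SELINUX= line is rewritten; SELINUXTYPE= does not match the pattern.
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}
```

Usage, as root: `setenforce 0 && set_selinux_boot_mode disabled`.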

disable swap

swapoff -a

vi /etc/fstab

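comment out the swap entry in /etc/fstab with a # so that swap stays off after a reboot (by default the kubelet refuses to start while swap is enabled)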
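The fstab edit can also be scripted. A minimal sketch, assuming a standard fstab layout where the third field is the filesystem type; it comments out every active line whose type is `swap` and skips lines that are already comments, so running it twice is harmless. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Comment out active swap entries (field 3 == "swap") in an fstab-style file.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}" tmp rc=0
  tmp=$(mktemp) || return 1
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp" &&
    cat "$tmp" > "$fstab" || rc=1   # cat (rather than mv) keeps the file's owner and mode
  rm -f "$tmp"
  return "$rc"
}
```

Usage, as root: `swapoff -a && comment_out_swap`, then `free -m` should report 0 swap.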

disable firewalld

I did not have time to work out how to make firewalld play well with Kubernetes, so here I disable and stop firewalld and use iptables instead:

systemctl disable firewalld

systemctl stop firewalld

install and enable iptables

yum install -y iptables

yum install -y iptables-services

systemctl enable iptables

systemctl start iptables

configure iptables

Open the following firewall ports for Kubernetes. -I inserts each rule at the top of the INPUT chain; rules appended with -A would land after the REJECT rule at the end of the default iptables-services configuration and would never match.

iptables -I INPUT -p tcp --dport 22 -j ACCEPT

iptables -I INPUT -p tcp --dport 80 -j ACCEPT

iptables -I INPUT -p tcp --dport 443 -j ACCEPT

iptables -I INPUT -p tcp --dport 2049 -j ACCEPT # this is for NFS

iptables -I INPUT -p tcp --dport 9443 -j ACCEPT # this is for the API server proxy (nginx)

iptables -I INPUT -p tcp --dport 6443 -j ACCEPT

iptables -I INPUT -p tcp --dport 2379:2380 -j ACCEPT

iptables -I INPUT -p tcp --dport 10248 -j ACCEPT

iptables -I INPUT -p tcp --dport 10250:10254 -j ACCEPT

iptables -I INPUT -p tcp --dport 30000:32767 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 22 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 80 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 443 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 2049 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 9443 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 6443 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 2379:2380 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 10248 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 10250:10254 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 30000:32767 -j ACCEPT

service iptables save

systemctl restart iptables
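The INPUT rules all follow one pattern, so they can be generated from a port list. This sketch only prints the commands, so you can review them before piping them to a root shell. It assumes the default /etc/sysconfig/iptables from iptables-services on RHEL 7, whose INPUT chain ends in a REJECT rule, hence `-I` rather than `-A`. The TCP ports are the ones from this article; the extra UDP 8472 rule is my addition, needed only if you use flannel's default VXLAN backend. The function name is illustrative.

```shell
#!/usr/bin/env bash
# Print (do not run) iptables commands that open the Kubernetes-related ports.
# Review the output, then apply it with: k8s_input_rules | sudo sh
k8s_input_rules() {
  local tcp_ports="22 80 443 2049 9443 6443 2379:2380 10248 10250:10254 30000:32767"
  local p
  for p in $tcp_ports; do
    # -I puts the rule at the top of INPUT, ahead of the default REJECT rule
    echo "iptables -I INPUT -p tcp --dport $p -j ACCEPT"
  done
  # flannel VXLAN traffic between nodes (only for flannel's vxlan backend)
  echo "iptables -I INPUT -p udp --dport 8472 -j ACCEPT"
}
```

After applying the rules, run `service iptables save` so they are restored at boot.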

install and enable Docker CE 18.09.6

yum -y install http://mirror.centos.org/centos/7/extras/x86_64/Packages/container-selinux-2.107-1.el7_6.noarch.rpm

yum -y install https://download.docker.com/linux/centos/7/x86_64/stable/Packages/containerd.io-1.2.5-3.1.el7.x86_64.rpm

yum -y install https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-cli-18.09.6-3.el7.x86_64.rpm

yum -y install https://download.docker.com/linux/centos/7/x86_64/stable/Packages/docker-ce-18.09.6-3.el7.x86_64.rpm

systemctl enable docker

systemctl start docker
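Because these RPMs depend on each other (docker-ce needs docker-ce-cli, containerd.io and container-selinux), it can be simpler to pass all four to a single yum transaction and let yum work out the install order. A sketch that prints the same URLs used above; the function name is illustrative. Note that CentOS 7 reached end of life in June 2024, so the mirror.centos.org URL may no longer resolve; the same package may be available under vault.centos.org.

```shell
#!/usr/bin/env bash
# Print the four RPM URLs for Docker CE 18.09.6 and its dependencies, one per line.
docker_rpm_urls() {
  local docker_base="https://download.docker.com/linux/centos/7/x86_64/stable/Packages"
  local centos_extras="http://mirror.centos.org/centos/7/extras/x86_64/Packages"
  echo "${centos_extras}/container-selinux-2.107-1.el7_6.noarch.rpm"
  echo "${docker_base}/containerd.io-1.2.5-3.1.el7.x86_64.rpm"
  echo "${docker_base}/docker-ce-cli-18.09.6-3.el7.x86_64.rpm"
  echo "${docker_base}/docker-ce-18.09.6-3.el7.x86_64.rpm"
}
```

Usage, as root: `yum -y install $(docker_rpm_urls)`, followed by the same `systemctl enable docker` and `systemctl start docker` as above.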

install Kubernetes and enable kubelet

enable the Kubernetes repository for yum

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

exclude=kube*

EOF

install kubernetes

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
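Without a version, yum installs the newest kubelet, kubeadm and kubectl available in the repository, which will not be v1.15.0. yum accepts name-version package specs, so here is a sketch that pins all three to the version this article uses; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Print name-version package specs so kubelet, kubeadm and kubectl stay on one release.
k8s_packages() {
  local version="${1:-1.15.0}" pkg
  for pkg in kubelet kubeadm kubectl; do
    printf '%s-%s\n' "$pkg" "$version"
  done
}
```

Usage, as root: `yum install -y $(k8s_packages 1.15.0) --disableexcludes=kubernetes`.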

enable kubelet

systemctl enable kubelet

systemctl start kubelet

enable bridge netfilter for Kubernetes (so that bridged traffic passes through iptables)

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf
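The net.bridge.* keys only exist once the br_netfilter kernel module is loaded, so on a fresh host `sysctl -p` may report that it cannot stat them. A sketch of a check you can run afterwards; the sysctl root is a parameter only so the function can be tested, and the function name is illustrative.

```shell
#!/usr/bin/env bash
# Check that bridged traffic is passed to iptables and ip6tables.
# $1: sysctl root directory (defaults to /proc/sys).
check_bridge_nf() {
  local root="${1:-/proc/sys}" key val rc=0
  for key in bridge-nf-call-iptables bridge-nf-call-ip6tables; do
    if [ ! -r "$root/net/bridge/$key" ]; then
      echo "net.bridge.$key missing: load the module with 'modprobe br_netfilter'" >&2
      rc=1
      continue
    fi
    val=$(cat "$root/net/bridge/$key")
    if [ "$val" != "1" ]; then
      echo "net.bridge.$key=$val (expected 1)" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage, as root: `modprobe br_netfilter && sysctl -p /etc/sysctl.d/k8s.conf && check_bridge_nf`. To load the module at every boot, `echo br_netfilter > /etc/modules-load.d/br_netfilter.conf`.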

All of the steps above are needed on every node. Once they are done, continue with the steps below.

install and configure nginx

In an HA cluster, a load balancer in front of the kube-apiservers spreads requests across the masters. The official Kubernetes documentation uses HAProxy for this, but since I am more familiar with nginx, I set up nginx as the load balancer here.

Install nginx on your load balancer node.

Configure the yum repository for nginx (the quoted 'EOF' keeps the shell from expanding $basearch):

cat <<'EOF' > /etc/yum.repos.d/nginx.repo

[nginx]

name=nginx repo

baseurl=http://nginx.org/packages/mainline/rhel/7/$basearch/

gpgcheck=0

enabled=1

EOF

yum install -y nginx

systemctl enable nginx

vi /etc/nginx/nginx.conf

user nginx;
worker_processes auto;
error_log /var/log/nginx/error.log warn;
pid /var/run/nginx.pid;
worker_rlimit_nofile 65535;

events {
    use epoll;
    worker_connections 65535;
}

# Here I use the stream module to do TCP load balancing. If you want to use the http module
# instead, you need to take care of the HTTPS certificates.
stream {
    upstream api_server {
        server dpydalpd0101.sl.xxx.xxx.com:6443 max_fails=1 fail_timeout=10s;
        server dpydalpd0201.sl.xxx.xxx.com:6443 max_fails=1 fail_timeout=10s;
        server dpydalpd0301.sl.xxx.xxx.com:6443 max_fails=1 fail_timeout=10s;
    }

    server {
        listen 0.0.0.0:9443;
        proxy_pass api_server;
        proxy_timeout 60s;
        proxy_connect_timeout 5s;
    }
}
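The upstream block simply lists each master's API server port. If you keep the master list somewhere else (a hosts file, an inventory), a small generator keeps the two in sync when masters are added or replaced. A sketch; the function name and the host names in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# Print an nginx stream "upstream" block for the given API server hosts.
# Usage: nginx_api_upstream <name> <port> <host>...
nginx_api_upstream() {
  local name="$1" port="$2" host
  shift 2
  printf 'upstream %s {\n' "$name"
  for host in "$@"; do
    printf '    server %s:%s max_fails=1 fail_timeout=10s;\n' "$host" "$port"
  done
  printf '}\n'
}
```

For example, `nginx_api_upstream api_server 6443 master1.example.com master2.example.com master3.example.com` prints a block to paste inside `stream { ... }`.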

check the configuration and start nginx:

nginx -t

systemctl start nginx

write the cluster configuration file

First prepare a cluster configuration YAML file, then use the kubeadm command to initialize the first master node.

The following is a sample configuration file (the init command below reads it from /etc/kubernetes/k8s-cluster-bi.yaml):

apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
bootstrapTokens:
- token: "9a08jv.c0izixklcxtmnze7"
  description: "kubeadm bootstrap token"
  ttl: "24h"
- token: "783bde.3f89s0fje9f38fhf"
  description: "another bootstrap token"
  usages:
  - authentication
  - signing
  groups:
  - system:bootstrappers:kubeadm:default-node-token
nodeRegistration:
  name: "ppydalbik0102.sl.xxx.xxx.com"
  criSocket: "/var/run/dockershim.sock"
  taints:
  - key: "kubeadmNode"
    value: "master"
    effect: "NoSchedule"
localAPIEndpoint:
  advertiseAddress: "10.xxx.xxx.249"
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
certificatesDir: "/etc/kubernetes/pki"
imageRepository: "k8s.gcr.io"
useHyperKubeImage: false
clusterName: "k8s-cluster-bi"
networking:
  serviceSubnet: "172.17.0.0/16"
  podSubnet: "10.244.0.0/16"
  dnsDomain: "cluster.local"
kubernetesVersion: "v1.15.0"
controlPlaneEndpoint: "ppydalbik0101.sl.xxx.xxx.com:9443"
etcd:
  # one of local or external
  local:
    imageRepository: "k8s.gcr.io"
    imageTag: "3.2.24"
    dataDir: "/var/lib/etcd"
    serverCertSANs:
    - "10.xxx.xxx.216"
    - "ppydalbik0101.sl.xxx.xxx.com"
    - "10.xxx.xxx.249"
    - "ppydalbik0102.sl.xxx.xxx.com"
    - "10.xxx.xxx.218"
    - "ppydalbik0103.sl.xxx.xxx.com"
    - "10.xxx.xxx.223"
    - "ppydalbik0104.sl.xxx.xxx.com"
    peerCertSANs:
    - "10.xxx.xxx.216"
    - "ppydalbik0101.sl.xxx.xxx.com"
    - "10.xxx.xxx.249"
    - "ppydalbik0102.sl.xxx.xxx.com"
    - "10.xxx.xxx.218"
    - "ppydalbik0103.sl.xxx.xxx.com"
    - "10.xxx.xxx.223"
    - "ppydalbik0104.sl.xxx.xxx.com"
apiServer:
  extraArgs:
    authorization-mode: "Node,RBAC"
    apiserver-count: "3"
  certSANs:
  - "10.xxx.xxx.216"
  - "ppydalbik0101.sl.xxx.xxx.com"
  - "10.xxx.xxx.249"
  - "ppydalbik0102.sl.xxx.xxx.com"
  - "10.xxx.xxx.218"
  - "ppydalbik0103.sl.xxx.xxx.com"
  - "10.xxx.xxx.223"
  - "ppydalbik0104.sl.xxx.xxx.com"
  timeoutForControlPlane: 4m0s
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# kubelet specific options here
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
# kube-proxy specific options here
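The serviceSubnet, the podSubnet and the networks your nodes and containers use should not overlap. The podSubnet 10.244.0.0/16 matches the default network in flannel's kube-flannel.yml, which is why it is used here. Docker's default docker0 bridge also uses 172.17.0.0/16, the same range as the serviceSubnet in this sample, so it is worth checking your own values. A minimal bash sketch of an IPv4 overlap check; the function names are illustrative.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  # 10# forces base 10 so octets such as "08" are not read as octal
  echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Succeed (exit 0) if two IPv4 CIDR blocks share any address.
cidr_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  local i1 i2 len mask
  i1=$(ip_to_int "$net1")
  i2=$(ip_to_int "$net2")
  # CIDR blocks are either nested or disjoint, so they overlap exactly
  # when they agree on the bits of the shorter prefix.
  len=$(( len1 < len2 ? len1 : len2 ))
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( i1 & mask )) -eq $(( i2 & mask )) ]
}
```

For example, `cidr_overlap 172.17.0.0/16 "$(ip -4 -o addr show docker0 | awk '{print $4}')" && echo "serviceSubnet overlaps docker0"`.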

Then initialize the cluster with the following command:

kubeadm init --config=/etc/kubernetes/k8s-cluster-bi.yaml --upload-certs

If the cluster initializes successfully, you will see output like the following:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join ppydalbik0101.sl.xxx.xxx.com:9443 --token 7396yd.ngzodxtroc7qcs7n \

--discovery-token-ca-cert-hash sha256:50d65683cc9cd7c1e375fb92eaa5578ed8ec970bd712ba3afb1336eaca8127ed \

--experimental-control-plane --certificate-key 8681021d49f6dfeefa35e026d8d827cec0b3d7e2a1ce1f9f8fea458f036e7b88

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join ppydalbik0101.sl.xxx.xxx.com:9443 --token 7396yd.ngzodxtroc7qcs7n \

--discovery-token-ca-cert-hash sha256:50d65683cc9cd7c1e375fb92eaa5578ed8ec970bd712ba3afb1336eaca8127ed

Configure a regular user to access your cluster with admin rights:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

install the network add-on

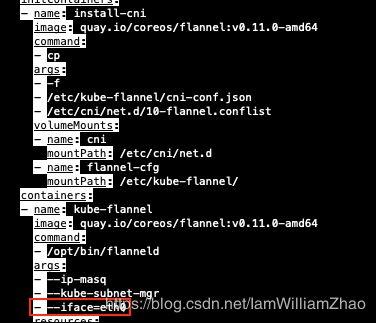

download the flannel deployment file

curl https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml > kube-flannel.yml

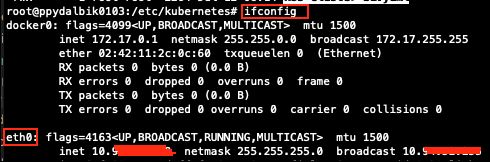

Find the name of your Ethernet adapter with the ifconfig command.

Edit the flannel deployment file so that flannel uses that adapter (see the screenshot above).
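On hosts with more than one network interface, the usual edit is to tell flanneld which interface to use by adding an `--iface` argument next to `--kube-subnet-mgr` in the flanneld container args; I am assuming that is the change shown in the screenshot. A sketch that makes this edit with awk, reusing the indentation of the existing line. `eth0` in the usage line is a placeholder for the adapter you found with ifconfig, and the function name is illustrative. The manifest from this period defines one DaemonSet per architecture, so every `--kube-subnet-mgr` line gets the argument.

```shell
#!/usr/bin/env bash
# Add "- --iface=<iface>" after each "- --kube-subnet-mgr" line of a flannel manifest.
# Does nothing if the manifest already sets --iface.
add_flannel_iface() {
  local iface="$1" file="${2:-kube-flannel.yml}" tmp rc=0
  if grep -q -- '--iface=' "$file"; then
    return 0
  fi
  tmp=$(mktemp) || return 1
  awk -v iface="$iface" '
    { print }
    /^[ \t]*- --kube-subnet-mgr[ \t]*$/ {
      match($0, /^[ \t]*/)              # copy the indentation of the matched line
      printf "%s- --iface=%s\n", substr($0, 1, RLENGTH), iface
    }' "$file" > "$tmp" && cat "$tmp" > "$file" || rc=1
  rm -f "$tmp"
  return "$rc"
}
```

Usage: `add_flannel_iface eth0 kube-flannel.yml`, then apply the manifest as below.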

Apply the flannel network add-on:

kubectl apply -f kube-flannel.yml

podsecuritypolicy.extensions/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

join the other master nodes

kubeadm join ppydalbik0101.sl.xxx.xxx.com:9443 --token 7396yd.ngzodxtroc7qcs7n --discovery-token-ca-cert-hash sha256:50d65683cc9cd7c1e375fb92eaa5578ed8ec970bd712ba3afb1336eaca8127ed --experimental-control-plane --certificate-key 8681021d49f6dfeefa35e026d8d827cec0b3d7e2a1ce1f9f8fea458f036e7b88

join worker nodes

kubeadm join ppydalbik0101.sl.xxx.xxx.com:9443 --token 7396yd.ngzodxtroc7qcs7n --discovery-token-ca-cert-hash sha256:50d65683cc9cd7c1e375fb92eaa5578ed8ec970bd712ba3afb1336eaca8127ed
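The bootstrap token in these commands expires (24h by default), and as the init output says, the uploaded certificates are deleted after two hours. On an existing master, `kubeadm token create --print-join-command` prints a fresh worker join command, and `kubeadm init phase upload-certs --upload-certs` re-uploads the certificates and prints a new certificate key. A control-plane join command is the worker command plus two flags; in v1.15 the flag is `--control-plane`, and `--experimental-control-plane` shown above is its older, deprecated spelling. A tiny sketch; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Turn a worker join command into a control-plane join command.
# $1: output of "kubeadm token create --print-join-command"
# $2: certificate key printed by "kubeadm init phase upload-certs --upload-certs"
control_plane_join_cmd() {
  local worker_cmd="$1" cert_key="$2"
  # drop any trailing whitespace so the flags are appended cleanly
  worker_cmd="${worker_cmd%"${worker_cmd##*[![:space:]]}"}"
  printf '%s --control-plane --certificate-key %s\n' "$worker_cmd" "$cert_key"
}
```

Usage, on an existing master: `control_plane_join_cmd "$(kubeadm token create --print-join-command)" <certificate-key>`.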

After joining nodes, you may sometimes need to refresh iptables:

systemctl daemon-reload

systemctl stop kubelet

systemctl stop docker

iptables --flush

iptables -t nat --flush

Be careful: the two flush commands remove all of your firewall rules. After flushing, list the rules with iptables -L -n and add your own rules again if they are missing.

systemctl start docker

systemctl start kubelet

service iptables save

systemctl restart iptables
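To make the "list and check" step less error-prone, you can save the rules with `iptables-save` before flushing and compare afterwards. A sketch that prints the rule lines (`-A ...`) present in the old dump but missing from the new one; the file paths in the comments and the function name are placeholders.

```shell
#!/usr/bin/env bash
# Print rule lines (-A ...) from an old iptables-save dump that are missing from a new one.
#   iptables-save > /root/iptables.before    # before flushing
#   iptables-save > /root/iptables.after     # after docker and kubelet are back
#   missing_iptables_rules /root/iptables.before /root/iptables.after
missing_iptables_rules() {
  local before="$1" after="$2"
  # -F fixed strings, -x whole-line match, -v invert: rules of $before not found in $after
  grep '^-A ' "$before" | grep -Fxv -f "$after" || true
}
```

Any line it prints is a rule you may need to add again before running `service iptables save`.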

install and configure NFS (this step is optional, but it is useful, so I include it here)

When you move your applications into Docker containers, you need to decide where and how to persist data (files). For example, you can store structured data in a database and save files to object storage or file storage. When you set up a Kubernetes cluster manually, an NFS server can serve as the file storage; it is very easy to set up and configure.

open firewall ports for the NFS server:

If you use iptables, you can use the following commands to open the firewall ports on your NFS server node:

iptables -A INPUT -p tcp --dport 111 -j ACCEPT

iptables -A INPUT -p udp --dport 111 -j ACCEPT

iptables -A INPUT -p tcp --dport 20048 -j ACCEPT

iptables -A INPUT -p udp --dport 20048 -j ACCEPT

iptables -A INPUT -p tcp --dport 2049 -j ACCEPT

iptables -A INPUT -p udp --dport 2049 -j ACCEPT

iptables -A OUTPUT -p tcp --sport 111 -j ACCEPT

iptables -A OUTPUT -p udp --sport 111 -j ACCEPT

iptables -A OUTPUT -p tcp --sport 2049 -j ACCEPT

iptables -A OUTPUT -p udp --sport 2049 -j ACCEPT

iptables -A OUTPUT -p tcp --sport 20048 -j ACCEPT

iptables -A OUTPUT -p udp --sport 20048 -j ACCEPT

If you use firewalld, you can use the following commands to open the firewall ports on the NFS server node:

systemctl enable firewalld

systemctl start firewalld

firewall-cmd --zone=public --permanent --add-port=111/tcp

firewall-cmd --zone=public --permanent --add-port=111/udp

firewall-cmd --zone=public --permanent --add-port=2049/tcp

firewall-cmd --zone=public --permanent --add-port=2049/udp

firewall-cmd --zone=public --permanent --add-port=20048/tcp

firewall-cmd --zone=public --permanent --add-port=20048/udp

firewall-cmd --permanent --add-service=mountd

firewall-cmd --permanent --add-service=nfs

firewall-cmd --permanent --add-service=rpc-bind

firewall-cmd --reload

systemctl restart firewalld

install nfs server:

yum install -y rpcbind nfs-utils

enable and start nfs server:

systemctl enable nfs-server

systemctl start nfs-server

configure the NFS server (there must be no space between the host pattern and the option list in parentheses):

vi /etc/exports

/data/disk1 *.xxx.com(rw,no_root_squash,no_all_squash,sync)
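A space between the client pattern and its option list is a common /etc/exports mistake that the exports(5) man page warns about: the options then apply to every host, while the named client only gets the defaults (read-only). A small sketch that flags such lines; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Flag export lines where whitespace separates a client pattern from its "(options)".
# Prints offending lines with line numbers and returns 1 if any are found.
check_exports() {
  local file="${1:-/etc/exports}"
  if grep -nE '^[^#].*[[:space:]]\(' "$file"; then
    echo "whitespace before '(' applies those options to all hosts" >&2
    return 1
  fi
  return 0
}
```

After fixing the file, `exportfs -ra` re-reads /etc/exports without restarting the server.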

restart nfs-server:

systemctl restart nfs-server

check the exports on the NFS server node:

showmount -e localhost

Export list for localhost:

/data/disk1 *

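Since the firewall ports are open on all the master and worker nodes, you can now create PVs/PVCs that use the file system on this NFS server directly, as long as nfs-utils (step 2 below) is also installed on every node, because the kubelet mounts NFS volumes with the node's own NFS client.

If you want to mount the file system from this NFS server on other VMs, follow the steps below to install the NFS client and create a mount.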
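A minimal sketch of a statically provisioned PersistentVolume and a claim bound to it for this export. The name, size and server address are placeholders, and the function name is illustrative. ReadWriteMany is chosen because NFS can be mounted from several nodes at once, and `storageClassName: ""` keeps a default StorageClass (if you add one later) from provisioning a different volume for the claim.

```shell
#!/usr/bin/env bash
# Print a PersistentVolume backed by an NFS export plus a PVC bound to it.
# Usage: nfs_pv_manifest <name> <nfs-server> <export-path> <size> | kubectl apply -f -
nfs_pv_manifest() {
  local name="$1" server="$2" path="$3" size="$4"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  nfs:
    server: ${server}
    path: ${path}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""
  volumeName: ${name}
  resources:
    requests:
      storage: ${size}
EOF
}
```

For example, `nfs_pv_manifest web-data <nfs-server-ip> /data/disk1 10Gi | kubectl apply -f -`, then reference the claim `web-data-claim` from a pod's volumes.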

1) open firewall port 2049 on the NFS client node:

iptables -A INPUT -p tcp --sport 2049 -j ACCEPT

iptables -A OUTPUT -p tcp --dport 2049 -j ACCEPT

2) install nfs-utils:

yum install -y nfs-utils

3) check the exports on the NFS server:

showmount -e nfs-server-ip-address

Export list for 10.208.1xx.xxx:

/data/disk1 *

4) create a mount point

mkdir /web

5) mount the file system

mount 10.208.1xx.xxx:/data/disk1 /web

6) add the mount to /etc/fstab so that it is mounted again after a reboot

vi /etc/fstab

add the following entry:

10.208.1xx.xxx:/data/disk1 /web nfs defaults 0 0
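If you script this step, appending the entry only when it is not already there makes the script safe to re-run, and `mount -a` afterwards is a quick way to test the new line before the next reboot. A sketch; the function name is illustrative.

```shell
#!/usr/bin/env bash
# Append an NFS entry to an fstab-style file unless that source/mount point pair already exists.
add_nfs_fstab_entry() {
  local src="$1" mnt="$2" fstab="${3:-/etc/fstab}"
  if awk -v s="$src" -v m="$mnt" '$1 == s && $2 == m { found = 1 } END { exit !found }' "$fstab"; then
    return 0  # entry already present
  fi
  printf '%s %s nfs defaults 0 0\n' "$src" "$mnt" >> "$fstab"
}
```

Usage, as root: `add_nfs_fstab_entry <nfs-server-ip>:/data/disk1 /web && mount -a`.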