Big Data - Logstash

Logstash

Installing Logstash

(1) Download Logstash from https://www.elastic.co/cn/downloads/past-releases/logstash-7-1-0

(2) Extract the logstash-7.1.0.tar.gz archive

tar -xvzf logstash-7.1.0.tar.gz

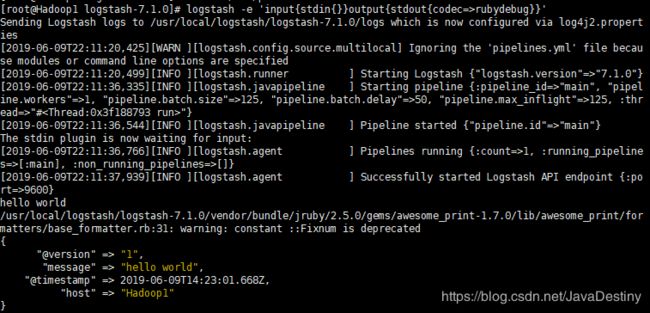

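(3) Start Logstash (if `logstash` is not on your PATH, run it as `bin/logstash` from the extracted logstash-7.1.0 directory)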

logstash -e 'input{stdin{}}output{stdout{codec=>rubydebug}}'

Input and Output Configuration

See the official documentation: https://www.elastic.co/guide/en/logstash/current/input-plugins.html

Input Configuration

(1)input_file.conf

    input {
        file {
            path => ["/root/message"]
            start_position => "beginning"
            type => "system"
        }
    }
    output { stdout { codec => rubydebug } }
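
The `file` input remembers how far it has read each file (Logstash stores this in a "sincedb" file), and `start_position` only matters for files it has not seen before. The Python sketch below imitates that idea under simplifying assumptions. The function `read_new_lines` and the JSON offset store are hypothetical names chosen for illustration; this is not how Logstash implements it internally.

```python
import json
import os
import tempfile


def read_new_lines(log_path, offsets_path, start_position="beginning"):
    """Return complete lines appended to log_path since the previous call.

    Offsets live in a small JSON file (a stand-in for Logstash's sincedb).
    """
    offsets = {}
    if os.path.exists(offsets_path):
        with open(offsets_path, encoding="utf-8") as fh:
            offsets = json.load(fh)

    if log_path in offsets:
        offset = offsets[log_path]           # file seen before: resume there
    elif start_position == "beginning":
        offset = 0                           # new file: read from the start
    else:
        offset = os.path.getsize(log_path)   # new file: only future lines

    with open(log_path, "rb") as fh:
        fh.seek(offset)
        data = fh.read()

    # Consume only complete lines; a partial last line is left for next time.
    end = data.rfind(b"\n") + 1
    lines = data[:end].decode("utf-8").splitlines()

    offsets[log_path] = offset + end
    with open(offsets_path, "w", encoding="utf-8") as fh:
        json.dump(offsets, fh)
    return lines


def demo():
    """Write two lines, read them, append a third, then read again."""
    with tempfile.TemporaryDirectory() as tmp:
        log_path = os.path.join(tmp, "message")
        offsets_path = os.path.join(tmp, "offsets.json")
        with open(log_path, "w", encoding="utf-8") as fh:
            fh.write("first\nsecond\n")
        first_read = read_new_lines(log_path, offsets_path)
        with open(log_path, "a", encoding="utf-8") as fh:
            fh.write("third\n")
        second_read = read_new_lines(log_path, offsets_path)
    return first_read, second_read
```

Calling `demo()` reads the first two lines once and then only the appended line, which is why restarting Logstash with the same sincedb does not replay a file even when `start_position` is `"beginning"`.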

(2)input_stdin.conf

    input {
        stdin {
            add_field => { "key" => "value" }
            codec => "plain"
            tags => ["add"]
            type => "std"
        }
    }
    output { stdout { codec => rubydebug } }

(3)input_filter.conf

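    input {
        stdin {
            type => "web"
        }
    }
    filter {
        # Conditionals compare with ==, and the grok pattern must be a quoted string
        if [type] == "web" {
            grok {
                match => { "message" => "%{COMBINEDAPACHELOG}" }
            }
        }
    }
    output {
        # Events that grok could not parse carry the _grokparsefailure tag
        if "_grokparsefailure" in [tags] {
            nagios_nsca {
                nagios_status => "1"
            }
        } else {
            elasticsearch {
            }
        }
    }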
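
To make the control flow of this configuration easier to follow, here is a minimal Python sketch of the same idea: parse only events of type `web`, tag the ones that fail, and route on that tag. The regular expression is a simplified stand-in for `%{COMBINEDAPACHELOG}` (the real grok pattern is more permissive), and `apply_filter` and `route` are hypothetical helper names.

```python
import re

# Simplified stand-in for %{COMBINEDAPACHELOG}; an assumption for illustration.
COMBINED = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\S+) (?P<request>\S+) HTTP/(?P<httpversion>[\d.]+)" '
    r'(?P<response>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def apply_filter(event):
    """Mimic the filter block: parse 'web' events, tag the failures."""
    if event.get("type") == "web":
        match = COMBINED.match(event["message"])
        if match:
            event.update(match.groupdict())
        else:
            event.setdefault("tags", []).append("_grokparsefailure")
    return event


def route(event):
    """Mimic the output block: failures go to nagios_nsca, the rest to elasticsearch."""
    if "_grokparsefailure" in event.get("tags", []):
        return "nagios_nsca"
    return "elasticsearch"
```

A well-formed access log line ends up routed to `elasticsearch` with fields such as `clientip` and `response`; any other input is tagged and routed to `nagios_nsca`.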

(4)input_codec.conf

    input {
        stdin {
            add_field => { "key" => "value" }
            codec => "json"
            type => "std"
        }
    }
    output { stdout { codec => rubydebug } }
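
With the `json` codec, each input line is decoded into event fields instead of being stored as one `message` string; a line that is not valid JSON is kept as plain text and tagged `_jsonparsefailure`. The Python sketch below illustrates that behaviour under simplifying assumptions: `decode_event` is a hypothetical helper, it handles JSON objects only, and it assumes `add_field` keys do not collide with decoded fields.

```python
import json


def decode_event(line, add_field=None, event_type=None):
    """Turn one input line into an event dict, roughly like codec => "json"."""
    try:
        decoded = json.loads(line)
        if not isinstance(decoded, dict):
            # Simplification: anything other than a JSON object is treated
            # like a parse failure in this sketch.
            raise ValueError("this sketch only handles JSON objects")
        event = dict(decoded)
    except ValueError:
        # Fall back to plain text and tag the event.
        event = {"message": line, "tags": ["_jsonparsefailure"]}

    event.update(add_field or {})
    if event_type is not None:
        # An input does not override a type the event already carries.
        event.setdefault("type", event_type)
    return event
```

For example, `decode_event('{"user": "amy"}', {"key": "value"}, "std")` yields the fields `user`, `key`, and `type`, while `decode_event("not json", ...)` keeps the text in `message` and adds the failure tag.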

(5)input_geoip.conf

    input {
        stdin {
            type => "std"
        }
    }
    filter {
        geoip {
            source => "message"
        }
    }
    output { stdout { codec => rubydebug } }

(6)input_grok.conf

    # The grok plugin is suited to syslog, Apache and other web server logs, MySQL logs, and similar formats
    # Built-in patterns: https://github.com/logstash-plugins/logstash-patterns-core/tree/master/patterns
    input {
        stdin {
            type => "std"
        }
    }
    filter {
        grok {
            # Syntax is %{PATTERN_NAME:field_name}
            match => { "message" => "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}" }
        }
    }
    output { stdout { codec => rubydebug } }
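
Each `%{PATTERN:field}` reference in a grok expression behaves like a named capture group. As a rough illustration, the Python sketch below hand-writes approximate regular expressions for the five patterns used above; they are simplified assumptions, not the actual definitions shipped with Logstash, and `parse` is a hypothetical helper.

```python
import re

# Approximate equivalents of IP, WORD, URIPATHPARAM and NUMBER (assumptions).
LINE = re.compile(
    r"(?P<client>\d{1,3}(?:\.\d{1,3}){3}) "
    r"(?P<method>\w+) "
    r"(?P<request>/\S*) "
    r"(?P<bytes>\d+(?:\.\d+)?) "
    r"(?P<duration>\d+(?:\.\d+)?)"
)


def parse(message):
    """Return the captured fields as strings, or None when the line does not match."""
    match = LINE.search(message)
    return match.groupdict() if match else None
```

Feeding it a line such as `55.3.244.1 GET /index.html 15824 0.043` produces the fields `client`, `method`, `request`, `bytes`, and `duration`. As with grok, every captured value is a string unless it is converted explicitly.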

(7)input_pattern.conf

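    # Custom pattern: save this line in a file inside the ./patterns directory (for example ./patterns/postfix)
    POSTFIX_QUEUEID [0-9A-F]{10,11}

    # Pipeline configuration
    input {
        stdin {
            type => "std"
        }
    }
    filter {
        grok {
            # The option is patterns_dir; it lists directories that hold custom pattern files
            patterns_dir => ["patterns"]
            match => { "message" => "%{SYSLOGBASE} %{POSTFIX_QUEUEID:queue_id}: %{GREEDYDATA:syslog_message}" }
        }
    }
    output { stdout { codec => rubydebug } }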
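
A custom pattern is just a name bound to a regular expression, which grok substitutes wherever `%{NAME:field}` appears. The Python sketch below shows that substitution step in miniature. It assumes the pattern body `[0-9A-F]{10,11}` used in the grok filter documentation's Postfix example, approximates `GREEDYDATA` as `.*`, and leaves out `SYSLOGBASE`; `expand` and `parse` are hypothetical helpers.

```python
import re

# Pattern table: one custom entry plus an approximation of a built-in one.
PATTERNS = {
    "POSTFIX_QUEUEID": r"[0-9A-F]{10,11}",
    "GREEDYDATA": r".*",
}

# Matches %{NAME} or %{NAME:field}
REFERENCE = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(expression):
    """Replace each %{NAME:field} with a named group built from PATTERNS."""
    def substitute(match):
        name, field = match.group(1), match.group(2)
        body = PATTERNS[name]
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return REFERENCE.sub(substitute, expression)


def parse(line):
    """Extract queue_id and syslog_message from a Postfix-style log line."""
    regex = expand("%{POSTFIX_QUEUEID:queue_id}: %{GREEDYDATA:syslog_message}")
    match = re.search(regex, line)
    return match.groupdict() if match else None
```

Here `expand` turns the expression into `(?P<queue_id>[0-9A-F]{10,11}): (?P<syslog_message>.*)`, so a line containing `BEF25A72965: message-id=<...>` yields `queue_id` equal to `BEF25A72965`.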

Output Configuration

output_file.conf

    input {
        stdin {
            type => "std"
        }
    }
    output {
        file {
            path => "./logs/%{+yyyy}/%{+MM}/%{+dd}/%{host}.log"
            codec => line { format => "custom format: %{message}" }
        }
    }
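
The `path` and `format` strings above use Logstash's `%{...}` references: `%{field}` is replaced by an event field, and `%{+FORMAT}` by the event's `@timestamp` rendered with a date format. The Python sketch below imitates that expansion for the handful of date tokens used in this section. The token table and the `expand` helper are assumptions for illustration and cover far less than Logstash does.

```python
import re
from datetime import datetime, timezone

# Only the date tokens that appear in this section are mapped (assumption).
DATE_TOKENS = {"yyyy": "%Y", "YYYY": "%Y", "MM": "%m", "dd": "%d"}


def expand(template, event):
    """Expand %{field} and %{+DATEFORMAT} references against an event dict."""
    timestamp = event["@timestamp"]

    def substitute(match):
        key = match.group(1)
        if key.startswith("+"):
            fmt = re.sub(
                r"yyyy|YYYY|MM|dd",
                lambda token: DATE_TOKENS[token.group(0)],
                key[1:],
            )
            return timestamp.strftime(fmt)
        # A reference to a missing field is left in the output unchanged.
        return str(event.get(key, match.group(0)))

    return re.sub(r"%\{([^}]+)\}", substitute, template)


EVENT = {
    "@timestamp": datetime(2019, 5, 20, 23, 30, tzinfo=timezone.utc),
    "host": "node1",
    "message": "hi",
}
```

With `EVENT`, the path template resolves to `./logs/2019/05/20/node1.log`, so each host gets one file per day under a year/month/day directory tree.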

Transferring Files Between Servers

Configuration on the receiving log server

    input {
        tcp {
            mode => "server"
            port => 9600
            ssl_enable => false
        }
    }
    filter {
        # Each received line is a JSON document produced by the sender
        json {
            source => "message"
        }
    }
    output {
        file {
            path => "/logs/%{+YYYY-MM-dd} %{servip}-%{filename}"
            codec => line { format => "%{message}" }
        }
    }

Configuration on the sending log server

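    input {
        file {
            path => ["/root/send.log"]
            type => "ecolog"
            start_position => "beginning"
        }
    }
    filter {
        if [type] =~ /^ecolog/ {
            ruby {
                # Take the last path component as the file name; replace RECEIVER_IP with the receiver's IP
                code => "file_name = event.get('path').split('/')[-1]
                         event.set('file_name', file_name)
                         event.set('servip', 'RECEIVER_IP')"
            }
            mutate {
                rename => { "file_name" => "filename" }
            }
        }
    }
    output {
        tcp {
            # Replace RECEIVER_IP with the receiver's IP
            host => "RECEIVER_IP"
            port => 9600
            codec => json_lines
        }
    }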
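
The two configurations above form a simple protocol: the sender adds `filename` and `servip` to every event and writes one JSON document per line over TCP, and the receiver parses each line back into fields. The Python sketch below reproduces that exchange on the local machine so the wire format is easy to see. All helper names are hypothetical, and port 0 (any free port) is used in place of 9600.

```python
import json
import socket
import socketserver
import threading


class JsonLinesHandler(socketserver.StreamRequestHandler):
    """Receiver side: one JSON document per line (tcp input plus json filter)."""

    def handle(self):
        for raw in self.rfile:
            self.server.events.append(json.loads(raw.decode("utf-8")))


def send_events(host, port, path, lines, servip):
    """Sender side: attach filename and servip, then write newline-delimited JSON."""
    filename = path.split("/")[-1]  # last path component, as in the ruby filter
    with socket.create_connection((host, port)) as conn:
        for line in lines:
            event = {
                "message": line,
                "path": path,
                "filename": filename,
                "servip": servip,
            }
            conn.sendall((json.dumps(event) + "\n").encode("utf-8"))


def demo():
    """Run receiver and sender in one process and return the received events."""
    with socketserver.TCPServer(("127.0.0.1", 0), JsonLinesHandler) as server:
        server.events = []
        # Handle exactly one connection in a background thread.
        worker = threading.Thread(target=server.handle_request)
        worker.start()
        send_events(
            "127.0.0.1",
            server.server_address[1],
            "/root/send.log",
            ["line one", "line two"],
            "127.0.0.1",
        )
        worker.join()
        return server.events
```

Because the original text travels inside the `message` field of each JSON document, the receiver can write it out unchanged with `format=>"%{message}"` while using `servip` and `filename` only to build the output path.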

Writing to Elasticsearch

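    input {
        stdin {
            type => "log2es"
        }
    }
    output {
        elasticsearch {
            hosts => ["192.168.138.130:9200"]
            # One index per event type per day
            index => "logstash-%{type}-%{+YYYY.MM.dd}"
            # document_type is deprecated in this plugin because Elasticsearch is removing mapping types
            document_type => "%{type}"
            sniffing => true
            template_overwrite => true
        }
    }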

Writing error logs to Elasticsearch

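    input {
        file {
            path => ["/logs/error.log"]
            type => "error"
            start_position => "beginning"
        }
    }
    output {
        elasticsearch {
            hosts => ["192.168.138.130:9200"]
            # One index per event type per day
            index => "logstash-%{type}-%{+YYYY.MM.dd}"
            # document_type is deprecated in this plugin because Elasticsearch is removing mapping types
            document_type => "%{type}"
            sniffing => true
            template_overwrite => true
        }
    }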
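
Both Elasticsearch outputs above name the target index from the event's `type` and the date of its `@timestamp`, which Logstash keeps in UTC. The Python sketch below shows how such an index name could be derived and what a matching request body for the Elasticsearch bulk API looks like (one action line followed by one document line per event). The helpers `index_name` and `bulk_body` are hypothetical, and the body omits `_type` and other options the plugin may send.

```python
import json
from datetime import datetime, timedelta, timezone


def index_name(event):
    """Build logstash-<type>-<UTC date>, mirroring "logstash-%{type}-%{+YYYY.MM.dd}"."""
    utc = event["@timestamp"].astimezone(timezone.utc)
    return f"logstash-{event['type']}-{utc:%Y.%m.%d}"


def bulk_body(events):
    """Render events as newline-delimited JSON for the Elasticsearch bulk API."""
    lines = []
    for event in events:
        utc = event["@timestamp"].astimezone(timezone.utc)
        source = {**event, "@timestamp": utc.isoformat()}
        lines.append(json.dumps({"index": {"_index": index_name(event)}}))
        lines.append(json.dumps(source))
    return "\n".join(lines) + "\n"


# An event logged at 07:30 on 21 May in a UTC+8 zone is still 20 May in UTC.
SAMPLE = {
    "@timestamp": datetime(2019, 5, 21, 7, 30, tzinfo=timezone(timedelta(hours=8))),
    "type": "error",
    "message": "disk full",
}
```

The sample event lands in `logstash-error-2019.05.20` rather than the index for 21 May, which is worth keeping in mind when searching daily indices from a non-UTC time zone.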