[OpenCV4 Hardcore Study] I. Image and Video I/O Module

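Contents

- I. Image and Video I/O Module

- 1. The imshow, imread, and imwrite functions

- 2. Color space conversion function: cvtColor

- 3. Creating images

- 4. Image creation and assignment

- 5. Reading and writing image pixels

- 6. Saving image pixel values to an .xls file

- 7. Pixel arithmetic operations

- 8. Using a LUT (Look Up Table)

- 9. Pixel operations: logical operations

- 10. Channel splitting and merging

- 11. Color spaces

- 12. Pixel statistics

- 13. Pixel normalization

- 14. Reading and writing video (to be added later)

- 15. Image flipping

- 16. Image interpolation (skipped for now)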

I. Image and Video I/O Module

1. The imshow, imread, and imwrite functions

Common code for reading an image

Mat src = imread("C:\\Users\\Lenovo\\Desktop\\OpenCV4代码集\\titan.jpg");

if (src.empty()) {

printf("could not load image...\n");

return -1;

}

// WINDOW_AUTOSIZE is the OpenCV 4 name; CV_WINDOW_AUTOSIZE belongs to the legacy C API
namedWindow("input", WINDOW_AUTOSIZE);

imshow("input", src);

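2. Color space conversion function: cvtColor

COLOR_BGR2GRAY = 6    color to grayscale

COLOR_GRAY2BGR = 8    grayscale to color

COLOR_BGR2HSV = 40    BGR to HSV

COLOR_HSV2BGR = 54    HSV to BGR

The numbers have no special meaning; they are simply the values of the enum constants.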

3. Creating images

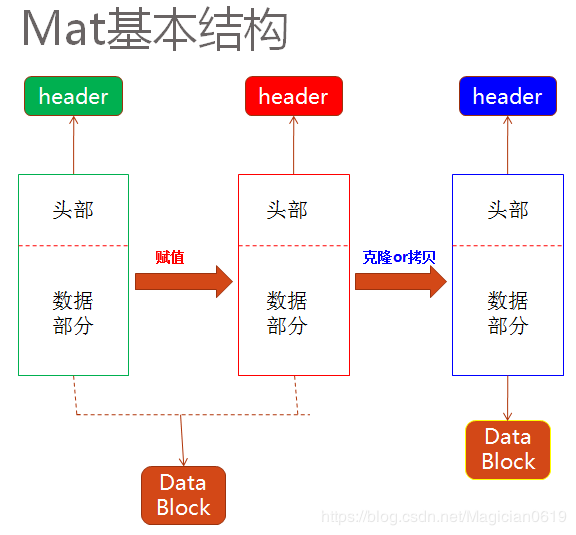

// Creation by cloning (deep copy)

Mat m1 = src.clone();

// Copying (deep copy)

Mat m2;

src.copyTo(m2);

// Assignment (shallow copy: m3 shares its pixel data with src)

Mat m3 = src;

// Creating blank images

Mat m4 = Mat::zeros(src.size(), src.type());

Mat m5 = Mat::zeros(Size(512, 512), CV_8UC3);

// Note: for a multi-channel type, Mat::ones sets only the first channel to 1
Mat m6 = Mat::ones(Size(512, 512), CV_8UC3);

// Specifying the values directly: a 3x3 image with 9 elements

Mat kernel = (Mat_<char>(3, 3)

<< 0, -1, 0,

-1, 5, -1,

0, -1, 0);

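4. Image creation and assignment

5. Reading and writing image pixels

Only two approaches are covered here: direct access and pointer access.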

// Direct pixel access with at<>()
int height = src.rows;
int width = src.cols;
int ch = src.channels(); // number of channels
// A single pass over the pixels is enough: all channels of a pixel are handled together,
// so no extra loop over the channel index is needed.
for (int row = 0; row < height; row++) {
    for (int col = 0; col < width; col++) {
        if (ch == 3) {
            Vec3b bgr = src.at<Vec3b>(row, col);
            bgr[0] = 255 - bgr[0];
            bgr[1] = 255 - bgr[1];
            bgr[2] = 255 - bgr[2];
            src.at<Vec3b>(row, col) = bgr;
        }
        else if (ch == 1) {
            int gray = src.at<uchar>(row, col);
            src.at<uchar>(row, col) = 255 - gray;
        }
    }
}
imshow("output", src);

// Pointer access: copy src into result row by row
Mat result = Mat::zeros(src.size(), src.type());
int blue = 0, green = 0, red = 0;
int gray;
// As above, one pass over the rows is enough; no loop over the channel index is needed.
for (int row = 0; row < height; row++) {
    uchar* curr_row = src.ptr<uchar>(row);      // first byte of the source row
    uchar* result_row = result.ptr<uchar>(row); // first byte of the destination row
    for (int col = 0; col < width; col++) {
        if (ch == 3) {
            blue = *curr_row++;
            green = *curr_row++;
            red = *curr_row++;
            *result_row++ = blue;
            *result_row++ = green;
            *result_row++ = red;
        }
        else if (ch == 1) {
            gray = *curr_row++;
            *result_row++ = gray;
        }
    }
}
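To make the pointer idea concrete without depending on OpenCV, here is a minimal sketch using a hypothetical `Image` struct (not an OpenCV type). It assumes 8-bit data, interleaved channels, and rows stored back to back with no padding; a real `Mat` may have row padding, which is why `ptr<uchar>(row)` should be used there instead of manual offsets.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical minimal image: 8-bit, interleaved channels, no row padding.
struct Image {
    int rows, cols, channels;
    std::vector<std::uint8_t> data;  // rows * cols * channels bytes, zero-initialized

    Image(int r, int c, int ch)
        : rows(r), cols(c), channels(ch),
          data(static_cast<std::size_t>(r) * c * ch) {}

    // Pointer to the first byte of a row, similar in spirit to Mat::ptr<uchar>(row).
    std::uint8_t* rowPtr(int row) {
        return data.data() + static_cast<std::size_t>(row) * cols * channels;
    }
};

// Invert every channel of every pixel in one pass per row.
void invert(Image& img) {
    for (int row = 0; row < img.rows; ++row) {
        std::uint8_t* p = img.rowPtr(row);
        for (int i = 0; i < img.cols * img.channels; ++i) {
            p[i] = static_cast<std::uint8_t>(255 - p[i]);
        }
    }
}
```

Within a row, the byte for column `col` and channel `c` sits at offset `col * channels + c`, which is exactly what the `*curr_row++` sequence above walks through.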

6. Saving image pixel values to an .xls file

Changing .xls to .txt writes the values to a plain text file instead.
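As a rough sketch of the idea, and assuming the values are written as plain tab-separated text through a stream, the following standard-library code writes one image row per line. The helper names `writePixelTable` and `pixelTableToString` are made up for illustration; the pixel grid is assumed to have been read beforehand, for example with `src.at<uchar>(row, col)`.

```cpp
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Write one image row per text line, with values separated by tabs.
void writePixelTable(std::ostream& out, const std::vector<std::vector<int>>& pixels) {
    for (const auto& row : pixels) {
        for (std::size_t col = 0; col < row.size(); ++col) {
            if (col > 0) out << '\t';
            out << row[col];
        }
        out << '\n';
    }
}

// Illustration helper: return the same table as a string.
std::string pixelTableToString(const std::vector<std::vector<int>>& pixels) {
    std::ostringstream oss;
    writePixelTable(oss, pixels);
    return oss.str();
}
```

To write a file, pass a `std::ofstream` opened on a name such as `pixels.xls` or `pixels.txt` to `writePixelTable`; the content is the same text either way, only the extension differs.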

7. Pixel arithmetic operations

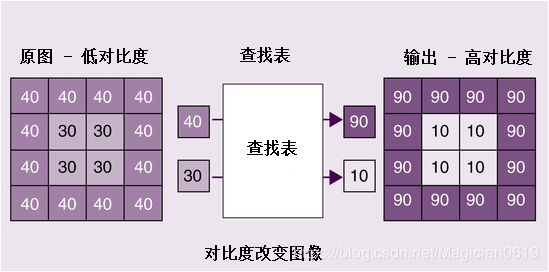
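One detail worth keeping in mind for 8-bit images is that OpenCV's arithmetic functions (`add`, `subtract`, `multiply`) saturate rather than wrap around. The sketch below shows that behavior per pixel in plain C++; the function names are illustrative and this is not OpenCV's implementation.

```cpp
#include <algorithm>
#include <cstdint>

// Clamp an intermediate integer result to the 8-bit range [0, 255].
std::uint8_t saturate(int v) {
    return static_cast<std::uint8_t>(std::min(255, std::max(0, v)));
}

// Per-pixel saturating arithmetic on 8-bit values.
std::uint8_t addPixel(std::uint8_t a, std::uint8_t b) { return saturate(int(a) + int(b)); }
std::uint8_t subPixel(std::uint8_t a, std::uint8_t b) { return saturate(int(a) - int(b)); }
std::uint8_t mulPixel(std::uint8_t a, std::uint8_t b) { return saturate(int(a) * int(b)); }
```

With plain `uchar` arithmetic, 200 + 100 would wrap around to 44; with saturation it stays at 255, which is usually what you want for image brightness.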

8. Using a LUT (Look Up Table)

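For the underlying principle, see this article. A LUT replaces per-pixel arithmetic (addition, subtraction, multiplication, division) with a table lookup and an assignment: the result for each of the 256 possible input values is computed once, which can make the algorithm considerably faster.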
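The idea can be sketched in a few lines of plain C++. The mapping used here (inversion, 255 - v) is just an example chosen for illustration, and the function names are hypothetical; OpenCV's own `LUT` function applies a table you supply in a similar spirit.

```cpp
#include <array>
#include <cstdint>
#include <vector>

// Compute the mapping once for all 256 possible 8-bit values.
std::array<std::uint8_t, 256> buildInvertTable() {
    std::array<std::uint8_t, 256> table{};
    for (int v = 0; v < 256; ++v) {
        table[v] = static_cast<std::uint8_t>(255 - v);
    }
    return table;
}

// Apply the table: each pixel becomes a single lookup and assignment.
void applyLut(std::vector<std::uint8_t>& pixels, const std::array<std::uint8_t, 256>& table) {
    for (auto& p : pixels) {
        p = table[p];
    }
}
```

The more expensive the per-pixel formula (gamma correction, for example), the more a precomputed table pays off, since the formula runs 256 times instead of once per pixel.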

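9. Pixel operations: logical operations

- bitwise_and

- bitwise_xor

- bitwise_or

The three functions above are similar: each performs a bitwise operation on two images.

- bitwise_not

Operates on a single input image and inverts it; frequently used in binary image analysis.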

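Note: these functions work on the individual bits of each channel value, so the AND of two non-zero values is not simply the larger of the two. For example, 240 AND 60 = 48. An AND result is never greater than either input, and an OR result is never smaller than either input.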
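A small plain C++ sketch makes the per-bit behavior easy to check. It assumes both inputs are 8-bit buffers of equal length; the function names mirror the OpenCV ones but are written here only for illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

using Pixels = std::vector<std::uint8_t>;

// Combine two equally sized buffers element by element with the given operation.
template <typename Op>
Pixels combine(const Pixels& a, const Pixels& b, Op op) {
    Pixels out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        out[i] = static_cast<std::uint8_t>(op(a[i], b[i]));
    }
    return out;
}

Pixels bitwiseAnd(const Pixels& a, const Pixels& b) { return combine(a, b, [](int x, int y) { return x & y; }); }
Pixels bitwiseOr(const Pixels& a, const Pixels& b)  { return combine(a, b, [](int x, int y) { return x | y; }); }
Pixels bitwiseXor(const Pixels& a, const Pixels& b) { return combine(a, b, [](int x, int y) { return x ^ y; }); }

// Invert every bit of every value (equivalent to 255 - v for 8-bit data).
Pixels bitwiseNot(const Pixels& a) {
    Pixels out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        out[i] = static_cast<std::uint8_t>(~a[i]);
    }
    return out;
}
```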

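10. Channel splitting and merging

- split // splits a multi-channel image into separate single-channel planes

- merge // merges single-channel planes back into one multi-channel image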
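What the two operations do to the underlying memory can be sketched as follows, assuming an interleaved 8-bit buffer (B, G, R, B, G, R, ...). The functions `splitChannels` and `mergeChannels` are illustrative stand-ins, not OpenCV APIs.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Split an interleaved buffer into one plane per channel.
std::vector<std::vector<std::uint8_t>> splitChannels(
        const std::vector<std::uint8_t>& interleaved, int channels) {
    std::vector<std::vector<std::uint8_t>> planes(channels);
    for (std::size_t i = 0; i < interleaved.size(); ++i) {
        planes[i % channels].push_back(interleaved[i]);
    }
    return planes;
}

// Merge equally sized planes back into one interleaved buffer.
std::vector<std::uint8_t> mergeChannels(
        const std::vector<std::vector<std::uint8_t>>& planes) {
    std::vector<std::uint8_t> interleaved;
    if (planes.empty()) return interleaved;
    for (std::size_t p = 0; p < planes[0].size(); ++p) {
        for (const auto& plane : planes) {
            interleaved.push_back(plane[p]);
        }
    }
    return interleaved;
}
```

A common use is to split, modify one plane (for instance zero out the blue channel), and merge again.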

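11. Color spaces

- RGB

RGB is an additive color space: colors are obtained as a linear combination of red, green, and blue values. The three channels are correlated through the amount of light hitting the surface.

- HSV

The HSV color space has the following three components:

1. H: hue (dominant wavelength). A single channel describes the color, which makes color description fairly intuitive.

2. S: saturation (purity / shade of the color).

3. V: value (intensity).

- YUV

- YCrCb

The YCrCb color space is derived from RGB and has the following three components:

1. Y: the luminance, or luma, component obtained from RGB after gamma correction.

2. Cr = R - Y (how far the red component is from the luma).

3. Cb = B - Y (how far the blue component is from the luma).

This color space has the following properties:

1. It separates the luminance and chrominance components into different channels.

2. It is mainly used for compression (of the Cr and Cb components) in TV transmission.

3. It is device dependent.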

// BGR to HSV (imread returns channels in BGR order)

Mat hsv;

cvtColor(src, hsv, COLOR_BGR2HSV);

imshow("hsv", hsv);

// BGR to YUV

Mat yuv;

cvtColor(src, yuv, COLOR_BGR2YUV);

imshow("yuv", yuv);

// BGR to YCrCb

Mat ycrcb;

cvtColor(src, ycrcb, COLOR_BGR2YCrCb);

imshow("ycrcb", ycrcb);
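To connect the Cr = R - Y and Cb = B - Y descriptions with actual numbers, here is a floating-point sketch of the 8-bit conversion using the coefficients given in the OpenCV color conversion documentation (Y = 0.299 R + 0.587 G + 0.114 B, Cr = (R - Y) * 0.713 + 128, Cb = (B - Y) * 0.564 + 128). The struct and function names are hypothetical, and OpenCV's own fixed-point code may differ by a unit in some cases.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

struct YCrCb {
    std::uint8_t y, cr, cb;
};

// Round and clamp a floating-point value to the 8-bit range.
static std::uint8_t clampToByte(double v) {
    return static_cast<std::uint8_t>(std::min(255.0, std::max(0.0, std::round(v))));
}

// Convert one 8-bit BGR pixel to YCrCb.
YCrCb bgrToYCrCb(std::uint8_t b, std::uint8_t g, std::uint8_t r) {
    const double delta = 128.0;  // offset that centers the chroma channels for 8-bit data
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;
    return {clampToByte(y),
            clampToByte((r - y) * 0.713 + delta),
            clampToByte((b - y) * 0.564 + delta)};
}
```

Any gray pixel (B = G = R) lands on Cr = Cb = 128, which shows how the color information is separated from the brightness.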

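12. Pixel statistics

// minMaxLoc with location outputs expects a single-channel image (for example one loaded with IMREAD_GRAYSCALE)
double minVal; double maxVal; Point minLoc; Point maxLoc;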

minMaxLoc(src, &minVal, &maxVal, &minLoc, &maxLoc, Mat());

printf("min: %.2f, max: %.2f \n", minVal, maxVal);

printf("min loc: (%d, %d) \n", minLoc.x, minLoc.y);

printf("max loc: (%d, %d)\n", maxLoc.x, maxLoc.y);

// Color image: per-channel mean and standard deviation of the three channels

src = imread("C:\\Users\\Lenovo\\Desktop\\OpenCV4代码集\\eg.jpg");

Mat means, stddev;

meanStdDev(src, means, stddev);

printf("blue channel->> mean: %.2f, stddev: %.2f\n", means.at<double>(0, 0), stddev.at<double>(0, 0));

printf("green channel->> mean: %.2f, stddev: %.2f\n", means.at<double>(1, 0), stddev.at<double>(1, 0));

printf("red channel->> mean: %.2f, stddev: %.2f\n", means.at<double>(2, 0), stddev.at<double>(2, 0));
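For reference, the quantities printed above can be computed by hand as in the following sketch for a single channel. It divides by N (population standard deviation); the names `MeanStd` and `meanStdDevOf` are made up for this example.

```cpp
#include <cmath>
#include <vector>

struct MeanStd {
    double mean;
    double stddev;
};

// Mean and population standard deviation of one channel's values.
MeanStd meanStdDevOf(const std::vector<double>& values) {
    if (values.empty()) return {0.0, 0.0};
    double sum = 0.0;
    for (double v : values) sum += v;
    const double mean = sum / values.size();
    double squared = 0.0;
    for (double v : values) squared += (v - mean) * (v - mean);
    return {mean, std::sqrt(squared / values.size())};
}
```

A small standard deviation means the channel is nearly uniform, which is a quick way to spot blank or low-contrast images.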

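13. Pixel normalization

Normalization is typically needed in the following two situations:

1. The range of the data is constrained, for example when training data must lie between 0 and 1.

2. The data distribution is stable, with no extreme maxima or minima far from the center.

OpenCV provides four normalization methods:

- NORM_MINMAX

- NORM_INF

- NORM_L1

- NORM_L2

The most commonly used one is NORM_MINMAX.

Related API function: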

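normalize(
    InputArray src,              // input image
    InputOutputArray dst,        // output image
    double alpha = 1,            // lower bound for NORM_MINMAX; otherwise the target norm value
    double beta = 0,             // upper bound for NORM_MINMAX; unused by the other norm types
    int norm_type = NORM_L2,     // with NORM_INF, NORM_L1, and NORM_L2 only alpha is used
    int dtype = -1,              // negative: output has the same type as src
    InputArray mask = noArray()  // optional mask, empty by default
)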

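After MinMax and INF normalization the maximum value is exactly 1, so multiplying by 255 rescales all values to the 0-255 range. L1 and L2 normalization only guarantee results within 0-1; the maximum is generally not 1, so multiplying by 255 does not necessarily spread the values over 0-255. For those two we multiply by a much larger number instead. Some values may then exceed 255, but because the data is stored as bytes they are capped at 255. The factors 10000000 and 10000 used here were picked to match the scale of the normalized values for this particular image; they are not fixed and can be changed in your own experiments, as long as the rescaled values end up in the 0-255 range.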
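The difference between the four methods is easier to see on a tiny example. The sketch below is plain C++ written for illustration (the enum `NormKind` and both functions are hypothetical, not OpenCV code); it normalizes to a unit target, that is, MinMax maps to [0, 1] and the other three divide by the corresponding norm.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>
#include <vector>

enum class NormKind { MinMax, Inf, L1, L2 };

// Normalize values to a unit target with the chosen method.
std::vector<double> normalizeValues(const std::vector<double>& v, NormKind kind) {
    std::vector<double> out(v.size(), 0.0);
    if (v.empty()) return out;
    if (kind == NormKind::MinMax) {
        const double lo = *std::min_element(v.begin(), v.end());
        const double hi = *std::max_element(v.begin(), v.end());
        if (hi == lo) return out;  // constant input: leave everything at 0
        for (std::size_t i = 0; i < v.size(); ++i) out[i] = (v[i] - lo) / (hi - lo);
        return out;
    }
    double norm = 0.0;
    for (double x : v) {
        if (kind == NormKind::Inf) norm = std::max(norm, std::abs(x));  // largest magnitude
        else if (kind == NormKind::L1) norm += std::abs(x);             // sum of magnitudes
        else norm += x * x;                                             // sum of squares
    }
    if (kind == NormKind::L2) norm = std::sqrt(norm);
    if (norm == 0.0) return out;
    for (std::size_t i = 0; i < v.size(); ++i) out[i] = v[i] / norm;
    return out;
}

// Multiply by a scale factor and saturate to the 8-bit range.
std::uint8_t scaleToByte(double value, double scale) {
    return static_cast<std::uint8_t>(std::min(255.0, std::max(0.0, std::round(value * scale))));
}
```

For the input {0, 3, 4}, MinMax and INF both end with a maximum of 1, while L1 gives 4/7 and L2 gives 0.8 as the largest value, which is why a plain factor of 255 does not reach full brightness for those two.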

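14. Reading and writing video (to be added later)

15. Image flipping

Image flipping is essentially a pixel remapping. OpenCV supports three flip modes:

- Flip around the x-axis (top and bottom swap), flipCode = 0

- Flip around the y-axis (left and right swap), flipCode = 1 (any positive value)

- Flip around both axes, flipCode = -1 (any negative value)

Related API

flip

- src: input image

- dst: flipped image

- flipCode: selects the flip mode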
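The pixel remapping behind the three modes can be written out directly. This sketch uses a nested vector as a stand-in for a single-channel image and a made-up function name `flipImage`; it follows the same flipCode convention as listed above.

```cpp
#include <cstddef>
#include <vector>

using Grid = std::vector<std::vector<int>>;

// flipCode == 0: flip around the x-axis (rows reversed)
// flipCode  > 0: flip around the y-axis (columns reversed)
// flipCode  < 0: flip around both axes
Grid flipImage(const Grid& src, int flipCode) {
    Grid dst = src;  // same shape as the input
    const std::size_t rows = src.size();
    for (std::size_t r = 0; r < rows; ++r) {
        const std::size_t cols = src[r].size();
        for (std::size_t c = 0; c < cols; ++c) {
            const std::size_t sr = (flipCode <= 0) ? rows - 1 - r : r;  // source row
            const std::size_t sc = (flipCode != 0) ? cols - 1 - c : c;  // source column
            dst[r][c] = src[sr][sc];
        }
    }
    return dst;
}
```

Flipping around both axes is the same as rotating the image by 180 degrees.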

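16. Image interpolation (skipped for now)

Image interpolation

The four most common interpolation algorithms:

INTER_NEAREST = 0

INTER_LINEAR = 1

INTER_CUBIC = 2

INTER_LANCZOS4 = 4

Typical use cases: geometric transforms, perspective transforms, and computing new pixel values in resize.

If size is set, resize scales the image to that size; otherwise the output size is computed from the scale factors fx and fy.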
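As a rough illustration of the size rule and of the simplest method (nearest neighbor), here is a plain C++ sketch. The types and functions (`Size2`, `outputSize`, `resizeNearest`) are hypothetical, the input is assumed to be a non-empty rectangular grid, and the index mapping is one common choice rather than a copy of OpenCV's implementation.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct Size2 {
    int width;
    int height;
};

using Grid = std::vector<std::vector<int>>;

// Use dsize when it is set; otherwise derive the output size from fx and fy.
Size2 outputSize(Size2 src, Size2 dsize, double fx, double fy) {
    if (dsize.width > 0 && dsize.height > 0) return dsize;
    return {static_cast<int>(std::lround(fx * src.width)),
            static_cast<int>(std::lround(fy * src.height))};
}

// Nearest-neighbor resize: each output pixel copies the closest source pixel.
Grid resizeNearest(const Grid& src, Size2 dsize, double fx, double fy) {
    const int srcH = static_cast<int>(src.size());
    const int srcW = static_cast<int>(src[0].size());
    const Size2 out = outputSize({srcW, srcH}, dsize, fx, fy);
    Grid dst(out.height, std::vector<int>(out.width, 0));
    for (int y = 0; y < out.height; ++y) {
        const int sy = std::min(srcH - 1, y * srcH / out.height);  // source row
        for (int x = 0; x < out.width; ++x) {
            const int sx = std::min(srcW - 1, x * srcW / out.width);  // source column
            dst[y][x] = src[sy][sx];
        }
    }
    return dst;
}
```

The other methods differ only in how they combine neighboring source pixels: bilinear uses a 2x2 neighborhood, bicubic 4x4, and Lanczos4 8x8.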

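References

- Image processing: three common bicubic interpolation algorithms

- Image scaling with bicubic interpolation

- Image scaling with bilinear interpolation

- Image processing: the Lanczos resampling scaling algorithm

Appendix:

- Scalar is a struct backed by an array of length 4. It can hold up to four values, and any value not provided defaults to 0. It bundles the per-channel values into a single unit that can be assigned to matrix elements with the same number of channels.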