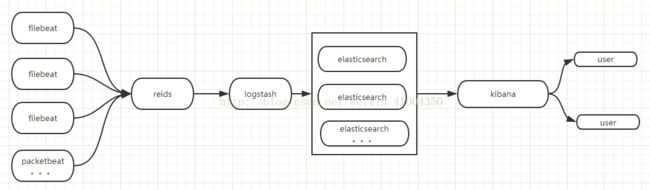

ELK

ELK architecture diagram

Official download page: https://www.elastic.co/cn/downloads/past-releases

elasticsearch-head:https://github.com/mobz/elasticsearch-head

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-5.6.16-x86_64.rpm

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-5.6.16.rpm

wget https://artifacts.elastic.co/downloads/kibana/kibana-5.6.16-x86_64.rpm

wget https://artifacts.elastic.co/downloads/logstash/logstash-5.6.16.rpm

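1. Install: yum install ./*.rpm

2. elasticsearch and elasticsearch-head

Set the Java directory for elasticsearch by editing its sysconfig file. This is needed only when Java was installed manually; a Java installed with yum needs no setting.

vim /etc/sysconfig/elasticsearch

Set the Java directory:

JAVA_HOME=/usr/local/jdk1.8.0_171

Create the data and log directories and set their ownership: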

mkdir -p /service/es/{data,logs}

chown -R elasticsearch:elasticsearch /service/es/

vim /etc/elasticsearch/elasticsearch.yml

cluster.name: jettech-es

node.name: 10.30.30.117

path.data: /service/es/data

path.logs: /service/es/logs

network.host: 0.0.0.0

http.port: 9200

transport.host: 0.0.0.0

transport.tcp.port: 9300

# Allow elasticsearch-head to connect from the browser

http.cors.enabled: true

http.cors.allow-origin: "*"

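head:

# Install the EPEL repository

yum -y install epel-release

# Install npm, the git client, and openssl

yum -y install npm git openssl

# Clone the elasticsearch-head project locally (GitHub no longer serves the git:// protocol, so use https)

git clone https://github.com/mobz/elasticsearch-head.git

cd elasticsearch-head

# Install the project dependencies; this step takes a long time

npm install

# When the install finishes, a node_modules directory has been created

ls -ld node_modules/

Running `npm install` may fail with the following error:

npm: relocation error: npm: symbol SSL_set_cert_cb, version libssl.so.10 not defined in file libssl.so.10 with link time reference

This is caused by openssl not being installed, so install openssl beforehand.

# Start

nohup npm run start &

head: http://10.30.30.117:9100/

Check: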

[root@k8s-test-117 ~]# curl 127.0.0.1:9200

{

"name" : "10.30.30.117",

"cluster_name" : "jettech-es",

"cluster_uuid" : "bQLfZRB8SSyIcYpcMhUweA",

"version" : {

"number" : "5.6.16",

"build_hash" : "3a740d1",

"build_date" : "2019-03-13T15:33:36.565Z",

"build_snapshot" : false,

"lucene_version" : "6.6.1"

},

"tagline" : "You Know, for Search"

}
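The same check can be scripted. The following is a minimal Python sketch, assuming a response body like the one shown above; the function name `summarize_es_info` is hypothetical, and the commented-out HTTP call assumes Elasticsearch is reachable at 127.0.0.1:9200.

```python
import json


def summarize_es_info(body: str) -> dict:
    """Extract the fields worth checking from the Elasticsearch root response."""
    info = json.loads(body)
    return {
        "node": info["name"],
        "cluster": info["cluster_name"],
        "version": info["version"]["number"],
    }


# Sample body based on the curl output above, trimmed to the fields that are used.
sample = """
{
  "name": "10.30.30.117",
  "cluster_name": "jettech-es",
  "version": {"number": "5.6.16", "lucene_version": "6.6.1"},
  "tagline": "You Know, for Search"
}
"""

summary = summarize_es_info(sample)
print(summary)

# Against a live node (assumption: it listens on 127.0.0.1:9200):
# from urllib.request import urlopen
# print(summarize_es_info(urlopen("http://127.0.0.1:9200").read().decode()))
```

If `cluster` or `node` differ from the values set in elasticsearch.yml, the service is probably still running with an older configuration.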

3. redis:

mkdir -p /mydata/redis

chown -R redis:redis /mydata/redis

vim /etc/redis.conf

bind 0.0.0.0

dir /mydata/redis

systemctl start redis

systemctl enable redis

4. filebeat:

vim /etc/filebeat/filebeat.yml

First comment out all of the preset configuration in this file, then add the following:

filebeat.prospectors:
- input_type: log
  paths:
    - /var/log/messages            # read data from a test file
  exclude_lines: ["^DBG"]
  document_type: system-log-0019

output.redis:                      # write to a redis queue
  enabled: true
  hosts: ["127.0.0.1:6379"]
  db: 1
  key: "elk_test_list"

#output.redis:
#  hosts: "10.30.30.144"
#  db: "2"
#  port: "6379"
#  password: "123456aA"
#  key: "filesystem-log-5612"

output.file:
  path: "/tmp"
  filename: "filebeat.txt"

output.elasticsearch:
  hosts: ['elasticsearch:9200']
  username: elastic
  password: changeme

output.logstash:
  hosts: ["10.30.30.117:5044"]     # logstash server address; several may be listed
  enabled: true                    # whether to output to logstash; enabled by default
  worker: 1                        # number of workers
  compression_level: 3             # compression level
  #loadbalance: true               # enable load balancing when several hosts are listed
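`exclude_lines` takes a list of regular expressions, and Filebeat drops every line that matches one of them. The Python sketch below imitates that behaviour for the `^DBG` pattern from the configuration; `keep_line` and the sample lines are hypothetical, and the sketch assumes plain search semantics for the patterns.

```python
import re

# Pattern copied from exclude_lines in filebeat.yml.
EXCLUDE_LINES = [re.compile(r"^DBG")]


def keep_line(line: str) -> bool:
    """Return True when no exclude pattern matches the line."""
    return not any(pattern.search(line) for pattern in EXCLUDE_LINES)


lines = [
    "DBG cache warmed up",
    "Jan  1 00:00:01 host sshd[42]: session opened",
    "WARN DBG appears mid-line, so ^DBG does not match",
]
kept = [line for line in lines if keep_line(line)]
print(kept)
```

Because the pattern is anchored with `^`, only lines that begin with `DBG` are dropped; a `DBG` later in the line is kept.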

systemctl start filebeat

systemctl enable filebeat

5. logstash:

[root@k8s-test-117 conf.d]# cat logstash-sample.conf

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {

redis { # read from the redis queue; must match the filebeat configuration above

host => "127.0.0.1"

port => 6379

db => 1

data_type => "list"

key => "elk_test_list"

threads => 3

}

}

filter { # parse the content; an IP and a string are used here as a test

grok {

match => { "message" => '^%{IP:myip} %{DATA:myname}$' }

}

}

output {

elasticsearch { # output to elasticsearch

hosts => ["127.0.0.1:9200"]

index => "test-log-%{+YYYY.MM.dd}"

}

}

#input {

# gelf {

# #mode => "server"

# #use_tcp => true

# #host => "10.30.30.134"

# port => 5044

# #type => "log4j2"

# }

#}

#input {

# tcp {

# #host => "10.30.30.134"

# port => 5044

# #mode => server

# codec => json

# }

#}

input {

beats {

port => 5044

#codec => json

}

}

#filter {

# mutate {

# lowercase => [ "logger", "level" ]

# }

#}

#filter {

# date {

# match => [ "timeMillis", "UNIX_MS" ]

# }

#}

output {

stdout {

codec => rubydebug

}

elasticsearch {

hosts => ["10.30.30.117:9200"]

index => "jettech-logstash"

#document_type => "%{level}"

template_overwrite => true

template => "/etc/logstash/template/user_action.json"

}

}
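To see what the test grok expression `'^%{IP:myip} %{DATA:myname}$'` accepts, here is a rough Python equivalent. It is a sketch under assumptions: the IP part is narrowed to IPv4 (grok's `IP` pattern also accepts IPv6), and `parse_line` and the sample messages are hypothetical.

```python
import re

# Simplified stand-in for '^%{IP:myip} %{DATA:myname}$'.
# Assumption: IPv4 only; DATA corresponds to a lazy ".*?".
LINE_RE = re.compile(r"^(?P<myip>(?:\d{1,3}\.){3}\d{1,3}) (?P<myname>.*?)$")


def parse_line(message: str):
    """Return the captured fields, or None when the line does not match."""
    match = LINE_RE.match(message)
    return match.groupdict() if match else None


print(parse_line("10.30.30.117 web-01"))
print(parse_line("not an ip"))
```

In Logstash, a message that does not match is not dropped; grok tags the event with `_grokparsefailure` and passes it on.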

[root@k8s-wubo-134 template]# cat user_action.json

{

"template": "**",

"settings": {

"index.number_of_shards": 3,

"number_of_replicas": 0

},

"mappings" : {

"doc" : {

"properties" : {

"user" : {

"type" : "string",

"index" : "not_analyzed"

},

"module" : {

"type" : "string",

"index" : "not_analyzed"

},

"operation" : {

"type" : "string",

"index" : "not_analyzed"

},

"fullOperation" : {

"type" : "string",

"index" : "not_analyzed"

},

"proceedTime" : {

"type" : "integer",

"index" : "not_analyzed"

},

"createDate" : {

"type" : "date"

},

"createDateL" : {

"type" : "integer" ,

"index" : "not_analyzed"

}

}

}

}

}

https://blog.csdn.net/justlpf/article/details/86138227

https://www.cnblogs.com/toSeek/p/8760837.html

https://www.cnblogs.com/minseo/p/10948632.html

#================================= input: kafka, output: es (example) =============================#

input {

kafka {

bootstrap_servers => ["10.101.15.163:9092"]

group_id => "deepcogni_qa"

topics => ["deepcogni"]

consumer_threads => 5

decorate_events => true

codec => "json"

}

}

output {

elasticsearch {

hosts => ["10.101.15.163:9200"]

index => "deepcogni:qa"

codec => "json"

}

}

#================================= input logfile, output es =============================#

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {

#beats {

# port => 5044

#}

file {

path => "/var/log/httpd/access_log"

start_position => beginning

}

}

output {

elasticsearch {

hosts => ["http://localhost:9200"]

index => "%{[@metadata][logstash]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"

#user => "elastic"

#password => "changeme"

}

}

#================================= input: log file, output: redis =============================#

input {

file {

path => [

# list the files to monitor here

"/data/log/php/php_fetal.log",

"/data/log/service1/access.log"

]

}

}

output {

# output to the console

# stdout { }

# output to redis

redis {

host => "10.140.45.190" # redis host address

port => 6379 # redis port

db => 8 # redis database number

data_type => "channel" # use publish/subscribe mode

key => "logstash_list_0" # name of the publish channel

}

}

#================================= input: redis, output: log file =============================#

input {

redis {

host => "10.140.45.190" # redis host address

port => 6379 # redis port

db => 8 # redis database number

data_type => "channel" # use publish/subscribe mode

key => "logstash_list_0" # channel name

}

}

output {

file {

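path => "/data/log/logstash/all.log" # path of the file to write

codec => line { format => "%{host} %{message}" } # output format; used instead of message_format, which is obsolete in the file output shipped with Logstash 5.x

flush_interval => 0 # flush interval; 0 writes every event immediately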

}

}

#================================= input examples =============================#

input {

file {

codec => json

path => [

"/opt/build/*.json"

]

}

}

input {

file {

path => [

# list the files to monitor here

"/data/log/php/php_fetal.log",

"/data/log/service1/access.log"

]

}

}

input {

beats {

port => 5000

type => "logs"

ssl => true

ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"

ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"

}

}

#================================= filter examples =============================#

filter {

grok {

match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }

add_field => [ "received_at", "%{@timestamp}" ]

add_field => [ "received_from", "%{host}" ]

}

}

filter {

if [type] == "syslog-beat" {

grok {

match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }

add_field => [ "received_at", "%{@timestamp}" ]

add_field => [ "received_from", "%{host}" ]

}

geoip {

source => "clientip"

}

syslog_pri {}

date {

match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]

}

}

}
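As an illustration of what the grok and date filters above do to a single line, here is a rough Python sketch. The regular expression is a simplified approximation of the grok patterns, not their exact definitions; `parse_syslog`, `parse_timestamp`, the sample line, and the explicit `year` argument are assumptions (syslog timestamps carry no year).

```python
import re
from datetime import datetime

# Simplified approximation of the grok expression used above.
SYSLOG_RE = re.compile(
    r"(?P<syslog_timestamp>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<syslog_hostname>\S+) "
    r"(?P<syslog_program>.*?)(?:\[(?P<syslog_pid>[1-9]\d*)\])?: "
    r"(?P<syslog_message>.*)"
)


def parse_syslog(message: str):
    """Return the named fields of a syslog line, or None if it does not match."""
    match = SYSLOG_RE.match(message)
    return match.groupdict() if match else None


def parse_timestamp(ts: str, year: int) -> datetime:
    """Parse a syslog timestamp; the year must be supplied by the caller."""
    # "%d" accepts both "5" and "05", so one format covers "MMM d" and "MMM dd".
    return datetime.strptime(f"{year} {ts}", "%Y %b %d %H:%M:%S")


fields = parse_syslog(
    "Mar  5 09:12:44 k8s-test-117 sshd[2211]: Accepted publickey for root"
)
print(fields)
print(parse_timestamp(fields["syslog_timestamp"], 2024))
```

The bracketed PID is optional in the pattern, so a line such as `kernel: ...` still matches and simply leaves `syslog_pid` empty.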

#================================= output examples =============================#

output {

# output to the console

# stdout { }

# output to redis

redis {

host => "10.140.45.190" # redis host address

port => 6379 # redis port

db => 8 # redis database number

data_type => "channel" # use publish/subscribe mode

key => "logstash_list_0" # name of the publish channel

}

}

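# Write to a local file.

output {

file {

path => "/data/log/logstash/all.log" # path of the file to write

codec => line { format => "%{host} %{message}" } # output format; used instead of message_format, which is obsolete in the file output shipped with Logstash 5.x

flush_interval => 0 # flush interval; 0 writes every event immediately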

}

}

output {

elasticsearch {

hosts => "elasticsearch:9200" # change this to your elasticsearch address

}

}

output {

elasticsearch { }

stdout { codec => rubydebug }

}

6. kibana:

server.port: 5601

server.host: "0.0.0.0"

server.name: "10.30.30.117"

elasticsearch.url: "http://10.30.30.117:9200"

kibana.index: ".kibana"

http://10.30.30.117:5601/

In Kibana, filter on the indices (index) listed in elasticsearch-head to display their data.

===============================

Docker version

1. elasticsearch and elasticsearch-head

docker run -p 9200:9200 -p 9300:9300 -v /root/es/elasticsearch/config:/usr/share/elasticsearch/config -v /root/es/elasticsearch/data:/usr/share/elasticsearch/data -v /root/es/elasticsearch/logs:/usr/share/elasticsearch/logs/ -e discovery.type=single-node -d elasticsearch:5.6.16

docker run -d --name es_admin -p 9100:9100 mobz/elasticsearch-head:5

[root@k8s-wubo-134 elasticsearch]# cat config/elasticsearch.yml

cluster.name: "jettopro-es-cluster"

node.name: 10.30.30.134

http.host: 0.0.0.0

http.port: 9200

transport.host: 0.0.0.0

transport.tcp.port: 9300

http.cors.enabled: true

http.cors.allow-origin: "*"

#transport.host: 0.0.0.0

#discovery.zen.minimum_master_nodes: 1

2. filebeat

docker run --name filebeat --network host -v /root/es/filebeat/config/filebeat.yml:/usr/share/filebeat/filebeat.yml -v /root/es/filebeat/messages:/var/log/messages -v /tmp:/tmp -d docker.elastic.co/beats/filebeat:5.6.16 filebeat -c /usr/share/filebeat/filebeat.yml

[root@k8s-wubo-134 config]# cat filebeat.yml

filebeat.prospectors:
- input_type: log
  paths:
    - /var/log/messages
  exclude_lines: ["^DBG"]
  document_type: system-log-0019

#processor:
#- add_cloud_metadata:

#output.file:
#  path: "/tmp"
#  filename: "filebeat.txt"

#output.redis:
#  hosts: "10.30.30.144"
#  db: "2"
#  port: "6379"
#  password: "123456aA"
#  key: "filesystem-log-5612"

#output.elasticsearch:
#  hosts: ['elasticsearch:9200']
#  username: elastic
#  password: changeme

output.logstash:
  hosts: ["10.30.30.134:5044"]     # logstash server address; several may be listed
  enabled: true                    # whether to output to logstash; enabled by default
  worker: 1                        # number of workers
  compression_level: 3             # compression level
  #loadbalance: true               # enable load balancing when several hosts are listed

3. logstash

docker run -p 9600:9600 -p 5044:5044 -v /var/log/nginx/access.log:/var/log/nginx/access.log -v /var/log/messages:/var/log/messages -v /root/es/logstash/conf:/etc/logstash --name leon_logstash -it -d logstash:5.6.16

[root@k8s-wubo-134 conf.d]# cat logstash-sample.conf

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {

tcp {

#host => "10.30.30.134"

port => 5044

#mode => server

codec => json

}

}

#input {

# beats {

# port => 5044

# }

#}

output {

elasticsearch {

hosts => ["http://10.30.30.134:9200"]

index => "user-action"

template_overwrite => true

template => "/etc/logstash/template/user_action.json"

#template_name => "user-action"

#user => "elastic"

#password => "changeme"

}

stdout{codec => rubydebug}

}

#output {

# stdout {

# codec => json

# }

#}

filter {
  # Each grok captures the text between "name#" and "#name" into a field called name.
  grok {
    match => {
      "message" => "(?<user>(?<=user#).*?(?=#user))"
    }
  }
  grok {
    match => {
      "message" => "(?<module>(?<=module#).*?(?=#module))"
    }
  }
  grok {
    match => {
      "message" => "(?<operation>(?<=operation#).*?(?=#operation))"
    }
  }
  grok {
    match => {
      "message" => "(?<fullOperation>(?<=fullOperation#).*?(?=#fullOperation))"
    }
  }
  grok {
    match => {
      "message" => "(?<proceedTime>(?<=proceedTime#).*?(?=#proceedTime))"
    }
  }
  grok {
    match => {
      "message" => "(?<createDate>(?<=createDate#).*?(?=#createDate))"
    }
  }
  grok {
    match => {
      "message" => "(?<createDateL>(?<=createDateL#).*?(?=#createDateL))"
    }
  }
  # Drop fields that are not needed in the index.
  mutate {
    remove_field => ["host", "agent", "ecs", "tags", "fields", "@version", "input", "log"]
    #remove_field => ["@timestamp"]
  }
}
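The lookbehind/lookahead patterns in this filter can be tried outside Logstash. The Python sketch below applies the same idea to one message; the message layout (`name#value#name` segments separated by spaces), the sample values, and the helper `extract_fields` are assumptions inferred from the patterns, not taken from a real log.

```python
import re

# Field names taken from the grok patterns and the user_action.json template.
FIELDS = [
    "user", "module", "operation", "fullOperation",
    "proceedTime", "createDate", "createDateL",
]


def extract_fields(message: str) -> dict:
    """Pull the value of each `name#value#name` segment out of the message."""
    result = {}
    for name in FIELDS:
        # Lookbehind and lookahead keep the markers out of the captured value.
        match = re.search(rf"(?<={name}#).*?(?=#{name})", message)
        if match:
            result[name] = match.group(0)
    return result


sample = (
    "user#alice#user module#billing#module operation#export#operation "
    "fullOperation#export invoices#fullOperation proceedTime#35#proceedTime "
    "createDate#2020-01-01 10:00:00#createDate createDateL#1577872800#createDateL"
)
print(extract_fields(sample))
```

Matching is case-sensitive, which is why the `operation` pattern does not pick up the `fullOperation` segment.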

[root@k8s-wubo-134 conf]# cat template/user_action.json

{

"template": "**",

"settings": {

"index.number_of_shards": 3,

"number_of_replicas": 0

},

"mappings" : {

"doc" : {

"properties" : {

"user" : {

"type" : "string",

"index" : "not_analyzed"

},

"module" : {

"type" : "string",

"index" : "not_analyzed"

},

"operation" : {

"type" : "string",

"index" : "not_analyzed"

},

"fullOperation" : {

"type" : "string",

"index" : "not_analyzed"

},

"proceedTime" : {

"type" : "integer",

"index" : "not_analyzed"

},

"createDate" : {

"type" : "date"

},

"createDateL" : {

"type" : "integer" ,

"index" : "not_analyzed"

}

}

}

}

}