Distributed storage: deploying Ceph (Jewel release)

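Preparation

1. On all nodes, add hostname resolution and set up passwordless SSH login for root.

2. On all nodes (including the client), create the cent user: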

#useradd cent && echo "123" | passwd --stdin cent

Grant the user passwordless sudo:

#echo -e 'Defaults:cent !requiretty\ncent ALL = (root) NOPASSWD:ALL' | tee /etc/sudoers.d/ceph

#chmod 440 /etc/sudoers.d/ceph
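
Because the same drop-in has to exist on every node, the two commands above can be wrapped in a small helper. This is a minimal sketch rather than part of the original procedure; the function name `write_ceph_sudoers` and its arguments are hypothetical.

```shell
# Hypothetical helper: write the sudoers drop-in for USER to DEST.
# On a real node (as root): write_ceph_sudoers cent /etc/sudoers.d/ceph
write_ceph_sudoers() {
    user="$1"
    dest="$2"
    # Same two rules as the echo -e command above, one per line.
    printf 'Defaults:%s !requiretty\n%s ALL = (root) NOPASSWD:ALL\n' \
        "$user" "$user" > "$dest" || return 1
    # Same mode as the chmod command above.
    chmod 440 "$dest"
}
```

Running `visudo -cf /etc/sudoers.d/ceph` afterwards checks the syntax of the file.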

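3. On the deployment node, switch to the cent user and set up passwordless SSH login to every node, including the client node.

4. On the deployment node, still as the cent user and in its home directory, create the following file (it defines all nodes and users):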

$vi ~/.ssh/config

Host pikachu4

Hostname pikachu4

User cent

Host pikachu1

Hostname pikachu1

User cent

Host pikachu3

Hostname pikachu3

User cent

Restrict the file's permissions:

$chmod 600 ~/.ssh/config
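
With more nodes, typing the stanzas by hand gets tedious. The sketch below prints one stanza per node; the function name `gen_ssh_config` is hypothetical, and the indentation inside each stanza is only for readability.

```shell
# Hypothetical helper: print one Host stanza per node for ~/.ssh/config.
# Usage: gen_ssh_config cent pikachu4 pikachu1 pikachu3 >> ~/.ssh/config
gen_ssh_config() {
    user="$1"
    shift
    for node in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$node" "$node" "$user"
    done
}
```

The first argument is the login user; every remaining argument is treated as a node name.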

Configure a China-based Ceph mirror on all nodes

1. On all nodes (including the client):

#vim /etc/yum.repos.d/ceph.repo

[ceph]

name=ceph-install

baseurl=https://mirrors.aliyun.com/centos/7/storage/x86_64/ceph-jewel/

enabled=1

gpgcheck=0
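
Since the repo file must be identical on every node, it can also be written non-interactively. A minimal sketch, assuming a hypothetical helper named `write_ceph_repo`; note that the yum option is spelled `enabled`.

```shell
# Hypothetical helper: write the Ceph repo definition to DEST.
# On a real node (as root): write_ceph_repo /etc/yum.repos.d/ceph.repo
write_ceph_repo() {
    dest="$1"
    printf '%s\n' \
        '[ceph]' \
        'name=ceph-install' \
        'baseurl=https://mirrors.aliyun.com/centos/7/storage/x86_64/ceph-jewel/' \
        'enabled=1' \
        'gpgcheck=0' > "$dest"
}
```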

2. Download the RPM packages listed below from https://mirrors.aliyun.com/centos/7/storage/x86_64/ceph-jewel/ (friendly tip: take a snapshot before installing the packages).

Link: https://pan.baidu.com/s/1-I5YMav5C4dhN1pqOQUvTA

Extraction code: n6s4

ceph-10.2.11-0.el7.x86_64.rpm

ceph-base-10.2.11-0.el7.x86_64.rpm

ceph-common-10.2.11-0.el7.x86_64.rpm

ceph-devel-compat-10.2.11-0.el7.x86_64.rpm

cephfs-java-10.2.11-0.el7.x86_64.rpm

ceph-fuse-10.2.11-0.el7.x86_64.rpm

ceph-libs-compat-10.2.11-0.el7.x86_64.rpm

ceph-mds-10.2.11-0.el7.x86_64.rpm

ceph-mon-10.2.11-0.el7.x86_64.rpm

ceph-osd-10.2.11-0.el7.x86_64.rpm

ceph-radosgw-10.2.11-0.el7.x86_64.rpm

ceph-resource-agents-10.2.11-0.el7.x86_64.rpm

ceph-selinux-10.2.11-0.el7.x86_64.rpm

ceph-test-10.2.11-0.el7.x86_64.rpm

libcephfs1-10.2.11-0.el7.x86_64.rpm

libcephfs1-devel-10.2.11-0.el7.x86_64.rpm

libcephfs_jni1-10.2.11-0.el7.x86_64.rpm

libcephfs_jni1-devel-10.2.11-0.el7.x86_64.rpm

librados2-10.2.11-0.el7.x86_64.rpm

librados2-devel-10.2.11-0.el7.x86_64.rpm

libradosstriper1-10.2.11-0.el7.x86_64.rpm

libradosstriper1-devel-10.2.11-0.el7.x86_64.rpm

librbd1-10.2.11-0.el7.x86_64.rpm

librbd1-devel-10.2.11-0.el7.x86_64.rpm

librgw2-10.2.11-0.el7.x86_64.rpm

librgw2-devel-10.2.11-0.el7.x86_64.rpm

python-ceph-compat-10.2.11-0.el7.x86_64.rpm

python-cephfs-10.2.11-0.el7.x86_64.rpm

python-rados-10.2.11-0.el7.x86_64.rpm

python-rbd-10.2.11-0.el7.x86_64.rpm

rbd-fuse-10.2.11-0.el7.x86_64.rpm

rbd-mirror-10.2.11-0.el7.x86_64.rpm

rbd-nbd-10.2.11-0.el7.x86_64.rpm

On the deployment node, also install the ceph-deploy RPM. To get it, go to https://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/ and download the latest matching ceph-deploy-xxxxx.noarch.rpm:

ceph-deploy-1.5.39-0.noarch.rpm

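3. Copy the downloaded RPMs to all nodes and install them. Note that ceph-deploy-xxxxx.noarch.rpm is needed only on the deployment node; the other nodes do not need it. The deployment node needs all the other RPMs as well.

4. On the deployment node, install ceph-deploy. As root, change into the directory that holds the downloaded RPMs and run the following (ceph-deploy itself is run later as the cent user):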

#yum localinstall -y ./*

#ceph-deploy --version

If the installation fails, make sure the installed python-setuptools version is 0.9.8-7.el7.

On pikachu1 and pikachu3, prepare one disk each and format it as xfs:

#mkfs -t xfs /dev/sdb1

Deployment

On the deployment node:

#su - cent

$mkdir ceph

Create the configuration for the new cluster:

$cd ceph

$ceph-deploy new pikachu1 pikachu3

$ls

$vim ceph.conf

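Add the following:

osd_pool_default_size = 2

Default number of replicas for new pools.

osd_pool_default_min_size = 1

Minimum number of replicas that must be available for a pool to accept I/O.

osd_pool_default_pg_num = 128

Default number of PGs.

osd_pool_default_pgp_num = 128

Default number of PGPs, kept equal to the PG count.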

osd_crush_chooseleaf_type = 1
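
The value 128 is consistent with a commonly cited rule of thumb: about 100 PGs per OSD, divided by the replica count, rounded up to the next power of two. With the two OSDs and two replicas used here, 2 × 100 / 2 = 100, which rounds up to 128. A minimal sketch of that arithmetic follows; the function name `pg_count` is hypothetical, and the rule is a guideline rather than a requirement.

```shell
# Hypothetical helper: suggest a PG count for a pool.
# Rule of thumb: (OSDs * 100) / replicas, rounded up to a power of two.
pg_count() {
    osds="$1"
    replicas="$2"
    target=$(( osds * 100 / replicas ))
    pg=1
    # Double until the power of two reaches the target.
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

pg_count 2 2   # two OSDs, two replicas, as in this setup: prints 128
```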

From the deployment node, install the Ceph software on all nodes:

$ceph-deploy install pikachu4 pikachu1 pikachu3

Initialize the cluster from the deployment node:

$ceph-deploy mon create-initial

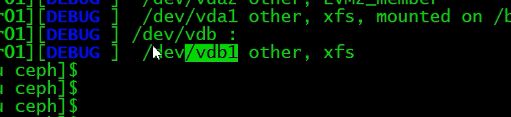

List the disks on a node:

$ceph-deploy disk list pikachu1

Zap (wipe) the node disks:

$ceph-deploy disk zap pikachu1:/dev/sdb1

$ceph-deploy disk zap pikachu3:/dev/sdb1

Deploy the OSDs (prepare the Object Storage Daemons):

$ceph-deploy osd prepare pikachu1:/dev/sdb1

$ceph-deploy osd prepare pikachu3:/dev/sdb1

Activate the Object Storage Daemons:

$ceph-deploy osd activate pikachu1:/dev/sdb1

$ceph-deploy osd activate pikachu3:/dev/sdb1
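
The zap, prepare and activate steps repeat the same host:device pair for every OSD node. The sketch below only prints the commands in the order used above so they can be reviewed before running; the function name `osd_commands` is hypothetical.

```shell
# Hypothetical helper: print the zap/prepare/activate commands for one
# device on each listed host, in the same order as the steps above.
osd_commands() {
    dev="$1"
    shift
    for step in "disk zap" "osd prepare" "osd activate"; do
        for host in "$@"; do
            echo "ceph-deploy $step $host:$dev"
        done
    done
}

osd_commands /dev/sdb1 pikachu1 pikachu3
```

Once the printed commands look right, they can be run from the ceph directory as the cent user, for example by piping the output to `sh`.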

Push the Ceph configuration file and admin keyring to every node:

$ceph-deploy admin pikachu4 pikachu1 pikachu3

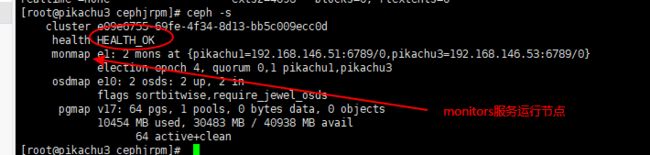

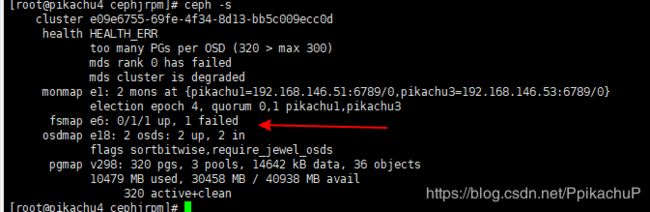

Check the cluster from any node in the Ceph cluster:

#ceph -s

Watch the status in real time:

#ceph -w

#ceph osd tree
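
Right after activation the cluster may take a moment to settle. A minimal sketch of a wait loop built on `ceph health` follows; the function name `wait_for_health` and its default retry count and interval are assumptions, not Ceph settings.

```shell
# Hypothetical helper: poll `ceph health` until it reports HEALTH_OK.
# Arguments: number of tries (default 30), seconds between tries (default 2).
wait_for_health() {
    tries="${1:-30}"
    interval="${2:-2}"
    while [ "$tries" -gt 0 ]; do
        if ceph health 2>/dev/null | grep -q 'HEALTH_OK'; then
            return 0
        fi
        tries=$(( tries - 1 ))
        sleep "$interval"
    done
    # Still not healthy after all tries.
    return 1
}
```

For example, `wait_for_health 10 5 || ceph -s` waits up to about 50 seconds and prints the full status if the cluster is still not healthy.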

#systemctl status [email protected]

Client setup

The client also needs the cent user:

#useradd cent && echo "123" | passwd --stdin cent

#echo -e 'Defaults:cent !requiretty\ncent ALL = (root) NOPASSWD:ALL' | tee /etc/sudoers.d/ceph

#chmod 440 /etc/sudoers.d/ceph

On the client:

#sudo chmod 644 /etc/ceph/ceph.client.admin.keyring

On the client, configure an RBD block device:

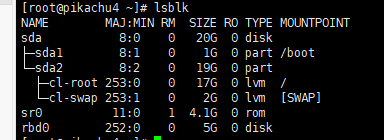

Create an RBD image:

#rbd create disk01 --size 5G --image-feature layering

List the RBD images:

#rbd ls -l

Map the RBD image:

#rbd map disk01

Show the mapped images:

#rbd showmapped

Format disk01 with an xfs file system (requires sudo):

#mkfs.xfs /dev/rbd0

Mount the disk (requires sudo):

#mount /dev/rbd0 /mnt

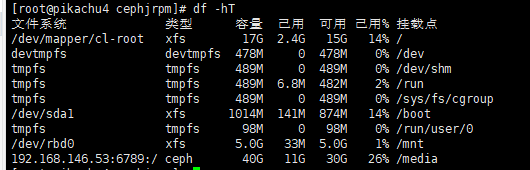

Verify that the mount succeeded:

#df -hT
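
The steps above use /dev/rbd0 directly, which holds when disk01 is the first image mapped on the client. A minimal sketch for looking the device up instead follows; the function name `rbd_device_for` is hypothetical, and it assumes that `rbd showmapped` prints a header row followed by the columns id, pool, image, snap, device.

```shell
# Hypothetical helper: read `rbd showmapped` output on stdin and print
# the device for IMAGE.
# Assumed column order: id pool image snap device (header on line 1).
rbd_device_for() {
    awk -v img="$1" 'NR > 1 && $3 == img { print $5 }'
}

# Usage on the client:
#   rbd showmapped | rbd_device_for disk01
```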

Client access through the file system

On the deployment node, pick a node on which to create the MDS:

$su - cent

$cd ceph

$ceph-deploy mds create pikachu3

On pikachu3:

#chmod 644 /etc/ceph/ceph.client.admin.keyring

On the MDS node pikachu3, create the cephfs_data and cephfs_metadata pools:

#ceph osd pool create cephfs_data 128

#ceph osd pool create cephfs_metadata 128

128 is the PG count.

Create the file system on the two pools:

$ceph fs new cephfs cephfs_metadata cephfs_data

Show the Ceph file system:

$ceph fs ls

$ceph mds stat

On the client:

Install ceph-fuse:

$sudo yum -y install ceph-fuse

Get the admin key:

$ssh cent@pikachu3 "sudo ceph-authtool -p /etc/ceph/ceph.client.admin.keyring" > admin.key
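
If the ssh command fails, the redirect still leaves an empty admin.key behind, and the later mount then fails with a less obvious error. A minimal sketch of a guard follows; the function name `check_key_file` is hypothetical.

```shell
# Hypothetical helper: fail if the key file is missing or empty,
# otherwise restrict it to the current user.
check_key_file() {
    key="$1"
    if [ ! -s "$key" ]; then
        echo "key file $key is missing or empty" >&2
        return 1
    fi
    chmod 600 "$key"
}

# Usage on the client, before mounting:
#   check_key_file admin.key || exit 1
```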

Mount CephFS:

$sudo mount -t ceph pikachu3:6789:/ /media -o name=admin,secretfile=admin.key

Stop the ceph-mds service

#umount /media/

node pikachu4

#systemctl stop ceph-mds@pikachu3

#ceph mds fail 0

#ceph fs rm cephfs --yes-i-really-mean-it

List the pools:

#ceph osd lspools

#ceph osd pool rm cephfs_metadata cephfs_metadata --yes-i-really-really-mean-it

#ceph osd pool rm cephfs_data cephfs_data --yes-i-really-really-mean-it

Tear down the environment:

On the deployment node:

$ceph-deploy purge pikachu4 pikachu1 pikachu3

$ceph-deploy purgedata pikachu4 pikachu1 pikachu3

$ceph-deploy forgetkeys

$rm -rf ceph*