Implementing Multiple Linear Regression in Python: Gradient Descent and Newton's Method

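Table of Contents

- Gradient Descent
  - Basic Concepts
    - 1. Differentiation
    - 2. Gradient
    - 3. Learning Rate
  - General Steps of Gradient Descent
- Newton's Method
  - General Steps for Applying Newton's Method
- References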

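Gradient Descent

Basic Concepts

1. Differentiation

In gradient descent, the function being optimized is required to be continuously differentiable. Differentiable means that the derivative exists at every point of the function's domain; if the derivative exists and is itself continuous, the function is continuously differentiable. (For a function of one variable, differentiability is equivalent to the existence of the derivative, and differentiability implies continuity.) From calculus, the derivative of a function, which is closely related to its differential ($dy = f'(x)\,dx$), can be understood in two ways:

1. The derivative at a point equals the slope of the tangent line to the function's graph at that point.

2. The derivative at a point measures the function's rate of change there: the larger its absolute value, the faster the function value changes.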

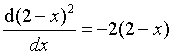

Below are a few examples of differentiating continuously differentiable functions:
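A few standard examples, chosen here for illustration: $(x^2)' = 2x$, $(\sin x)' = \cos x$, $(e^x)' = e^x$. The sketch below checks them numerically with a central difference $f'(x) \approx \frac{f(x+h) - f(x-h)}{2h}$, which is the slope of a short secant line and so makes the "slope of the tangent" reading concrete. The helper name `central_diff` and the step size `h` are illustrative choices, not part of the original.

```python
import math


def central_diff(f, x, h=1e-5):
    """Approximate f'(x) by the slope of the secant through x - h and x + h."""
    return (f(x + h) - f(x - h)) / (2 * h)


# (function, analytic derivative) pairs
examples = {
    "x^2": (lambda x: x**2, lambda x: 2 * x),
    "sin x": (math.sin, math.cos),
    "e^x": (math.exp, math.exp),
}

x0 = 1.0
for name, (f, df) in examples.items():
    approx = central_diff(f, x0)
    print(f"d/dx {name} at x={x0}: numeric {approx:.6f}, analytic {df(x0):.6f}")
```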

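2. Gradient

Gradient descent is built on the concept of the gradient. The gradient of a function at a point is a vector: the directional derivative of the function at that point is largest along the gradient direction, so the function increases fastest in that direction, and the maximum rate of change equals the magnitude (norm) of the gradient. This is a concept from multivariable calculus.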

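Let the bivariate function $z = f(x, y)$ have continuous first-order partial derivatives on a planar region $D$. Then at every point $P(x, y) \in D$ we obtain two first-order partial derivatives, $\frac{\partial f}{\partial x}$ and $\frac{\partial f}{\partial y}$.

The vector formed by these two partial derivatives is the gradient of the function, written $\operatorname{grad} f(x, y)$ or $\nabla f(x, y)$:

$$\nabla f(x, y) = \left( \frac{\partial f}{\partial x}, \frac{\partial f}{\partial y} \right)$$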

Example: find the gradient of $u = x^2 + 2y^2 + 3z^2 + 3x - 2y$ at the point $(1, 1, 2)$.
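Working the example out: the partial derivatives are $\frac{\partial u}{\partial x} = 2x + 3$, $\frac{\partial u}{\partial y} = 4y - 2$ and $\frac{\partial u}{\partial z} = 6z$, so $\operatorname{grad} u(1, 1, 2) = (5, 2, 12)$. The sketch below compares this analytic gradient with a finite-difference estimate; `numerical_gradient` is a hypothetical helper written only for this illustration.

```python
import numpy as np


def u(p):
    x, y, z = p
    return x**2 + 2*y**2 + 3*z**2 + 3*x - 2*y


def grad_u(p):
    """Analytic gradient (2x + 3, 4y - 2, 6z)."""
    x, y, z = p
    return np.array([2*x + 3, 4*y - 2, 6*z])


def numerical_gradient(f, p, h=1e-5):
    """Estimate each partial derivative with a central difference."""
    p = np.asarray(p, dtype=float)
    g = np.zeros_like(p)
    for i in range(p.size):
        e = np.zeros_like(p)
        e[i] = h
        g[i] = (f(p + e) - f(p - e)) / (2 * h)
    return g


point = np.array([1.0, 1.0, 2.0])
print(grad_u(point))                   # [ 5.  2. 12.]
print(numerical_gradient(u, point))    # approximately the same
print(np.linalg.norm(grad_u(point)))   # maximum rate of change, sqrt(173), about 13.15
```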

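3. Learning Rate

The learning rate, also called the step size, controls how far each iteration moves. The gradient of the objective generally changes from point to point (in both direction and magnitude), so a suitable learning rate is needed to keep each step from being too large (overshooting the minimum or even diverging) or too small (converging very slowly).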

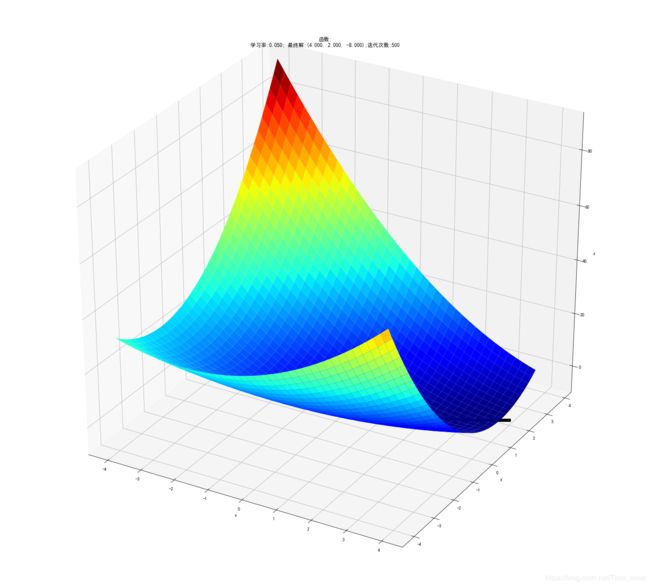
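To see the effect of the learning rate, the sketch below runs gradient descent on the toy function $f(x) = x^2$ (gradient $2x$), chosen here only for illustration. Each update is $x \leftarrow (1 - 2\alpha)x$, so a small α shrinks $x$ slowly, a moderate α converges quickly, and α > 1 makes $|1 - 2\alpha| > 1$ and the iterates diverge.

```python
def gd_1d(alpha, x0=5.0, steps=20):
    """Run gradient descent on f(x) = x**2 (gradient 2x) and return the final x."""
    x = x0
    for _ in range(steps):
        x = x - alpha * 2 * x
    return x


for alpha in (0.01, 0.4, 1.1):
    print(f"alpha={alpha}: x after 20 steps = {gd_1d(alpha):.4g}")
```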

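General steps of gradient descent:

1. Given a continuously differentiable objective $J(\Theta)$, a learning rate $\alpha$, and an initial value $\Theta^0$.

2. Compute the gradient of the objective, $\operatorname{grad} J(\Theta)$.

3. Update with the iteration formula:

$$\Theta^{k+1} = \Theta^{k} - \alpha \operatorname{grad} J(\Theta^{k})$$

4. Compute the gradient at the new point, $\operatorname{grad} J(\Theta^{k+1})$.

5. Use the norm of the gradient to decide whether the algorithm has converged: $\|\operatorname{grad} J(\Theta^{k+1})\| \le \varepsilon$.

6. If it has converged, stop; otherwise continue iterating with the update formula.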
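Steps 1-6 can be written as a small generic function. This is a sketch under our own naming (`gradient_descent`, `grad`, `eps`, `max_iter` are not from the original), and it assumes the gradient is supplied analytically as a Python function.

```python
import numpy as np


def gradient_descent(grad, theta0, alpha=0.1, eps=1e-6, max_iter=10_000):
    """Minimize J given its gradient function `grad`, following steps 1-6.

    Returns the final parameters and the number of updates performed.
    """
    theta = np.asarray(theta0, dtype=float)   # step 1: initial value
    g = grad(theta)                           # step 2: gradient at the start
    for k in range(max_iter):
        if np.linalg.norm(g) <= eps:          # steps 5-6: stop once ||grad J|| <= eps
            return theta, k
        theta = theta - alpha * g             # step 3: theta <- theta - alpha * grad J(theta)
        g = grad(theta)                       # step 4: gradient at the new point
    return theta, max_iter                    # safeguard: give up after max_iter updates


# Usage: minimize f2(x, y) = x**2 + 2*y**2 - 4*x - 2*x*y starting from (-1, -1)
def grad_f2(t):
    x, y = t
    return np.array([2*x - 4 - 2*y, 4*y - 2*x])


theta, iters = gradient_descent(grad_f2, [-1, -1], alpha=0.05)
print(theta, iters)  # theta is close to (4, 2)
```

Stopping on the gradient norm (step 5) rather than on the change in the function value is a design choice: a tiny gradient directly signals a stationary point, whereas a tiny change in $J$ can also come from an overly small learning rate.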

import numpy as np

import matplotlib.pyplot as plt

import matplotlib as mpl

import math

from mpl_toolkits.mplot3d import Axes3D

import warnings

# 解决中文显示问题

mpl.rcParams['font.sans-serif'] = [u'SimHei']

mpl.rcParams['axes.unicode_minus'] = False

"""

二维原始图像

1、构建一个函数。

2、随机生成X1,X2点,根据X1,X2点生成Y点。

3、画出图像。

"""

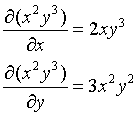

def f2(x1,x2):

return x1**2 + 2*x2**2 - 4*x1 - 2*x1*x2

X1 = np.arange(-4,4,0.2)

X2 = np.arange(-4,4,0.2)

X1, X2 = np.meshgrid(X1, X2) # 生成xv、yv,将X1、X2变成n*m的矩阵,方便后面绘图

Y = np.array(list(map(lambda t : f2(t[0],t[1]),zip(X1.flatten(),X2.flatten()))))

Y.shape = X1.shape # 1600的Y图还原成原来的(40,40)

%matplotlib inline

#作图

fig = plt.figure(facecolor='w')

ax = Axes3D(fig)

ax.plot_surface(X1,X2,Y,rstride=1,cstride=1,cmap=plt.cm.jet)

ax.set_title(u'$ y = x1^2+2x2^2-4x1-2x1x2 $')

plt.show()

"""

对当前二维图像求最小点¶

1、随机取一个点(x1,x2),设定α参数值

2、对这个点分别求关于x1、x2的偏导数

3、重复第二补,设置 y的变化量 小于多少时 不再重复。

"""

# 二维原始图像

def f2(x, y):

return x**2 + 2*y**2 - 4*x - 2*x*y

## 偏函数

def hx1(x, y):

return 2*x - 4 -2*y

def hx2(x, y):

return 4*y-2*x

x1 = -1

x2 = -1

alpha = 0.05

#保存梯度下降经过的点

GD_X1 = [x1]

GD_X2 = [x2]

GD_Y = [f2(x1,x2)]

# 定义y的变化量和迭代次数

y_change = f2(x1,x2)

iter_num = 0

while(y_change > 1e-10 and iter_num < 500) :

tmp_x1 = x1 - alpha * hx1(x1,x2)

tmp_x2 = x2 - alpha * hx2(x1,x2)

tmp_y = f2(tmp_x1,tmp_x2)

f_change = np.absolute(tmp_y - f2(x1,x2))

x1 = tmp_x1

x2 = tmp_x2

GD_X1.append(x1)

GD_X2.append(x2)

GD_Y.append(tmp_y)

iter_num += 1

print(u"最终结果为:(%.5f, %.5f, %.5f)" % (x1, x2, f2(x1,x2)))

print(u"迭代过程中X的取值,迭代次数:%d" % iter_num)

print(GD_X1)

最终结果为:(4.00000, 2.00000, -8.00000)

迭代过程中X的取值,迭代次数:500

[-1, -0.8, -0.6100000000000001, -0.42900000000000005, -0.2562, -0.09094999999999998, 0.06728700000000001, 0.21896240000000003, 0.36446231, 0.504122623, 0.638240219, 0.76708130997, 0.890887671459, 1.0098813114016, 1.12426798264683, 1.2342398394044751, 1.3399774593083582, 1.4416513948470318, 1.5394233751310196, 1.6334472473813408, 1.7238697242052554, 1.8108309855081823, 1.8944651711781786, 1.9749007912920942, 2.0522610736600533, 2.1266642634047037, 2.1982238854893583, 2.2670489783145698, 2.333244304437324, 2.3969105429401063, 2.458144466847681, 2.5170391081535817, 2.5736839123992357, 2.6281648842896574, 2.6805647254889604, 2.730962965485576, 2.779436086228317, 2.82605764109338, 2.870898368636641, 2.91402630150599, 2.955506870828168, 2.9954030063386328, 3.033775232487676, 3.07068176072862, 3.106178578172404, 3.140319532775767, 3.173156415216397, 3.20473903759708, 3.235115309111394, 3.2643313087954433, 3.292431355483164, 3.319458075076614, 3.345452465237197, 3.3704539575988393, 3.3945004775996166, 3.4176285020241726, 3.4398731143453656, 3.461268057949959, 3.481845787329721, 3.5016375173160545, 3.520673270433191, 3.5389819224420256, 3.5565912461438782, 3.5735279535107547, 3.5898177362061294, 3.605485304557784, 3.620554425041881, 3.635047956335171, 3.6489878839900554, 3.6623953537851226, 3.6752907038017693, 3.6876934952755707, 3.6996225422692137, 3.7110959402120085, 3.7221310933492724, 3.7327447411432373, 3.74295298366552, 3.7527713060196852, 3.7622146018309457, 3.771297195838631, 3.7800328656257016, 3.7884348625182644, 3.796515931686802, 3.804288331479595, 3.811763852017682, 3.818953833079547, 3.825869181302676, 3.8325203867280706, 3.83891753871282, 3.845070341234864, 3.850988127613167, 3.85667987466563, 3.8621542163262226, 3.867419456741981, 3.8724835828697497, 3.877354276591768, 3.8820389263684834, 3.8865446384462663, 3.8908782476370294, 3.895046327686101, 3.899055201244081, 3.902910949457806, 3.9066194211949727, 3.9101862419164113, 3.9136168222094687, 
3.9169163659954447, 3.920089878423533, 3.9231421734632406, 3.9260778812068007, 3.92890145489266, 3.931617177660694, 3.9342291690493907, 3.9367413912448717, 3.9391576550912144, 3.9414816258712055, 3.9437168288662874, 3.9458666547041323, 3.947934364501961, 3.9499230948134, 3.951835862386387, 3.9536755687393446, 3.955445004562551, 3.957146853951402, 3.958783698477972, 3.960358021107057, 3.9618722099626367, 3.963328561950472, 3.9647292862423305, 3.9660765076271267, 3.9673722697340605, 3.968618538132643, 3.96981720331431, 3.9709700835601507, 3.972078927699096, 3.9731454177607564, 3.9741711715269274, 3.9751577449856397, 3.976106634691469, 3.977019280035693, 3.9778970654297354, 3.978741322405208, 3.9795533316337415, 3.9803343248696628, 3.98108548681847, 3.981807956933939, 3.9825028311465824, 3.9831711635260936, 3.9838139678802853, 3.984432219292959, 3.9850268556030275, 3.985598778827146, 3.9861488565279983, 3.9866779231303235, 3.9871867811866712, 3.9876762025948116, 3.988146929768643, 3.9885996767643768, 3.989035130363704, 3.9894539511155895, 3.9898567743382722, 3.9902442110829943, 3.990616849060917, 3.9909752535346326, 3.9913199681756244, 3.9916515158889725, 3.99197039960656, 3.9922771030499815, 3.992572091464311, 3.9928558123238416, 3.99312869601087, 3.9933911564685514, 3.99364359182882, 3.993886385016322, 3.9941199043292857, 3.994344503998197, 3.9945605247231417, 3.9947682941906213, 3.9949681275706257, 3.9951603279947223, 3.9953451870158836, 3.9955229850507497, 3.995693991804997, 3.9958584666824626, 3.9960166591786384, 3.996168809259137, 3.9963151477236996, 3.996455896556302, 3.996591269261887, 3.9967214711902335, 3.996846699847457, 3.9969671451956112, 3.9970829899408447, 3.9971944098105516, 3.9973015738199384, 3.9974046445284035, 3.99750377828613, 3.997599125471254, 3.9976908307179797, 3.9977790331359753, 3.997863866521392, 3.9979454595598245, 3.998023936021513, 3.9980994149490967, 3.99817201083819, 3.998241833811065, 3.998308989783695, 3.9983735806264256, 
3.9984357043185, 3.998495455096688, 3.9985529235982344, 3.99860819699835, 3.9986613591424485, 3.9987124906733342, 3.9987616691535295, 3.998808969182933, 3.99885446251198, 3.9988982181504835, 3.9989403024723162, 3.9989807793160943, 3.999019710082016, 3.999057153825, 3.9990931673442685, 3.9991278052695067, 3.999161120143731, 3.999193162502993, 3.9992239809530385, 3.9992536222430406, 3.999282131336512, 3.999309551479511, 3.9993359242662456, 3.999361289702165, 3.9993856862646457, 3.9994091509613607, 3.999431719386415, 3.999453425774339, 3.9994743030520215, 3.999494382888656, 3.9995136957437802, 3.9995322709134804, 3.999550136574833, 3.9995673198286448, 3.9995838467405647, 3.999599742380622, 3.999615030861257, 3.999629735373895, 3.9996438782241293, 3.999657480865554, 3.9996705639323102, 3.999683147270384, 3.9996952499677123, 3.9997068903831385, 3.99971808617426, 3.9997288543242133, 3.999739211167438, 3.9997491724144534, 3.9997587531756897, 3.9997679679844107, 3.9997768308187585, 3.999785355122958, 3.99979355382771, 3.9998014393698065, 3.999809023710997, 3.9998163183561326, 3.9998233343706175, 3.9998300823971955, 3.999836572672094, 3.9998428150405507, 3.9998488189717496, 3.9998545935731835, 3.9998601476044695, 3.999865489490638, 3.999870627334911, 3.999875568930996, 3.9998803217749064, 3.9998848930763335, 3.9998892897695835, 3.999893518524095, 3.999897585754557, 3.9999014976306397, 3.999905260086352, 3.9999088788290442, 3.999912359348065, 3.9999157069230895, 3.999918926632126, 3.9999220233592205, 3.9999250018018655, 3.999927866478125, 3.999930621733488, 3.9999332717474605, 3.9999358205399065, 3.999938271977144, 3.9999406297778113, 3.999942897518507, 3.999945078639216, 3.999947176448527, 3.9999491941286527, 3.999951134740255, 3.9999530012270905, 3.999954796420473, 3.9999565230435694, 3.9999581837155325, 3.9999597809554706, 3.9999613171862722, 3.9999627947382788, 3.9999642158528204, 3.9999655826856166, 3.999966897310046, 3.9999681617202905, 3.9999693778343612, 
3.9999705474970075, 3.9999716724825163, 3.9999727544974024, 3.9999737951829974, 3.99997479611794, 3.99997575882057, 3.9999766847512315, 3.999977575314489, 3.9999784318612566, 3.999979255690849, 3.9999800480529513, 3.9999808101495145, 3.9999815431365793, 3.999982248126029, 3.9999829261872786, 3.9999835783488926, 3.99998420560015, 3.999984808892541, 3.999985389141213, 3.9999859472263584, 3.999986483994548, 3.999987000260017, 3.9999874968059, 3.9999879743854176, 3.999988433723021, 3.9999888755154895, 3.999989300432987, 3.9999897091200807, 3.9999901021967164, 3.9999904802591604, 3.999990843880904, 3.9999911936135333, 3.9999915299875646, 3.9999918535132513, 3.9999921646813563, 3.9999924639638973, 3.999992751814862, 3.999993028670899, 3.9999932949519756, 3.9999935510620204, 3.999993797389532, 3.99999403430817, 3.9999942621773212, 3.9999944813426453, 3.999994692136599, 3.9999948948789403, 3.999995089877213, 3.9999952774272147, 3.999995457813444, 3.999995631309532, 3.9999957981786594, 3.9999959586739533, 3.9999961130388724, 3.999996261507576, 3.99999640430528, 3.999996541648597, 3.999996673745866, 3.9999968007974687, 3.999996922996132, 3.999997040527221, 3.9999971535690224, 3.999997262293011, 3.999997366864113, 3.999997467440954, 3.999997564176102, 3.9999976572162956, 3.9999977467026704, 3.9999978327709695, 3.999997915551752, 3.9999979951705904, 3.9999980717482595, 3.999998145400922, 3.999998216240303, 3.9999982843738606, 3.999998349904948, 3.99999841293297, 3.9999984735535365, 3.999998531858603, 3.9999985879366147, 3.9999986418726365, 3.999998693748486, 3.999998743642854, 3.999998791631427, 3.999998837786999, 3.9999988821795855, 3.999998924876526, 3.9999989659425887, 3.9999990054400674, 3.9999990434288764, 3.999999079966642, 3.999999115108789, 3.9999991489086257, 3.9999991814174236, 3.9999992126844957, 3.999999242757272, 3.9999992716813706, 3.999999299500667, 3.9999993262573605, 3.9999993519920394, 3.999999376743741, 3.9999994005500117, 3.9999994234469636, 
3.9999994454693297, 3.9999994666505168, 3.999999487022654, 3.9999995066166454, 3.9999995254622127, 3.9999995435879434, 3.999999561021333, 3.999999577788826, 3.9999995939158577, 3.9999996094268915, 3.9999996243454565, 3.999999638694183, 3.9999996524948376, 3.9999996657683536, 3.9999996785348664, 3.999999690813742, 3.9999997026236063, 3.9999997139823735, 3.9999997249072745, 3.9999997354148817, 3.999999745521134, 3.9999997552413618, 3.99999976459031, 3.99999977358216, 3.9999997822305517, 3.9999997905486047, 3.999999798548936, 3.999999806243682, 3.9999998136445147, 3.999999820762661, 3.999999827608918, 3.9999998341936713, 3.9999998405269097, 3.99999984661824, 3.999999852476902, 3.999999858111783, 3.9999998635314307, 3.9999998687440663, 3.999999873757597, 3.9999998785796276, 3.9999998832174732, 3.999999887678169, 3.999999891968481, 3.999999896094918, 3.999999900063739, 3.9999999038809646, 3.999999907552385, 3.99999991108357, 3.9999999144798752, 3.9999999177464534, 3.9999999208882593, 3.999999923910059, 3.999999926816436, 3.9999999296117994, 3.9999999323003896, 3.9999999348862847, 3.999999937373407, 3.99999993976553, 3.999999942066282, 3.9999999442791534, 3.9999999464075002, 3.9999999484545516, 3.9999999504234127, 3.99999995231707, 3.9999999541383957, 3.999999955890153, 3.999999957574999, 3.99999995919549, 3.9999999607540837, 3.999999962253144, 3.999999963694946, 3.9999999650816758, 3.999999966415437, 3.999999967698253, 3.99999996893207, 3.9999999701187594, 3.999999971260121, 3.999999972357887, 3.999999973413722, 3.9999999744292274, 3.999999975405944, 3.9999999763453533, 3.9999999772488803, 3.999999978117896, 3.999999978953718, 3.9999999797576145, 3.999999980530805, 3.999999981274462, 3.999999981989714, 3.999999982677646]
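Because f2 is quadratic, the gradient-descent result can be cross-checked exactly. Writing $f_2 = \frac{1}{2}\theta^T H \theta - c^T \theta$ with the constant Hessian $H = \begin{pmatrix} 2 & -2 \\ -2 & 4 \end{pmatrix}$ and $c = (4, 0)$, the gradient $H\theta - c$ vanishes at the minimizer. The eigenvalues of $H$, $3 \pm \sqrt{5}$ (about 0.764 and 5.236), also explain why hundreds of iterations are needed: with α = 0.05, each step shrinks the error along the slow eigenvector only by a factor of about $|1 - 0.05 \times 0.764| \approx 0.962$. This is a supplementary sketch, not part of the original code.

```python
import numpy as np

# f2(x, y) = x**2 + 2*y**2 - 4*x - 2*x*y written as 0.5 * t^T H t - c^T t
H = np.array([[2.0, -2.0],
              [-2.0, 4.0]])   # Hessian of f2 (constant, since f2 is quadratic)
c = np.array([4.0, 0.0])

# The gradient H t - c vanishes at the exact minimizer
t_star = np.linalg.solve(H, c)
x_opt, y_opt = t_star
f_star = x_opt**2 + 2*y_opt**2 - 4*x_opt - 2*x_opt*y_opt
print(t_star, f_star)   # minimizer (4, 2), minimum value -8

# Per-step contraction factors |1 - alpha * lambda| for alpha = 0.05
eigvals = np.linalg.eigvalsh(H)
print(eigvals, np.abs(1 - 0.05 * eigvals))
```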

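```python
# Plot the descent path on top of the surface
fig = plt.figure(facecolor='w', figsize=(20, 18))
ax = fig.add_subplot(projection='3d')
ax.plot_surface(X1, X2, Y, rstride=1, cstride=1, cmap=plt.cm.jet)
ax.plot(GD_X1, GD_X2, GD_Y, 'ko-')  # black dots: points visited by gradient descent
ax.set_xlabel('x')
ax.set_ylabel('y')
ax.set_zlabel('z')
ax.set_title('Function\nlearning rate: %.3f; final solution: (%.3f, %.3f, %.3f); iterations: %d'
             % (alpha, x1, x2, f2(x1, x2), iter_num))
plt.show()
```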

```python
import numpy as np
from matplotlib import pyplot as plt
from mpl_toolkits.mplot3d import Axes3D  # noqa: F401 (registers the 3D projection on older Matplotlib)

# data.csv columns: x1 (area), x2 (distance), y (monthly turnover)
data = np.genfromtxt('C:/Users/asus/Desktop/data.csv', delimiter=',')
x_data = data[:, :-1]
y_data = data[:, -1]

# Model: y = theta1*x1 + theta2*x2 + theta0
# Learning rate, slopes and intercept
lr = 0.00001
theta0 = 0
theta1 = 0
theta2 = 0
# Maximum number of iterations (gradient descent keeps updating the parameters)
epochs = 10000


# Least-squares cost function: J = (1 / 2m) * sum((y - y_hat)**2)
def compute_error(theta0, theta1, theta2, x_data, y_data):
    y_hat = theta1 * x_data[:, 0] + theta2 * x_data[:, 1] + theta0
    return np.sum((y_data - y_hat) ** 2) / len(x_data) / 2


# Solve for the parameters with batch gradient descent
def gradient_descent_runner(x_data, y_data, theta0, theta1, theta2, lr, epochs):
    for _ in range(epochs):
        # Residual of every sample: prediction minus target
        residual = (theta1 * x_data[:, 0] + theta2 * x_data[:, 1] + theta0) - y_data
        # Partial derivatives of the cost. The intercept term uses theta0 (the
        # original listing had theta2 here by mistake; the printed result below
        # was produced by that version).
        theta0_grad = residual.mean()
        theta1_grad = (x_data[:, 0] * residual).mean()
        theta2_grad = (x_data[:, 1] * residual).mean()
        # Update all parameters simultaneously
        theta0 = theta0 - lr * theta0_grad
        theta1 = theta1 - lr * theta1_grad
        theta2 = theta2 - lr * theta2_grad
    return theta0, theta1, theta2


# Run the iterations
theta0, theta1, theta2 = gradient_descent_runner(x_data, y_data, theta0, theta1, theta2, lr, epochs)
print('Result after {0} iterations with learning rate {1}: a0={2}, a1={3}, a2={4}, cost={5}'.format(
    epochs, lr, theta0, theta1, theta2, compute_error(theta0, theta1, theta2, x_data, y_data)))
print("Multiple linear regression equation: y =", theta1, "X1 +", theta2, "X2 +", theta0)

# Plot the data points and the fitted plane
ax = plt.figure().add_subplot(111, projection='3d')
ax.scatter(x_data[:, 0], x_data[:, 1], y_data, c='r', marker='o')
x0, x1 = np.meshgrid(x_data[:, 0], x_data[:, 1])  # grid over the observed x1, x2 values
z = theta0 + theta1 * x0 + theta2 * x1
ax.plot_surface(x0, x1, z)
ax.set_xlabel('area')
ax.set_ylabel('distance')
ax.set_zlabel('Monthly turnover')
plt.show()
```

Result after 10000 iterations with learning rate 1e-05: a0=5.3774162274868, a1=45.0533119768975, a2=-0.19626929358281256, cost=366.7314528822914

Multiple linear regression equation: y = 45.0533119768975 X1 + -0.19626929358281256 X2 + 5.3774162274868
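The tiny learning rate (1e-5) above is needed because the two features are on very different scales, which makes plain gradient descent slow. The sketch below is our own variant, not the original code: it vectorizes the same cost $\frac{1}{2m}\sum (\hat{y} - y)^2$, standardizes the features first so a much larger learning rate works, and maps the coefficients back to the original units. Since `data.csv` is not available here, it uses synthetic data with known coefficients; `fit_linear_gd` and all the numbers are hypothetical.

```python
import numpy as np


def fit_linear_gd(X, y, lr=0.1, epochs=5000):
    """Batch gradient descent for y ~ X @ w + b on standardized features.

    Returns (b, w) expressed in the original (unstandardized) units.
    """
    mu, sigma = X.mean(axis=0), X.std(axis=0)
    Z = (X - mu) / sigma                  # standardized features
    m, n = Z.shape
    w = np.zeros(n)
    b = 0.0
    for _ in range(epochs):
        residual = Z @ w + b - y          # prediction minus target
        w -= lr * (Z.T @ residual) / m    # gradient of (1/2m) * sum(residual**2)
        b -= lr * residual.mean()
    # Map back: y = b + sum(w_j * (x_j - mu_j) / sigma_j)
    w_orig = w / sigma
    b_orig = b - np.sum(w_orig * mu)
    return b_orig, w_orig


# Hypothetical synthetic data: y = 5 + 40*x1 - 0.3*x2 + noise
rng = np.random.default_rng(0)
X = np.column_stack([rng.uniform(1, 10, 200), rng.uniform(50, 500, 200)])
y = 5 + 40 * X[:, 0] - 0.3 * X[:, 1] + rng.normal(0, 1, 200)

b, w = fit_linear_gd(X, y)
print(b, w)
# Reference least-squares solution for comparison
print(np.linalg.lstsq(np.column_stack([np.ones(len(X)), X]), y, rcond=None)[0])
```

Standardizing does not change the fitted plane; it only rescales the coordinates so that a single learning rate suits every parameter.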

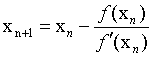

Newton's Method

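Let $r$ be a root of $f(x) = 0$, and choose $x_0$ as an initial approximation of $r$. Draw the tangent line $L$ to the curve $y = f(x)$ at the point $(x_0, f(x_0))$:

$$L: \; y = f(x_0) + f'(x_0)(x - x_0)$$

Assuming $f'(x_0) \neq 0$, the x-coordinate of the point where $L$ crosses the x-axis,

$$x_1 = x_0 - \frac{f(x_0)}{f'(x_0)},$$

is called the first approximation of $r$. Repeating the process gives

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}, \quad n = 0, 1, 2, \dots$$

which is Newton's iteration formula.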
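A minimal sketch of this iteration for root finding. The function name `newton`, the tolerance and the iteration cap are illustrative choices; the sketch assumes $f'(x_n) \neq 0$ along the way and a starting point close enough to the root.

```python
def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Find a root of f with Newton's iteration x_{n+1} = x_n - f(x_n) / f'(x_n)."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:   # stop once successive approximations agree
            break
    return x


# Example: the positive root of f(x) = x**2 - 2 is sqrt(2)
root = newton(lambda x: x**2 - 2, lambda x: 2 * x, x0=1.0)
print(root)
```

Near a simple root the number of correct digits roughly doubles with each step, so only a handful of iterations are needed here.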

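General Steps for Applying Newton's Method

1. Determine the iteration variable

In any problem that can be solved by iteration, there is at least one variable whose new value is derived, directly or indirectly, from its previous value. This variable is the iteration variable.

2. Establish the iteration relation

The iteration relation is the formula (or relationship) that computes the next value of the variable from its previous value. Establishing it is the key to solving an iterative problem, and it can usually be done by forward recurrence or by working backward.

3. Control the iteration process

When should the iteration stop? Every iterative program must answer this, because the iteration cannot be allowed to run forever. There are usually two cases. In the first, the required number of iterations is fixed and can be computed in advance, so a loop with a fixed number of passes controls the process. In the second, the number of iterations cannot be determined in advance, and further analysis is needed to derive a stopping condition, for example that the change between successive approximations falls below a tolerance.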
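To use Newton's method for minimizing a function $J(\theta)$, the same iteration is applied to the equation $\nabla J(\theta) = 0$: $\theta_{k+1} = \theta_k - H(\theta_k)^{-1} \nabla J(\theta_k)$, where $H$ is the Hessian. For least squares, $J(\theta) = \frac{1}{2}\|X\theta - y\|^2$, so $\nabla J = X^T(X\theta - y)$ and $H = X^T X$. One Newton step from any starting $\theta_0$ then gives $\theta_0 - (X^TX)^{-1}X^T(X\theta_0 - y) = (X^TX)^{-1}X^T y$, which is exactly the normal-equation solution computed by the code that follows. The sketch checks this on hypothetical random data; `newton_step_least_squares` is our own name.

```python
import numpy as np


def newton_step_least_squares(X, y, theta):
    """One Newton step for J(theta) = 0.5 * ||X @ theta - y||**2."""
    grad = X.T @ (X @ theta - y)   # gradient of J
    hess = X.T @ X                 # Hessian of J (constant for least squares)
    return theta - np.linalg.solve(hess, grad)


# Hypothetical data: intercept column plus two features
rng = np.random.default_rng(1)
X = np.column_stack([np.ones(10), rng.uniform(0, 5, 10), rng.uniform(0, 100, 10)])
y = rng.normal(size=10)

theta_start = np.array([100.0, -50.0, 3.0])       # arbitrary starting point
theta_newton = newton_step_least_squares(X, y, theta_start)
theta_normal = np.linalg.inv(X.T @ X) @ X.T @ y   # normal equation
print(theta_newton)
print(theta_normal)
```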

```python
import numpy as np

# In Jupyter, run %matplotlib inline first if you add plots to this cell
data = np.genfromtxt("C:/Users/asus/Desktop/data.csv", delimiter=",")
X1 = data[0:10, 0]  # first independent variable (first 10 samples)
X2 = data[0:10, 1]  # second independent variable
Y = data[0:10, 2]   # dependent variable (monthly sales)

# Dependent variable as a column vector Y1
Y1 = np.array([Y]).T

# Design matrix X = [1, X1, X2]; the column of ones corresponds to the intercept
X = np.column_stack((np.ones(len(Y)), X1, X2))

# Normal equation W = (X^T X)^(-1) X^T Y1; W holds the intercept b, a1 and a2
X_ = X.T
X_X_ = np.linalg.inv(np.dot(X_, X))
W = np.dot(np.dot(X_X_, X_), Y1)
b = W[0][0]
a1 = W[1][0]
a2 = W[2][0]
print("Coefficient a1 =", a1)
print("Coefficient a2 =", a2)
print("Intercept =", b)
print("Multiple linear regression equation: y =", a1, "X1 +", a2, "X2 +", b)

# Sum of Y and its mean y1
sumy = 0
for i in range(len(Y)):
    sumy = sumy + Y[i]
y1 = sumy / len(Y)

# Sum of (y - mean y): zero up to rounding error
y_y1 = 0
for i in range(len(Y)):
    y_y1 = y_y1 + (Y[i] - y1)
print("Sum of (sales - mean sales):", y_y1)

# Predicted values sales1
sales1 = []
for i in range(len(Y)):
    sales1.append(a1 * X1[i] + a2 * X2[i] + b)

# Mean of the predictions y2
sumy2 = 0
for i in range(len(sales1)):
    sumy2 = sumy2 + sales1[i]
y2 = sumy2 / len(sales1)

# Sum of (prediction - mean prediction): also zero up to rounding error
y11_y2 = 0
for i in range(len(sales1)):
    y11_y2 = y11_y2 + (sales1[i] - y2)
print("Sum of (predicted sales - mean predicted sales):", y11_y2)

# Syy: sum of squares of (y - mean y)
Syy = 0
for i in range(len(Y)):
    Syy = Syy + ((Y[i] - y1) * (Y[i] - y1))
print("Syy =", Syy)

# Sy1y1: sum of squares of (prediction - mean prediction)
Sy1y1 = 0
for i in range(len(sales1)):
    Sy1y1 = Sy1y1 + ((sales1[i] - y2) * (sales1[i] - y2))
print("Sy1y1 =", Sy1y1)

# Syy1: sum of (y - mean y) * (prediction - mean prediction)
Syy1 = 0
for i in range(len(sales1)):
    Syy1 = Syy1 + ((Y[i] - y1) * (sales1[i] - y2))
print("Syy1 =", Syy1)
```

Coefficient a1 = 41.51347825643848

Coefficient a2 = -0.34088268566362023

Intercept = 65.32391638894899

Multiple linear regression equation: y = 41.51347825643848 X1 + -0.34088268566362023 X2 + 65.32391638894899

Sum of (sales - mean sales): -1.1368683772161603e-13

Sum of (predicted sales - mean predicted sales): -3.410605131648481e-13

Syy = 76199.6

Sy1y1 = 72026.59388000543

Syy1 = 72026.59388000538
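These sums are the ingredients of the coefficient of determination $R^2$; this interpretation is ours, since the listing only prints them. For least squares with an intercept, $R^2 = S_{y_1y_1}/S_{yy}$ (explained variation over total variation), and it also equals $S_{yy_1}^2/(S_{yy}\,S_{y_1y_1})$, the squared correlation between observed and fitted values. The residuals are orthogonal to the centered fitted values in that setting, which is also why $S_{yy_1}$ and $S_{y_1y_1}$ agree in the output. The sketch plugs in the printed values.

```python
# Sums printed by the program above
Syy = 76199.6               # total sum of squares of y
Sy1y1 = 72026.59388000543   # sum of squares of the predictions about their mean
Syy1 = 72026.59388000538    # cross-product sum of y and the predictions

r2 = Sy1y1 / Syy                    # explained / total variation
r2_corr = Syy1**2 / (Syy * Sy1y1)   # squared correlation of y and predictions
print(round(r2, 4), round(r2_corr, 4))   # 0.9452 0.9452
```

So on these 10 samples, the fitted plane accounts for roughly 94.5% of the variation in monthly sales.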

References

https://blog.csdn.net/weixin_44606638/article/details/105326348

https://www.jianshu.com/p/424b7b70df7b

https://blog.csdn.net/qq_35946969/article/details/84446000