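A Brief Look at IPC in Android Applications

Contents

- 1. Preface

- 2. Where Binder comes from

- 3. What Binder is

- 4. What the Binder mechanism means

- 5. Communication mechanism

- 6. How it is implemented

- 6.1 Introduction

- 6.2 Code flow

- 6.3 From the Java layer to the JNI layer

- 6.4 From the JNI layer to the native layer

- 6.5 From the native layer to the driver layer

- 7. Summary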

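1. Preface

For more articles, see the "Android Ecosystem: Applications" series.

IPC stands for Inter-Process Communication, that is, communication across process boundaries. In operating-system terms, a thread is the smallest unit the CPU schedules, while a process is an execution unit, which on a mobile device usually means a program or an app; one process can contain many threads. Common ways to do IPC on Android include:

- Passing a Bundle through an Intent

- Sharing files

- Using Messenger

- Using AIDL

- Using sockets

- Using ContentProvider

- Using Binder directly

This article singles out Binder, because understanding Binder goes a long way toward understanding the Android system as a whole.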

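2. Where Binder comes from

Put simply, Binder is a cross-process communication technology on the Android platform. It was not originally invented by Google: its predecessor is OpenBinder, developed at Be Inc. and later also used at Palm. Dianne Hackborn, an author of OpenBinder, subsequently joined Google to work on the Android platform and brought the technology with her.

At the application level Android largely hides the notion of a process, yet at the implementation level everything still runs on top of individual processes. Inside Android, the components that support an application often live in different processes, so the lower layers of an app inevitably involve a great deal of cross-process communication. Android provides the Binder mechanism to keep that communication efficient.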

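3. What Binder is

Looking at Binder from four angles makes the mechanism easier to understand:

• 1 As a class, Binder is an Android class that implements the IBinder interface.

• 2 As IPC, Binder is one of Android's cross-process communication mechanisms. It can also be seen as a virtual device exposed through the device node /dev/binder. Traditional Linux IPC has no counterpart; the driver is tightly coupled to Android, and in the 3.10 kernel examined later it lives under drivers/staging/android rather than in the core kernel.

• 3 From the Android Framework's point of view, Binder is the bridge through which ServiceManager connects the various Managers (ActivityManager, WindowManager, and so on) to their corresponding ManagerServices.

• 4 From the application layer's point of view, Binder is the medium through which a client and a server communicate. When you call bindService, the server returns a Binder object that exposes its business calls; through that object the client can reach the services or data the server provides. "Service" here covers both ordinary services and AIDL-based services.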

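4. What the Binder mechanism means

The Binder mechanism has two meanings:

• 1 It is a form of inter-process communication (IPC).

• 2 It is a form of remote procedure call (RPC).

In terms of implementation, the core of Binder is a Linux driver that runs in kernel mode, which is what gives it the ability to reach across process boundaries.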

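5. Communication mechanism

Broadly, the Binder implementation is split into the following layers:

• The Linux driver layer sits inside the Linux kernel and provides the lowest-level functions: data transfer, object identification, thread management, and control of the call flow. The driver layer is the real core of the Binder mechanism.

• The Framework layer builds on the driver layer and provides the infrastructure for application development. It contains both a C++ part and a Java part; JNI connects the two so that the C++ implementation can be reused from Java.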

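6. How it is implemented

6.1 Introduction

The Binder implementation is quite involved: a call travels from the Java layer to the JNI layer, from JNI to the native layer, and finally from the native layer to the driver layer. Studying it therefore gives a much clearer picture of the framework.

6.2 Code flow

Before tracing the Binder code, it helps to keep the overall flow in mind: Java layer, then JNI layer, then native layer, then driver layer.

6.3 From the Java layer to the JNI layer

Take the registration of a custom service as the example (netmanagerService in the snippet below):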

ServiceManager.addService(NETMANAGERSERVICE,netmanagerService);

This calls ServiceManager's addService method (shown here in its three-argument form). The implementation is in:

==========./frameworks/base/core/java/android/os/ServiceManager.java ==========

public static void addService(String name, IBinder service, boolean allowIsolated) {

try {

getIServiceManager().addService(name, service, allowIsolated);

} catch (RemoteException e) {

Log.e(TAG, "error in addService", e);

}

}

That code in turn calls addService on whatever getIServiceManager returns.

So let's see what getIServiceManager does:

private static IServiceManager getIServiceManager() {

if (sServiceManager != null) {

return sServiceManager;

}

// Find the service manager

sServiceManager = ServiceManagerNative.asInterface(BinderInternal.getContextObject()); //1

return sServiceManager;

}

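The line to focus on is the one marked with comment 1.

getContextObject is a native method. On the native side the process opens the binder driver device and maps its memory, and the call returns the IBinder that refers to the service manager.

First, look at the implementation of asInterface: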

static public IServiceManager asInterface(IBinder obj)

{

if (obj == null) {

return null;

}

IServiceManager in =

(IServiceManager)obj.queryLocalInterface(descriptor);

if (in != null) {

return in;

}

return new ServiceManagerProxy(obj);

}
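The local-versus-proxy decision in asInterface can be sketched in plain Java. This is a minimal sketch, not the Android code: FakeBinder, FakeServiceManager, LocalServiceManager, RemoteHandle, FakeServiceManagerProxy and the descriptor string are all hypothetical stand-ins that exist only so the example compiles without the Android SDK.

```java
// Hypothetical stand-in for IBinder: only the one method this sketch needs.
interface FakeBinder {
    // Returns the in-process implementation, or null if the object lives elsewhere.
    Object queryLocalInterface(String descriptor);
}

// Hypothetical stand-in for IServiceManager.
interface FakeServiceManager {
    String describe();
}

// An implementation living in the caller's own process.
final class LocalServiceManager implements FakeBinder, FakeServiceManager {
    static final String DESCRIPTOR = "sketch.IServiceManager"; // assumed name

    public Object queryLocalInterface(String descriptor) {
        return DESCRIPTOR.equals(descriptor) ? this : null;
    }

    public String describe() {
        return "local";
    }
}

// A handle to an object in another process: it never has a local interface.
final class RemoteHandle implements FakeBinder {
    public Object queryLocalInterface(String descriptor) {
        return null;
    }
}

// Wraps a remote handle; a real proxy would marshal each call through it.
final class FakeServiceManagerProxy implements FakeServiceManager {
    private final FakeBinder remote;

    FakeServiceManagerProxy(FakeBinder remote) {
        this.remote = remote;
    }

    public String describe() {
        return "proxy";
    }
}

final class AsInterfaceSketch {
    // Same shape as asInterface: null in, null out; local object if there is one; else a proxy.
    static FakeServiceManager asInterface(FakeBinder obj) {
        if (obj == null) {
            return null;
        }
        Object local = obj.queryLocalInterface(LocalServiceManager.DESCRIPTOR);
        if (local != null) {
            return (FakeServiceManager) local;
        }
        return new FakeServiceManagerProxy(obj);
    }
}
```

Passing a RemoteHandle yields a proxy, while passing a LocalServiceManager returns that same object, which is why in-process callers skip marshalling entirely.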

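Because the object obtained here refers to another process, asInterface returns a ServiceManagerProxy. So getIServiceManager().addService actually calls the proxy's addService method, which is in: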

==========./frameworks/base/core/java/android/os/ServiceManagerNative.java ==========

public void addService(String name, IBinder service, boolean allowIsolated)

throws RemoteException {

Parcel data = Parcel.obtain();

Parcel reply = Parcel.obtain();

data.writeInterfaceToken(IServiceManager.descriptor);

data.writeString(name);

data.writeStrongBinder(service);

data.writeInt(allowIsolated ? 1 : 0);

mRemote.transact(ADD_SERVICE_TRANSACTION, data, reply, 0); //2

reply.recycle();

data.recycle();

}
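What the proxy's addService does with the Parcel boils down to writing fields in a fixed order that the receiver reads back in the same order. The following is a rough sketch of that idea using java.io data streams in place of Parcel; the class name, the descriptor string, and the use of an int handle in place of a strong binder reference are all assumptions made for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

final class AddServiceMarshalSketch {
    // Hypothetical interface token; the real one is IServiceManager.descriptor.
    static final String DESCRIPTOR = "sketch.IServiceManager";

    // Proxy side: token first, then name, service handle, and the flag.
    static byte[] marshal(String name, int serviceHandle, boolean allowIsolated) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            DataOutputStream out = new DataOutputStream(bytes);
            out.writeUTF(DESCRIPTOR);
            out.writeUTF(name);
            out.writeInt(serviceHandle);
            out.writeInt(allowIsolated ? 1 : 0);
            out.flush();
            return bytes.toByteArray();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Receiver side: checks the token, then reads the fields in the same order.
    // Returns "name:handle:allowIsolated" so the result is easy to inspect.
    static String unmarshal(byte[] data) {
        try {
            DataInputStream in = new DataInputStream(new ByteArrayInputStream(data));
            String token = in.readUTF();
            if (!DESCRIPTOR.equals(token)) {
                throw new IllegalArgumentException("unexpected interface token: " + token);
            }
            String name = in.readUTF();
            int handle = in.readInt();
            boolean allowIsolated = in.readInt() != 0;
            return name + ":" + handle + ":" + allowIsolated;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

If the reader consumed the fields in a different order than the writer produced them, it would decode garbage, which is why both sides of a Binder interface must agree on the layout.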

In the proxy's addService, note the line marked with comment 2: mRemote here is in fact a BinderProxy.

========== ./frameworks/base/core/java/android/os/Binder.java ==========

final class BinderProxy implements IBinder {

......

public native String getInterfaceDescriptor() throws RemoteException;

public native boolean transact(int code, Parcel data, Parcel reply,

int flags) throws RemoteException;

public native void linkToDeath(DeathRecipient recipient, int flags)

throws RemoteException;

public native boolean unlinkToDeath(DeathRecipient recipient, int flags);

......

}

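As you can see, the call finally lands in JNI code.

6.4 From the JNI layer to the native layer

Continuing from above, locate the JNI counterpart of transact.

The JNI registration table shows that the Java-layer transact method maps to the JNI function android_os_BinderProxy_transact:

========== /frameworks/base/core/jni/android_util_Binder.cpp ==========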

static const JNINativeMethod gBinderProxyMethods[] = {

/* name, signature, funcPtr */

{"pingBinder", "()Z", (void*)android_os_BinderProxy_pingBinder},

{"isBinderAlive", "()Z", (void*)android_os_BinderProxy_isBinderAlive},

{"getInterfaceDescriptor", "()Ljava/lang/String;", (void*)android_os_BinderProxy_getInterfaceDescriptor},

{"transact", "(ILandroid/os/Parcel;Landroid/os/Parcel;I)Z", (void*)android_os_BinderProxy_transact},

{"linkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)V", (void*)android_os_BinderProxy_linkToDeath},

{"unlinkToDeath", "(Landroid/os/IBinder$DeathRecipient;I)Z", (void*)android_os_BinderProxy_unlinkToDeath},

{"destroy", "()V", (void*)android_os_BinderProxy_destroy},

};

The implementation of android_os_BinderProxy_transact is long, so only the key code is shown:

static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,

jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException

{

......

status_t err = target->transact(code, *data, reply, flags);

......

}

It calls transact on target, which is in fact a BpBinder object, so go straight to the implementation of BpBinder::transact:

========== /frameworks/native/libs/binder/BpBinder.cpp ==========

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact( //3

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

The line marked with comment 3 ends up in IPCThreadState::transact. In its implementation below, the key lines are marked with comments 4 and 5:

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err = data.errorCheck();

flags |= TF_ACCEPT_FDS;

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "

<< handle << " / code " << TypeCode(code) << ": "

<< indent << data << dedent << endl;

}

if (err == NO_ERROR) {

LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),

(flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL); //4

}

if (err != NO_ERROR) {

if (reply) reply->setError(err);

return (mLastError = err);

}

if ((flags & TF_ONE_WAY) == 0) {

#if 0

if (code == 4) { // relayout

ALOGI(">>>>>> CALLING transaction 4");

} else {

ALOGI(">>>>>> CALLING transaction %d", code);

}

#endif

if (reply) {

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

#if 0

if (code == 4) { // relayout

ALOGI("<<<<<< RETURNING transaction 4");

} else {

ALOGI("<<<<<< RETURNING transaction %d", code);

}

#endif

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "

<< handle << ": ";

if (reply) alog << indent << *reply << dedent << endl;

else alog << "(none requested)" << endl;

}

} else {

err = waitForResponse(NULL, NULL); //5

}

return err;

}
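The flag handling in IPCThreadState::transact is easy to lose among the logging code, so here is a small sketch of just that logic. The constant values 0x01 and 0x10 are what I recall from the binder header and should be treated as assumptions; the class and method names are hypothetical.

```java
final class TransactFlagsSketch {
    // Assumed to mirror the native flag values; verify against your binder header.
    static final int TF_ONE_WAY = 0x01;
    static final int TF_ACCEPT_FDS = 0x10;

    // transact() always ORs in TF_ACCEPT_FDS before sending.
    static int prepareFlags(int flags) {
        return flags | TF_ACCEPT_FDS;
    }

    // A reply is awaited only when the one-way bit is clear.
    static boolean expectsReply(int flags) {
        return (flags & TF_ONE_WAY) == 0;
    }

    // Names the waitForResponse variant that transact() would pick.
    static String waitMode(int flags, boolean callerPassedReply) {
        if (!expectsReply(flags)) {
            return "waitForResponse(NULL, NULL)";
        }
        return callerPassedReply ? "waitForResponse(reply)" : "waitForResponse(&fakeReply)";
    }
}
```

With flags of 0, as passed by addService, the call is synchronous and the caller blocks until the reply arrives; with the one-way bit set, the thread only waits for the driver to acknowledge the send.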

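The call at comment 4 packs the parameters destined for the driver into a Parcel, which waitForResponse then consumes (comment 5, and likewise the synchronous branches above it). waitForResponse calls talkWithDriver; the key code is: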

======== /frameworks/native/libs/binder/IPCThreadState.cpp ==========

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

......

if ((err=talkWithDriver()) < NO_ERROR) break;

......

}

The key code of talkWithDriver:

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

.......

#if defined(HAVE_ANDROID_OS)

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0) //6

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

.......

}

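The line to focus on is the one marked with comment 6: through the ioctl system call, execution enters the driver's binder_ioctl function. Note that the binder device must first be opened and its memory mapping set up before ioctl can reach binder_ioctl in the driver. servicemanager does this when the system starts it at boot, as described below.

6.5 From the native layer to the driver layer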

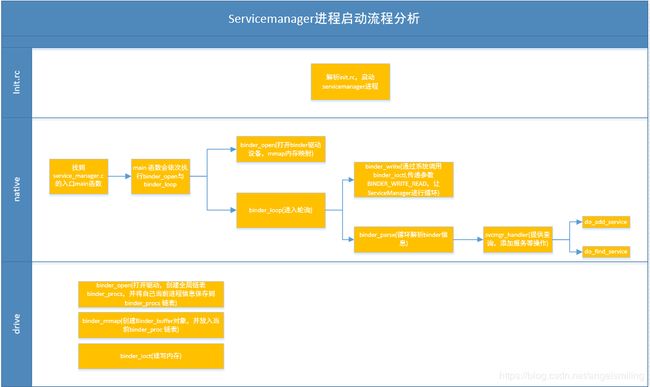

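Before looking at how the native layer calls into the driver, this section first walks through how the servicemanager process starts, which makes the native-to-driver call easier to follow.

The startup flow of the servicemanager process is as follows.

The servicemanager process is launched at boot when the init process parses init.rc. The relevant file is: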

=========== ./system/core/rootdir/init.rc ===========

service servicemanager /system/bin/servicemanager

class core

user system

group system

critical

onrestart restart healthd

onrestart restart zygote

onrestart restart media

onrestart restart surfaceflinger

onrestart restart drm

From this init.rc configuration, the servicemanager process is started from the executable /system/bin/servicemanager, which is built from service_manager.c.

Start with its entry point, main, and see what it does:

=========== ./frameworks/native/cmds/servicemanager/service_manager.c ===========

int main(int argc, char **argv)

{

struct binder_state *bs;

void *svcmgr = BINDER_SERVICE_MANAGER;

bs = binder_open(128*1024);

if (binder_become_context_manager(bs)) { //7

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

svcmgr_handle = svcmgr;

binder_loop(bs, svcmgr_handler); //8

return 0;

}

First, bs = binder_open(128*1024); judging by the name it opens something, and the argument looks like a size. The implementation of binder_open is in:

============ ./frameworks/native/cmds/servicemanager/binder.c ============

struct binder_state *binder_open(unsigned mapsize)

{

struct binder_state *bs;

bs = malloc(sizeof(*bs));

if (!bs) {

errno = ENOMEM;

return 0;

}

bs->fd = open("/dev/binder", O_RDWR);

if (bs->fd < 0) {

fprintf(stderr,"binder: cannot open device (%s)\n",

strerror(errno));

goto fail_open;

}

bs->mapsize = mapsize;

bs->mapped = mmap(NULL, mapsize, PROT_READ, MAP_PRIVATE, bs->fd, 0);

if (bs->mapped == MAP_FAILED) {

fprintf(stderr,"binder: cannot map device (%s)\n",

strerror(errno));

goto fail_map;

}

return bs;

fail_map:

close(bs->fd);

fail_open:

free(bs);

return 0;

}

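Go straight to bs->fd = open("/dev/binder", O_RDWR);. The open call corresponds to binder_open in the driver: it opens the binder device node, creates a binder_proc record for the calling process, and adds it to the global binder_procs list. Next comes mmap() for the memory mapping. Likewise, mmap() goes through a system call to the driver's binder_mmap(), which maps a kernel buffer and the process's user space onto the same physical memory. When data is later copied once into that kernel buffer, it is already visible in the target process's user space. This is one reason Binder is more efficient than traditional IPC.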
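The idea that two views of the same memory remove a copy can be illustrated, loosely, with two memory mappings of one file. This is only an analogy under stated assumptions: it maps an ordinary temporary file rather than /dev/binder, both views live in one process, and it relies on the platform keeping shared file mappings coherent (which Linux does, though the Java specification does not promise it). The class and method names are hypothetical.

```java
import java.io.IOException;
import java.io.RandomAccessFile;
import java.io.UncheckedIOException;
import java.nio.MappedByteBuffer;
import java.nio.channels.FileChannel;
import java.nio.file.Files;
import java.nio.file.Path;

final class SharedMappingSketch {
    // Writes a value through one mapping and reads it back through a second,
    // read-only mapping of the same region, without any explicit copy between them.
    static int writeThenReadBack(int value) {
        try {
            Path file = Files.createTempFile("binder-sketch", ".bin");
            file.toFile().deleteOnExit();
            try (RandomAccessFile raf = new RandomAccessFile(file.toFile(), "rw");
                 FileChannel channel = raf.getChannel()) {
                // Plays the role of the kernel-side view that receives the single copy.
                MappedByteBuffer writerView = channel.map(FileChannel.MapMode.READ_WRITE, 0, 4096);
                // Plays the role of the receiver's read-only view (compare PROT_READ above).
                MappedByteBuffer readerView = channel.map(FileChannel.MapMode.READ_ONLY, 0, 4096);
                writerView.putInt(0, value);
                return readerView.getInt(0);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

The reader never calls a read or copy routine; the data simply appears in its view, which is the property the binder mapping exploits.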

Now go back to main in service_manager.c and look at the code at comment 7:

if (binder_become_context_manager(bs)) { //7

ALOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

This makes the process the Binder "housekeeper" (the context manager). The whole Android system allows only one ServiceManager, so any later caller of this function will fail.
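The "first caller wins, everyone else fails" rule can be sketched with an atomic compare-and-set. This models only the rule itself; the real check lives in the driver, and the class name, the pid parameter, and the -1 sentinel are assumptions of this sketch.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class ContextManagerSketch {
    private static final int NONE = -1; // sentinel: no context manager yet
    private final AtomicInteger ownerPid = new AtomicInteger(NONE);

    // Returns true if pid became the context manager, false if the role is taken.
    boolean becomeContextManager(int pid) {
        return ownerPid.compareAndSet(NONE, pid);
    }

    // Returns the pid holding the role, or -1 if nobody does.
    int owner() {
        return ownerPid.get();
    }
}
```

A second call with a different pid returns false and leaves the original owner in place, matching the behaviour described above.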

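Continue tracing the code:

binder_loop(bs, svcmgr_handler); //8

This is an infinite loop that keeps reading client requests from the binder driver, parses what comes back, and hands each request to the handler. The implementation is: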

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res;

struct binder_write_read bwr;

unsigned readbuf[32];

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);

if (res == 0) {

ALOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

Two functions in binder_loop deserve attention: binder_write and binder_parse. First, the implementation of binder_write:

int binder_write(struct binder_state *bs, void *data, unsigned len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (unsigned) data;

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

}

Note that bwr.write_size is nonzero here, and bwr.write_buffer points to an array of unsigned whose first element is BC_ENTER_LOOPER.
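A single BINDER_WRITE_READ request can carry a write part, a read part, or both, and the two sizes decide which paths the driver takes. The sketch below models only that decision; it is a simplification with a hypothetical class name, and the returned strings merely name the driver functions that would run.

```java
import java.util.ArrayList;
import java.util.List;

final class WriteReadSketch {
    // Parameters correspond to the write_size and read_size fields of
    // struct binder_write_read (in bytes). Returns the driver paths taken, in order.
    static List<String> dispatch(long writeSize, long readSize) {
        List<String> steps = new ArrayList<>();
        if (writeSize > 0) {
            steps.add("binder_thread_write");
        }
        if (readSize > 0) {
            steps.add("binder_thread_read");
        }
        return steps;
    }
}
```

binder_write sets only the write part, so it corresponds to dispatch(len, 0); the loop body of binder_loop sets only the read part, so it corresponds to dispatch(0, sizeof(readbuf)).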

The ioctl call inside goes through a system call to binder_ioctl in the binder driver:

======= ./device/hisilicon/bigfish/sdk/source/kernel/linux-3.10.y/drivers/staging/android/binder.c ========

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

/*pr_info("binder_ioctl: %d:%d %x %lx\n", proc->pid, current->pid, cmd, arg);*/

trace_binder_ioctl(cmd, arg);

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

binder_lock(__func__);

thread = binder_get_thread(proc);

if (thread == NULL) {

ret = -ENOMEM;

goto err;

}

switch (cmd) {

case BINDER_WRITE_READ: {

struct binder_write_read bwr;

if (size != sizeof(struct binder_write_read)) {

ret = -EINVAL;

goto err;

}

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

binder_debug(BINDER_DEBUG_READ_WRITE,

"%d:%d write %ld at %08lx, read %ld at %08lx\n",

proc->pid, thread->pid, bwr.write_size,

bwr.write_buffer, bwr.read_size, bwr.read_buffer);

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread, (void __user *)bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, (void __user *)bwr.read_buffer, bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto err;

}

}

binder_debug(BINDER_DEBUG_READ_WRITE,

"%d:%d wrote %ld of %ld, read return %ld of %ld\n",

proc->pid, thread->pid, bwr.write_consumed, bwr.write_size,

bwr.read_consumed, bwr.read_size);

if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {

ret = -EFAULT;

goto err;

}

break;

}

}

ret = 0;

err:

if (thread)

thread->looper &= ~BINDER_LOOPER_STATE_NEED_RETURN;

binder_unlock(__func__);

wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret && ret != -ERESTARTSYS)

pr_info("%d:%d ioctl %x %lx returned %d\n", proc->pid, current->pid, cmd, arg, ret);

err_unlocked:

trace_binder_ioctl_done(ret);

return ret;

}

........

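The command passed in is BINDER_WRITE_READ, so find the matching case in the switch statement and see what it does.

copy_from_user copies the binder_write_read structure at ubuf from user space into kernel space.

Since bwr.write_size is greater than 0, execution enters binder_thread_write. That function is long, so only the relevant case is shown: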

case BC_ENTER_LOOPER:

binder_debug(BINDER_DEBUG_THREADS,

"%d:%d BC_ENTER_LOOPER\n",

proc->pid, thread->pid);

if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {

thread->looper |= BINDER_LOOPER_STATE_INVALID;

binder_user_error("%d:%d ERROR: BC_ENTER_LOOPER called after BC_REGISTER_LOOPER\n",

proc->pid, thread->pid);

}

thread->looper |= BINDER_LOOPER_STATE_ENTERED;

break;

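The function pulls the command out of bwr.write_buffer, which here is BC_ENTER_LOOPER. So the binder_write() call from user space mainly serves to mark the current thread's state as BINDER_LOOPER_STATE_ENTERED.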
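The looper field is a bit mask, and the BC_ENTER_LOOPER case is two bit operations on it. A minimal sketch follows; the numeric values are what I recall from the driver's enum and should be treated as assumptions, and the class and method names are hypothetical.

```java
final class LooperStateSketch {
    // Assumed to mirror BINDER_LOOPER_STATE_* in the driver; verify against your kernel tree.
    static final int REGISTERED = 0x01;
    static final int ENTERED = 0x02;
    static final int INVALID = 0x08;

    // Mirrors the BC_ENTER_LOOPER case: entering after registering is flagged
    // as invalid, and the ENTERED bit is set either way.
    static int onEnterLooper(int looper) {
        if ((looper & REGISTERED) != 0) {
            looper |= INVALID;
        }
        return looper | ENTERED;
    }

    static boolean hasEntered(int looper) {
        return (looper & ENTERED) != 0;
    }
}
```

Because the states are independent bits, a thread can be both ENTERED and INVALID at once, which is how the driver records a misuse without losing the rest of the state.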

Now return to this call in binder_loop:

res = binder_parse(bs, 0, readbuf, bwr.read_consumed, func);

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uint32_t *ptr, uint32_t size, binder_handler func)

{

int r = 1;

uint32_t *end = ptr + (size / 4);

while (ptr < end) {

uint32_t cmd = *ptr++;

#if TRACE

fprintf(stderr,"%s:\n", cmd_name(cmd));

#endif

switch(cmd) {

case BR_NOOP:

break;

case BR_TRANSACTION_COMPLETE:

break;

case BR_INCREFS:

case BR_ACQUIRE:

case BR_RELEASE:

case BR_DECREFS:

#if TRACE

fprintf(stderr," %08x %08x\n", ptr[0], ptr[1]);

#endif

ptr += 2;

break;

case BR_TRANSACTION: {

struct binder_txn *txn = (void *) ptr;

if ((end - ptr) * sizeof(uint32_t) < sizeof(struct binder_txn)) {

ALOGE("parse: txn too small!\n");

return -1;

}

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

res = func(bs, txn, &msg, &reply);

binder_send_reply(bs, &reply, txn->data, res);

}

ptr += sizeof(*txn) / sizeof(uint32_t);

break;

}

case BR_REPLY: {

struct binder_txn *txn = (void*) ptr;

if ((end - ptr) * sizeof(uint32_t) < sizeof(struct binder_txn)) {

ALOGE("parse: reply too small!\n");

return -1;

}

binder_dump_txn(txn);

if (bio) {

bio_init_from_txn(bio, txn);

bio = 0;

} else {

/* todo FREE BUFFER */

}

ptr += (sizeof(*txn) / sizeof(uint32_t));

r = 0;

break;

}

case BR_DEAD_BINDER: {

struct binder_death *death = (void*) *ptr++;

death->func(bs, death->ptr);

break;

}

case BR_FAILED_REPLY:

r = -1;

break;

case BR_DEAD_REPLY:

r = -1;

break;

default:

ALOGE("parse: OOPS %d\n", cmd);

return -1;

}

}

return r;

}
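The control flow of binder_parse is a cursor walking over a buffer of 32-bit words, where each command word determines how many payload words follow. The sketch below reproduces that shape and the same return convention (1 to keep looping, 0 after a reply, -1 on error). The command codes and the payload size are hypothetical small integers, not the real BR_* values, and the class and interface names are invented for the example.

```java
final class ParseSketch {
    // Hypothetical command codes; the real BR_* constants are ioctl-encoded.
    static final int NOOP = 0;
    static final int TRANSACTION_COMPLETE = 1;
    static final int INCREFS = 2;
    static final int TRANSACTION = 3;
    static final int REPLY = 4;
    static final int FAILED_REPLY = 5;
    // Hypothetical transaction payload size, in 32-bit words.
    static final int TXN_WORDS = 4;

    // Callback invoked for each transaction; offset points at its payload.
    interface Handler {
        void handle(int[] buf, int offset);
    }

    // sizeBytes is the number of valid bytes in buf, like bwr.read_consumed.
    static int parse(int[] buf, int sizeBytes, Handler func) {
        int r = 1;
        int ptr = 0;
        int end = sizeBytes / 4;
        while (ptr < end) {
            int cmd = buf[ptr++];
            switch (cmd) {
                case NOOP:
                case TRANSACTION_COMPLETE:
                    break;                      // no payload
                case INCREFS:
                    ptr += 2;                   // skip the two payload words
                    break;
                case TRANSACTION:
                    if (end - ptr < TXN_WORDS) {
                        return -1;              // truncated transaction
                    }
                    if (func != null) {
                        func.handle(buf, ptr);
                    }
                    ptr += TXN_WORDS;
                    break;
                case REPLY:
                    if (end - ptr < TXN_WORDS) {
                        return -1;              // truncated reply
                    }
                    ptr += TXN_WORDS;
                    r = 0;
                    break;
                case FAILED_REPLY:
                    r = -1;
                    break;
                default:
                    return -1;                  // unknown command
            }
        }
        return r;
    }
}
```

A caller such as binder_loop keeps going while the result is positive, which is why an ordinary buffer of transactions returns 1 and a reply or an error ends the loop.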

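binder_parse mainly parses binder messages. Its ptr argument points to readbuf, which the driver has filled with BR_* commands (its first word was BC_ENTER_LOOPER only for the initial binder_write), and func points to svcmgr_handler, so whenever a request arrives svcmgr_handler is called: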

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len);

ptr = do_find_service(bs, s, len, txn->sender_euid);

if (!ptr)

break;

bio_put_ref(reply, ptr);

return 0;

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

ptr = bio_get_ref(msg);

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

if (do_add_service(bs, s, len, ptr, txn->sender_euid, allow_isolated))

return -1;

break;

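The handler is long, so only the main part is excerpted. It shows that servicemanager offers service lookup and service registration, as well as listing all services.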
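At its heart, what servicemanager maintains is a table from service name to binder handle. The following sketch shows the three operations on such a table. It is a deliberate simplification under stated assumptions: the class and method names are hypothetical, handles are plain ints with 0 meaning "none", and the permission and death-notification handling of the real service manager is left out.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class ServiceRegistrySketch {
    // Name-to-handle table; insertion order is kept so listing is stable.
    private final Map<String, Integer> services = new LinkedHashMap<>();

    // Registration: returns 0 on success and -1 on rejection, echoing the
    // handler's return style. Re-registering a name replaces the old handle.
    int addService(String name, int handle) {
        if (name == null || name.isEmpty() || handle == 0) {
            return -1;
        }
        services.put(name, handle);
        return 0;
    }

    // Lookup: returns the handle, or 0 when the name is unknown.
    int checkService(String name) {
        Integer handle = services.get(name);
        return handle == null ? 0 : handle;
    }

    // Listing: returns the registered names in registration order.
    List<String> listServices() {
        return new ArrayList<>(services.keySet());
    }
}
```

Registration is what the addService walkthrough in this article ends in; lookup is the mirror-image path a client takes before it can call the service.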

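7. Summary

This article covered what Binder is and how it is implemented, which helps in understanding the Android system framework as a whole. It is still not the complete Binder story, though; it is at most half of it, focusing on how a service is registered. The other half is how a service is looked up, which follows broadly the same path; interested readers can explore it on their own.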