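Introducing Filebeat into ELK

Logstash needs a Java runtime to collect logs, and it is fairly heavyweight for that job: it consumes noticeable memory and CPU. Filebeat is lightweight by comparison and uses few server resources.

Filebeat is therefore usually chosen to collect the logs and forward them to Logstash for processing. Filebeat can also send directly to ES.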

filebeat>es>kibana

#192.168.1.104

cd /usr/local/src/

tar -zxf filebeat-6.6.0-linux-x86_64.tar.gz

mv filebeat-6.6.0-linux-x86_64 /usr/local/filebeat-6.6.0

Filebeat configuration for sending logs to ES:

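#/usr/local/filebeat-6.6.0/filebeat.yml
filebeat.inputs:
- type: log
  # start reading new files at the end instead of the beginning
  tail_files: true
  # wait time before checking a file again after reaching EOF
  backoff: "1s"
  paths:
    - /usr/local/nginx/logs/access.log
output:
  elasticsearch:
    hosts: ["192.168.1.105:9200"]

Startup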

#前台启动

/usr/local/filebeat-6.6.0/filebeat -e -c /usr/local/filebeat-6.6.0/filebeat.yml

#后台启动

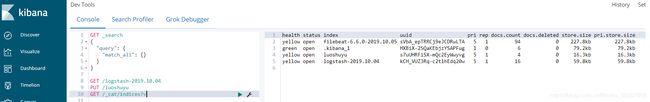

nohup ./filebeat -e -c filebeat.yml &Kibana上查看日志数据

这种适合查看日志,不适合具体日志的分析 ,不能分字段和过滤

filebeat>logstash>es>kibana

Configuring Filebeat to send to Logstash

filebeat.inputs:
- type: log
  tail_files: true
  backoff: "1s"
  paths:
    - /usr/local/nginx/logs/access.log
output:
  logstash:
    hosts: ["192.168.1.106:5044"]

Configure Logstash to listen on port 5044 and receive the logs sent by Filebeat

input {
  beats {
    host => '0.0.0.0'
    port => 5044
  }
}
filter {
  grok {
    # split the nginx access-log line into named fields
    match => {
      "message" => '(?<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - (?<user>[^ ]+) \[(?<requesttime>[^ ]+ \+[0-9]+)\] "(?<requesttype>[A-Z]+) (?<requesturl>[^ ]+) HTTP/\d\.\d" (?<status>[0-9]+) (?<bodysize>[0-9]+) "[^"]+" "(?<ua>[^"]+)"'
    }
    remove_field => ["message","@version","path"]
  }
  date {
    # use the request time from the log line as the event timestamp
    match => ["requesttime", "dd/MMM/yyyy:HH:mm:ss Z"]
    target => "@timestamp"
  }
}
output {
  elasticsearch {
    hosts => ["http://192.168.1.105:9200"]
  }
}
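To make the grok and date steps above more concrete, here is a minimal Python sketch of the same idea: a regular expression with named groups splits one access-log line into fields, and the request time is then parsed into a timestamp. It is an illustration only, not how Logstash is implemented. The group names other than `requesttime`, the `[^ ]+` pattern for the user field (so that `-` matches), the helper `parse_access_line`, and the sample line are all assumptions made for this sketch.

```python
import re
from datetime import datetime

# Python counterpart of the grok pattern. Group names other than
# requesttime are assumptions made for this sketch.
NGINX_ACCESS = re.compile(
    r'(?P<clientip>[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}) - '
    r'(?P<user>[^ ]+) '
    r'\[(?P<requesttime>[^ ]+ \+[0-9]+)\] '
    r'"(?P<requesttype>[A-Z]+) (?P<requesturl>[^ ]+) HTTP/\d\.\d" '
    r'(?P<status>[0-9]+) (?P<bodysize>[0-9]+) '
    r'"[^"]+" "(?P<ua>[^"]+)"'
)


def parse_access_line(line):
    """Return a dict of fields, or None when the line does not match."""
    m = NGINX_ACCESS.search(line)
    if m is None:
        return None
    event = m.groupdict()
    # Counterpart of the date filter format dd/MMM/yyyy:HH:mm:ss Z
    event["@timestamp"] = datetime.strptime(
        event["requesttime"], "%d/%b/%Y:%H:%M:%S %z"
    )
    return event


# Hypothetical sample line in nginx's default access-log layout.
sample = ('192.168.1.10 - - [24/Feb/2019:17:48:47 +0800] '
          '"GET /index.html HTTP/1.1" 200 612 "-" "curl/7.29.0"')
event = parse_access_line(sample)
print(event["clientip"], event["status"], event["requesturl"])
```

Note that every captured value is a string here, including `status` and `bodysize`; a line that does not fit the pattern yields `None`, which loosely corresponds to a grok parse failure.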

Using JSON-format logs in Nginx

# Nginx configuration
log_format json '{"@timestamp":"$time_iso8601",'
                '"clientip":"$remote_addr",'
                '"status":$status,'
                '"bodysize":$body_bytes_sent,'
                '"referer":"$http_referer",'
                '"ua":"$http_user_agent",'
                '"handletime":$request_time,'
                '"url":"$uri"}';
# keep the default-format log and also write a JSON-format log
access_log logs/access.log;
access_log logs/access.json.log json;
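As a rough illustration of why the JSON format is convenient downstream, the sketch below parses one line of the shape this `log_format` would produce. No regular expression is needed, and the unquoted fields come out as numbers. The sample values are hypothetical.

```python
import json

# Hypothetical line of the shape produced by the log_format above.
line = ('{"@timestamp":"2019-02-24T17:48:47+08:00",'
        '"clientip":"192.168.1.10",'
        '"status":200,'
        '"bodysize":612,'
        '"referer":"-",'
        '"ua":"curl/7.29.0",'
        '"handletime":0.003,'
        '"url":"/index.html"}')

event = json.loads(line)

# status, bodysize and handletime are unquoted in the log_format,
# so they arrive as numbers rather than strings.
print(type(event["status"]).__name__, type(event["handletime"]).__name__)
print(event["clientip"], event["url"])
```

Because the field names are fixed in the nginx configuration, the parsing side only has to decode JSON and drop the fields it does not want.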

# Filebeat collecting the JSON-format log

filebeat.inputs:
- type: log
  tail_files: true
  backoff: "1s"
  paths:
    - /usr/local/nginx/logs/access.json.log
output:
  logstash:
    hosts: ["192.168.1.105:5044"]

# Logstash parsing the JSON log

input {
  beats {
    host => '0.0.0.0'
    port => 5044
  }
}
filter {
  json {
    # decode the JSON text in "message" into top-level fields
    source => "message"
    remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]
  }
}
output {
  elasticsearch {
    hosts => ["http://192.168.1.105:9200"]
  }
}

Collecting multiple logs with Filebeat

filebeat.inputs:
- type: log
  tail_files: true
  backoff: "1s"
  paths:
    - /usr/local/nginx/logs/access.json.log
  # tag events from this input so Logstash can tell them apart
  fields:
    type: access
  # put "type" at the top level of the event instead of under "fields"
  fields_under_root: true
- type: log
  tail_files: true
  backoff: "1s"
  paths:
    - /var/log/secure
  fields:
    type: secure
  fields_under_root: true
output:
  logstash:
    hosts: ["192.168.1.105:5044"]

# Logstash branches on the type field

input {
  beats {
    host => '0.0.0.0'
    port => 5044
  }
}
filter {
  # only the nginx access log is JSON, so only it goes through the json filter
  if [type] == "access" {
    json {
      source => "message"
      remove_field => ["message","@version","path","beat","input","log","offset","prospector","source","tags"]
    }
  }
}
output {
  if [type] == "access" {
    elasticsearch {
      hosts => ["http://192.168.1.105:9200"]
      index => "access-%{+YYYY.MM.dd}" # specify the index
    }
  }
  else if [type] == "secure" {
    elasticsearch {
      hosts => ["http://192.168.1.105:9200"]
      index => "secure-%{+YYYY.MM.dd}" # specify the index
    }
  }
}

At this point, the filebeat>logstash>es>kibana architecture is complete.
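The type-based routing in the Logstash output above can be sketched in a few lines of Python. This is only an illustration of the decision logic, not Logstash code. The helper name `index_for` and the sample events are hypothetical. The third call shows why `fields_under_root: true` matters: without it, `type` would sit under `fields` and neither branch would match.

```python
from datetime import datetime, timezone


def index_for(event):
    """Pick an index name from the event's top-level `type` field.

    Mirrors the if / else if in the output section; returns None for
    events that match neither branch.
    """
    if event.get("type") not in ("access", "secure"):
        return None
    # %{+YYYY.MM.dd} is formatted from the event's @timestamp, in UTC.
    day = event["@timestamp"].astimezone(timezone.utc).strftime("%Y.%m.%d")
    return f'{event["type"]}-{day}'


ts = datetime(2019, 2, 24, 17, 48, 47, tzinfo=timezone.utc)
print(index_for({"type": "access", "@timestamp": ts}))
print(index_for({"type": "secure", "@timestamp": ts}))
# `type` nested under `fields`, as it would be without fields_under_root
print(index_for({"fields": {"type": "access"}, "@timestamp": ts}))
```

With one index per log type per day, each log source can be given its own index pattern in Kibana.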