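A detailed walkthrough of installing kubernetes and istio

Before we start

First, my machine setup: three freshly provisioned bare-metal machines, configured as follows

- 10.20.1.103 4C 8G 50G disk node4 master centos7

- 10.20.1.104 4C 8G 50G disk node5 node centos7

- 10.20.1.105 4C 8G 50G disk node6 node centos7

Don't forget to set each machine's hostname and add the matching entries to the hosts file; otherwise every machine will be called localhost, and kubeadm join also runs into problems when all nodes share that name, since node names must be unique.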
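As a rough sketch of the hostname and hosts setup, the snippet below prints the three mappings from the machine list above. The helper name `cluster_hosts` is made up for illustration, and the commented commands assume you run them as root on each machine.

```shell
# Print the hosts entries for the three machines listed above.
# (cluster_hosts is a hypothetical helper name, not part of any tool.)
cluster_hosts() {
  cat <<'EOF'
10.20.1.103 node4
10.20.1.104 node5
10.20.1.105 node6
EOF
}

# On each machine (as root), using that machine's own name in place of node4:
#   hostnamectl set-hostname node4
#   cluster_hosts >> /etc/hosts
```

Printing the entries first makes it easy to check them before appending anything to /etc/hosts.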

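My installation follows kubeadm, the method recommended by the official k8s docs, and the istio install follows its official guide as well. The original documents are at the links below.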

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

https://istio.io/docs/setup/getting-started/

I assume here that docker is already installed.

That's about it; let's get started!

kubernetes

step1 Install common tools

yum install -y vim wget

step2 Verify the machines

Verify that every node is unique (MAC address and product_uuid); fresh machines usually have no problem here.

ip link

sudo cat /sys/class/dmi/id/product_uuid

step3 Open ports

Open the required ports on every machine; this needs little explanation.

// run on the master

[root@localhost ~]# firewall-cmd --zone=public --add-port=6443/tcp --permanent

success

[root@localhost ~]# firewall-cmd --zone=public --add-port=2379/tcp --permanent

success

[root@localhost ~]# firewall-cmd --zone=public --add-port=2380/tcp --permanent

success

[root@localhost ~]# firewall-cmd --zone=public --add-port=10250/tcp --permanent

success

[root@localhost ~]# firewall-cmd --zone=public --add-port=10251/tcp --permanent

success

[root@localhost ~]# firewall-cmd --zone=public --add-port=10252/tcp --permanent

success

[root@localhost ~]# firewall-cmd --reload

success

// run on each worker node

[root@localhost ~]# firewall-cmd --zone=public --add-port=10250/tcp --permanent

success

[root@localhost ~]# firewall-cmd --reload

success
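The repeated firewall-cmd calls above can be generated by a small loop. This is only a sketch: the helper name `open_port_commands` is made up, and the port lists are copied from the commands above rather than taken from any other source.

```shell
MASTER_PORTS="6443 2379 2380 10250 10251 10252"   # ports opened on the master above
NODE_PORTS="10250"                                # port opened on each worker above

# Print the firewall-cmd commands for a space-separated list of TCP ports.
# (open_port_commands is a hypothetical helper name.)
open_port_commands() {
  for port in $1; do
    echo "firewall-cmd --zone=public --add-port=$port/tcp --permanent"
  done
  echo "firewall-cmd --reload"
}

open_port_commands "$MASTER_PORTS"          # review the commands on the master
# open_port_commands "$MASTER_PORTS" | sh   # then apply them (as root)
# open_port_commands "$NODE_PORTS" | sh     # on each worker node
```

The function only prints commands, so nothing changes on the machine until the output is piped to sh.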

step4 Install the components

Install kubeadm, kubelet and kubectl

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Up to this point, unless you change how you reach the internet, the install will not succeed; it fails as shown below. This is normal.

[root@localhost ~]# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Loaded plugins: fastestmirror

Loading mirror speeds from cached hostfile

* base: mirrors.huaweicloud.com

* extras: mirrors.huaweicloud.com

* updates: mirrors.huaweicloud.com

https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64/repodata/repomd.xml: [Errno 14] curl#7 - "Failed to connect to 2404:6800:4012::200e: Network is unreachable"

Trying other mirror.

https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64/repodata/repomd.xml: [Errno 14] curl#7 - "Failed to connect to 2404:6800:4012::200e: Network is unreachable"

Trying other mirror.

^Chttps://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64/repodata/repomd.xml: [Errno 14] curl#56 - "Callback aborted"

Trying other mirror.

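Because we can't reach google, we change the repo source and download from somewhere else. The modified file is shown in the block below; the change amounts to commenting out two lines and pointing them at a mirror instead.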

vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

# baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

# gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

exclude=kube*
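For a non-interactive version of the edit above, the repo file can be written with a heredoc, after which the install command has to be run again. This is a sketch only: `write_k8s_repo` is a made-up helper name, and the file contents are the same lines shown above with the commented-out entries dropped.

```shell
# Write the mirror-based repo definition to the given path.
# (write_k8s_repo is a hypothetical helper name.)
write_k8s_repo() {
  cat > "$1" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
exclude=kube*
EOF
}

# On a real machine (as root):
#   write_k8s_repo /etc/yum.repos.d/kubernetes.repo
#   yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
```

Because the file contains exclude=kube*, the --disableexcludes=kubernetes flag on the install command is what lets yum see the packages.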

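Verify the installation; we can see it succeeded (the connection-refused line from kubectl is expected, because no cluster is running yet)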

[root@localhost ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.1", GitCommit:"d224476cd0730baca2b6e357d144171ed74192d6", GitTreeState:"clean", BuildDate:"2020-01-14T21:04:32Z", GoVersion:"go1.13.5", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

[root@localhost ~]# kubelet --version

Kubernetes v1.17.1

[root@localhost ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.1", GitCommit:"d224476cd0730baca2b6e357d144171ed74192d6", GitTreeState:"clean", BuildDate:"2020-01-14T21:02:14Z", GoVersion:"go1.13.5", Compiler:"gc", Platform:"linux/amd64"}

Start kubelet

systemctl enable --now kubelet

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl --system

systemctl daemon-reload

systemctl restart kubelet

At this point we're halfway there. The next step is to bring up the cluster.

step5 Start the cluster

// start the master first

[root@localhost ~]# kubeadm init --kubernetes-version=v1.17.0

W0117 17:16:23.316968 11538 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0117 17:16:23.317084 11538 validation.go:28] Cannot validate kubelet config - no validator is available

[init] Using Kubernetes version: v1.17.0

[preflight] Running pre-flight checks

[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

After running the command we see some warnings and an error, so we run the following commands to stop the firewall and turn off swap

systemctl stop firewalld

swapoff -a
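Note that swapoff -a only lasts until the next reboot. One way to make it permanent, sketched below, is to comment out the swap entry in /etc/fstab. The helper name `disable_swap_in_fstab` is made up, and the sketch assumes a conventional fstab where the swap line has the word swap as a whitespace-separated field.

```shell
# Comment out every active swap entry in an fstab-style file,
# keeping a backup copy next to it with a .bak suffix.
# (disable_swap_in_fstab is a hypothetical helper name.)
disable_swap_in_fstab() {
  sed -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# On a real machine (as root):
#   swapoff -a
#   disable_swap_in_fstab /etc/fstab
#   systemctl disable --now firewalld   # keeps the firewall off after a reboot too
```

Check the resulting file before rebooting; the .bak copy can be restored if the pattern commented out something unexpected.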

Run it again and only one warning is left; switching docker's cgroup driver takes care of it. Edit the file as shown below, then restart docker

[root@node1 ~]# vim /etc/docker/daemon.json

{

"exec-opts":["native.cgroupdriver=systemd"]

}

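After the restart we run it once more, and it will certainly fail again. The reason is simple and the same as the install failure: we can't reach google, so the images can't be pulled.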

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.17.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.17.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.17.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.17.0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.1: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.4.3-0: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.6.5: output: Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

, error: exit status 1

So our task now is to get hold of the images listed below.

[root@node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.17.0 7d54289267dc 5 weeks ago 116MB

k8s.gcr.io/kube-scheduler v1.17.0 78c190f736b1 5 weeks ago 94.4MB

k8s.gcr.io/kube-apiserver v1.17.0 0cae8d5cc64c 5 weeks ago 171MB

k8s.gcr.io/kube-controller-manager v1.17.0 5eb3b7486872 5 weeks ago 161MB

k8s.gcr.io/coredns 1.6.5 70f311871ae1 2 months ago 41.6MB

k8s.gcr.io/etcd 3.4.3-0 303ce5db0e90 2 months ago 288MB

k8s.gcr.io/pause 3.1 da86e6ba6ca1 2 years ago 742kB

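I think this is probably the most tedious step. There are many ways to do it; briefly:

- Pull the images from the Aliyun mirror and retag them

- Scavenge for them on dockerhub

- Like this post and leave the blogger a comment

- Put your own thinking cap on…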
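The first option can be sketched as a loop that pulls each image from a mirror and retags it under the k8s.gcr.io name kubeadm expects. The mirror address registry.aliyuncs.com/google_containers is an assumption on my part (a commonly used Aliyun mirror, not something the steps above specify), and `retag_commands` is a made-up helper name; the image list is copied from the docker images output above.

```shell
# Assumption: this mirror hosts the same image names and tags as k8s.gcr.io.
MIRROR=registry.aliyuncs.com/google_containers

# Image list copied from the docker images output above.
IMAGES="kube-apiserver:v1.17.0 kube-controller-manager:v1.17.0 kube-scheduler:v1.17.0 kube-proxy:v1.17.0 pause:3.1 etcd:3.4.3-0 coredns:1.6.5"

# Print the docker commands that pull each image from the mirror,
# retag it as k8s.gcr.io/<name>, and drop the mirror tag again.
# (retag_commands is a hypothetical helper name.)
retag_commands() {
  for image in $IMAGES; do
    echo "docker pull $MIRROR/$image"
    echo "docker tag $MIRROR/$image k8s.gcr.io/$image"
    echo "docker rmi $MIRROR/$image"
  done
}

retag_commands            # review the commands first
# retag_commands | sh     # then run them for real
```

kubeadm init also accepts an --image-repository flag, so pointing it at the same mirror may avoid the retagging altogether; I did not use that route in this walkthrough.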

Then run the cluster init command again. Below is only an excerpt of the log; we can see that it started successfully.

kubeadm init --kubernetes-version=v1.17.0

......

......

......

[bootstrap-token] Using token: zlkmry.z97kut9e1ezjgetm

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.20.1.103:6443 --token zlkmry.z97kut9e1ezjgetm \

--discovery-token-ca-cert-hash sha256:ebe4e4f09e46e4b1fff8e6d81fdc9854fb23b64d05258d52e4afbd84136bb4c7

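When joining the nodes, if you changed the hostname but did not configure the hosts mapping, you will get the warnings below. This is expected; you need to fix the mapping.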

[WARNING Hostname]: hostname "node4" could not be reached

[WARNING Hostname]: hostname "node4": lookup node6 on 8.8.8.8:53: no such host

Run on the master node

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join the remaining nodes to the cluster

kubeadm join 10.20.1.103:6443 --token zlkmry.z97kut9e1ezjgetm \

--discovery-token-ca-cert-hash sha256:ebe4e4f09e46e4b1fff8e6d81fdc9854fb23b64d05258d52e4afbd84136bb4c7

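From the output below we can see two things. 1: the cluster network has not been initialized, so every node is in the NotReady state. 2: the images have not been pulled on the two worker nodes. The cluster network is solved with calico in the next step. For the images, we pack the master's images with docker save, send the archive to the two worker nodes, and unpack it there with docker load, which solves the image problem on both workers!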

[root@localhost ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node4 NotReady master 7m6s v1.17.1

node5 NotReady <none> 79s v1.17.1

node6 NotReady <none> 69s v1.17.1

[root@localhost ~]# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-6955765f44-qft45 0/1 Pending 0 6m56s <none> <none> <none> <none>

kube-system coredns-6955765f44-wtzp2 0/1 Pending 0 6m56s <none> <none> <none> <none>

kube-system etcd-node4 1/1 Running 0 6m43s 10.20.1.103 node4 <none> <none>

kube-system kube-apiserver-node4 1/1 Running 0 6m43s 10.20.1.103 node4 <none> <none>

kube-system kube-controller-manager-node4 1/1 Running 0 6m43s 10.20.1.103 node4 <none> <none>

kube-system kube-proxy-4cx77 0/1 ContainerCreating 0 71s 10.20.1.105 node6 <none> <none>

kube-system kube-proxy-lw9xf 1/1 Running 0 6m56s 10.20.1.103 node4 <none> <none>

kube-system kube-proxy-zfzqj 0/1 ContainerCreating 0 80s 10.20.1.104 node5 <none> <none>

kube-system kube-scheduler-node4 1/1 Running 0 6m43s 10.20.1.103 node4 <none> <none>
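The docker save / docker load transfer described above can be sketched as follows. The archive name, the use of scp and ssh as root, and the helper name `transfer_commands` are all assumptions for illustration; the worker addresses come from the machine list at the top.

```shell
WORKERS="10.20.1.104 10.20.1.105"   # worker IPs from the machine list
ARCHIVE=k8s-images.tar              # hypothetical archive name

# Print the commands that pack every k8s.gcr.io image on the master
# and load the archive on each worker.
# (transfer_commands is a hypothetical helper name.)
transfer_commands() {
  echo "docker save -o $ARCHIVE \$(docker images --format '{{.Repository}}:{{.Tag}}' | grep '^k8s.gcr.io/')"
  for host in $WORKERS; do
    echo "scp $ARCHIVE root@$host:/root/$ARCHIVE"
    echo "ssh root@$host docker load -i /root/$ARCHIVE"
  done
}

transfer_commands            # review the commands on the master
# transfer_commands | sh     # then run them for real
```

Once the images are loaded, the kube-proxy pods stuck in ContainerCreating should be able to start on the workers.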

Create the cluster network; calico is used here

kubectl apply -f https://docs.projectcalico.org/v3.8/manifests/calico.yaml

At this point your k8s cluster should be healthy, as shown below. The next step is installing istio!

[root@localhost ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node4 Ready master 23m v1.17.1

node5 Ready <none> 17m v1.17.1

node6 Ready <none> 17m v1.17.1

[root@localhost ~]# kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system calico-kube-controllers-5c45f5bd9f-wx2g5 1/1 Running 0 5m16s 192.168.139.2 node6 <none> <none>

kube-system calico-node-7wthb 1/1 Running 0 5m16s 10.20.1.103 node4 <none> <none>

kube-system calico-node-fzdcj 1/1 Running 0 5m16s 10.20.1.105 node6 <none> <none>

kube-system calico-node-glf8p 1/1 Running 0 5m16s 10.20.1.104 node5 <none> <none>

kube-system coredns-6955765f44-qft45 1/1 Running 0 23m 192.168.139.1 node6 <none> <none>

kube-system coredns-6955765f44-wtzp2 1/1 Running 0 23m 192.168.139.3 node6 <none> <none>

kube-system etcd-node4 1/1 Running 0 23m 10.20.1.103 node4 <none> <none>

kube-system kube-apiserver-node4 1/1 Running 0 23m 10.20.1.103 node4 <none> <none>

kube-system kube-controller-manager-node4 1/1 Running 0 23m 10.20.1.103 node4 <none> <none>

kube-system kube-proxy-4cx77 1/1 Running 0 17m 10.20.1.105 node6 <none> <none>

kube-system kube-proxy-lw9xf 1/1 Running 0 23m 10.20.1.103 node4 <none> <none>

kube-system kube-proxy-zfzqj 1/1 Running 0 17m 10.20.1.104 node5 <none> <none>

kube-system kube-scheduler-node4 1/1 Running 0 23m 10.20.1.103 node4 <none> <none>

istio

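step1 Download istio

Download the stable istio release; at the time I installed, the version was 1.4.3

curl -L https://istio.io/downloadIstio | sh -

step2 Start istio

Install istio with the demo profile (istioctl lives in the bin directory of the downloaded istio-1.4.3 folder)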

./istioctl manifest apply --set profile=demo

The output is as follows:

- Applying manifest for component Base...

✔ Finished applying manifest for component Base.

- Applying manifest for component Tracing...

- Applying manifest for component IngressGateway...

- Applying manifest for component EgressGateway...

- Applying manifest for component Policy...

- Applying manifest for component Galley...

- Applying manifest for component Citadel...

- Applying manifest for component Telemetry...

- Applying manifest for component Injector...

- Applying manifest for component Prometheus...

- Applying manifest for component Pilot...

- Applying manifest for component Kiali...

- Applying manifest for component Grafana...

✔ Finished applying manifest for component Injector.

✔ Finished applying manifest for component Prometheus.

✔ Finished applying manifest for component Galley.

✔ Finished applying manifest for component Tracing.

✔ Finished applying manifest for component Pilot.

✔ Finished applying manifest for component IngressGateway.

✔ Finished applying manifest for component Citadel.

✔ Finished applying manifest for component Policy.

✔ Finished applying manifest for component Kiali.

✔ Finished applying manifest for component EgressGateway.

✔ Finished applying manifest for component Grafana.

✔ Finished applying manifest for component Telemetry.

✔ Installation complete

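Check the pods. The images istio needs can be pulled directly, with no detours required. On a slow network, expect to wait three to five minutes before the pods come up!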

[root@localhost ~]# kubectl get pods -n istio-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

grafana-6b65874977-dr9gc 1/1 Running 0 9m36s 192.168.139.8 node6 <none> <none>

istio-citadel-f78ff689-dvghc 1/1 Running 0 9m38s 192.168.139.5 node6 <none> <none>

istio-egressgateway-7b6b69ddcd-gzp4n 0/1 Running 0 9m44s 192.168.33.131 node5 <none> <none>

istio-galley-69674cb559-w7lhq 1/1 Running 0 9m40s 192.168.33.135 node5 <none> <none>

istio-ingressgateway-649f9646d4-86snz 0/1 Running 0 9m42s 192.168.33.132 node5 <none> <none>

istio-pilot-7989874664-nspww 0/1 Running 0 9m40s 192.168.139.4 node6 <none> <none>

istio-policy-5cdbc47674-5xx24 1/1 Running 6 9m38s 192.168.139.7 node6 <none> <none>

istio-sidecar-injector-7dd87d7989-9984l 1/1 Running 0 9m41s 192.168.33.133 node5 <none> <none>

istio-telemetry-6dccd56cf4-4h2pd 1/1 Running 7 9m40s 192.168.33.130 node5 <none> <none>

istio-tracing-c66d67cd9-c8z89 1/1 Running 0 9m42s 192.168.33.129 node5 <none> <none>

kiali-8559969566-bpnn4 1/1 Running 0 9m37s 192.168.139.6 node6 <none> <none>

prometheus-66c5887c86-zg26g 1/1 Running 0 9m41s 192.168.33.134 node5 <none> <none>

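step3 Automatic injection

Enable automatic sidecar injection for the default namespace:

kubectl label namespace default istio-injection=enabled

step4 Deploy the sample

Start the bookinfo sample; its yaml file was already downloaded along with istio

kubectl apply -f samples/bookinfo/platform/kube/bookinfo.yaml

Check the result; startup is slow and takes a minute or two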

[root@localhost istio-1.4.3]# kubectl get pod -n default

NAME READY STATUS RESTARTS AGE

details-v1-78d78fbddf-zhz6h 2/2 Running 0 5m31s

productpage-v1-596598f447-x4tnb 2/2 Running 0 5m31s

ratings-v1-6c9dbf6b45-9mv64 2/2 Running 0 5m32s

reviews-v1-7bb8ffd9b6-7dtbg 2/2 Running 0 5m32s

reviews-v2-d7d75fff8-bmlkv 2/2 Running 0 5m32s

reviews-v3-68964bc4c8-5sxpr 2/2 Running 0 5m32s

Create the ingress gateway:

kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml

Result:

[root@localhost istio-1.4.3]# kubectl get gateway -n default

NAME AGE

bookinfo-gateway 6m52s

[root@localhost istio-1.4.3]# kubectl get vs -n default

NAME GATEWAYS HOSTS AGE

bookinfo [bookinfo-gateway] [*] 6m57s

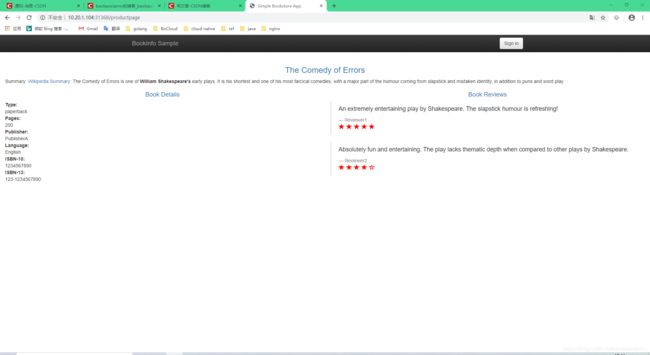

创建成功之后,尝试从网页访问:
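To find the address to open, one option on a bare-metal cluster like this is to go through the NodePort of the istio-ingressgateway service. The sketch below builds the URL from a node IP and a port; `productpage_url` is a made-up helper name, and the kubectl lookup in the comments assumes the gateway service exposes a port named http2, as the demo profile normally does.

```shell
# Build the bookinfo URL from a node address and the gateway's NodePort.
# (productpage_url is a hypothetical helper name.)
productpage_url() {
  echo "http://$1:$2/productpage"
}

# On the master (with kubectl configured), look up the NodePort and test it:
#   INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway \
#     -o jsonpath='{.spec.ports[?(@.name=="http2")].nodePort}')
#   curl -s -o /dev/null -w '%{http_code}\n' "$(productpage_url 10.20.1.103 "$INGRESS_PORT")"
```

Any node IP from the machine list should work in place of 10.20.1.103, since a NodePort is opened on every node.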

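That covers the whole deployment process in detail. If you run into any problems, feel free to leave a comment, and if this was useful to you, don't forget to give it a like!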