Quickly Deploying Kafka on K8S (Accessible from Outside the Cluster)

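How to deploy quickly

- With Helm, Kafka can be deployed in just a few steps;

- Both Kafka and Zookeeper need persistent storage; if a StorageClass has been prepared in advance, providing that storage becomes very simple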

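Reference articles

This walkthrough relies on K8S, Helm, NFS and StorageClass as prerequisites. For how to install and use them, see:

- "Installing kubernetes 1.15 with kubespray 2.11"

- "Deploying and Trying Out Helm (Version 2.16.1)"

- "Installing and Using NFS on Ubuntu 16"

- "Using the NFS of a Synology DS218+ from K8S"

- "StorageClass in Practice on K8S (NFS)"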

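Environment

The operating system and software versions used in this walkthrough are:

- Kubernetes: 1.15

- Kubernetes hosts: CentOS Linux release 7.7.1908

- NFS service: IP address 192.168.50.135, directory /volume1/nfs-storageclass-test

- Helm: 2.16.1

- Kafka: 2.0.1

- Zookeeper: 3.5.5

Before continuing, make sure K8S, Helm, NFS and a StorageClass are ready;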

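Steps

- Add the helm repository (it contains the kafka chart): helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator

- Download the kafka chart: helm fetch incubator/kafka

- After the download succeeds, the current directory contains the archive kafka-0.20.8.tgz; extract it: tar -zxvf kafka-0.20.8.tgz

- Enter the extracted kafka directory and edit values.yaml; the specific changes are listed in the next four items:

- First, to make the kafka service usable from outside K8S, change the value of external.enabled to true;

- Find configurationOverrides and uncomment the two entries that are commented out by default (in this chart they are advertised.listeners and listener.security.protocol.map), then put the IP of the K8S host into the advertised listener address. If you have configured cross-network access to kafka before, the reason for writing the host IP will be familiar: clients connect to whatever address the broker advertises, so that address must be reachable from outside the cluster;

- Next, configure the data volume: find persistence, adjust the size as needed, and set the name of the StorageClass prepared earlier;

- Configure the data volume for zookeeper in the same way;

- With the configuration done, start the deployment. First create the namespace: kubectl create namespace kafka-test

- In the kafka directory, run: helm install --name-template kafka -f values.yaml . --namespace kafka-test

- If the configuration above is correct, helm prints the status of the new release to the console;

- kafka depends on zookeeper at startup, so the whole startup takes several minutes, during which the zookeeper and kafka pods come up one after another: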

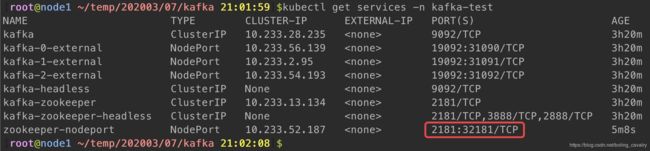
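For reference, the values.yaml edits described in the steps above could also be kept in a separate override file instead of being made in place. The following is only a sketch: it assumes the key layout of the incubator/kafka chart (external.enabled, configurationOverrides, persistence, zookeeper.persistence), and the file name kafka-overrides.yaml and the 1Gi sizes are hypothetical. The host IP 192.168.50.135 and the StorageClass name managed-nfs-storage are the ones that appear elsewhere in this article. Check every key against the values.yaml you actually unpacked before relying on it.

```shell
# Sketch: write the overrides to their own file (file name is an assumption).
OVERRIDES_FILE="${OVERRIDES_FILE:-kafka-overrides.yaml}"

# The quoted 'EOF' stops this shell from expanding $((...)) and
# ${KAFKA_BROKER_ID}; they must reach the chart unexpanded so that each
# broker can compute its own external port.
cat > "$OVERRIDES_FILE" <<'EOF'
external:
  enabled: true
configurationOverrides:
  "advertised.listeners": |-
    EXTERNAL://192.168.50.135:$((31090 + ${KAFKA_BROKER_ID}))
  "listener.security.protocol.map": |-
    PLAINTEXT:PLAINTEXT,EXTERNAL:PLAINTEXT
persistence:
  enabled: true
  size: "1Gi"                          # assumed size, adjust as needed
  storageClass: "managed-nfs-storage"
zookeeper:
  persistence:
    enabled: true
    size: "1Gi"                        # assumed size, adjust as needed
    storageClass: "managed-nfs-storage"
EOF

echo "wrote $OVERRIDES_FILE"

# Possible use from the chart directory (not executed here):
#   helm install --name-template kafka -f values.yaml -f kafka-overrides.yaml . --namespace kafka-test
```

When several -f files are given, helm lets later files override earlier ones, so the chart's original values.yaml can stay untouched.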

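- Check the services: kubectl get services -n kafka-test. The output lists the external NodePort services, so kafka can be reached from outside through host IP:31090, host IP:31091 and host IP:31092;

- Check the kafka version: kubectl exec kafka-0 -n kafka-test -- sh -c 'ls /usr/share/java/kafka/kafka_*.jar'. The jar names show scala version 2.11 and kafka version 2.0.1;

- Once kafka has started successfully, the next step is to verify that the service works;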
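The three external addresses above follow a simple pattern: broker i is reached on NodePort 31090 + i. As a small sketch, the helper below (the function name bootstrap_servers is made up for illustration) builds the comma-separated list that Kafka clients accept as a bootstrap list, assuming consecutive ports starting at 31090 and three brokers as in this deployment.

```shell
# Build "host:port,host:port,..." for brokers exposed on consecutive NodePorts.
# Arguments: host, first external port, number of brokers.
bootstrap_servers() {
  host="$1"
  first_port="$2"
  brokers="$3"
  list=""
  i=0
  while [ "$i" -lt "$brokers" ]; do
    # Prepend a comma only when the list already has an entry.
    list="${list}${list:+,}${host}:$((first_port + i))"
    i=$((i + 1))
  done
  printf '%s\n' "$list"
}

bootstrap_servers 192.168.50.135 31090 3
```

Passing all three addresses rather than one lets a client bootstrap even if the first broker happens to be down.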

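Exposing zookeeper externally

- Operating kafka remotely sometimes requires a connection to zookeeper, so zookeeper needs to be exposed as well;

- Create the file zookeeper-nodeport-svc.yaml with the following content:

apiVersion: v1
kind: Service
metadata:
  name: zookeeper-nodeport
  namespace: kafka-test
spec:
  type: NodePort
  ports:
    - port: 2181
      nodePort: 32181
  selector:
    app: zookeeper
    release: kafka

- Create the service from that file: kubectl apply -f zookeeper-nodeport-svc.yaml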

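Verifying the kafka service

Install the kafka package on any computer; the commands bundled with it can then connect to and operate the kafka running in K8S:

1. Visit the kafka website: http://kafka.apache.org/downloads . The broker was just found to use scala version 2.11 and kafka version 2.0.1, so download the matching package, kafka_2.11-2.0.1;

2. Extract it and enter the directory kafka_2.11-2.0.1/bin

3. List the current topics (nothing is listed yet):

./kafka-topics.sh --list --zookeeper 192.168.50.135:32181

4. Create a topic named test001:

./kafka-topics.sh --create --zookeeper 192.168.50.135:32181 --replication-factor 1 --partitions 1 --topic test001

After the topic is created, listing the topics again now shows test001.

5. Describe the topic named test001:

./kafka-topics.sh --describe --zookeeper 192.168.50.135:32181 --topic test001

6. Start the console producer in interactive mode:

./kafka-console-producer.sh --broker-list 192.168.50.135:31090 --topic test001

In interactive mode, type any string and press Enter; the line is sent as one message.

7. Open another window and run this command to consume the messages:

./kafka-console-consumer.sh --bootstrap-server 192.168.50.135:31090 --topic test001 --from-beginning

8. Open yet another window and list the consumer groups:

./kafka-consumer-groups.sh --bootstrap-server 192.168.50.135:31090 --list

The output shows that the group id is console-consumer-21022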

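9. Show the consumption status of the group whose id is console-consumer-21022:

./kafka-consumer-groups.sh --group console-consumer-21022 --describe --bootstrap-server 192.168.50.135:31090

The result is shown below:

This completes the basic remote checks against kafka: listing topics and sending and receiving messages all work, which confirms that the deployment succeeded;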

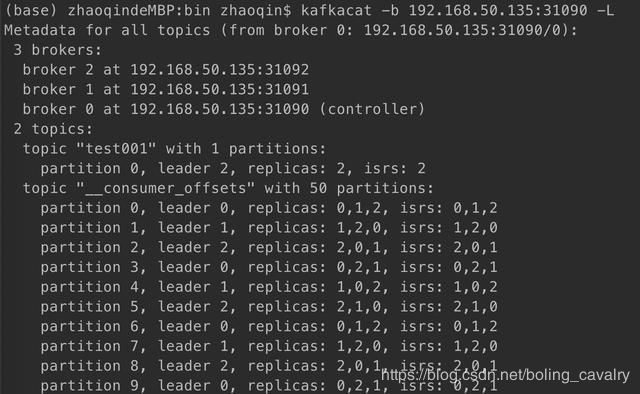
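The interactive producer and consumer steps above can also be combined into a non-interactive check. The sketch below is an illustration rather than part of the original procedure: the function name kafka_smoke_test and the KAFKA_BIN variable are hypothetical, and it assumes the commands are run from (or pointed at) the kafka_2.11-2.0.1/bin directory. It sends one uniquely named message and then reads the topic from the beginning, looking for that message. If the kafka scripts are not found, it only prints a notice.

```shell
BROKER="${BROKER:-192.168.50.135:31090}"
TOPIC="${TOPIC:-test001}"
KAFKA_BIN="${KAFKA_BIN:-.}"   # assumed: directory containing the kafka-*.sh scripts

# Send one message, then scan the topic for it; returns 0 only if it is found.
kafka_smoke_test() {
  msg="smoke-$(date +%s)"
  printf '%s\n' "$msg" |
    "$KAFKA_BIN/kafka-console-producer.sh" --broker-list "$BROKER" --topic "$TOPIC" || return 1
  # --timeout-ms makes the consumer exit once no message arrives for 10 seconds.
  "$KAFKA_BIN/kafka-console-consumer.sh" --bootstrap-server "$BROKER" --topic "$TOPIC" \
    --from-beginning --timeout-ms 10000 2>/dev/null | grep -q "$msg"
}

if [ -x "$KAFKA_BIN/kafka-console-producer.sh" ]; then
  if kafka_smoke_test; then
    echo "smoke test passed"
  else
    echo "smoke test failed"
  fi
else
  echo "kafka scripts not found in $KAFKA_BIN; skipping smoke test"
fi
```

A check like this is convenient after redeploying, since it needs no second terminal window.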

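Connecting with kafkacat

- kafkacat is a client tool; here it was installed with brew on a MacBook Pro;

- The K8S server IP here is 192.168.50.135, so the following command shows the kafka metadata: kafkacat -b 192.168.50.135:31090 -L. The output lists the brokers and the topics (one is test001, the other holds the consumer offsets). Changing the port to 31091 or 31092 connects to the other two brokers and returns the same information: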
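Querying all three ports one after another can be scripted. The sketch below uses a hypothetical run helper that only prints the commands by default (DRY_RUN=1), so it is safe to try on a machine without kafkacat; set DRY_RUN=0 to actually execute them. The host and ports are the ones used in this article.

```shell
HOST="${HOST:-192.168.50.135}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Ask each external broker port for the cluster metadata.
list_metadata() {
  for port in 31090 31091 31092; do
    run kafkacat -b "${HOST}:${port}" -L
  done
}

list_metadata
```

If every port returns the same broker and topic list, all three external listeners are advertised correctly.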

Cleaning up

This walkthrough created many resources: rbac, role, serviceaccount, pod, deployment and service. The commands below remove them (only the files on NFS are left behind):

helm del --purge kafka

kubectl delete service zookeeper-nodeport -n kafka-test

kubectl delete storageclass managed-nfs-storage

kubectl delete deployment nfs-client-provisioner -n kafka-test

kubectl delete clusterrolebinding run-nfs-client-provisioner

kubectl delete serviceaccount nfs-client-provisioner -n kafka-test

kubectl delete role leader-locking-nfs-client-provisioner -n kafka-test

kubectl delete rolebinding leader-locking-nfs-client-provisioner -n kafka-test

kubectl delete clusterrole nfs-client-provisioner-runner

kubectl delete namespace kafka-test

That completes this walkthrough of deploying and verifying kafka on K8S; hopefully it serves as a useful reference;

You are welcome to follow my WeChat official account: 程序员欣宸