97. Kubernetes persistent storage

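Table of contents

- 8. Persistent storage
- 8.1 emptyDir volumes
- 8.2 hostPath volumes
- 8.3 NFS shared storage
- 8.4 PV and PVC (PersistentVolume and PersistentVolumeClaim)
- 8.4.1 Install the NFS server (10.0.0.11)
- 8.4.2 Install the NFS client on the nodes
- 8.4.3 Create the PV and PVC
- 8.4.4 Create mysql-rc and use the volume in the pod template
- 8.4.5 Verify persistence
- 8.5 Distributed storage: GlusterFS
- 8.5.1 GlusterFS overview
- 8.5.2 Install GlusterFS
- 8.5.3 Add nodes to the storage pool
- 8.5.4 GlusterFS volume management
- 8.5.5 Expanding a distributed-replicated volume
- 8.6 Connecting Kubernetes to GlusterFS storage
- 8.6.1 Create the Endpoints
- 8.6.2 Create the Service
- 8.7 Mapping external services into Kubernetes
- 8.8 Create PVs (PersistentVolumes)
- 8.9 Create a PVC (namespaced resource)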

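8. Persistent storage

Problem: without persistent storage, user data is lost as soon as the container is removed and the pod is started again. This seriously hurts both the business and the customer experience. The following approaches address the problem: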

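8.1 emptyDir volumes:

1. Preparation: log in to the web site and create a few records, then query them from the database inside the container.

2. Configure persistent storage

1. Edit the YAML file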

    [root@k8s-master tomcat_demo]# cat mysql-rc.yml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: tomcat
      name: mysql
    spec:
      replicas: 1
      selector:
        app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          volumes:                        # volumes available to the pod
          - name: mysql                   # must match the name under volumeMounts
            emptyDir: {}                  # volume type: emptyDir
          containers:
          - name: mysql
            image: 10.0.0.11:5000/mysql:5.7
            volumeMounts:                 # where volumes are mounted in the container
            - name: mysql                 # refers to the volume defined above
              mountPath: /var/lib/mysql   # MySQL data directory to keep
            ports:
            - containerPort: 3306
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: '123456'

2. Create the pod

[root@k8s-master tomcat_demo]# kubectl delete -f .

[root@k8s-master tomcat_demo]# kubectl create -f .

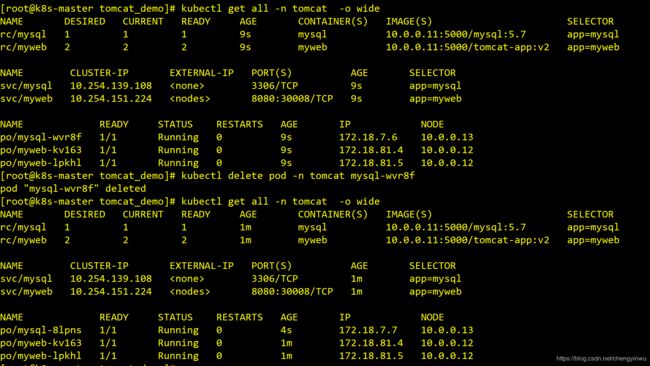

    [root@k8s-master tomcat_demo]# kubectl get all -n tomcat -o wide
    NAME       DESIRED   CURRENT   READY   AGE   CONTAINER(S)   IMAGE(S)                       SELECTOR
    rc/mysql   1         1         1       10s   mysql          10.0.0.11:5000/mysql:5.7       app=mysql
    rc/myweb   2         2         2       10s   myweb          10.0.0.11:5000/tomcat-app:v2   app=myweb

    NAME        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE   SELECTOR
    svc/mysql   10.254.70.69   <none>        3306/TCP         10s   app=mysql
    svc/myweb   10.254.41.20   <nodes>       8080:30008/TCP   10s   app=myweb

    NAME             READY   STATUS    RESTARTS   AGE   IP            NODE
    po/mysql-sxfqp   1/1     Running   0          10s   172.18.81.4   10.0.0.12
    po/myweb-0c0hg   1/1     Running   0          10s   172.18.81.2   10.0.0.12
    po/myweb-zzfd8   1/1     Running   0          10s   172.18.7.6    10.0.0.13

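3. Log in to the site again and add data, then remove the container on node 1, then log in to the web UI again and check. The data survives a container restart, because the emptyDir volume belongs to the pod, not to the container.

Where the data is stored on the node: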

[root@k8s-node-1 ~]# cd /var/lib/kubelet/pods/a5301810-1c0b-11ea-8a15-000c29d8ae3b/volumes/kubernetes.io~empty-dir/mysql
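The path above follows the kubelet's on-disk layout, `<kubelet root>/pods/<pod UID>/volumes/kubernetes.io~empty-dir/<volume name>`. The following is a minimal POSIX sh sketch for building it. It assumes the default kubelet root `/var/lib/kubelet` and a `kubectl` that supports `-o jsonpath`. `emptydir_path` and `pod_uid` are hypothetical helper names, not part of any tool.

```sh
#!/bin/sh
# Hypothetical helpers: locate an emptyDir volume's directory on the node.
# Assumes the default kubelet root directory /var/lib/kubelet.
KUBELET_ROOT=${KUBELET_ROOT:-/var/lib/kubelet}

# emptydir_path UID VOLUME -> prints the host directory of the emptyDir volume
emptydir_path() {
    printf '%s/pods/%s/volumes/kubernetes.io~empty-dir/%s\n' \
        "$KUBELET_ROOT" "$1" "$2"
}

# pod_uid NAMESPACE POD -> prints the pod's UID as reported by the API server
pod_uid() {
    kubectl get pod "$2" -n "$1" -o jsonpath='{.metadata.uid}'
}
```

For example, run `emptydir_path "$(pod_uid tomcat mysql-sxfqp)" mysql` on the master. It prints the directory to inspect on the node that runs the pod, which is shown in the NODE column of `kubectl get pod -o wide`.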

4. Test deleting the pod:

[root@k8s-master tomcat_demo]# kubectl delete pod -n tomcat mysql-sxfqp

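Problem: an emptyDir volume lives only as long as its pod. When the pod fails or is deleted and a new pod is started, the data is lost along with it.

The next approach addresses this: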

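8.2 hostPath volumes:

Persist the data to a directory on the host (the node).

Edit mysql-rc.yml. hostPath data exists only on the node where it was written, so nodeName pins the pod to 10.0.0.13. A replacement pod then finds the same directory: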

    [root@k8s-master tomcat_demo]# cat mysql-rc.yml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: tomcat
      name: mysql
    spec:
      replicas: 1
      selector:
        app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          nodeName: 10.0.0.13
          volumes:
          - name: mysql
            hostPath:                     # volume type: a directory on the node
              path: /data/mysql           # host directory that holds the data
          containers:
          - name: mysql
            image: 10.0.0.11:5000/mysql:5.7
            volumeMounts:
            - name: mysql
              mountPath: /var/lib/mysql
            ports:
            - containerPort: 3306
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: '123456'

[root@k8s-master tomcat_demo]# kubectl create -f .

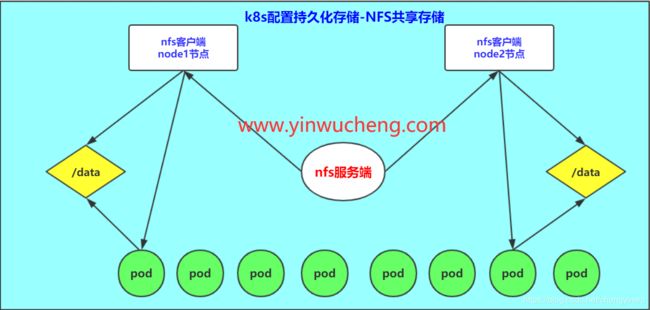

8.3 NFS shared storage

0. Edit the volume definition in the file:

    volumes:
    - name: mysql
      nfs:
        path: /data/wp_mysql
        server: 10.0.0.11

1. Install nfs-utils on all nodes:

yum install nfs-utils -y

mkdir /data

2. Configure the shared directory on the NFS server:

[root@k8s-master ~]# cat /etc/exports

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)

systemctl start rpcbind nfs-server

3. Persist the WordPress project's data:

1. Configure mysql-rc

    [root@k8s-master wordpress]# cat mysql-rc.yml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: wordpress
      name: mysql-wp
    spec:
      replicas: 1
      selector:
        app: mysql-wp
      template:
        metadata:
          labels:
            app: mysql-wp
        spec:
          volumes:
          - name: mysql-wp
            nfs:
              path: /data/blogsql-db
              server: 10.0.0.11
          containers:
          - name: mysql
            image: 10.0.0.11:5000/mysql:5.7
            volumeMounts:
            - name: mysql-wp
              mountPath: /var/lib/mysql
            ports:
            - containerPort: 3306
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: 'somewordpress'
            - name: MYSQL_DATABASE
              value: 'wordpress'
            - name: MYSQL_USER
              value: 'wordpress'
            - name: MYSQL_PASSWORD
              value: 'wordpress'

2. Configure wordpress-rc

    [root@k8s-master wordpress]# cat wordpress-rc.yml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: wordpress
      name: wordpress
    spec:
      replicas: 2
      selector:
        app: wordpress
      template:
        metadata:
          labels:
            app: wordpress
        spec:
          volumes:
          - name: wordpress
            nfs:
              path: /data/wordpress-db
              server: 10.0.0.11           # NFS server address (runs on the master host)
          containers:
          - name: wordpress
            image: 10.0.0.11:5000/wordpress:latest
            volumeMounts:
            - name: wordpress
              mountPath: /var/www/html
            ports:
            - containerPort: 80
            env:
            - name: WORDPRESS_DB_HOST
              value: 'mysql-wp'
            - name: WORDPRESS_DB_USER
              value: 'wordpress'
            - name: WORDPRESS_DB_PASSWORD
              value: 'wordpress'

3. Create the corresponding directories on the NFS server:

mkdir -p /data/blogsql-db

mkdir -p /data/wordpress-db

4. Test whether the data persists:

kubectl delete pod --all -n wordpress

Log in to the web UI and check whether the data still exists. On a node, the NFS mounts used by the pods are visible:

    [root@k8s-node-1 ~]# df -Th | grep -i nfs
    10.0.0.11:/data/blogsql-db    nfs4  48G  3.9G  45G  8%  /var/lib/kubelet/pods/a9768011-1c80-11ea-a2dc-000c29d8ae3b/volumes/kubernetes.io~nfs/mysql-wp
    10.0.0.11:/data/wordpress-db  nfs4  48G  3.9G  45G  8%  /var/lib/kubelet/pods/a98fa0ab-1c80-11ea-a2dc-000c29d8ae3b/volumes/kubernetes.io~nfs/wordpress

Result: the data is persisted.

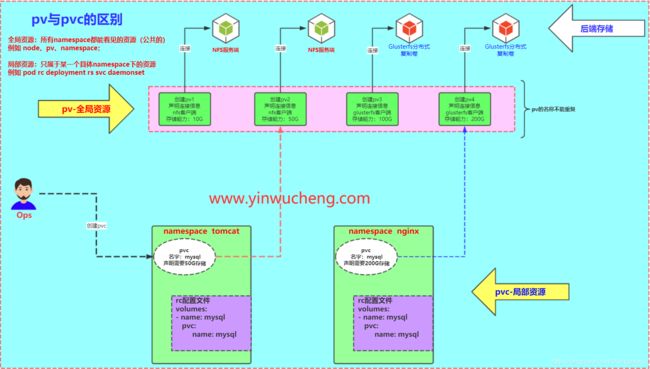

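8.4 PV and PVC (PersistentVolume and PersistentVolumeClaim)

PV: PersistentVolume, a cluster-wide resource that is not tied to any namespace

PVC: PersistentVolumeClaim, a namespaced resource that belongs to a single namespace

8.4.1 Install the NFS server (10.0.0.11)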

yum install nfs-utils.x86_64 -y

mkdir /data

vim /etc/exports

/data 10.0.0.0/24(rw,async,no_root_squash,no_all_squash)

systemctl start rpcbind

systemctl start nfs

8.4.2 Install the NFS client on the nodes

yum install nfs-utils.x86_64 -y

showmount -e 10.0.0.11
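If a pod's NFS volume cannot be mounted, the pod typically stays in ContainerCreating with a mount error in its events. It therefore pays to check the export from every node first. The sketch below is built on the same `showmount -e` output. `nfs_exported` is a hypothetical helper. It also treats a subdirectory of an exported directory (such as /data/blogsql-db under /data) as covered, which is how the examples in this article mount subdirectories of the /data export.

```sh
#!/bin/sh
# Hypothetical helper: succeed if DIR is exported by SERVER, either directly
# or as a subdirectory of an exported directory.
# `showmount -e SERVER` prints a header line, then "<dir> <allowed clients>".
nfs_exported() {
    server=$1
    dir=$2
    showmount -e "$server" 2>/dev/null |
        awk -v d="$dir" '
            NR > 1 && ($1 == d || index(d, $1 "/") == 1) { found = 1 }
            END { exit !found }'
}
```

Usage on each node: `nfs_exported 10.0.0.11 /data/pv1 || echo "not exported"`.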

8.4.3 Create the PV and PVC

Upload the YAML manifests and create the PV and PVC.

8.4.4 Create mysql-rc and use the volume in the pod template

    volumes:
    - name: mysql
      persistentVolumeClaim:
        claimName: tomcat-mysql

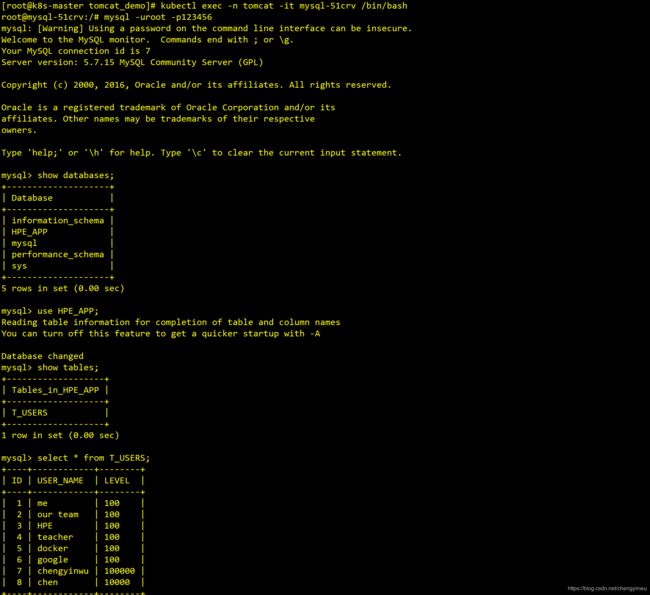

8.4.5 Verify persistence

Verification 1: delete the MySQL pod; the database contents are not lost.

kubectl delete pod mysql-gt054

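Verification 2: on the NFS server, check that the MySQL data files appear in the exported directory.

8.5 Distributed storage: GlusterFS

8.5.1 GlusterFS overview

GlusterFS is an open-source distributed file system with strong scale-out capability. It can support petabytes of storage and thousands of clients, and it joins servers over the network into a single parallel network file system. It is designed for scalability, high performance, and high availability.

8.5.2 Install GlusterFS

Install GlusterFS on all nodes: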

yum install centos-release-gluster -y

yum install glusterfs-server -y

systemctl start glusterd.service

systemctl enable glusterd.service

Add storage units (bricks) to the Gluster cluster. First create the brick directories:

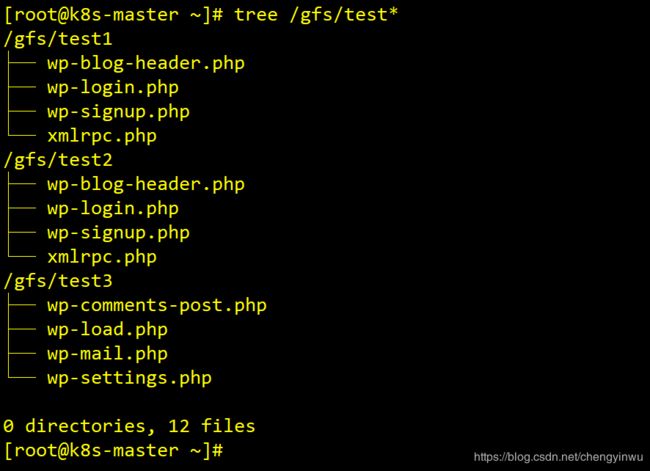

mkdir -p /gfs/test1

mkdir -p /gfs/test2

mkdir -p /gfs/test3

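Add three 10 GB disks to each node (do this on all 3 nodes). Then rescan the SCSI bus so the kernel detects the disks, and format and mount them on the brick directories: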

echo '- - -' >/sys/class/scsi_host/host0/scan

echo '- - -' >/sys/class/scsi_host/host1/scan

echo '- - -' >/sys/class/scsi_host/host2/scan

mkfs.xfs /dev/sdb

mkfs.xfs /dev/sdc

mkfs.xfs /dev/sdd

mount /dev/sdb /gfs/test1

mount /dev/sdc /gfs/test2

mount /dev/sdd /gfs/test3
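Each `echo '- - -'` line above asks one SCSI host (host0 to host2) to rescan all channels, targets, and LUNs, so the new disks appear without a reboot. The number of hosts varies between machines, so looping over every `host*` entry is a slightly more general sketch. The optional base-directory argument is an assumption added only so the hypothetical function can be tried without root.

```sh
#!/bin/sh
# Hypothetical helper: ask every SCSI host adapter to rescan for new disks.
# "- - -" means: all channels, all targets, all LUNs.
# $1 optionally overrides /sys/class/scsi_host (for testing only).
rescan_scsi_hosts() {
    base=${1:-/sys/class/scsi_host}
    count=0
    for scan in "$base"/host*/scan; do
        [ -e "$scan" ] || continue     # glob matched nothing
        echo '- - -' > "$scan"
        count=$((count + 1))
    done
    echo "rescanned $count host(s)"
}
```

Run it as root, then check with `lsblk` that sdb, sdc, and sdd are present before running `mkfs.xfs`.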

8.5.3 Add nodes to the storage pool

On the master node:

    gluster pool list
    [root@k8s-master ~]# gluster peer probe k8s-node-1
    [root@k8s-master ~]# gluster peer probe k8s-node-2
    [root@k8s-master ~]# gluster pool list
    UUID                                  Hostname    State
    faf41469-794a-4460-be0e-65d9fb773be3  k8s-node-1  Connected
    a1707123-944a-4395-89ac-0870b640751a  k8s-node-2  Connected
    08f4b5cc-6a5d-4213-b107-f2c4dd4a59d2  localhost   Connected

8.5.4 GlusterFS volume management

1. A distributed volume can be created from any node:

[root@k8s-master ~]# gluster volume create cheng k8s-master:/gfs/test1 k8s-node-1:/gfs/test1 k8s-node-2:/gfs/test1 force

volume create: cheng: success: please start the volume to access data

2. View the volume information, then start the volume. The output below was taken right after creation, so the status is still Created:

    [root@k8s-master ~]# gluster volume info cheng
    Volume Name: cheng
    Type: Distribute
    Volume ID: 5c17c614-c90d-4c70-b14f-62a8c5903162
    Status: Created
    Snapshot Count: 0
    Number of Bricks: 3
    Transport-type: tcp
    Bricks:
    Brick1: k8s-master:/gfs/test1
    Brick2: k8s-node-1:/gfs/test1
    Brick3: k8s-node-2:/gfs/test1
    Options Reconfigured:
    transport.address-family: inet
    storage.fips-mode-rchecksum: on
    nfs.disable: on

gluster volume start cheng

gluster volume info cheng

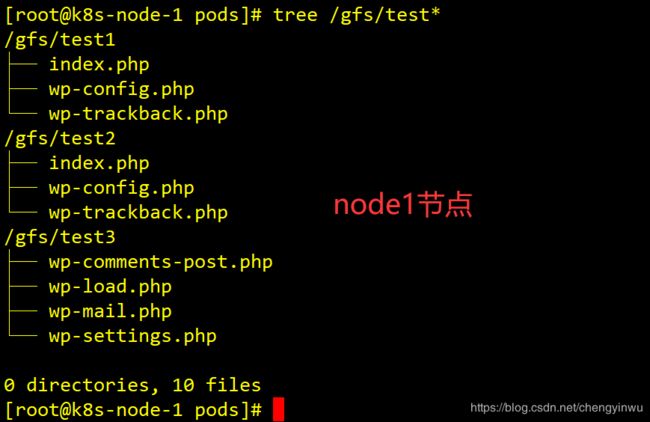

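3. Mount and test:

mount -t glusterfs 127.0.0.1:/cheng /mnt/

df -h    # check the mount

cp /data/wordpress-db/*.php /mnt/

Problem:

Each file is stored on exactly one brick and there is no redundancy, so if a disk fails, the files stored on it are lost.

4. Add bricks to turn the volume into a distributed-replicated volume:

[root@k8s-master ~]# gluster volume add-brick cheng replica 2 k8s-master:/gfs/test2 k8s-node-1:/gfs/test2 k8s-node-2:/gfs/test2 force

- replica: the number of copies kept of each file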

    [root@k8s-master ~]# gluster volume info cheng
    Volume Name: cheng
    Type: Distributed-Replicate
    Volume ID: 5c17c614-c90d-4c70-b14f-62a8c5903162
    Status: Started
    Snapshot Count: 0
    Number of Bricks: 3 x 2 = 6
    Transport-type: tcp
    Bricks:
    Brick1: k8s-master:/gfs/test1
    Brick2: k8s-master:/gfs/test2
    Brick3: k8s-node-1:/gfs/test1
    Brick4: k8s-node-1:/gfs/test2
    Brick5: k8s-node-2:/gfs/test1
    Brick6: k8s-node-2:/gfs/test2
    Options Reconfigured:
    performance.client-io-threads: off
    transport.address-family: inet
    storage.fips-mode-rchecksum: on
    nfs.disable: on

===============================================================

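Alternatively, create a distributed-replicated volume directly. The bricks must not already belong to another volume:

    # create a distributed-replicated volume
    gluster volume create qiangge replica 2 k8s-master:/gfs/test1 k8s-node-1:/gfs/test1 k8s-master:/gfs/test2 k8s-node-1:/gfs/test2 force
    # start the volume
    gluster volume start qiangge
    # view the volume
    gluster volume info qiangge
    # mount the volume
    mount -t glusterfs 10.0.0.11:/qiangge /mnt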
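GlusterFS builds replica sets from consecutive bricks, in the order they are given on the command line and listed by `gluster volume info`. With `replica 2`, bricks 1+2 form one set, bricks 3+4 the next, and so on. That is why the qiangge command interleaves hosts (k8s-master, k8s-node-1, k8s-master, k8s-node-1): each pair spans two servers.

In the converted cheng volume above, Brick1 and Brick2 are both on k8s-master. That layout survives a failed disk but not a failed node. The hypothetical helper below prints the replica sets for a brick list and flags any set whose copies all sit on one host.

```sh
#!/bin/sh
# Hypothetical helper: show how `replica N` groups bricks into replica sets
# (every N consecutive bricks form one set) and flag sets that keep all of
# their copies on a single host.
# Usage: replica_sets N host:/path host:/path ...
replica_sets() {
    n=$1
    shift
    set_no=0
    while [ "$#" -gt 0 ]; do
        set_no=$((set_no + 1))
        members=""
        hosts=" "
        distinct=0
        i=0
        while [ "$i" -lt "$n" ] && [ "$#" -gt 0 ]; do
            members="$members $1"
            host=${1%%:*}                  # text before the first ':'
            case "$hosts" in
                *" $host "*) ;;            # host already in this set
                *) hosts="$hosts$host "; distinct=$((distinct + 1)) ;;
            esac
            shift
            i=$((i + 1))
        done
        if [ "$n" -gt 1 ] && [ "$distinct" -lt 2 ]; then
            echo "set $set_no:$members <- all copies on one host"
        else
            echo "set $set_no:$members"
        fi
    done
}
```

Feeding it the brick order from `gluster volume info cheng` (k8s-master:/gfs/test1 k8s-master:/gfs/test2 ...) flags every set. The qiangge order produces no warnings.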

8.5.5 Expanding a distributed-replicated volume

1. Check the capacity before expansion:

    [root@k8s-master ~]# df -h
    /dev/sdb          10G   33M   10G   1% /gfs/test1
    /dev/sdc          10G   33M   10G   1% /gfs/test2
    /dev/sdd          10G   33M   10G   1% /gfs/test3
    127.0.0.1:/cheng  30G  404M   30G   2% /mnt

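2. Expansion command. On a replica 2 volume, bricks must be added in multiples of 2:

[root@k8s-master ~]# gluster volume add-brick cheng k8s-master:/gfs/test3 k8s-node-1:/gfs/test3 force

3. Check the capacity after expansion: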

    [root@k8s-master ~]# df -h
    /dev/sdb          10G   33M   10G   1% /gfs/test1
    /dev/sdc          10G   33M   10G   1% /gfs/test2
    /dev/sdd          10G   33M   10G   1% /gfs/test3
    127.0.0.1:/cheng  40G  539M   40G   2% /mnt
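The `df` figures follow from the brick layout. Each replica set stores one copy's worth of data, so usable capacity is roughly (number of bricks ÷ replica count) × brick size. With 10 GB bricks, 3 × 2 = 6 bricks give 30 GB and 4 × 2 = 8 bricks give 40 GB, which matches the outputs above. The sketch below does that arithmetic. It assumes all bricks are the same size and ignores filesystem overhead, and `gluster_usable_gb` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: approximate usable size of a distributed-replicated
# GlusterFS volume, assuming every brick has the same size.
# Usage: gluster_usable_gb BRICKS REPLICA BRICK_GB
gluster_usable_gb() {
    bricks=$1
    replica=$2
    brick_gb=$3
    # GlusterFS requires the brick count to be a multiple of the replica count
    if [ $((bricks % replica)) -ne 0 ]; then
        echo "brick count must be a multiple of the replica count" >&2
        return 1
    fi
    echo $((bricks / replica * brick_gb))
}
```

For example, adding one more pair of 10 GB bricks (10 bricks, replica 2) would give `gluster_usable_gb 10 2 10`, which prints 50.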

    [root@k8s-master ~]# gluster volume info cheng
    Volume Name: cheng
    Type: Distributed-Replicate
    Volume ID: 5c17c614-c90d-4c70-b14f-62a8c5903162
    Status: Started
    Snapshot Count: 0
    Number of Bricks: 4 x 2 = 8
    Transport-type: tcp
    Bricks:
    Brick1: k8s-master:/gfs/test1
    Brick2: k8s-master:/gfs/test2
    Brick3: k8s-node-1:/gfs/test1
    Brick4: k8s-node-1:/gfs/test2
    Brick5: k8s-node-2:/gfs/test1
    Brick6: k8s-node-2:/gfs/test2
    Brick7: k8s-master:/gfs/test3
    Brick8: k8s-node-1:/gfs/test3
    Options Reconfigured:
    performance.client-io-threads: off
    transport.address-family: inet
    storage.fips-mode-rchecksum: on
    nfs.disable: on

4. Rebalance the data across all bricks. Do this off-peak (for example at night), because rebalancing generates heavy disk I/O:

[root@k8s-master ~]# gluster volume rebalance cheng start

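Once the rebalance finishes, the data is spread evenly across the old and new bricks. Clients do not need to remount the volume.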
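Rebalancing runs in the background, and `gluster volume rebalance cheng status` reports its progress per node. Below is a minimal polling loop. It assumes the status output contains the words "in progress" while any node is still working; check this against your GlusterFS version. `wait_rebalance` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: wait until `gluster volume rebalance VOL status`
# no longer reports any node as "in progress", then print the final status.
# Usage: wait_rebalance VOLUME [INTERVAL_SECONDS]
wait_rebalance() {
    vol=$1
    interval=${2:-30}
    while gluster volume rebalance "$vol" status | grep -q 'in progress'; do
        sleep "$interval"
    done
    gluster volume rebalance "$vol" status
}
```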

8.6 Connecting Kubernetes to GlusterFS storage

8.6.1 Create the Endpoints

vi glusterfs-ep.yaml

    apiVersion: v1
    kind: Endpoints
    metadata:
      name: glusterfs
      namespace: tomcat
    subsets:
    - addresses:
      - ip: 10.0.0.11
      - ip: 10.0.0.12
      - ip: 10.0.0.13
      ports:
      - port: 49152
        protocol: TCP

8.6.2 Create the Service

vi glusterfs-svc.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: glusterfs
      namespace: tomcat
    spec:
      ports:
      - port: 49152
        protocol: TCP
        targetPort: 49152
      sessionAffinity: None
      type: ClusterIP

    [root@k8s-master glusterfs]# kubectl describe svc glusterfs -n tomcat
    Name:              glusterfs
    Namespace:         tomcat
    Labels:            <none>
    Selector:          <none>
    Type:              ClusterIP
    IP:                10.254.150.156
    Port:              <unset> 49152/TCP
    Endpoints:         10.0.0.11:49152,10.0.0.12:49152,10.0.0.13:49152
    Session Affinity:  None
    No events.
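The Endpoints line shows that the selector-less Service picked up the addresses from the manually created Endpoints object of the same name. In the upstream Kubernetes GlusterFS example, this Service exists so that the Endpoints object persists. The hypothetical check below is for scripts. It assumes `kubectl` supports `-o jsonpath` and lists the IPs stored in the Endpoints object.

```sh
#!/bin/sh
# Hypothetical helper: print the IPs of an Endpoints object; fail if empty.
# Usage: endpoint_ips NAMESPACE NAME
endpoint_ips() {
    ips=$(kubectl get endpoints "$2" -n "$1" \
        -o jsonpath='{.subsets[*].addresses[*].ip}')
    if [ -z "$ips" ]; then
        echo "no endpoints for $1/$2" >&2
        return 1
    fi
    echo "$ips"
}
```

For example, `endpoint_ips tomcat glusterfs` should print the three GlusterFS server addresses before any pod references the volume.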

===================================================================================

Connecting the tomcat project's MySQL to GlusterFS

===================================================================================

    [root@k8s-master tomcat_demo]# cat mysql-rc.yml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: tomcat
      name: mysql
    spec:
      replicas: 1
      selector:
        app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          nodeName: 10.0.0.13
          volumes:
          - name: mysql
            glusterfs:
              path: cheng                 # GlusterFS volume name
              endpoints: glusterfs        # Endpoints object created in 8.6.1
          containers:
          - name: mysql
            image: 10.0.0.11:5000/mysql:5.7
            volumeMounts:
            - name: mysql
              mountPath: /var/lib/mysql
            ports:
            - containerPort: 3306
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: '123456'

    [root@k8s-master tomcat_demo]# kubectl get all -n tomcat -o wide
    NAME       DESIRED   CURRENT   READY   AGE   CONTAINER(S)   IMAGE(S)                       SELECTOR
    rc/mysql   1         1         1       3m    mysql          10.0.0.11:5000/mysql:5.7       app=mysql
    rc/myweb   2         2         2       3m    myweb          10.0.0.11:5000/tomcat-app:v2   app=myweb

    NAME            CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE   SELECTOR
    svc/glusterfs   10.254.150.156   <none>        49152/TCP        7m    <none>
    svc/mysql       10.254.219.210   <none>        3306/TCP         3m    app=mysql
    svc/myweb       10.254.247.11    <nodes>       8080:30008/TCP   3m    app=myweb

    NAME             READY   STATUS    RESTARTS   AGE   IP            NODE
    po/mysql-12xg6   1/1     Running   0          27s   172.18.7.6    10.0.0.13
    po/myweb-ffg16   1/1     Running   0          27s   172.18.81.7   10.0.0.12
    po/myweb-w1gqg   1/1     Running   0          27s   172.18.81.6   10.0.0.12

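All of the MySQL data is now stored on the GlusterFS volume.

8.7 Mapping external services into Kubernetes

WordPress hands-on: move the database out of the cluster into a standalone service on a host, then map it into Kubernetes.

To map an external service into the cluster, create a Service and an Endpoints object with the same name. Pods can then reach the external service through the Service name.

Install the MySQL service (MariaDB) on the host in advance: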

[root@k8s-node-2 ~]# yum install mariadb-server mariadb -y

[root@k8s-node-2 ~]# systemctl start mariadb

[root@k8s-node-2 ~]# mysql_secure_installation

0. Create the wordpress database and grant privileges:

MariaDB [(none)]> create database wordpress;

MariaDB [(none)]> grant all on wordpress.* to wordpress@'%' identified by 'wordpress';

1. Write the mysql-ep manifest

    [root@k8s-master wordpress]# cat mysql-ep.yaml
    apiVersion: v1
    kind: Endpoints
    metadata:
      name: mysql-wp                      # must match the Service name
      namespace: wordpress
    subsets:
    - addresses:
      - ip: 10.0.0.13                     # host running MariaDB
      ports:
      - port: 3306
        protocol: TCP

2. Write the mysql-svc manifest

    [root@k8s-master wordpress]# cat mysql-svc.yml
    apiVersion: v1
    kind: Service
    metadata:
      namespace: wordpress
      name: mysql-wp
    spec:                                 # note: do not declare a selector here
      ports:
      - port: 3306
        targetPort: 3306

3. Write the wordpress-rc manifest

    [root@k8s-master wordpress]# cat wordpress-rc.yml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: wordpress
      name: wordpress
    spec:
      replicas: 2
      selector:
        app: wordpress
      template:
        metadata:
          labels:
            app: wordpress
        spec:
          volumes:
          - name: wordpress
            nfs:
              path: /data/wordpress-db
              server: 10.0.0.11
          containers:
          - name: wordpress
            image: 10.0.0.11:5000/wordpress:latest
            volumeMounts:
            - name: wordpress
              mountPath: /var/www/html
            ports:
            - containerPort: 80
            env:
            - name: WORDPRESS_DB_HOST
              value: 'mysql-wp'
            - name: WORDPRESS_DB_USER
              value: 'wordpress'
            - name: WORDPRESS_DB_PASSWORD
              value: 'wordpress'

4. Write the wordpress-svc manifest

    [root@k8s-master wordpress]# cat wordpress-svc.yml
    apiVersion: v1
    kind: Service
    metadata:
      namespace: wordpress
      name: wordpress
    spec:
      type: NodePort
      ports:
      - port: 80
        nodePort: 30009
      selector:
        app: wordpress

Check that the Service is connected to the backend database:

    [root@k8s-master wordpress]# kubectl describe svc -n wordpress mysql-wp
    Name:              mysql-wp
    Namespace:         wordpress
    Labels:            <none>
    Selector:          <none>
    Type:              ClusterIP
    IP:                10.254.97.196
    Port:              <unset> 3306/TCP
    Endpoints:         10.0.0.13:3306      <- points to the database on 10.0.0.13
    Session Affinity:  None
    No events.

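Note:

When mapping an external service, the Service must not have a label selector. If it has one, Kubernetes manages the Endpoints object itself and replaces the manually created addresses.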
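The Service and the Endpoints must share a name, and the Service must have no selector, so it can be convenient to generate both from one place. The hypothetical function below prints the pair for an external host. Name, namespace, IP, and port are arguments, and the YAML mirrors mysql-ep.yaml and mysql-svc.yml above.

```sh
#!/bin/sh
# Hypothetical generator: a Service without a selector plus an Endpoints
# object of the same name, mapping an external TCP service into the cluster.
# Usage: external_service NAME NAMESPACE IP PORT | kubectl create -f -
external_service() {
    cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
  namespace: $2
spec:
  ports:
  - port: $4
    targetPort: $4
---
apiVersion: v1
kind: Endpoints
metadata:
  name: $1
  namespace: $2
subsets:
- addresses:
  - ip: $3
  ports:
  - port: $4
    protocol: TCP
EOF
}
```

For this section, the call would be `external_service mysql-wp wordpress 10.0.0.13 3306`.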

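8.8 Create PVs (PersistentVolumes)

Cluster-wide (global) resources are visible from every namespace, for example node, pv, and namespace.

Namespaced (local) resources belong to one specific namespace, for example pod, rc, deploy, rs, svc, and daemonset.

A PVC is a request for storage. Kubernetes satisfies it by binding it to a suitable PV, one with enough capacity and matching access modes. A PVC can also narrow the candidate PVs by label with a selector.

Users only need to create a PVC that describes what they need.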
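In section 8.9 below, pvc1 requests 30Gi and ends up bound to pv2. Roughly, the binder considers only Available PVs whose access modes cover the request and whose capacity is at least the requested size, and among those it prefers the smallest. A label selector on the PVC would narrow the candidates further.

The sketch below models that rule in a simplified way: sizes in Gi only, one access mode per PV, and no selector or storage class. It is a hypothetical illustration, not the real controller code.

```sh
#!/bin/sh
# Simplified, hypothetical model of PV selection for a PVC:
# pick the smallest PV whose capacity >= the request and whose access mode
# matches. Each PV is given as NAME:SIZE_GI:MODE.
# Usage: pick_pv REQUEST_GI MODE PV...
pick_pv() {
    request=$1
    mode=$2
    shift 2
    best=""
    best_size=""
    for pv in "$@"; do
        name=${pv%%:*}
        rest=${pv#*:}
        size=${rest%%:*}
        pv_mode=${rest#*:}
        [ "$pv_mode" = "$mode" ] || continue
        [ "$size" -ge "$request" ] || continue
        if [ -z "$best" ] || [ "$size" -lt "$best_size" ]; then
            best=$name
            best_size=$size
        fi
    done
    [ -n "$best" ] || return 1     # no match: the PVC would stay Pending
    echo "$best"
}
```

`pick_pv 30 RWX pv1:10:RWX pv2:30:RWX` prints pv2, matching the binding shown later. A 5Gi request would pick pv1 instead.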

1. Write the mysql_pv manifest

[root@k8s-master ~]# mkdir /data/pv{1,2}

    [root@k8s-master pv_pvc]# cat mysql_pv.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv1
      labels:
        type: nfs                         # PV label
    spec:
      capacity:                           # capacity offered (10Gi); PVC requests are matched against it
        storage: 10Gi
      accessModes:                        # access modes
      - ReadWriteMany                     # can be mounted read-write by many nodes at once
      persistentVolumeReclaimPolicy: Recycle   # reclaim policy: Recycle wipes the data once the PVC is released
      nfs:                                # backend storage
        path: "/data/pv1"
        server: 10.0.0.11
        readOnly: false

Create the PV:

[root@k8s-master pv_pvc]# kubectl create -f mysql_pv.yaml

persistentvolume "pv1" created

List the PVs. A PV is a cluster-wide resource, so no -n is needed:

    [root@k8s-master pv_pvc]# kubectl get pv
    NAME   CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS      CLAIM   REASON   AGE
    pv1    10Gi       RWX           Recycle         Available                    23s

==============================================================

2. Write the pv2 manifest

    [root@k8s-master pv_pvc]# cat mysql_pv2.yaml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv2
      labels:
        type: nfs                         # PV label
    spec:
      capacity:                           # capacity offered (30Gi); PVC requests are matched against it
        storage: 30Gi
      accessModes:
      - ReadWriteMany
      persistentVolumeReclaimPolicy: Recycle   # reclaim policy
      nfs:                                # backend storage
        path: "/data/pv2"
        server: 10.0.0.11
        readOnly: false

Create pv2:

[root@k8s-master pv_pvc]# kubectl create -f mysql_pv2.yaml

persistentvolume "pv2" created

List the PVs:

    [root@k8s-master pv_pvc]# kubectl get pv
    NAME   CAPACITY   ACCESSMODES   RECLAIMPOLICY   STATUS      CLAIM   REASON   AGE
    pv1    10Gi       RWX           Recycle         Available                    2m
    pv2    30Gi       RWX           Recycle         Available                    4s

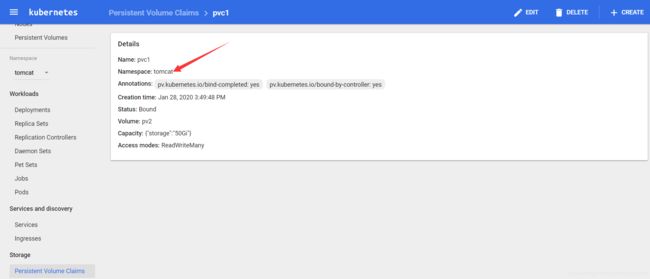
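pv1 and pv2 differ only in name, capacity, and NFS path. The hypothetical generator below prints such an NFS-backed PV from those parameters. The label, access mode, and reclaim policy are copied from the manifests above. The export directory still has to exist on the NFS server, as with the `mkdir /data/pv{1,2}` step.

```sh
#!/bin/sh
# Hypothetical generator for NFS-backed PVs like pv1/pv2 above.
# Usage: nfs_pv NAME SIZE_GI PATH SERVER | kubectl create -f -
nfs_pv() {
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: $1
  labels:
    type: nfs
spec:
  capacity:
    storage: ${2}Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    path: "$3"
    server: $4
    readOnly: false
EOF
}
```

`nfs_pv pv2 30 /data/pv2 10.0.0.11` reproduces the content of mysql_pv2.yaml, without its comments.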

8.9 Create a PVC (namespaced resource)

A PVC must declare the namespace it belongs to.

1. Create mysql_pvc

    [root@k8s-master pv_pvc]# cat mysql_pvc.yaml
    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: pvc1                          # PVC name
      namespace: tomcat                   # persists data for the tomcat project
    spec:
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 30Gi

Create the PVC:

[root@k8s-master pv_pvc]# kubectl create -f mysql_pvc.yaml

persistentvolumeclaim "pvc1" created

List the PVC. It requests 30Gi, and pv2 is the only PV with enough capacity, so pvc1 is bound to pv2:

    [root@k8s-master pv_pvc]# kubectl get pvc -n tomcat
    NAME   STATUS   VOLUME   CAPACITY   ACCESSMODES   AGE
    pvc1   Bound    pv2      30Gi       RWX           19s
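A PVC stays Pending until a matching PV exists, and a pod that uses it cannot start until then. In scripts it can help to wait for Bound before creating the RC. The hypothetical sketch below uses `kubectl get pvc -o jsonpath` with the standard PVC fields `.status.phase` and `.spec.volumeName`.

```sh
#!/bin/sh
# Hypothetical helper: wait until a PVC is Bound, then print its PV name.
# Usage: wait_pvc_bound NAMESPACE PVC [TRIES] [INTERVAL_SECONDS]
wait_pvc_bound() {
    ns=$1
    pvc=$2
    tries=${3:-30}
    interval=${4:-2}
    phase=""
    while [ "$tries" -gt 0 ]; do
        phase=$(kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.status.phase}')
        if [ "$phase" = "Bound" ]; then
            kubectl get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName}'
            echo                       # jsonpath output has no trailing newline
            return 0
        fi
        tries=$((tries - 1))
        sleep "$interval"
    done
    echo "pvc $ns/$pvc not Bound (last phase: $phase)" >&2
    return 1
}
```

For this section, `wait_pvc_bound tomcat pvc1` would print pv2 once the claim is bound.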

2. Use the PVC in the tomcat project's MySQL pod

    [root@k8s-master tomcat_demo]# cat mysql-rc.yml
    apiVersion: v1
    kind: ReplicationController
    metadata:
      namespace: tomcat
      name: mysql
    spec:
      replicas: 1
      selector:
        app: mysql
      template:
        metadata:
          labels:
            app: mysql
        spec:
          nodeName: 10.0.0.13
          volumes:
          - name: mysql
            persistentVolumeClaim:        # volume type: PVC
              claimName: pvc1             # name of the PVC
          containers:
          - name: mysql
            image: 10.0.0.11:5000/mysql:5.7
            volumeMounts:
            - name: mysql
              mountPath: /var/lib/mysql
            ports:
            - containerPort: 3306
            env:
            - name: MYSQL_ROOT_PASSWORD
              value: '123456'

kubectl create -f .

3. Check the data in pv2's directory on the NFS server (10.0.0.11):

    [root@k8s-master tomcat_demo]# ll /data/pv2/
    total 237668
    -rw-r----- 1 polkitd input       56 Dec 12 19:26 auto.cnf
    drwxr-x--- 2 polkitd input       58 Dec 12 19:27 HPE_APP
    -rw-r----- 1 polkitd input     1329 Dec 12 19:26 ib_buffer_pool
    -rw-r----- 1 polkitd input 79691776 Dec 12 19:27 ibdata1
    -rw-r----- 1 polkitd input 50331648 Dec 12 19:27 ib_logfile0
    -rw-r----- 1 polkitd input 50331648 Dec 12 19:26 ib_logfile1
    -rw-r----- 1 polkitd input 12582912 Dec 12 19:26 ibtmp1
    drwxr-x--- 2 polkitd input     4096 Dec 12 19:26 mysql
    drwxr-x--- 2 polkitd input     8192 Dec 12 19:26 performance_schema
    drwxr-x--- 2 polkitd input     8192 Dec 12 19:26 sys

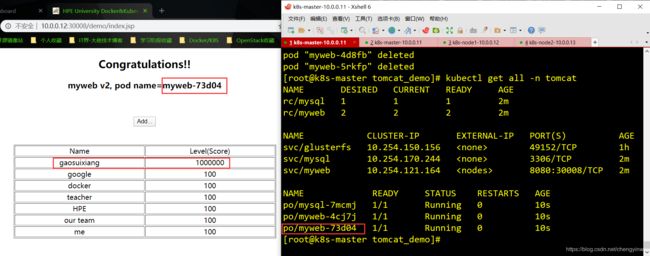

4. Delete all pods as a test. Before deleting, log in to the web UI and write some data; the goal is to check that the data persists on the PV:

    [root@k8s-master tomcat_demo]# kubectl delete pod -n tomcat --all
    pod "mysql-778s7" deleted
    pod "myweb-4d8fb" deleted
    pod "myweb-5rkfp" deleted

5. Verify: