Common image and video concepts: HDR, WDR, etc.

Everything you need but don't want to know about digital imaging

Reposted from http://www.xinstruments.com/knowledge-base/digital-imaging-glossary.html

For whatever reason you've stumbled upon this page, one thing is clear: you are trying to figure out a few "simple" things but they just don't make sense. There is so much information on the subject scattered around so many places that it is hard to combine everything into one piece. Don't worry though, we have a solution. In this section you'll find answers to most of your questions.

Take into account that definitions may change from industry to industry and some terms are very subjective to begin with. So if you need in-depth information, treat these pages as an appetizer and go from there.

Let's start from the start!

What is a bit?

Apart from being the cornerstone of the modern digital world, a bit is the smallest possible amount of information used in computing. It can be either 0 or 1: either ON or OFF.

What is binary system?

The binary counting system is a way to store data and process information. Its base is 2. The decimal counting system we use in the real world has a base of 10. There are ten digits, from 0 to 9, which allow us to count up to nine objects (apples, coins, days left till vacation etc.), and if we want to count more than that we add a digit to the front of the number. So the number 12, for example, tells us that we have counted 1*10+2 objects (where 10 is the base of the system). The same applies to the binary counting system, except that instead of 10 digits there are only 2: 0 and 1.

Number 1 in decimal is also 1 in binary. Number 2 in decimal is 10 in binary (1*2+0). Number 5 in decimal is 101 in binary (1*2*2+0*2+1).

| Decimal | Binary |
|---|---|
| 0 | 0 |
| 1 | 1 |
| 2 | 10 |
| 3 | 11 |
| 4 | 100 |
| 5 | 101 |
| 6 | 110 |
| 7 | 111 |
| ... | ... |
| 254 | 11111110 |
| 255 | 11111111 |

Notice that 8 digits (or 8 bits) of binary data can code a decimal number from 0 to 255. Those 8 bits are usually combined in a logical block called byte. So one byte is eight bits.
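The conversion described above can be sketched in a few lines of Python. This is only an illustration; the function names `to_binary` and `from_binary` are made up for this example and do not come from the original text.

```python
def to_binary(n: int) -> str:
    """Convert a non-negative integer to binary digits by repeated division by 2."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next digit, lowest first
        n //= 2
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Rebuild the value: multiply by the base (2) and add the next digit."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)
    return value


# Reproduce a few rows of the table above.
for n in (0, 1, 2, 5, 254, 255):
    print(n, to_binary(n))
```

Python's built-in `bin()` and `int(text, 2)` do the same job; the loops are written out here only to show the base-2 arithmetic.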

What is byte?

Byte is 8 bits grouped together. Byte can count from 0 to 255. So it can code 256 numbers in total. Notice that 256 is 2^8 (2 power 8).

What is word?

Word (usually WORD) is two bytes grouped together. WORD can count from 0 to 65535. WORD can code 2^16 numbers. (Shouldn't be confused with Microsoft Word® which is a typing tool.)

What is double word?

Double word (usually DWORD) is two WORDs combined together. DWORD can count from 0 to 4,294,967,295. DWORD can code 2^32 numbers.
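As a quick check of the numbers above, the following sketch computes how many values an unsigned block of a given width can code. The helper name `unsigned_range` is hypothetical.

```python
from typing import Tuple


def unsigned_range(bits: int) -> Tuple[int, int]:
    """Return (number of distinct values, largest value) for an unsigned width."""
    count = 2 ** bits
    return count, count - 1


for name, bits in (("byte", 8), ("WORD", 16), ("DWORD", 32)):
    count, largest = unsigned_range(bits)
    print(f"{name}: {bits} bits, {count:,} values, from 0 to {largest:,}")
```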

What is 8 bit device?

Depending on the industry, the term "8 bit device" can be applied to microcontrollers, switches, filters, computers (way back in the day), etc. In the photo/video industry, however, we apply the term to certain imaging sensors, monitors, digital scanners, and printers.

What is CCD?

A Charge-Coupled Device (CCD) is a type of light sensor used to capture images. It contains many individual cells (pixels), arranged as a matrix or as a line (linear CCD), which sense light (photons). A CCD converts photons into electrons and then counts them. Those counted electrons form the image which we read from the CCD. The reality is a little more complex, but the details are irrelevant for most applications.

What is CMOS?

A Complementary Metal Oxide Semiconductor (CMOS) sensor is a type of light sensor used to capture images. Just like a CCD, it converts photons into electrons and counts them, even though the electronics of a CMOS sensor are very different from those of its CCD counterpart. The only practical difference is that it is possible to read just a portion of the image from a CMOS sensor, which increases readout speed and frame rate. With a CCD it is only possible to read the entire image. Thus CMOS sensors can have an advantage in certain applications such as surveillance or object tracking.

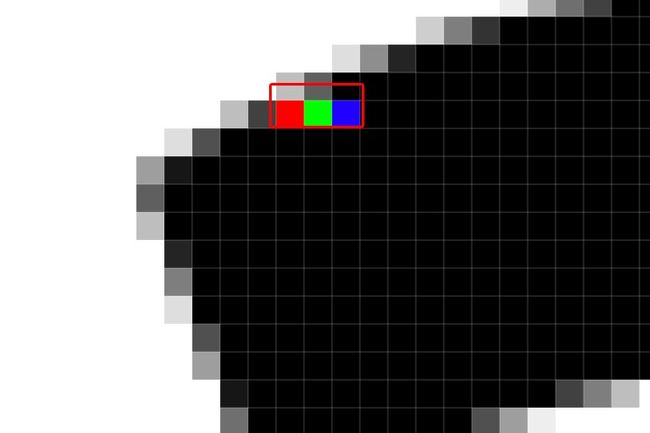

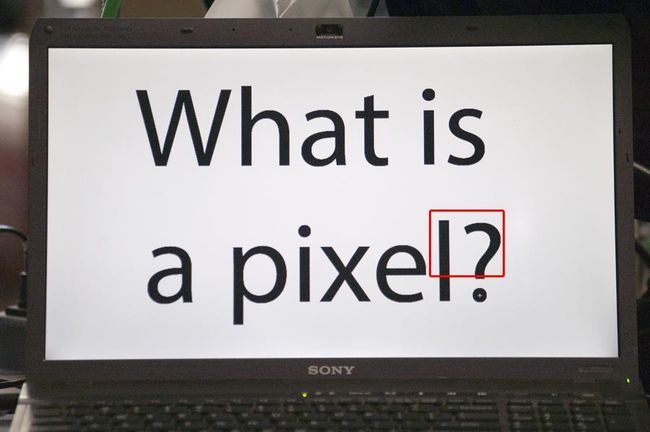

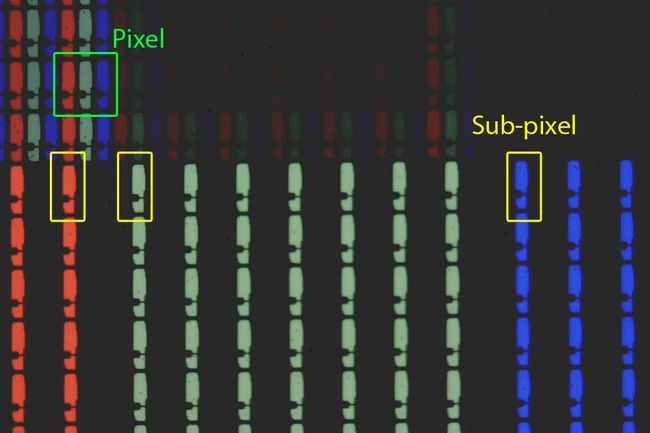

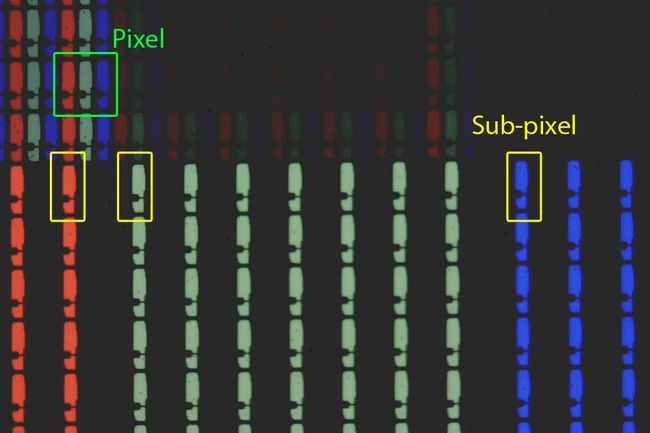

What is a pixel?

A pixel is the smallest light-sensing or light-emitting element, or the smallest element of a digital image. Individual cells of a CCD/CMOS sensor can be referred to as pixels. The same goes for monitors, where the smallest element containing full color information is called a pixel. In digital imaging, a pixel is the smallest possible dot.

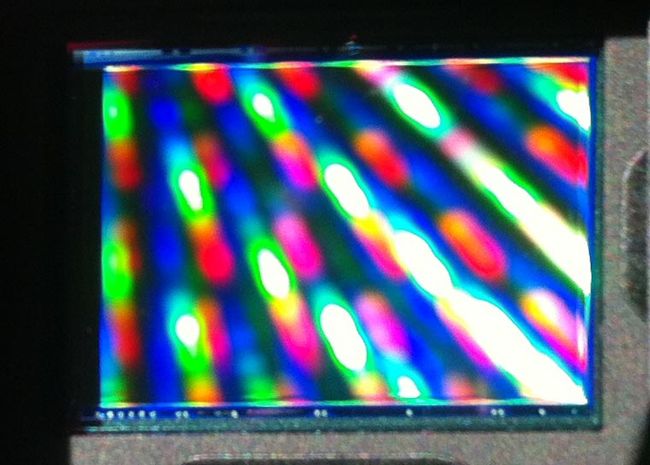

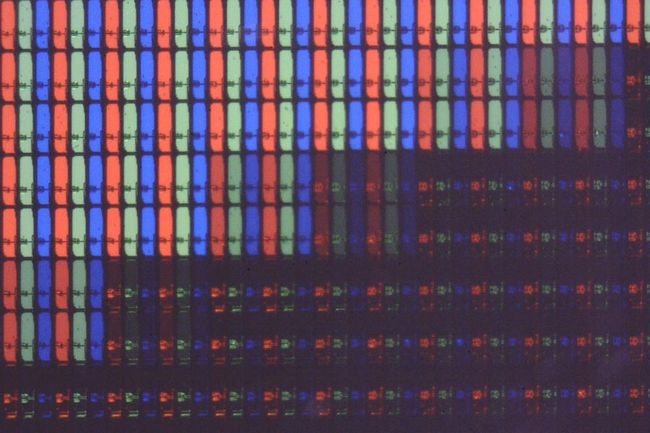

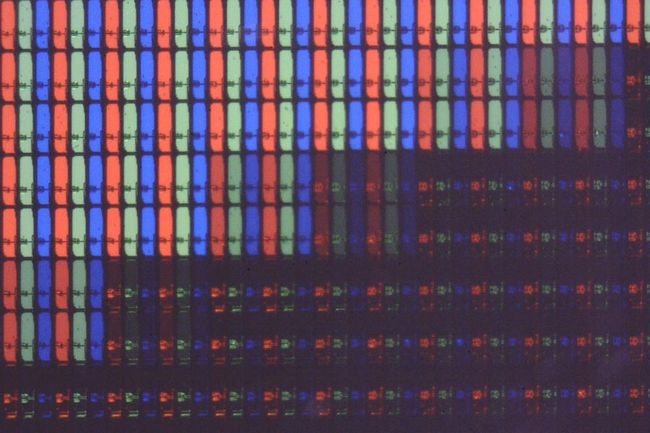

Let's look at two examples. First let's take a picture of a laptop screen using a digital camera and magnify it.

Laptop's screen

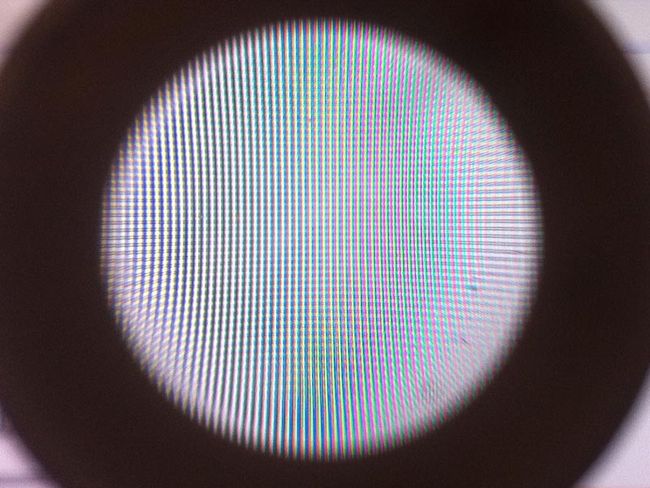

Laptop's screen through the microscope

Microscope light is on

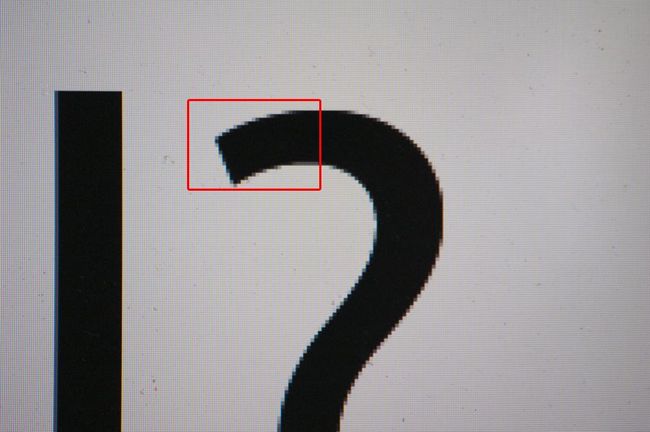

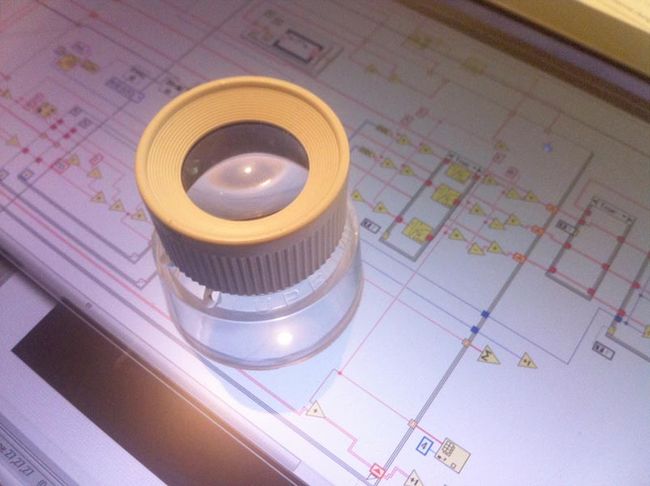

DIY (do it yourself) experiment: If you have a lens you can see individual pixels on the screen with your own eyes. Just place it in front of the monitor and see the pixels in all their beauty.

Magnifying lens

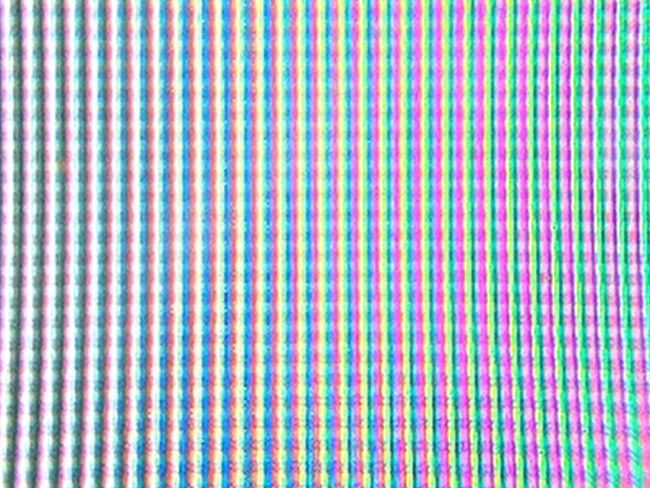

What is sub-pixel?

The term sub-pixel (or sub pixel) can either refer to accuracy measurements in machine vision (as in sub-pixel accuracy) or to the fact that each pixel of the monitor consists of (usually) three smaller elements each of which contains color information about Red, Green or Blue channels.

Pixels and subpixels of the monitor, seen through the microscope

What is a Color Channel?

A color channel (or color plane) is the component of the original color image which contains information about one color only. Let's consider a standard color image. Each pixel of that image contains information about its red, green and blue components. If we discard the Red and Green components in each pixel, we create a new image which contains information about Blue only. This new image is called the Blue color channel. It is actually black and white (grayscale), since it represents one color only.

It is the same as looking at the color image through blue glass (a filter). The red and green components of the image are filtered out and we see only the parts of the image which have blue in them. Some parts of that image will be darker, some brighter. If we take those intensities and discard the blueness, we get a grayscale image which is in fact the blue color channel of the image.
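A minimal sketch of channel extraction, assuming the image is stored as rows of `(R, G, B)` tuples with 8 bit values. The tiny 2x2 image and the helper name `extract_channel` are made up for illustration.

```python
# A tiny 2x2 color image; each pixel is an (R, G, B) tuple of 8 bit values.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (200, 200, 50)],
]

CHANNEL_INDEX = {"red": 0, "green": 1, "blue": 2}


def extract_channel(rgb_image, channel):
    """Keep one component per pixel; the result is a grayscale image."""
    idx = CHANNEL_INDEX[channel]
    return [[pixel[idx] for pixel in row] for row in rgb_image]


blue = extract_channel(image, "blue")
print(blue)
```

Each value in `blue` is a single intensity, which is why the channel is displayed as shades of gray rather than shades of blue.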

Green color channel

Blue color channel

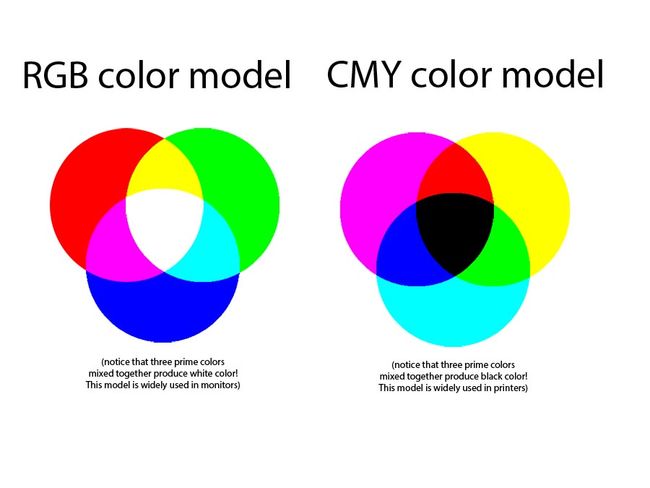

What are the primary colors?

Generally Red, Green and Blue are called the primary (prime) colors of the RGB color model. All other colors are derived from combinations of these three. RGB was selected to mimic the structure of the human eye, which has three kinds of cone cells: some respond mainly to red light, others mainly to green or to blue light. (Humans also have rod cells, which respond to light in general regardless of color and are typically used at night.)

Even though RGB is the most common color coding format, other primary colors are also used. For example, Cyan, Yellow and Magenta (CYM) are used as primary colors in the majority of printers.

What is Alpha Channel?

Alpha channel is a component of the image which contains information about its transparency.

What is a Megapixel?

A megapixel is one million pixels. So a 10 megapixel camera contains ten million pixels, or ten million individual cells which can record light. In reality the number will differ a little from exactly 10,000,000, but that is not important.

What is Color CCD / Color CMOS?

Contrary to popular belief, there is no such thing as a color light sensor. Both CCD and CMOS sensors can only "see" light as shades of gray; they cannot distinguish colors. So all images obtained with these sensors are actually black and white (grayscale). How is color obtained then? It is quite simple. Each individual cell of the CCD or CMOS sensor is covered with a red, green or blue filter, so each cell sees only the part of the light (part of the spectrum) that passes through its filter. To get full color information, we interpolate the two missing primary colors of each pixel from neighboring pixels. This way each pixel has all three color values.

(Note! Foveon X3 ® comes as close as it gets nowadays to a color sensor but it is a very special case which we won't cover here).

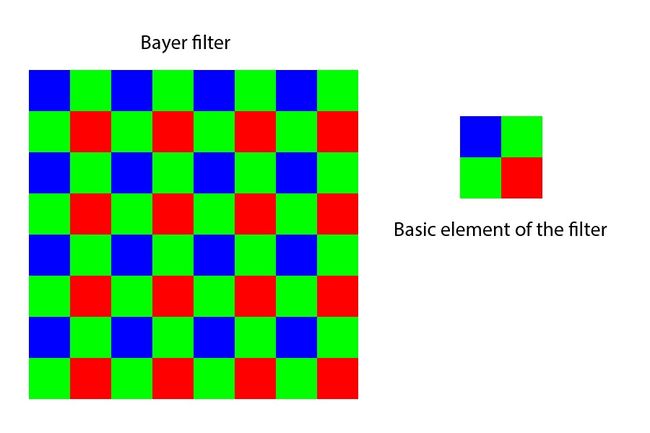

What is Bayer filter?

A Bayer color filter is a particular arrangement of color filters over the surface of a light sensor. The original filter has a base size of 2x2 and contains two green filters, one red and one blue. This tile repeats itself over the entire surface of the sensor. Nowadays there is a wide variety of such filters, with different base sizes, locations and percentages of red, green and blue elements.
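The 2x2 tile and the neighbor interpolation mentioned earlier can be sketched as follows. The RGGB ordering of the tile is an assumption (the text only gives the 2:1:1 proportions), and the helper names are hypothetical. Real cameras use more elaborate interpolation; this only averages the nearest green cells.

```python
# Assumed tile layout (RGGB); other orderings of the same 2x2 tile exist.
BAYER_TILE = (("R", "G"),
              ("G", "B"))
COMPONENT = {"R": 0, "G": 1, "B": 2}


def filter_color(row, col):
    """Color of the filter covering the sensor cell at (row, col)."""
    return BAYER_TILE[row % 2][col % 2]


def mosaic(rgb_image):
    """Simulate the sensor: keep only the component that passes each cell's filter."""
    return [[pixel[COMPONENT[filter_color(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(rgb_image)]


def green_at(raw, row, col):
    """Green value at a cell; interpolated from neighbors at red and blue cells."""
    if filter_color(row, col) == "G":
        return raw[row][col]
    # In this tile the up/down/left/right neighbors of R and B cells are all green.
    neighbors = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    values = [raw[r][c] for r, c in neighbors
              if 0 <= r < len(raw) and 0 <= c < len(raw[0])]
    return sum(values) / len(values)


# A uniform 4x4 test scene with R=10, G=20, B=30 everywhere.
scene = [[(10, 20, 30)] * 4 for _ in range(4)]
raw = mosaic(scene)
print(raw[0], raw[1])
print(green_at(raw, 1, 1))
```

On the uniform test scene the interpolated green at a blue cell equals the true green value, which is the best case; at sharp edges this simple averaging produces color artifacts.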

What is Dynamic Range?

Dynamic range is the ratio of the brightest to the darkest intensity a device can reliably capture or reproduce.

Let's consider a standard computer LCD monitor as an example. Each pixel we see actually comprises three sub-pixels, each covered with a color filter: red, green or blue. There is a liquid crystal behind each sub-pixel and a light source behind all of that. The intensity of the light source is constant, so the perceived "intensity" of each sub-pixel is controlled by the transparency of the liquid crystal behind it. All standard monitors are 8 bit devices. This means that the transparency of each liquid crystal can be set in 256 different ways (the electronics which control it can apply 2^8=256 different voltages to adjust its transparency). In other words, we can display 256 different intensities, or 256 shades of gray. The darkest intensity (liquid crystal 100% opaque) is called black and the brightest (liquid crystal 100% transparent) is called white. Thus each pixel can take 256*256*256 (about 16.7 million) different colors. However, the dynamic range of such a monitor is just 8 (bit), because it can reproduce only 256 (2^8) shades of gray.

What is Contrast Ratio? (confusion about Dynamic Range and Contrast)

The ratio between the brightest and the darkest intensity that a monitor can reliably reproduce is called contrast ratio or simply contrast (in the monitor industry).

Let's consider two different monitors. They have the same electronics and the same backlight source, and both are 8 bit devices. They differ in one thing though: light leakage (or base light). Even when a liquid crystal is 100% opaque, some amount of light still reaches the viewer. So the monitor is never black: there is always some light we see, and that light originates from the monitor itself (as opposed to light reflected or scattered by the monitor). Let's say the first monitor has half the base light of the second one and has a contrast ratio of 1000:1. This means that the brightest intensity it can display is 1000 times greater than the darkest one. The second monitor then has a contrast ratio of 500:1. Strictly speaking, these values (or the logarithm of these values) should be called dynamic range (without going into the details of dynamic contrast in the newest LCD monitors). Historically, however, they came to be called contrast ratio or simply contrast.
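To make "the logarithm of these values" concrete, here is a small sketch that expresses the two contrast ratios from the example on a logarithmic scale. Measuring in stops (base-2 logarithm, one stop per doubling) and in orders of magnitude (base-10 logarithm) is a common convention, but the choice of units here is an assumption, as are the function names.

```python
import math


def ratio_to_stops(ratio):
    """Contrast ratio as a number of doublings (photographic stops)."""
    return math.log2(ratio)


def ratio_to_orders_of_magnitude(ratio):
    """Contrast ratio as a power of ten."""
    return math.log10(ratio)


for ratio in (1000, 500):
    print(f"{ratio}:1 is about {ratio_to_stops(ratio):.2f} stops, "
          f"or {ratio_to_orders_of_magnitude(ratio):.2f} orders of magnitude")
```

Halving the base light doubles the ratio, so the two monitors differ by exactly one stop on this scale.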

Note that on both monitors (being standard, non-HDR monitors) pixel intensities can still be adjusted in only 256 different ways. So their "dynamic range" is 8 (bit).

What is HDR?

High Dynamic Range photography (or video) is a technique which combines images taken with different exposures into one output image showing the entire dynamic range of the scene. This allows the viewer to see both very bright and very dark regions of the scene. A 16 bit HDR image can hold up to 65,536 (2^16) shades of gray.

Underexposed image

Normally exposed image

Overexposed image

Tonemapped HDR image
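One simple way to combine differently exposed shots is sketched below. It is not necessarily the method used to produce the images above. It assumes a linear camera response, known relative exposure times, and 8 bit input values; the thresholds `low` and `high`, the weighting scheme and the function name `merge_exposures` are all choices made for this example.

```python
def merge_exposures(exposures, low=5, high=250):
    """Estimate relative scene brightness per pixel from several exposures.

    exposures: list of (pixels, exposure_time), where pixels is a flat list of
    8 bit values. Values outside [low, high] are treated as too dark or clipped.
    """
    n_pixels = len(exposures[0][0])
    merged = []
    for i in range(n_pixels):
        total, weight_sum = 0.0, 0.0
        for pixels, time in exposures:
            v = pixels[i]
            if low <= v <= high:
                weight = min(v - low, high - v) + 1  # trust mid-tones the most
                total += weight * v / time           # brightness per unit time
                weight_sum += weight
        if weight_sum == 0:
            # No usable shot for this pixel: fall back to a plain average.
            total = sum(p[i] / t for p, t in exposures)
            weight_sum = len(exposures)
        merged.append(total / weight_sum)
    return merged


# Three shots of the same three pixels: short, normal and long exposure.
shots = [
    ([0, 3, 250], 1 / 16),   # underexposed: only the bright pixel is usable
    ([2, 50, 255], 1),       # normal: the mid pixel is usable
    ([32, 255, 255], 16),    # overexposed: only the dark pixel is usable
]
print(merge_exposures(shots))
```

Each pixel ends up taken from the shot in which it was neither too dark nor saturated, so the merged values span a far wider range than any single 8 bit image.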

What is WDR?

Wide Dynamic Range (WDR) is the term mostly used in the surveillance video industry. The meaning of WDR is pretty much the same as HDR. WDR cameras can capture images which contain both very dark and very bright regions. WDR images are often combined from just two images: one dark and one bright.

What is the difference between HDR and WDR?

None. It is the same thing. Names differ between industries. HDR is mostly used in photography. WDR in video surveillance industry.

What is LDR?

Low dynamic range (LDR) refers to standard 8 bit monitors or image formats. It only makes sense to use the term LDR when talking about HDR imaging: standard cameras, monitors, projectors and JPEG images all use the same 8 bit format, so outside that context there is nothing to distinguish them from.

What is contrast?

Contrast is a measure of how well an object or part of the image stands out from its surroundings. It is highly subjective and depends on many factors, including the individual observer.

What is local contrast?

Local contrast is pretty much the same as contrast in terms of what it defines: a measure of how well an object or part of the image stands out from its background. The term is used to highlight the fact that we are talking about contrast within a part of the image or scene, as opposed to the contrast of the entire image or scene, which in this case is called global contrast.

What is local contrast enhancement?

Local contrast enhancement is a technique for increasing the contrast of a certain part of the image without affecting the rest of the image. It is a very important step of the tone mapping process. Without local contrast enhancement, HDR images will have very low contrast, or a "foggy" look, once compressed to fit a standard monitor.

Global contrast enhanced

Local contrast enhanced

What is tone mapping?

Tone mapping is the process of reducing the dynamic range of an HDR image while trying to retain the local contrast of the parts of the image. Monitors can reproduce only 256 shades of gray, whereas an HDR image can contain 65,000+ shades of gray. The dynamic range of an HDR image must be reduced because it is impossible to show it directly on a standard monitor.

Underexposed image

Normally exposed image

Overexposed image

Linearly mapped HDR image

Logarithmically mapped HDR image

Tonemapped HDR image
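The difference between the linear and the logarithmic mapping named in the captions can be sketched as follows, assuming a 16 bit HDR input and an 8 bit output. Both are global mappings applied to every pixel independently; neither performs the local contrast enhancement that a full tone mapping operator adds. The exact formulas and function names are assumptions made for this example.

```python
import math

MAX_IN = 65535   # largest 16 bit value
MAX_OUT = 255    # largest 8 bit value


def linear_map(value):
    """Scale the whole input range evenly onto the output range."""
    return round(value * MAX_OUT / MAX_IN)


def log_map(value):
    """Compress bright values more than dark ones (log2 of value + 1)."""
    return round(MAX_OUT * math.log2(1 + value) / math.log2(1 + MAX_IN))


for v in (0, 15, 1023, 4095, 65535):
    print(f"{v:>5} -> linear {linear_map(v):>3}, log {log_map(v):>3}")
```

With the linear mapping almost all dark detail collapses to a handful of output levels, while the logarithmic mapping spreads it over many levels at the cost of contrast in the bright regions.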

What is WDR camera?

A WDR camera combines underexposed and overexposed images internally and provides the combined image as its output. The camera achieves this by taking a quick succession of (usually) two shots. The first one is underexposed (dark) and captures details in the bright regions of the scene. The second shot is normal (or overexposed, depending on settings) and captures the mid and dark regions of the scene.

Recent years have seen the slow rise of Wide Dynamic Range (WDR) cameras especially in the surveillance industry.

WDR camera back

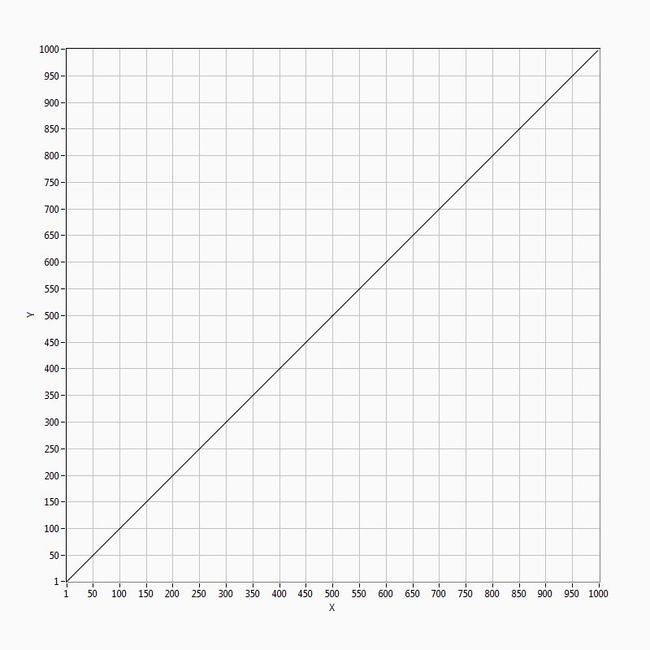

What is linear response?

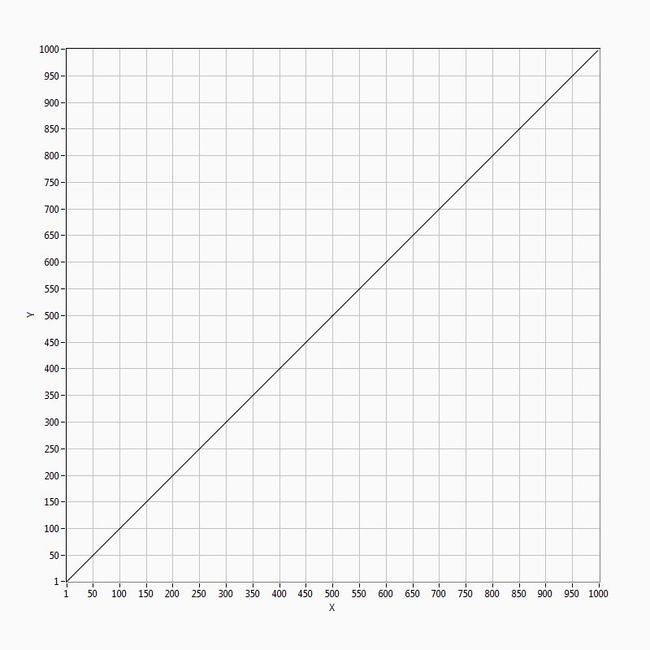

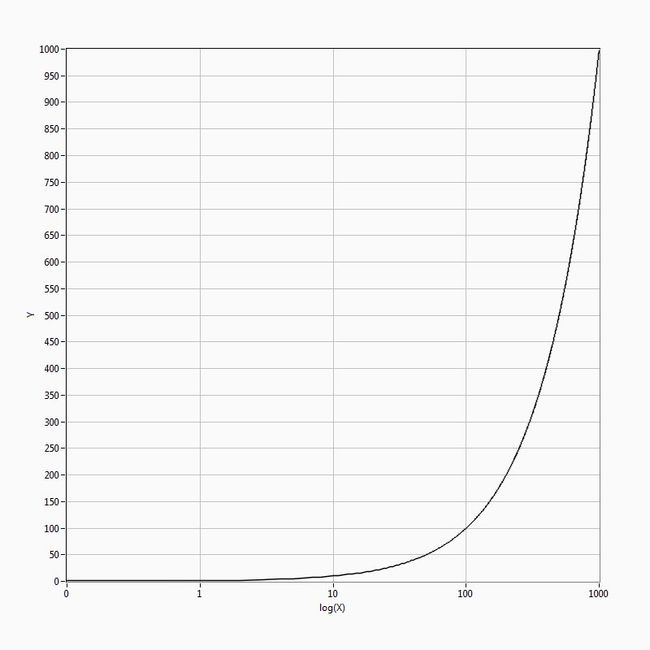

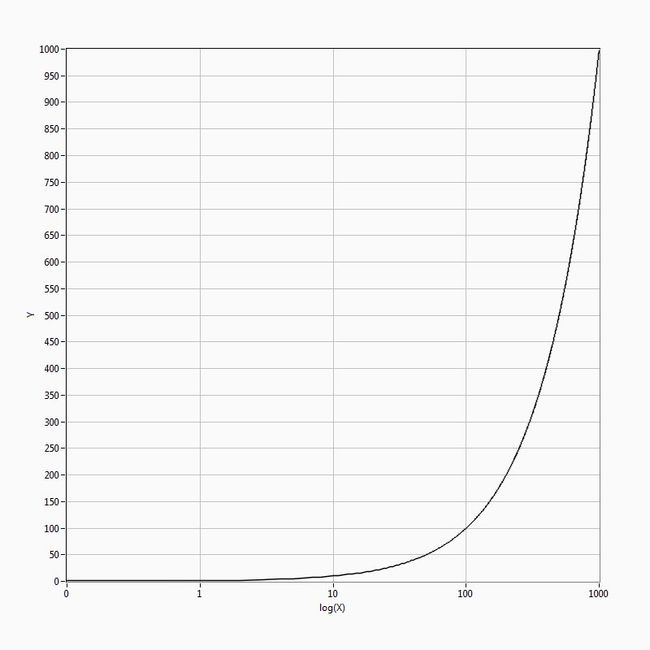

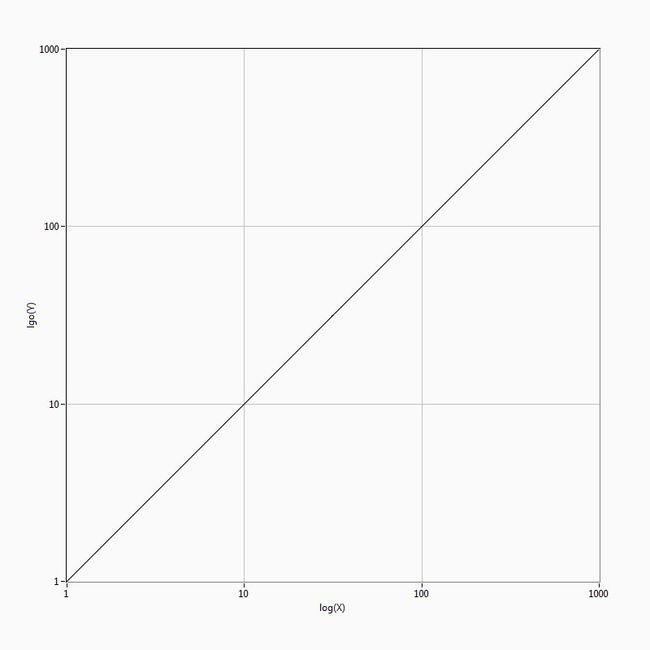

Let's consider one pixel of a camera which is looking at something. It sees one photon (we wish we had a camera which sees individual photons, but let's just imagine that we do) and gives us one count back. If it sees two photons it gives us two counts back. If it sees 1000 photons it gives us 1000 counts. In general its output (O) is linearly proportional to its input (I) with some coefficient (a), which in our example is equal to 1: O=a*I. If a=1/100, the camera gives us 1 count per 100 photons it observes. More precisely, O=a*I+b, where b is the dark current (or dark noise) of the camera.

Linear response: Y=X

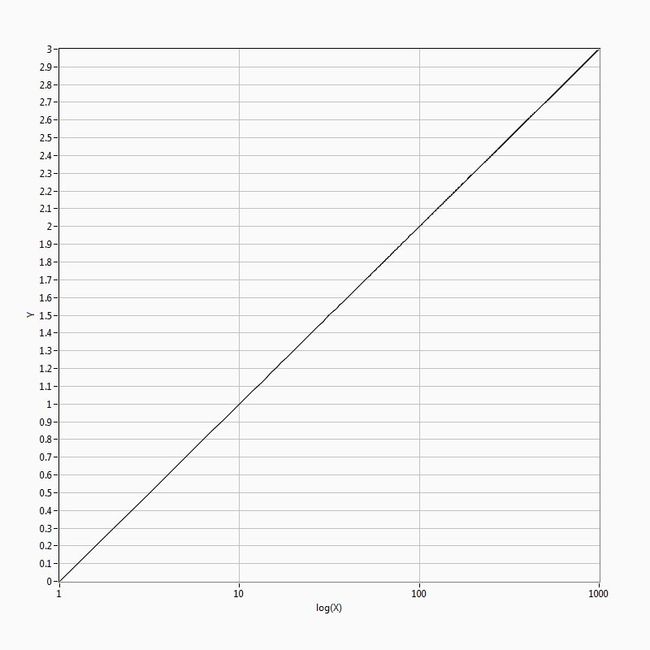

Y=X. Logarithmic X scale.Y=X. Logarithmic X scale.

Y=X. Logarithmic Y scale.Y=X. Logarithmic Y scale.

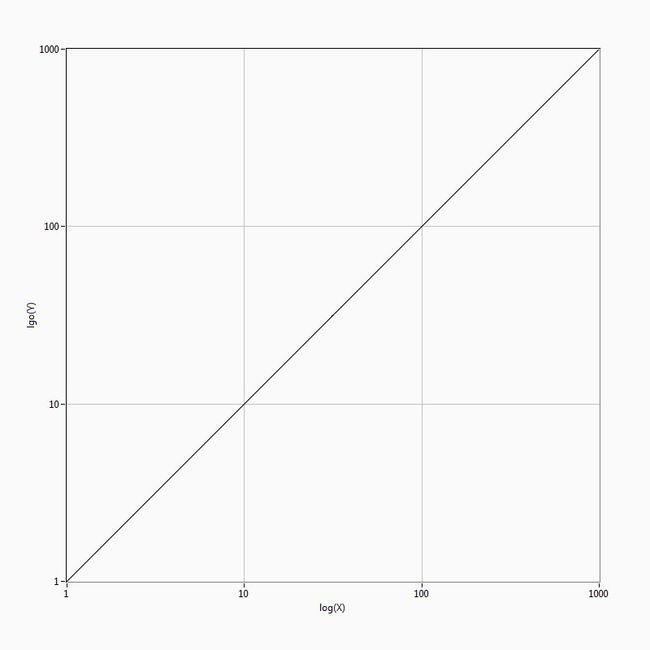

Y=X. Logarithmic X and Y scale.Y=X. Logarithmic X and Y scale.

What is logarithmic response?

Just as with linear response, let's consider one pixel of a camera which is looking at something. It sees one photon and gives us one count back. Now if it sees 10 photons it gives us 2 counts back. If it sees 1000 photons it gives us 4 counts back. So the more photons it sees, the less it reacts to each additional one. The output of the camera is O=a*log(I)+b (where b is dark noise). (The base of the logarithm can be 10 or 2 or 2.71828 or anything else.)
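The two response formulas can be compared directly. In the sketch below, the values a=1, b=1 and base 10 for the logarithmic case are inferred from the example numbers (1, 10 and 1000 photons giving 1, 2 and 4 counts); the text does not state them explicitly. The function names are hypothetical.

```python
import math


def linear_response(photons, a=1.0, b=0.0):
    """O = a*I + b"""
    return a * photons + b


def log_response(photons, a=1.0, b=1.0, base=10):
    """O = a*log(I) + b, defined for photons >= 1."""
    return a * math.log10(photons) / math.log10(base) + b


for photons in (1, 10, 1000):
    print(f"{photons:>4} photons: linear {linear_response(photons):.0f} counts, "
          f"logarithmic {log_response(photons):.0f} counts")

# With a = 1/100 the linear camera reports 1 count per 100 photons.
print(linear_response(1000, a=1 / 100))
```

A thousandfold increase in light raises the linear output a thousandfold but adds only three counts to the logarithmic one, which is why a logarithmic response fits a much wider range of scene brightness into the same number of output levels.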

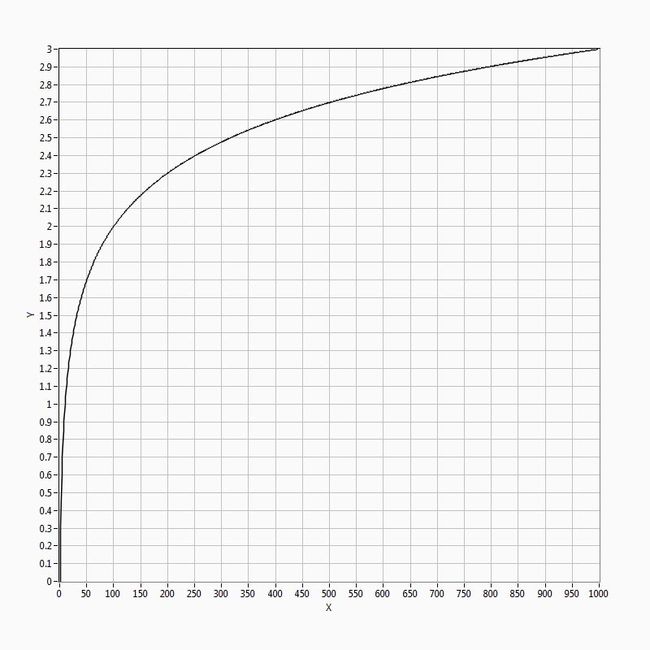

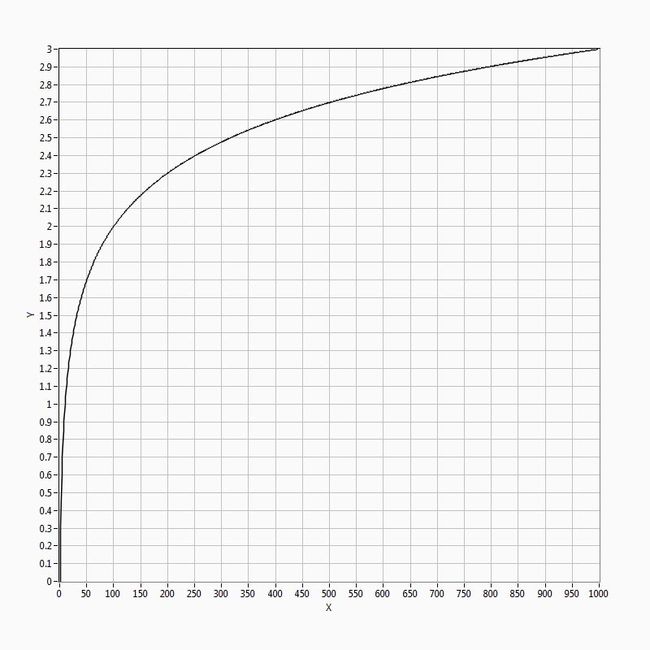

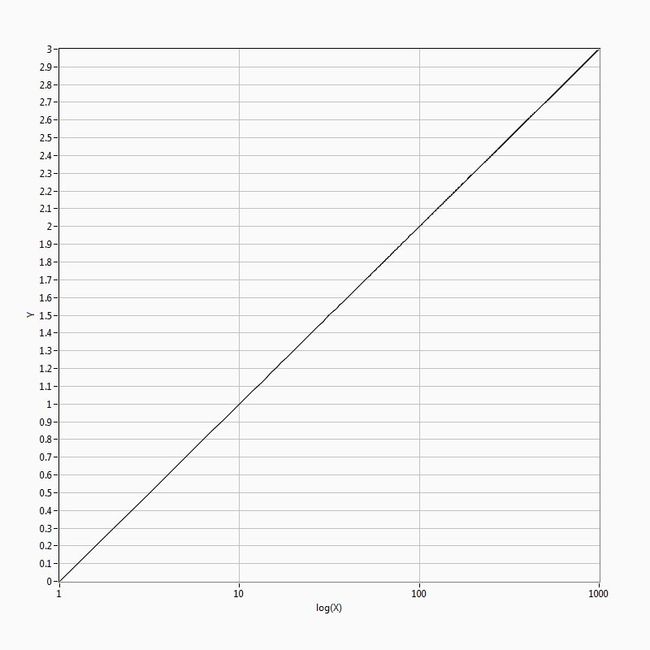

Logarithmic response: Y=log(X)

Y=log(X). Logarithmic X scale.

Y=log(X). Logarithmic Y scale.

Y=log(X). Logarithmic X and Y scale.

What is dark noise?

If we cover the camera so that no light reaches the sensor, we can still see something in the image we get from it. What we see is noise, or dark noise. The intensity of dark noise varies from camera to camera. One can never get rid of it, only minimize it. Having a camera with better electronics helps, and so does cooling the sensor, ideally all the way down to absolute zero (we mean the Kelvin scale).

What is camera noise?

Camera noise consists of dark noise + amplifier noise + readout noise (for CCD cameras).

What is CCD camera?

CCD stands for Charge-Coupled Device. In reality it is a piece of silicon which converts light (photons) to electrical current (electrons). If we add a little bit of electronics it becomes a camera. One very important thing to know about a CCD camera is that it is only possible to read the entire image from it; it is impossible to get just a portion of the image. Another important thing to know is that it (usually) has only one amplifier, which is good because it means that manufacturers can use a good low-noise amplifier.

What is CMOS camera?

CMOS stands for Complementary Metal-Oxide Semiconductor. A CMOS sensor is also a piece of silicon which converts light to electrical current. It works a little differently from a CCD. The important things for us are: 1) it is possible to read any portion of the image without reading the entire image, and 2) each pixel has its own amplifier, which means that (in general) CMOS cameras are a bit noisier than CCD cameras in the same price range.

CCD VS CMOS cameras

Just by looking at a camera it is impossible to tell whether the CCD or the CMOS version is better. It is preferable to compare datasheets. As a matter of fact, the question of "better" is very complex. Before purchasing a camera you should ask yourself what it is you NEED. Is it low noise? High frame rate? A lot of megapixels? Or do you need it to be inexpensive? Remember that you cannot have all of the above. As for CCD vs CMOS, both technologies are very well developed nowadays. Compare datasheets and make a choice based on your needs. Don't waste money on features you are not going to use.

Choice: a regular camera with a big dynamic range vs. WDR camera

Let's consider grayscale cameras. (RGB will act the same in terms of dynamic range. Color information takes more bits to transfer from the camera but doesn't provide any significantly different information on dynamic range of the scene.)

Regular grayscale cameras provide the output image in 8 bit format, so each image can contain 256 (2^8) shades of gray. Some cameras provide 10 or even 12 bits of information.

The response curve of a regular camera is linear. This means that the camera output is directly proportional to the light. The drawback of these cameras is that they usually show little detail in the low-light regions of the image.

The response curves of WDR cameras are logarithmic. This means that they are proportionally more sensitive to low light. The drawback of these cameras is that they have relatively low contrast in the bright regions of the image.