【Abstract】This paper concentrates on the meaning of the form Ax=b. Although it is an essential part of the chapter Linear Equations in Linear Algebra, its many different uses can make it confusing for readers. After thinking about it at length, I summarize some of the meanings of the matrix equation.

【Key Words】 Linear algebra, matrix equation, linear system.

1 The connection between matrices and vectors

1.1 Definition

Before we talk about the more sophisticated questions, I think it is necessary to state the definition of a matrix clearly. According to Wikipedia, "In mathematics, a matrix (plural matrices) is a rectangular array of numbers, symbols, or expressions, which is arranged in rows and columns." In linear algebra, of course, the entries are usually numbers.

There are many kinds of matrices; strictly speaking, matrices are classified into many groups according to their specific features.

A rectangular matrix is in row echelon form if it has the following three properties: all nonzero rows are above any rows of all zeros; each leading entry of a row is in a column to the right of the leading entry of the row above it; all entries in a column below a leading entry are zeros.

If a matrix in echelon form satisfies the following additional conditions, then it is in reduced echelon form: the leading entry in each nonzero row is 1; each leading 1 is the only nonzero entry in its column.

We should point out that a particular matrix may have many different echelon forms, but it has only one reduced row echelon form. Thus the values of the pivots may differ from one echelon form to another, but the pivot positions and pivot columns are always the same. Next, we explain why.

Let us begin with the most fundamental material. We have all learned to work with a system of equations (although it is sometimes difficult to solve). Each equation represents a linear relation. A system of linear equations has either no solution, exactly one solution, or infinitely many solutions. It is said to be consistent if it has at least one solution; otherwise, it is inconsistent.

We can use a matrix to represent such a system. An m×n matrix is a rectangular array of numbers with m rows and n columns. Given the system, with the coefficients of each variable aligned in columns, the resulting matrix is called the coefficient matrix. Adding a column containing the constants from the right sides of the equations gives the augmented matrix.

A particular system has only one solution set, described by one general solution (I do not say it must have only one solution), and this corresponds to the fact that its augmented matrix has only one reduced echelon form. However, we can swap rows, scale rows, and add multiples of one row to another in different orders, which results in many different echelon forms. The unique reduced echelon form means that the pivot positions and pivot columns are determined uniquely, while the different echelon forms mean that the pivot entries themselves can take many possible values.
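To make the row reduction concrete, here is a minimal sketch in Python. The function name rref and the example system are my own assumptions for illustration; exact fractions are used so that no rounding occurs. The last lines check that the same system, written with its rows reordered and one row scaled, reaches the same reduced echelon form and the same pivot columns.

```python
from fractions import Fraction

def rref(rows):
    """Return (reduced echelon form, list of pivot column indices)."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    pivot_cols = []
    r = 0  # row in which the next pivot will be placed
    for c in range(n_cols):
        # look for a nonzero entry in column c, at row r or below
        p = next((i for i in range(r, n_rows) if m[i][c] != 0), None)
        if p is None:
            continue  # no pivot in this column
        m[r], m[p] = m[p], m[r]            # row interchange
        lead = m[r][c]
        m[r] = [x / lead for x in m[r]]    # scale so the leading entry is 1
        for i in range(n_rows):            # clear the rest of the column
            if i != r and m[i][c] != 0:
                factor = m[i][c]
                m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivot_cols.append(c)
        r += 1
        if r == n_rows:
            break
    return m, pivot_cols

# Augmented matrix of an illustrative system (assumed example).
system = [[1, -2, 1, 0], [0, 2, -8, 8], [-4, 5, 9, -9]]
# The same system with rows reordered and one row multiplied by 3.
reordered = [[0, 6, -24, 24], [-4, 5, 9, -9], [1, -2, 1, 0]]

reduced, pivots = rref(system)
assert reduced == [[1, 0, 0, 29], [0, 1, 0, 16], [0, 0, 1, 3]]
assert pivots == [0, 1, 2]
assert rref(reordered) == (reduced, pivots)
```

The last column of the reduced form gives the unique solution x1=29, x2=16, x3=3 of this particular system.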

The identity matrix of size n, sometimes ambiguously called a unit matrix, is the n×n square matrix with ones on the main diagonal and zeros elsewhere. It is usually denoted I, or I_n when the size matters; less frequently, some mathematics books use another letter such as E or U, meaning "unit matrix". This is particularly easy to understand, so I highlight just one point: any matrix A is equal to the product AI, where I is the identity matrix of matching size.
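The point about the identity matrix can be checked with a few lines of Python. This is only a small sketch; the helper names identity and matmul and the matrix A are assumptions chosen for illustration.

```python
def identity(n):
    """n x n matrix with ones on the main diagonal and zeros elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

A = [[1, 2, 3], [4, 5, 6]]           # a 2x3 matrix
assert matmul(A, identity(3)) == A   # A * I_3 = A
assert matmul(identity(2), A) == A   # I_2 * A = A
```

Note that for a matrix that is not square, the identity on the right and the identity on the left have different sizes.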

1.2 Connections

We have talked about many features of matrices, and we already have a good command of vectors. So what is the connection between them? Are they isolated from each other? The answer, of course, is no. In fact, they are not only connected but closely related. In a general sense, both stand for an operation. In a narrow sense, a matrix is a higher-dimensional version of a vector.

I am not a diligent person, and I like to borrow the good ideas of others, so when I find a good essay, I like to share it with you. Recently I found an article that addresses this question. Its main claim is that the essence of a matrix is a description of motion. I think this essay shows the connection between vectors and matrices in a deep and humorous way. If you want to go deeper into this topic, or simply to learn linear algebra better, please follow the hyperlink to that article.

2 Span and rank

2.1 Span

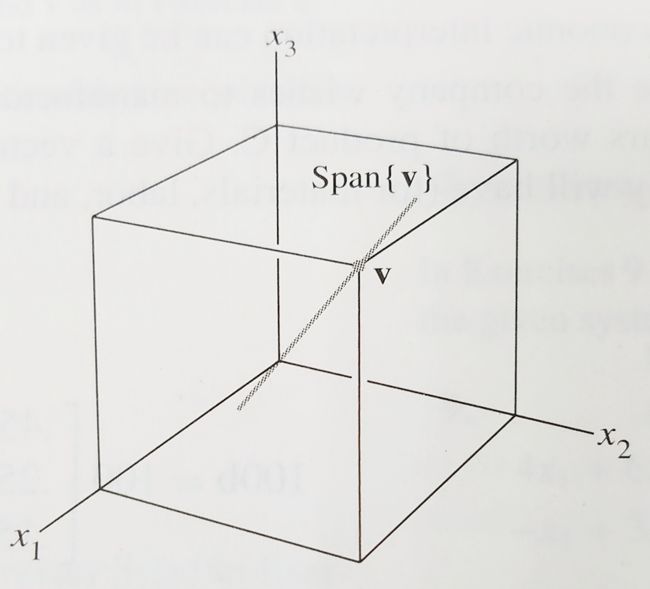

In linear algebra, the linear span (also called the linear hull or just the span) of a set of vectors in a vector space is the intersection of all subspaces containing that set. The linear span of a set of vectors is therefore itself a vector space. Equivalently, Span{v1,v2,...,vp} is the set of all vectors that can be written in the form c1v1+c2v2+...+cpvp, with c1,c2,...,cp scalars. A consequence follows: if some vectors generate a linear space, then every vector in that space is a linear combination of them.

2.2 *Rank

The theory of matrix rank is an important part of matrix theory and runs through almost the whole course. Rank has wide applications: in inverting square matrices, in judging whether a system of linear equations has a solution and how many solutions it has, in judging linear dependence, and in the study of the eigenvalues (characteristic values) of a matrix.

What is rank? What is the relationship between rank and span? What are the characteristics of rank? Actually, we do not need to answer all of these complicated questions now, since we will study them more deeply later. All we need to know for now is that the rank of a matrix is the dimension of the span of its columns. If you are confident enough, you can have a glance at the linked article to get a first impression of rank. Please follow the hyperlink to that essay.

Also, limited by my current level, I simply list the following web pages for reference, without claiming to understand them well.

THE APPLICATION OF RANK IN LINEAR ALGEBRA.

A NATURE OF A DIAGONALIZABLE MATRIX.

THE EQUIVALENT DEFINITIONS OF RANK OF MATRICES.

3 The matrix equation Ax=b (some equivalences)

3.1 Rephrase concepts of vector equation

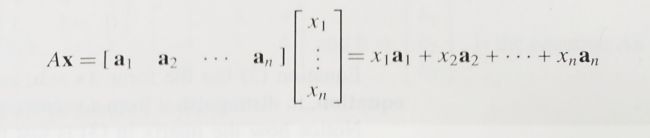

If A is an m×n matrix with columns a1,...,an, and if x is in R^n, then the product of A and x, denoted by Ax, is the linear combination of the columns of A using the corresponding entries of x as weights: Ax = x1a1+x2a2+...+xnan. To put it directly, we can use the accompanying figure to rephrase the concepts of linear space and linear combination.

Notice that the matrix above is just the matrix of coefficients of the system of linear equations. The vector equation can therefore be written in a compact form, and this form is called the matrix equation.
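The definition of Ax as a combination of columns can be compared with the usual row-by-row rule in a short sketch. The function names and the numbers below are assumptions chosen for illustration only.

```python
def matvec_by_columns(A, x):
    """Ax as x1*a1 + ... + xn*an, where a1..an are the columns of A."""
    m = len(A)
    result = [0] * m
    for j, weight in enumerate(x):
        column = [A[i][j] for i in range(m)]
        result = [r + weight * c for r, c in zip(result, column)]
    return result

def matvec_by_rows(A, x):
    """Ax computed entry by entry, as row times vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

A = [[1, 2, -1],
     [0, -5, 3]]
x = [4, 3, 7]

# 4*(1,0) + 3*(2,-5) + 7*(-1,3) = (3, 6)
assert matvec_by_columns(A, x) == [3, 6]
assert matvec_by_rows(A, x) == [3, 6]
```

Both rules give the same vector; the column view is the one that connects Ax=b with spans and linear combinations.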

3.2 The condition for linear dependence

The definition of Ax leads directly to the following useful fact.

The equation Ax=b has a solution if and only if b is a linear combination of the columns of A. Thus we can translate the question "Is b in Span{a1,a2,...,an}?" into the mathematical calculation of whether Ax=b is consistent.
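The question "Is b in Span{a1,...,an}?" can be answered mechanically: row reduce the augmented matrix and check that the last column is not a pivot column. Below is a minimal sketch of that test; the names pivot_columns and in_span and the sample vectors are assumptions of mine, not part of any library.

```python
from fractions import Fraction

def pivot_columns(rows):
    """Pivot column indices found by forward elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots, r = [], 0
    for c in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        for i in range(r + 1, len(m)):     # zero out entries below the pivot
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
        if r == len(m):
            break
    return pivots

def in_span(vectors, b):
    """True if b is a linear combination of the given vectors."""
    # Build the augmented matrix [v1 ... vp | b]; the vectors are its columns.
    augmented = [[v[i] for v in vectors] + [b[i]] for i in range(len(b))]
    # The system is consistent iff the last column is not a pivot column.
    return len(vectors) not in pivot_columns(augmented)

a1, a2 = [1, -2, -5], [2, 5, 6]
assert in_span([a1, a2], [7, 4, -3])      # b = 3*a1 + 2*a2
assert not in_span([a1, a2], [7, 4, 0])   # no weights work for this b
```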

An indexed set of vectors {v1,...,vp} is said to be linearly independent if the homogeneous equation x1v1+...+xpvp=0 has only the trivial solution; otherwise, it is linearly dependent. Reading this, you are bound to be curious about the relationship between linear dependence and linear combinations.

First, I list some special cases.

A set of two vectors {v1,v2} is linearly dependent if at least one of the vectors is a multiple of the other.

If a set S={v1,...,vp} in R^n contains the zero vector, then the set is linearly dependent.

Then we look at the following general result.

An indexed set S={v1,...,vp} of two or more vectors is linearly dependent if and only if at least one of the vectors in S is a linear combination of the others. In fact, if S is linearly dependent and v1 is not the zero vector, then some vj (with j>1) is a linear combination of the preceding vectors v1,...,vj-1. Warning: this theorem does not say that every vector in a linearly dependent set is a linear combination of the preceding vectors. A dependence relation may involve only a few of the vectors. For instance, if the dependence comes only from vn being a multiple of v1, then the vectors between them need not be combinations of the vectors that precede them.

If a set contains more vectors than there are entries in each vector, then the set is linearly dependent. That is, any set {v1,...,vp} in R^n is linearly dependent if p>n.
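The cases above can be tested with one rule: place the vectors as the columns of a matrix; they are linearly independent exactly when every column is a pivot column, so that the homogeneous equation has no free variable. The sketch below assumes this rule; the function names and the sample vectors are chosen only for illustration.

```python
from fractions import Fraction

def count_pivots(rows):
    """Number of pivot positions found by forward elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    for c in range(len(m[0])):
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue
        m[r], m[p] = m[p], m[r]
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
        if r == len(m):
            break
    return r

def is_independent(vectors):
    """True if x1*v1 + ... + xp*vp = 0 has only the trivial solution."""
    n = len(vectors[0])
    columns_as_matrix = [[v[i] for v in vectors] for i in range(n)]
    return count_pivots(columns_as_matrix) == len(vectors)

assert not is_independent([[3, 1], [6, 2]])                   # one is a multiple of the other
assert not is_independent([[1, 2, 3], [0, 0, 0]])             # contains the zero vector
assert not is_independent([[2, 1], [4, -1], [-2, 2]])         # p = 3 > n = 2
assert not is_independent([[1, 2, 3], [4, 5, 6], [2, 1, 0]])  # 2*v1 - v2 + v3 = 0
assert is_independent([[1, 0, 0], [0, 1, 0]])
```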

I once came across an interesting question.

According to the question, there seems to be a relationship between the entries of b. For R^3, the equation tells us that b must satisfy a constraint, which shows that b must lie on a plane through the origin. We can generalize this conclusion: when the columns of a coefficient matrix A do not span the whole space, the right-hand side b must satisfy at least one linear constraint, so the vectors b for which Ax=b is consistent form a lower-dimensional subspace through the origin (of dimension n-1 in R^n when there is a single constraint).
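The original question is not reproduced here, so the sketch below uses a stand-in matrix that I assume for illustration. For this particular A, every vector of the form Ax satisfies the single constraint b1 - (1/2)b2 + b3 = 0, which is the equation of a plane through the origin in R^3.

```python
from fractions import Fraction

# Assumed example matrix; its columns do not span R^3.
A = [[1, 3, 4],
     [-4, 2, -6],
     [-3, -2, -7]]

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def constraint(b):
    """Left side of b1 - (1/2)*b2 + b3 = 0 (specific to the A above)."""
    return b[0] - Fraction(1, 2) * b[1] + b[2]

# Every b of the form Ax lies on the plane.
for x in ([1, 0, 0], [0, 1, 0], [0, 0, 1], [2, -5, 7]):
    assert constraint(matvec(A, x)) == 0

# A vector off the plane, such as (1, 0, 0), cannot be written as Ax.
assert constraint([1, 0, 0]) != 0
```

Because the constraint is linear and holds for each column of A, it holds for every linear combination of the columns.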

3.3 A pivot position in every row

It is not difficult to see that Ax=b has a solution for every b in R^m exactly when the coefficient matrix A has a pivot position in every row.

Consequently, if Ax=b has a solution for every possible b, we can be sure that each row of A has a pivot position. Be careful: here A is the coefficient matrix, not the augmented matrix. If the augmented matrix has a pivot in its last column, the system is inconsistent.

3.4 Variables of the algebraic equations

A system of linear equations is said to be homogeneous if it can be written in the form Ax=0, where A is an m×n matrix and 0 is the zero vector. For this equation, x=0 is always a solution. This zero solution is usually called the trivial solution. However, there may be other solutions. For a given equation Ax=0, the important question is whether there exists a nontrivial solution, that is, a nonzero vector x that satisfies Ax=0.

The homogeneous equation Ax=0 has a nontrivial solution if and only if the equation has at least one free variable. The solutions of the homogeneous equation are therefore closely related to the algebraic equations of the system: if the algebraic equations have a free variable, the matrix equation Ax=0 has a nontrivial solution.

How does the number of free variables affect the solution set?

If the only solution is zero, that is, the homogeneous equation has only the trivial solution and no free variable, the solution set is Span{0}.

If the homogeneous equation has exactly one free variable, the solution set is a line through the origin.

A plane through the origin provides a good mental image for the solution set of the homogeneous equation when there are two free variables.

So what if the vector b is not the zero vector?

By simplifying the augmented matrix, we can obtain an answer of the form x = p + tv, where t is a parameter. This form is called the parametric vector equation of a line. Similarly, solutions represented in this way (combining parameters and vectors) are said to be in parametric vector form. The nonhomogeneous equation has an additional constant vector p, which can be regarded as a translation.

Thus, when there is one free variable, the solution set of Ax=b is a line through p parallel to the solution set of Ax=0.
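The form x = p + tv can be checked numerically. In the sketch below the matrix A, the vector b, the particular solution p, and the direction v are assumed values chosen for illustration; v solves Ax=0, and adding any multiple of it to p still solves Ax=b.

```python
from fractions import Fraction

# Assumed example data.
A = [[3, 5, -4],
     [-3, -2, 4],
     [6, 1, -8]]
b = [7, -1, -4]
p = [-1, 2, 0]                  # one particular solution of Ax = b
v = [Fraction(4, 3), 0, 1]      # direction: a solution of Ax = 0

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def point_on_line(t):
    """The vector p + t*v."""
    return [pi + t * vi for pi, vi in zip(p, v)]

assert matvec(A, v) == [0, 0, 0]     # v lies in the solution set of Ax = 0
assert matvec(A, p) == b             # p solves Ax = b
for t in (0, 1, -3, Fraction(5, 2)):
    assert matvec(A, point_on_line(t)) == b   # the whole line solves Ax = b
```

The check works because A(p + tv) = Ap + tAv = b + 0, which is the translation described above.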

3.5 One-to-one

A linear transformation T is one-to-one if and only if the equation T(x)=0 has only the trivial solution. We discuss transformations in more detail in chapter 4.

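To see why, suppose T is not one-to-one. Then there is a b that is the image of at least two different vectors in R^n, say u and v, with T(u)=T(v)=b. But then, since T is linear, T(u-v)=T(u)-T(v)=0. The vector u-v is not zero, so T(x)=0 has a nontrivial solution. This contradicts the assumption that T(x)=0 has only the trivial solution. Conversely, if T is one-to-one, then T(x)=0 has at most one solution, and since T(0)=0, it is the trivial one.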

4 Linear transformations (the geometric significance)

In the last chapter, we discussed the equivalences of the matrix equation. So what is the geometric meaning of Ax=b?

We can think of the matrix A as an object that "acts" on a vector x by multiplication to produce a new vector called Ax.

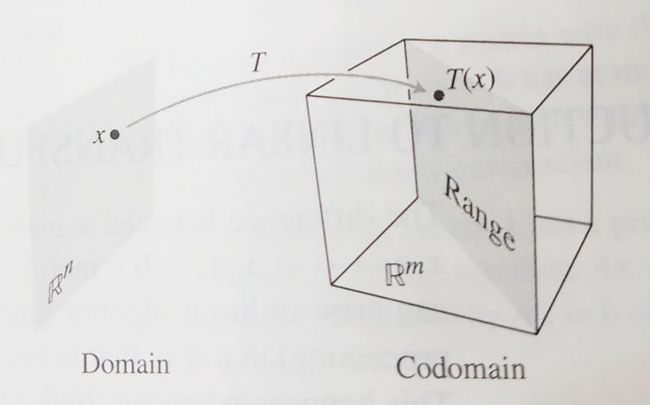

A transformation (or function, or mapping) T from R^n to R^m is a rule that assigns to each vector x in R^n a vector T(x) in R^m. The set R^n is called the domain of T, and R^m is called the codomain of T. For x in R^n, the vector T(x) is called the image of x, and the set of all images T(x) is called the range of T.

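For a matrix transformation, T(x) = Ax. Conversely, given a linear transformation T, we can find its matrix A.

This also applies to the case of a rotation.

To find A for a transformation of the plane, all we have to do is find T(e1) and T(e2), where e1 and e2 are the columns of the identity matrix; these images are the columns of A.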
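As a sketch of this recipe, the code below builds A column by column from the images of e1 and e2, using a rotation of the plane as the transformation. The helper names standard_matrix and rotation are hypothetical, and the angle is an arbitrary choice for the example.

```python
import math

def standard_matrix(T, n):
    """Matrix whose j-th column is T(e_j), for a linear map T on R^n."""
    columns = [T([1 if i == j else 0 for i in range(n)]) for j in range(n)]
    m = len(columns[0])
    return [[columns[j][i] for j in range(n)] for i in range(m)]

def rotation(theta):
    """Counterclockwise rotation of the plane about the origin by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return lambda x: [c * x[0] - s * x[1], s * x[0] + c * x[1]]

T = rotation(math.pi / 2)        # quarter turn
A = standard_matrix(T, 2)        # columns are T(e1) and T(e2)

# Up to rounding, A is [[0, -1], [1, 0]].
expected = [[0, -1], [1, 0]]
for row, expected_row in zip(A, expected):
    for entry, target in zip(row, expected_row):
        assert math.isclose(entry, target, abs_tol=1e-12)

# Multiplying by A reproduces T on any other vector.
x = [3, 4]
Ax = [sum(a * xi for a, xi in zip(row, x)) for row in A]
assert all(math.isclose(u, w, abs_tol=1e-12) for u, w in zip(Ax, T(x)))
```

Only two evaluations of T are needed because every x in R^2 is x1e1+x2e2, and linearity gives T(x) = x1T(e1)+x2T(e2).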

5 Summary

In this article, we introduced the basic matrix equation. We first talked about the fundamental concepts, then we considered some of the equivalences. Finally, we looked at this knowledge from a geometric angle. To master these ideas, you had better do some practice.

6 References

* hyperlink to arrive

* Wikipedia
