Implementing an Android Audio Player with FFmpeg and OpenSL ES

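Preface

In earlier posts we decoded video with FFmpeg and rendered it with OpenGL ES. In this post we use OpenSL ES to feed PCM audio to the device from native code and play it.

OpenSL ES is likewise an audio specification defined by The Khronos Group Inc. There is plenty of material about it online, and the Android NDK samples include an example, native-audio, which implements the various OpenSL ES features in detail. This post does not cover the theory again; using the API largely amounts to making the calls in a fixed sequence.

When decoding with FFmpeg, the most suitable approach is buffer-queue management: keep feeding the decoded PCM data into the playback buffers and let the hardware play it. For background on sample rate, channel count, sample bit depth, and related audio concepts, please consult other sources.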
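As a rough illustration of why buffers must be fed continuously, the sketch below computes how many bytes of packed PCM one second of playback consumes and how long a buffer of a given size lasts. The function names are hypothetical and are not part of the project.

```cpp
#include <cstdint>

// Bytes of packed (interleaved) PCM consumed per second of playback.
// Hypothetical helper for illustration only.
std::int64_t pcmBytesPerSecond(int sample_rate_hz, int channels,
                               int bits_per_sample) {
    return static_cast<std::int64_t>(sample_rate_hz) * channels *
           (bits_per_sample / 8);
}

// Playback duration, in milliseconds, of a PCM buffer of buffer_bytes bytes.
double pcmBufferDurationMs(std::int64_t buffer_bytes, int sample_rate_hz,
                           int channels, int bits_per_sample) {
    return 1000.0 * static_cast<double>(buffer_bytes) /
           static_cast<double>(
               pcmBytesPerSecond(sample_rate_hz, channels, bits_per_sample));
}
```

For 44.1 kHz, 16-bit stereo audio, `pcmBytesPerSecond(44100, 2, 16)` gives 176400 bytes per second, so a 4096-byte buffer lasts only about 23 ms before the next one is needed.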

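Requirements

- Demonstrate audio decoding and playback only; no video decoding and no audio/video synchronization;

- Use FFmpeg for audio demuxing and decoding, and OpenSL ES for feeding and playing the PCM data;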

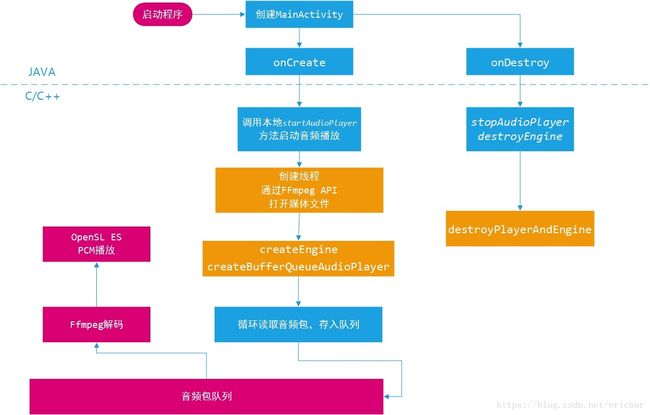

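Architecture

The design again resembles the playback flow of the earlier video player. Because no surface management is involved, it is somewhat simpler. Note the following points:

1. createEngine() and createBufferQueueAudioPlayer() are called after the media file has been opened, because they depend on the media's audio properties (channel count, sample rate, and so on);

2. The player obtains PCM data through a callback, which reads packets from the media queue and decodes them, rather than through a separate thread that pushes data actively. This differs from video decoding.

3. Because the playback speed of audio is determined by the sample rate, it needs no manual pacing; the problem of video frames playing too fast or too slow does not arise.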
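The callback-driven flow in point 2 can be sketched without any Android dependency. The code below is a simulation only: `MockBufferQueue`, `MockDecoder`, `onBufferConsumed`, and `runPlayback` are hypothetical stand-ins for the OpenSL ES buffer queue, the FFmpeg packet queue plus decoder, and the player callback. In this mock the callback fires only after a buffer has finished playing, so the first buffer has to be enqueued by hand.

```cpp
#include <cstddef>
#include <cstdint>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for a playback buffer queue.
class MockBufferQueue {
public:
    using Callback = std::function<void(MockBufferQueue&)>;

    void registerCallback(Callback cb) { callback_ = std::move(cb); }
    void enqueue(std::vector<std::int16_t> pcm) {
        pending_.push_back(std::move(pcm));
    }

    // Simulates the device finishing one buffer, then asking for more data.
    // Returns false when nothing was queued (playback has stalled).
    bool playOne() {
        if (pending_.empty()) return false;
        played_samples_ += pending_.front().size();
        pending_.pop_front();
        if (callback_) callback_(*this);
        return true;
    }

    std::size_t playedSamples() const { return played_samples_; }

private:
    std::deque<std::vector<std::int16_t>> pending_;
    Callback callback_;
    std::size_t played_samples_ = 0;
};

// Hypothetical stand-in for "read one packet from the queue and decode it".
struct MockDecoder {
    std::deque<std::vector<std::int16_t>> packets;

    bool decodeNext(std::vector<std::int16_t>& out) {
        if (packets.empty()) return false;
        out = std::move(packets.front());
        packets.pop_front();
        return true;
    }
};

// Callback body: pull exactly one packet, decode it, enqueue the PCM.
void onBufferConsumed(MockBufferQueue& queue, MockDecoder& decoder) {
    std::vector<std::int16_t> pcm;
    if (decoder.decodeNext(pcm)) queue.enqueue(std::move(pcm));
}

// Runs the simulation and returns the number of samples played.
std::size_t runPlayback(MockDecoder& decoder, bool prime) {
    MockBufferQueue queue;
    queue.registerCallback(
        [&decoder](MockBufferQueue& q) { onBufferConsumed(q, decoder); });
    if (prime) onBufferConsumed(queue, decoder);  // first call made by hand
    while (queue.playOne()) {
    }
    return queue.playedSamples();
}
```

With three packets of 4, 4, and 2 samples, `runPlayback(decoder, true)` plays all 10 samples, while the unprimed variant plays nothing because no callback is ever triggered.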

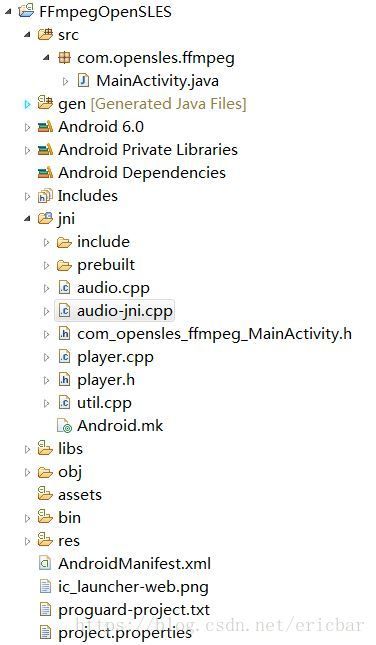

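Code structure

audio-jni.cpp implements the JNI functions; the native code is built into the libaudio-jni.so library.

audio.cpp handles audio management based on OpenSL ES.

player.cpp opens and plays the media file (based on FFmpeg).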

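Main code

The core implementation of this project is audio-jni.cpp (the full source is in the GitHub repository linked at the end of this post). Pay particular attention to the following points, otherwise audio will not play:

1. In createBufferQueueAudioPlayer(), the second field of SLDataFormat_PCM format_pcm is the channel count and the third is the sample rate. The sample rate differs between FFmpeg and OpenSL ES by a factor of 1000, because FFmpeg reports Hz while OpenSL ES expects milliHertz. Also pay attention to channelMask, which must match the channel count. For stereo, the two speakers must be combined with a bitwise OR (SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT) rather than simply set to SL_SPEAKER_FRONT_CENTER, otherwise CreateAudioPlayer fails. This is a problem I ran into myself.

2. After SetPlayState puts the player into SL_PLAYSTATE_PLAYING, the callback is not invoked on its own to fetch data, so we have to call it once ourselves (through the fireOnPlayer function) to trigger the first read.

3. Unlike SDL, the callback does not tell us how many bytes the lower layer wants. We can therefore keep things simple: read one packet, decode it, and hand the result back. Once that data has been consumed, the callback is invoked again to read more PCM data, so this is not inefficient.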
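The parameter mapping in point 1 can be sketched as follows. So that the snippet compiles off-device, the speaker constants are redefined locally with the values from `<SLES/OpenSLES.h>`; `toMilliHertz` and `channelMaskFor` are hypothetical helper names, and handling only mono and stereo is an assumption made for brevity.

```cpp
#include <cstdint>

// Values mirrored from <SLES/OpenSLES.h>; on a device, include that header
// and use SL_SPEAKER_FRONT_LEFT etc. directly instead.
constexpr std::uint32_t kSpeakerFrontLeft = 0x00000001;    // SL_SPEAKER_FRONT_LEFT
constexpr std::uint32_t kSpeakerFrontRight = 0x00000002;   // SL_SPEAKER_FRONT_RIGHT
constexpr std::uint32_t kSpeakerFrontCenter = 0x00000004;  // SL_SPEAKER_FRONT_CENTER

// FFmpeg reports the sample rate in Hz; OpenSL ES expects milliHertz.
constexpr std::uint32_t toMilliHertz(int sample_rate_hz) {
    return static_cast<std::uint32_t>(sample_rate_hz) * 1000u;
}

// The channel mask must describe exactly as many speakers as there are
// channels. Returns 0 for channel counts this sketch does not handle.
constexpr std::uint32_t channelMaskFor(int channels) {
    return channels == 1   ? kSpeakerFrontCenter
           : channels == 2 ? (kSpeakerFrontLeft | kSpeakerFrontRight)
                           : 0u;
}
```

On a device, the results would go into the `numChannels`, `samplesPerSec`, and `channelMask` fields of `SLDataFormat_PCM`; for 44.1 kHz stereo that means `samplesPerSec` of 44100000 and a mask of `0x3`.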

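Below is the source of audio.cpp. audio_decode_frame() decodes the audio data with FFmpeg's avcodec_decode_audio4(). init_filter_graph(), av_buffersrc_add_frame(), and av_buffersink_get_frame() then convert the decoded audio to a uniform format: the output is always stored as AV_SAMPLE_FMT_S16, while the sample rate and channel count keep the values of the original audio. The benefit is that different audio formats no longer decode to different PCM storage types.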

```cpp
#include <stdio.h>   // snprintf
#include <string.h>  // memcpy

#include "player.h"

#define DECODE_AUDIO_BUFFER_SIZE ((AVCODEC_MAX_AUDIO_FRAME_SIZE * 3))

static AVFilterContext *in_audio_filter;   // the first filter in the audio chain
static AVFilterContext *out_audio_filter;  // the last filter in the audio chain
static AVFilterGraph *agraph;              // audio filter graph
static struct AudioParams audio_filter_src;

// Builds the chain abuffer -> aformat -> abuffersink, which converts decoded
// frames to AV_SAMPLE_FMT_S16 while keeping the source sample rate and layout.
// On failure the partially built graph is freed and a negative AVERROR is returned.
static int init_filter_graph(AVFilterGraph **graph, AVFilterContext **src,
        AVFilterContext **sink) {
    AVFilterGraph *filter_graph;
    AVFilterContext *abuffer_ctx;
    AVFilter *abuffer;
    AVFilterContext *aformat_ctx;
    AVFilter *aformat;
    AVFilterContext *abuffersink_ctx;
    AVFilter *abuffersink;
    char options_str[1024];
    char ch_layout[64];
    int err;

    /* Create a new filtergraph, which will contain all the filters. */
    filter_graph = avfilter_graph_alloc();
    if (!filter_graph) {
        av_log(NULL, AV_LOG_ERROR, "Unable to create filter graph.\n");
        return AVERROR(ENOMEM);
    }

    /* Create the abuffer filter;
     * it will be used for feeding the data into the graph. */
    abuffer = avfilter_get_by_name("abuffer");
    if (!abuffer) {
        av_log(NULL, AV_LOG_ERROR, "Could not find the abuffer filter.\n");
        err = AVERROR_FILTER_NOT_FOUND;
        goto fail;
    }
    abuffer_ctx = avfilter_graph_alloc_filter(filter_graph, abuffer, "src");
    if (!abuffer_ctx) {
        av_log(NULL, AV_LOG_ERROR,
                "Could not allocate the abuffer instance.\n");
        err = AVERROR(ENOMEM);
        goto fail;
    }

    /* Set the filter options through the AVOptions API. */
    av_get_channel_layout_string(ch_layout, sizeof(ch_layout), (int) 0,
            audio_filter_src.channel_layout);
    av_opt_set(abuffer_ctx, "channel_layout", ch_layout,
            AV_OPT_SEARCH_CHILDREN);
    av_opt_set(abuffer_ctx, "sample_fmt",
            av_get_sample_fmt_name(audio_filter_src.fmt),
            AV_OPT_SEARCH_CHILDREN);
    av_opt_set_q(abuffer_ctx, "time_base",
            (AVRational ) { 1, audio_filter_src.freq },
            AV_OPT_SEARCH_CHILDREN);
    av_opt_set_int(abuffer_ctx, "sample_rate", audio_filter_src.freq,
            AV_OPT_SEARCH_CHILDREN);

    /* Now initialize the filter; we pass NULL options, since we have already
     * set all the options above. */
    err = avfilter_init_str(abuffer_ctx, NULL);
    if (err < 0) {
        av_log(NULL, AV_LOG_ERROR,
                "Could not initialize the abuffer filter.\n");
        goto fail;
    }

    /* Create the aformat filter;
     * it ensures that the output is of the format we want. */
    aformat = avfilter_get_by_name("aformat");
    if (!aformat) {
        av_log(NULL, AV_LOG_ERROR, "Could not find the aformat filter.\n");
        err = AVERROR_FILTER_NOT_FOUND;
        goto fail;
    }
    aformat_ctx = avfilter_graph_alloc_filter(filter_graph, aformat, "aformat");
    if (!aformat_ctx) {
        av_log(NULL, AV_LOG_ERROR,
                "Could not allocate the aformat instance.\n");
        err = AVERROR(ENOMEM);
        goto fail;
    }

    /* A third way of passing the options is in a string of the form
     * key1=value1:key2=value2.... 
     * The channel layout is a 64-bit mask, so it is printed with %llx. */
    snprintf(options_str, sizeof(options_str),
            "sample_fmts=%s:sample_rates=%d:channel_layouts=0x%llx",
            av_get_sample_fmt_name(AV_SAMPLE_FMT_S16), audio_filter_src.freq,
            (unsigned long long) audio_filter_src.channel_layout);
    err = avfilter_init_str(aformat_ctx, options_str);
    if (err < 0) {
        av_log(NULL, AV_LOG_ERROR,
                "Could not initialize the aformat filter.\n");
        goto fail;
    }

    /* Finally create the abuffersink filter;
     * it will be used to get the filtered data out of the graph. */
    abuffersink = avfilter_get_by_name("abuffersink");
    if (!abuffersink) {
        av_log(NULL, AV_LOG_ERROR, "Could not find the abuffersink filter.\n");
        err = AVERROR_FILTER_NOT_FOUND;
        goto fail;
    }
    abuffersink_ctx = avfilter_graph_alloc_filter(filter_graph, abuffersink,
            "sink");
    if (!abuffersink_ctx) {
        av_log(NULL, AV_LOG_ERROR,
                "Could not allocate the abuffersink instance.\n");
        err = AVERROR(ENOMEM);
        goto fail;
    }

    /* This filter takes no options. */
    err = avfilter_init_str(abuffersink_ctx, NULL);
    if (err < 0) {
        av_log(NULL, AV_LOG_ERROR,
                "Could not initialize the abuffersink instance.\n");
        goto fail;
    }

    /* Connect the filters;
     * in this simple case the filters just form a linear chain. */
    err = avfilter_link(abuffer_ctx, 0, aformat_ctx, 0);
    if (err >= 0) {
        err = avfilter_link(aformat_ctx, 0, abuffersink_ctx, 0);
    }
    if (err < 0) {
        av_log(NULL, AV_LOG_ERROR, "Error connecting filters\n");
        goto fail;
    }

    /* Configure the graph. */
    err = avfilter_graph_config(filter_graph, NULL);
    if (err < 0) {
        av_log(NULL, AV_LOG_ERROR, "Error configuring the filter graph\n");
        goto fail;
    }

    *graph = filter_graph;
    *src = abuffer_ctx;
    *sink = abuffersink_ctx;
    return 0;

fail:
    /* Frees the graph together with every filter allocated in it. */
    avfilter_graph_free(&filter_graph);
    return err;
}

// Returns channel_layout if it is set and matches the channel count,
// otherwise 0.
static inline int64_t get_valid_channel_layout(int64_t channel_layout,
        int channels) {
    if (channel_layout
            && av_get_channel_layout_nb_channels(channel_layout) == channels) {
        return channel_layout;
    } else {
        return 0;
    }
}
```

```cpp
// Decodes one packet into one frame (not multi-frame: any data left in the
// packet after the first frame is discarded).
// Returns the size in bytes of the decoded PCM copied into audio_buf (not the
// number of packet bytes consumed), or a negative value on error.
int audio_decode_frame(uint8_t *audio_buf, int buf_size) {
    static AVPacket pkt;
    static int audio_pkt_size = 0;
    static int reconfigure = 1;
    int len1, data_size;
    int got_frame;
    AVFrame *frame = NULL;
    int ret = -1;

    for (;;) {
        while (audio_pkt_size > 0) {
            if (NULL == frame) {
                frame = av_frame_alloc();
                if (NULL == frame) {
                    return AVERROR(ENOMEM);
                }
            }
            got_frame = 0;

            // len1 is the number of packet bytes consumed by the decoder
            len1 = avcodec_decode_audio4(global_context.acodec_ctx, frame,
                    &got_frame, &pkt);
            if (len1 < 0) {
                // decoding failed: drop this packet and fetch a new one
                char errbuf[64];
                av_strerror(len1, errbuf, sizeof(errbuf));
                LOGV2("avcodec_decode_audio4 ret < 0, %s", errbuf);
                audio_pkt_size = 0;
                break;
            }
            if (!got_frame) {
                // no frame produced from this packet: fetch the next one
                audio_pkt_size = 0;
                break;
            }

            if (reconfigure) {
                int64_t dec_channel_layout = get_valid_channel_layout(
                        frame->channel_layout,
                        av_frame_get_channels(frame));
                if (!dec_channel_layout) {
                    // added safeguard: the decoder reported no usable layout,
                    // so fall back to the default layout for this channel count
                    dec_channel_layout = av_get_default_channel_layout(
                            av_frame_get_channels(frame));
                }
                // used by init_filter_graph()
                audio_filter_src.fmt = (enum AVSampleFormat) frame->format;
                audio_filter_src.channels = av_frame_get_channels(frame);
                audio_filter_src.channel_layout = dec_channel_layout;
                audio_filter_src.freq = frame->sample_rate;
                ret = init_filter_graph(&agraph, &in_audio_filter,
                        &out_audio_filter);
                if (ret < 0) {
                    audio_pkt_size = 0;
                    av_free_packet(&pkt);
                    av_frame_free(&frame);
                    return ret;
                }
                reconfigure = 0;
            }

            // push the decoded frame into the graph ...
            if ((ret = av_buffersrc_add_frame(in_audio_filter, frame)) < 0) {
                av_log(NULL, AV_LOG_ERROR,
                        "av_buffersrc_add_frame : failure. \n");
                audio_pkt_size = 0;
                av_free_packet(&pkt);
                av_frame_free(&frame);
                return ret;
            }
            // ... and pull the converted (S16) frame back out
            if ((ret = av_buffersink_get_frame(out_audio_filter, frame)) < 0) {
                av_log(NULL, AV_LOG_ERROR,
                        "av_buffersink_get_frame : failure. \n");
                audio_pkt_size = 0;  // no output yet: try the next packet
                break;
            }

            data_size = av_samples_get_buffer_size(NULL, frame->channels,
                    frame->nb_samples, (enum AVSampleFormat) frame->format,
                    1);
            if (data_size < 0 || data_size > buf_size) {
                av_log(NULL, AV_LOG_ERROR,
                        "decoded frame does not fit into audio_buf. \n");
                audio_pkt_size = 0;
                break;
            }

            // copy the decoded data to the audio buffer
            memcpy(audio_buf, frame->data[0], data_size);

            /* int n = 2 * global_context.acodec_ctx->channels;
               audio_clock += (double) data_size
                       / (double) (n * global_context.acodec_ctx->sample_rate); // add bytes offset */

            // the packet is released here, so mark it as fully consumed
            audio_pkt_size = 0;
            av_free_packet(&pkt);
            av_frame_free(&frame);
            return data_size;
        }

        av_free_packet(&pkt);
        av_frame_free(&frame);

        // get a new packet
        if (packet_queue_get(&global_context.audio_queue, &pkt) < 0) {
            return -1;
        }
        //LOGV2("pkt.size is %d", pkt.size);
        audio_pkt_size = pkt.size;
    }
}
```
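To make the format conversion more concrete, here is a small sketch of two values involved: the `key1=value1:key2=value2` option string handed to the aformat filter, and the byte size of a packed S16 frame. Both function names are hypothetical; the sample format name `s16` and the stereo layout mask `0x3` used in the usage note are the FFmpeg values for AV_SAMPLE_FMT_S16 and AV_CH_LAYOUT_STEREO.

```cpp
#include <cstdint>
#include <cstdio>
#include <string>

// Hypothetical helper: builds an aformat option string. The channel layout is
// a 64-bit mask, so it is printed with %llx rather than %x.
std::string buildAformatOptions(const char* sample_fmt_name, int sample_rate,
                                std::uint64_t channel_layout) {
    char buf[128];
    std::snprintf(buf, sizeof(buf),
                  "sample_fmts=%s:sample_rates=%d:channel_layouts=0x%llx",
                  sample_fmt_name, sample_rate,
                  static_cast<unsigned long long>(channel_layout));
    return std::string(buf);
}

// Hypothetical helper: size in bytes of a packed S16 frame with no padding,
// i.e. two bytes per sample per channel.
int s16FrameBytes(int nb_samples, int channels) {
    return nb_samples * channels * 2;
}
```

For 44.1 kHz stereo input, `buildAformatOptions("s16", 44100, 0x3)` yields `sample_fmts=s16:sample_rates=44100:channel_layouts=0x3`, and a frame of 1024 samples occupies `s16FrameBytes(1024, 2)`, that is 4096 bytes.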

Source code on GitHub

The complete source is available at:

https://github.com/ericbars/FFmpegOpenSLES