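Installing Hadoop 3.2.1 on macOS

Installing Hadoop

Hadoop can be set up in three modes. This article installs it on a single machine; strictly speaking, the configuration used below (HDFS and YARN daemons running on localhost) is the pseudo-distributed mode.

Standalone mode: suitable for development and debugging;

Pseudo-distributed mode: suitable for simulating a cluster while learning;

Fully distributed mode: the mode used in production.

Installation steps:

Set the hostname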

(base) localhost:~ XXX$ sudo scutil --set HostName localhost

Password:

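Passwordless SSH login (below I simply pressed Enter at every prompt; because I had already done this installation yesterday, ssh-keygen asked whether to overwrite the existing key, and I answered y)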

(1)ssh-keygen -t rsa

(2)cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

(3)chmod og-wx ~/.ssh/authorized_keys

(base) localhost:~ XXX$ ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/Users/XXX/.ssh/id_rsa):

/Users/XXX/.ssh/id_rsa already exists.

Overwrite (y/n)? y

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /Users/XXX/.ssh/id_rsa.

Your public key has been saved in /Users/XXX/.ssh/id_rsa.pub.

The key fingerprint is:

SHA256:p4uOVZyfifSQ1TSupLTfmiifAoahU2Xck5xQnIISlS8 XXX@localhost

The key's randomart image is:

+---[RSA 2048]----+

|.o.+.*.+ o |

|. o = O + . |

| . + . ... o o |

| E . o B . |

| o + S o |

|o . o o O + |

| . . ... o * . |

| oo. + o |

| ...o+= o |

+----[SHA256]-----+

(base) localhost:~ XXX$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

(base) localhost:~ XXX$ chmod og-wx ~/.ssh/authorized_keys

Then restart the terminal and check whether login is passwordless (as shown below, no password was requested)

Last login: Thu Jan 9 11:09:53 on ttys000

(base) localhost:~ XXX$ ssh localhost

Last login: Thu Jan 9 11:29:29 2020
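Rerunning the three commands above regenerates the key (hence the Overwrite prompt) and appends a second copy of it to authorized_keys. A minimal sketch of a rerunnable variant follows. The function name setup_local_key and its directory argument are hypothetical, and it assumes OpenSSH's ssh-keygen is on the PATH.

```shell
# Hypothetical helper: create an RSA key only if none exists, then
# authorize it for logins to this machine without duplicating entries.
setup_local_key() {
  dir="${1:-$HOME/.ssh}"
  mkdir -p "$dir" && chmod 700 "$dir"
  # -N "" sets an empty passphrase, -f names the key file, -q is quiet
  [ -f "$dir/id_rsa" ] || ssh-keygen -t rsa -N "" -f "$dir/id_rsa" -q
  touch "$dir/authorized_keys"
  # Append the public key only when that exact line is not present yet
  grep -qxF -e "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys" ||
    cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  chmod 600 "$dir/authorized_keys"
}
```

After calling setup_local_key with no argument, ssh -o BatchMode=yes localhost true is one way to confirm that no password prompt is needed, since BatchMode makes ssh fail instead of asking. On macOS, ssh localhost also requires Remote Login to be switched on under System Preferences > Sharing.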

Install Hadoop

(base) localhost:~ XXX$ brew install hadoop

Because I had already installed it once, this time I ran brew reinstall hadoop instead

/usr/local/Cellar/hadoop/3.2.1: 22,397 files, 815.7MB, built in 4 minutes 51 seconds

This output means the installation succeeded.

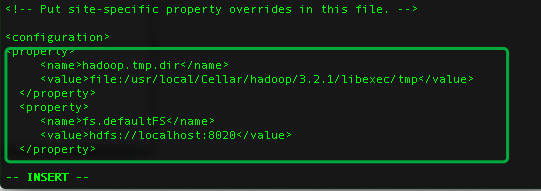

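Edit the configuration files

(Added while reading the book 《hadoop基础教程》: fs.defaultFS holds the location of the NameNode, which both the HDFS and MapReduce components need. That is why it appears in core-site.xml rather than in hdfs-site.xml.)

/usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/core-site.xml

(Since we will be storing data in Hadoop and all components run on the local host, that data has to live somewhere on the local file system. Whichever mode is chosen, Hadoop uses the hadoop.tmp.dir property as its base directory by default, and all files and data are written under it.)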

(base) localhost:~ XXX$ vim /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/core-site.xml

hadoop.tmp.dir

file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp

fs.defaultFS

hdfs://localhost:8020

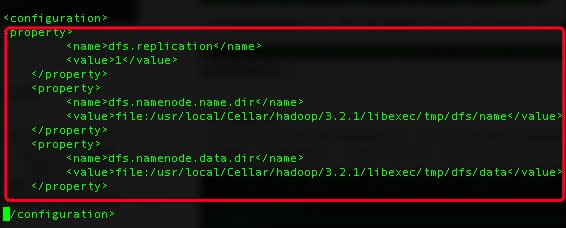
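In core-site.xml each name/value pair above sits inside a property element. The sketch below writes such a file with a shell heredoc. CONF_DIR is a hypothetical variable that defaults to a scratch directory so that no real configuration is overwritten, and the values are copied from the article. Hadoop's own default for hadoop.tmp.dir is a plain path (/tmp/hadoop-${user.name}), so a value without the file: prefix may be the safer form; the article's value is kept here unchanged.

```shell
# Assumption: CONF_DIR is a scratch directory unless set by the caller.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# Quoted 'EOF' keeps the shell from expanding anything inside the XML.
cat > "$CONF_DIR/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:8020</value>
  </property>
</configuration>
EOF
echo "wrote $CONF_DIR/core-site.xml"
```

Once the generated file looks right, it can be copied over /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/core-site.xml.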

Edit the configuration file /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/hdfs-site.xml

(base) localhost:~ XXX$ vim /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/hdfs-site.xml

dfs.replication specifies how many copies of each HDFS block are kept

dfs.replication

1

dfs.namenode.name.dir

file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp/dfs/name

dfs.datanode.data.dir

file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp/dfs/data
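The hdfs-site.xml settings can be sketched the same way. CONF_DIR is again a hypothetical scratch-directory variable. The DataNode storage property is written here as dfs.datanode.data.dir, which is the name listed in Hadoop's hdfs-default.xml; the paths and the replication factor are the ones given above.

```shell
# Assumption: CONF_DIR is a scratch directory unless set by the caller.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

cat > "$CONF_DIR/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- One copy per block is enough when there is a single DataNode -->
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/local/Cellar/hadoop/3.2.1/libexec/tmp/dfs/data</value>
  </property>
</configuration>
EOF
echo "wrote $CONF_DIR/hdfs-site.xml"
```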

Configure the Hadoop environment variables

(base) localhost:~ XXX$ vi ~/.bash_profile

#add

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_201.jdk/Contents/Home

export HADOOP_HOME=/usr/local/Cellar/hadoop/3.2.1/libexec

export HADOOP_ROOT_LOGGER=INFO,console

export PATH=$PATH:${HADOOP_HOME}/bin

#These settings do not take effect immediately; run source ~/.bash_profile first
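Editing the profile by hand on every reinstall tends to leave duplicate export lines behind. A small sketch of adding each line only once is shown below. The helper append_once and the PROFILE variable are hypothetical; PROFILE defaults to a temporary file here and would be set to ~/.bash_profile for real use.

```shell
# Hypothetical helper: append a line to a file only if it is not there yet.
append_once() {
  line="$1"; file="$2"
  touch "$file"
  grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Assumption: a temporary file stands in for ~/.bash_profile.
PROFILE="${PROFILE:-$(mktemp)}"

# Single quotes keep $PATH and ${HADOOP_HOME} literal in the profile.
append_once 'export HADOOP_HOME=/usr/local/Cellar/hadoop/3.2.1/libexec' "$PROFILE"
append_once 'export HADOOP_ROOT_LOGGER=INFO,console' "$PROFILE"
append_once 'export PATH=$PATH:${HADOOP_HOME}/bin' "$PROFILE"
```

On macOS, /usr/libexec/java_home -v 1.8 prints the home directory of an installed JDK 8, which can be used instead of hard-coding the JAVA_HOME path.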

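Initialize (format the NameNode)

First change to the bin directory: cd /usr/local/Cellar/hadoop/3.2.1/bin

(base) localhost:bin XXX$ ./hdfs namenode -format

Start Hadoop

Change to the sbin directory: cd /usr/local/Cellar/hadoop/3.2.1/sbin

(base) localhost:sbin XXX$ ./start-dfs.sh

Check whether it started

#Note: use start-dfs.sh or start-all.sh to start Hadoop; to stop HDFS, run ./stop-dfs.sh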

(base) localhost:sbin XXX$ jps

17490 Jps

17174 NameNode

11695 SecondaryNameNode
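Reading jps output by eye makes it easy to miss a daemon that failed to start. start-dfs.sh normally brings up a DataNode alongside the NameNode and SecondaryNameNode, and the listing above does not show one. The sketch below reports which expected HDFS daemons are absent; the function name missing_daemons is hypothetical.

```shell
# Hypothetical helper: read `jps` output on stdin and print every
# expected HDFS daemon that does not appear in it.
missing_daemons() {
  # Second column of each jps line is the main class name
  seen=$(awk '{print $2}')
  for d in NameNode DataNode SecondaryNameNode; do
    # -x matches whole lines, so NameNode does not match SecondaryNameNode
    printf '%s\n' "$seen" | grep -qx "$d" || echo "$d"
  done
}
```

Typical use is jps | missing_daemons. Fed the three lines shown above, it prints DataNode. If a daemon is reported missing, its log file under $HADOOP_HOME/logs is the usual place to look for the reason.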

View the NameNode web UI

http://localhost:9870/dfshealth.html#tab-overview

Next, start YARN

Edit the configuration file /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/mapred-site.xml

mapreduce.framework.name

yarn

Edit the configuration file

/usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/yarn-site.xml

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.env-whitelist

JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME
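The YARN-related settings above go into two files, mapred-site.xml and yarn-site.xml. The sketch below writes both; CONF_DIR is a hypothetical scratch-directory variable, and the property names and values are the ones listed above.

```shell
# Assumption: CONF_DIR is a scratch directory unless set by the caller.
CONF_DIR="${CONF_DIR:-$(mktemp -d)}"

# Tell MapReduce to submit jobs to YARN instead of running them locally
cat > "$CONF_DIR/mapred-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
EOF

# Enable the shuffle service and pass the listed variables to containers
cat > "$CONF_DIR/yarn-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>
EOF
echo "wrote $CONF_DIR/mapred-site.xml and $CONF_DIR/yarn-site.xml"
```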

启动yarn

备注:

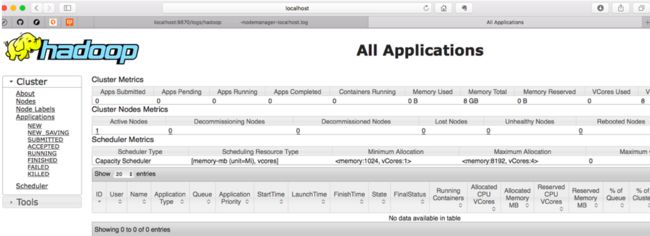

#启动ResourceManager 和 NodeManager,启动成功后可以访问http://localhost:8088/cluster

#停止 sbin/stop-yarn.sh可以通过start-all.sh 来同时启动start-dfs.sh和start-yarn.sh

(base) localhost:sbin XXX$ ./start-yarn.sh

Starting resourcemanager

Starting nodemanagers

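Then a problem appeared: the page http://localhost:8088/cluster would not load.

It kept reporting the error above. As a Java novice I had no idea what the cause was. After a long search I found advice to switch JDKs, and after going from JDK 12 to JDK 8 it worked. Exhausting.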
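The Hadoop project's Java version notes list Java 8 as the supported runtime for the 3.0 to 3.2 release lines, which fits the fix described above. A quick check before starting the daemons can save the search; the sketch below extracts the major version from a Java version string. The function name java_major is hypothetical.

```shell
# Hypothetical helper: reduce a Java version string to its major number.
# Old-style strings look like 1.8.0_201, new-style ones like 12.0.1.
java_major() {
  v="$1"
  case "$v" in
    1.*) v="${v#1.}"; echo "${v%%.*}" ;;   # 1.8.0_201 -> 8
    *)   echo "${v%%[.-]*}" ;;             # 12.0.1 -> 12
  esac
}
```

The version string itself can be read with java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}', and the result passed to java_major; anything other than 8 is worth fixing before running start-yarn.sh on Hadoop 3.2.1.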

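Getting to see this page was not easy.

After two days of fiddling I finally ran wordcount from start to finish. I still do not understand how it works internally, but I am pleased all the same; it took a lot of trial and error.

Next problem: where did the file I created go? It turned out it had been uploaded to HDFS.

Recapping the workflow:

#Create a directory (the command printed nothing useful, and no input folder appeared in the local directory tree, so I searched for the warning line below and even ended up downloading glibc-2.17.tar.gz. I took plenty of detours. The place to look is actually http://localhost:9870/explorer.html#/.)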

(base) localhost:bin XXX$ hadoop fs -mkdir /input

2020-01-10 16:08:37,847 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

#So I created it again, and was told it already exists

(base) localhost:bin XXX$ hadoop fs -mkdir /input

2020-01-10 16:14:56,171 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

mkdir: `/input': File exists

#List everything under the HDFS root directory

(base) localhost:bin XXX$ hadoop fs -ls /

2020-01-10 16:15:20,438 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

drwxr-xr-x - XXX supergroup 0 2020-01-10 16:08 /input

drwxr-xr-x - XXX supergroup 0 2020-01-10 15:42 /wordcount

#Upload a file

(base) localhost:3.2.1 XXX$ hadoop fs -put LICENSE.txt /input

2020-01-10 16:19:22,783 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-01-10 16:19:24,046 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

#List the uploaded file

(base) localhost:3.2.1 XXX$ hadoop fs -ls /input

2020-01-10 16:19:39,254 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

-rw-r--r-- 1 XXX supergroup 150569 2020-01-10 16:19 /input/LICENSE.txt

#Run wordcount to count the words in LICENSE.txt

(base) localhost:~ XXX$ hadoop jar /usr/local/Cellar/hadoop/3.2.1/libexec/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount /input /output

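#Note: the last three arguments mean the following: wordcount is the name of the example program, /input is the input directory, and /output is the output directory

(⚠️ The output directory must not already exist. If an existing directory is given as the output of a Hadoop job, the job will not run. This is presumably a safety mechanism that keeps Hadoop from overwriting useful files.)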
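Because of this rule, rerunning the same job fails until the old output is removed. A minimal sketch of clearing the output path first is shown below, using the hadoop fs -test and hadoop fs -rm subcommands. The function name fresh_output is hypothetical, and deleting the directory is only appropriate when the earlier results are no longer needed.

```shell
# Hypothetical helper: delete an HDFS output directory if it exists,
# so that a job can be rerun with the same output path.
fresh_output() {
  out="$1"
  # `hadoop fs -test -d` exits with 0 when the path is a directory
  if hadoop fs -test -d "$out"; then
    hadoop fs -rm -r "$out"
  fi
}
```

A rerun would then be fresh_output /output followed by the same hadoop jar ... wordcount /input /output command as above.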

#See what is in /output

(base) localhost:~ XXX$ hadoop fs -ls /output

2020-01-10 16:26:48,394 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

-rw-r--r-- 1 XXX supergroup 0 2020-01-10 16:23 /output/_SUCCESS

-rw-r--r-- 1 XXX supergroup 35324 2020-01-10 16:23 /output/part-r-00000

#Show the results in part-r-00000

(base) localhost:~ XXX$ hadoop fs -cat /output/part-r-00000
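The wordcount result file holds one word and its count per line, separated by a tab, in word order rather than by frequency. A small sketch for listing the most frequent words follows; the function name top_words and its optional count argument are hypothetical.

```shell
# Hypothetical helper: read "word<TAB>count" lines on stdin and print
# the n lines with the highest counts (n defaults to 10).
top_words() {
  n="${1:-10}"
  tab=$(printf '\t')
  # Sort numerically, descending, on the second tab-separated field
  sort -t "$tab" -k2,2nr | head -n "$n"
}
```

For example: hadoop fs -cat /output/part-r-00000 | top_words 20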

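That wraps things up for now; time to reflect and summarize. I went from not knowing what any of this was to getting the configuration working. Thanks to the all-powerful internet, and thanks to myself for not giving up.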