Deploying a DeepLab v3+ model with TensorFlow Serving

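Moving a machine learning model from experiment to production simply and quickly has always been a challenge. The task is to expose a trained model as a prediction service. In production, that process needs to be reproducible, isolated, and secure. Here we use Docker-based TensorFlow Serving to do this with little effort. TensorFlow Serving has supported Docker deployment since version 1.8, for both CPU and GPU, which is very convenient.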

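The first step is of course to train a model. Mine is already trained: a semantic segmentation model trained with DeepLab v3+. TensorFlow Serving stores its models in the SavedModel format, a language-neutral, recoverable, hermetic serialization format that lets higher-level systems and tools produce, consume, and transform TensorFlow models.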

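1. Model serialization

The checkpoint files produced by DeepLab v3+ training are not in the format TensorFlow Serving expects, so the model has to be serialized as a SavedModel first.

The serialization code is as follows: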

# encoding=utf-8
import os
import sys

import tensorflow as tf

# Make the DeepLab package (and its slim dependency) importable.
sys.path.append('/data/guoyin/Semantic_Segmentation_Deep/research')
sys.path.append('/data/guoyin/Semantic_Segmentation_Deep/research/slim')

# Importing common also registers shared flags such as model_variant,
# image_pyramid, min_resize_value, max_resize_value and resize_factor.
from deeplab import common
from deeplab import input_preprocess
from deeplab import model

# Run the export on the second GPU only.
os.environ['CUDA_VISIBLE_DEVICES'] = '1'

slim = tf.contrib.slim
flags = tf.app.flags
FLAGS = flags.FLAGS

flags.DEFINE_string('checkpoint_path', None, 'Checkpoint path')

flags.DEFINE_string('export_path', None,
                    'Base directory for the exported SavedModel.')

flags.DEFINE_integer('model_version', 1, 'Model version number.')

flags.DEFINE_integer('num_classes', 2, 'Number of classes.')

flags.DEFINE_multi_integer('crop_size', [800, 608],
                           'Crop size [height, width].')

# For `xception_65`, use atrous_rates = [12, 24, 36] if output_stride = 8, or
# rates = [6, 12, 18] if output_stride = 16. For `mobilenet_v2`, use None. Note
# one could use different atrous_rates/output_stride during training/evaluation.
flags.DEFINE_multi_integer('atrous_rates', None,
                           'Atrous rates for atrous spatial pyramid pooling.')

flags.DEFINE_integer('output_stride', 8,
                     'The ratio of input to output spatial resolution.')

# Change to [0.5, 0.75, 1.0, 1.25, 1.5, 1.75] for multi-scale inference.
flags.DEFINE_multi_float('inference_scales', [1.0],
                         'The scales to resize images for inference.')

flags.DEFINE_bool('add_flipped_images', False,
                  'Add flipped images during inference or not.')

def _create_input_tensors():
    """Creates and prepares input tensors for DeepLab model.

    This method creates a 4-D uint8 image tensor 'ImageTensor' with shape
    [1, None, None, 3]. The actual input tensor name to use during inference is
    'ImageTensor:0'.

    Note: main() below does not call this helper.

    Returns:
      image: Preprocessed 4-D float32 tensor with shape [1, crop_height,
        crop_width, 3].
      original_image_size: Original image shape tensor [height, width].
      resized_image_size: Resized image shape tensor [height, width].
    """
    # input_preprocess takes 4-D image tensor as input.
    input_image = tf.placeholder(tf.uint8, [1, None, None, 3], name='ImageTensor')
    original_image_size = tf.shape(input_image)[1:3]

    # Squeeze the dimension in axis=0 since `preprocess_image_and_label` assumes
    # image to be 3-D.
    image = tf.squeeze(input_image, axis=0)
    resized_image, image, _ = input_preprocess.preprocess_image_and_label(
        image,
        label=None,
        crop_height=FLAGS.crop_size[0],
        crop_width=FLAGS.crop_size[1],
        min_resize_value=FLAGS.min_resize_value,
        max_resize_value=FLAGS.max_resize_value,
        resize_factor=FLAGS.resize_factor,
        is_training=False,
        model_variant=FLAGS.model_variant)
    resized_image_size = tf.shape(resized_image)[:2]

    # Expand the dimension in axis=0, since the following operations assume the
    # image to be 4-D.
    image = tf.expand_dims(image, 0)

    return image, original_image_size, resized_image_size

def main(unused_argv):
    tf.logging.set_verbosity(tf.logging.INFO)
    tf.logging.info('Prepare to export model to: %s', FLAGS.export_path)

    with tf.Session(graph=tf.Graph()) as sess:
        model_options = common.ModelOptions(
            outputs_to_num_classes={common.OUTPUT_TYPE: FLAGS.num_classes},
            crop_size=FLAGS.crop_size,
            atrous_rates=FLAGS.atrous_rates,
            output_stride=FLAGS.output_stride)

        # Placeholder for receiving the serialized input image.
        serialized_tf_example = tf.placeholder(tf.string, name='tf_example')
        feature_configs = {'x': tf.FixedLenFeature(shape=[], dtype=tf.float32)}
        tf_example = tf.parse_example(serialized_tf_example, feature_configs)

        # Reshape the input image to its original dimensions
        # (batch, height, width, channels); must agree with crop_size.
        tf_example['x'] = tf.reshape(tf_example['x'], (1, 800, 608, 3))
        # Use tf.identity() to assign a name. The signature below exposes this
        # tensor as its input, so clients send a float32 image tensor directly.
        input_tensor = tf.identity(tf_example['x'], name='x')

        if tuple(FLAGS.inference_scales) == (1.0,):
            tf.logging.info('Exported model performs single-scale inference.')
            predictions = model.predict_labels(
                input_tensor,
                model_options=model_options,
                image_pyramid=FLAGS.image_pyramid)
        else:
            tf.logging.info('Exported model performs multi-scale inference.')
            predictions = model.predict_labels_multi_scale(
                input_tensor,
                model_options=model_options,
                eval_scales=FLAGS.inference_scales,
                add_flipped_images=FLAGS.add_flipped_images)

        semantic_predictions = predictions[common.OUTPUT_TYPE]

        # Restore the model weights from the latest checkpoint.
        saver = tf.train.Saver()
        module_file = tf.train.latest_checkpoint(FLAGS.checkpoint_path)
        saver.restore(sess, module_file)

        # Write the SavedModel to <export_path>/<model_version>.
        builder = tf.saved_model.builder.SavedModelBuilder(
            os.path.join(FLAGS.export_path, str(FLAGS.model_version)))

        tensor_info_x = tf.saved_model.utils.build_tensor_info(input_tensor)
        tensor_info_y = tf.saved_model.utils.build_tensor_info(semantic_predictions)

        # Clients address the model through this signature name and these keys.
        signature_def_map = {
            "predict_image": tf.saved_model.signature_def_utils.build_signature_def(
                inputs={"image": tensor_info_x},
                outputs={"seg": tensor_info_y},
                method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME
            )
        }

        builder.add_meta_graph_and_variables(
            sess,
            [tf.saved_model.tag_constants.SERVING],
            signature_def_map=signature_def_map)
        builder.save()


if __name__ == '__main__':
    flags.mark_flag_as_required('checkpoint_path')
    flags.mark_flag_as_required('export_path')
    tf.app.run()
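The script writes its output to export_path/model_version, and TensorFlow Serving looks for numeric version subdirectories under the model's base directory. As a quick sanity check before starting the server, a minimal sketch like the following lists the version directories that look like complete exports. The helper name list_servable_versions is hypothetical and not part of the original code; it relies only on the usual SavedModel layout (a saved_model.pb file plus a variables/ directory).

```python
import os


def list_servable_versions(base_dir):
    """Return the numeric version subdirectories of base_dir that contain
    a saved_model.pb file and a variables/ directory, sorted ascending."""
    versions = []
    for name in os.listdir(base_dir):
        version_dir = os.path.join(base_dir, name)
        # TensorFlow Serving only considers directories with numeric names.
        if not (name.isdigit() and os.path.isdir(version_dir)):
            continue
        has_graph = os.path.isfile(os.path.join(version_dir, 'saved_model.pb'))
        has_vars = os.path.isdir(os.path.join(version_dir, 'variables'))
        if has_graph and has_vars:
            versions.append(int(name))
    return sorted(versions)
```

Calling list_servable_versions on the directory passed as --export_path should return [1] after a first export with the default model_version.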

序列化模型配置文件

--logtostderr

\

--model_variant="xception_65"

\

--atrous_rates=6

\

--atrous_rates=12

\

--atrous_rates=18

\

--output_stride=16

\

--decoder_output_stride=4

\

--crop_size=800

\

--crop_size=608

\

--num_classes=2

\

--inference_scales=1.0

\

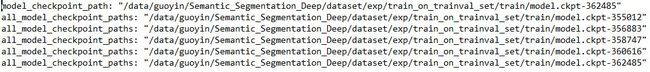

--checkpoint_path=/data/guoyin/Semantic_Segmentation_Deep/dataset/exp/train_on_trainval_set/train/

\

--export_path=/data/guoyin/Semantic_Segmentation_Deep/dataset/exp/train_on_trainval_set/tf_serving_model/

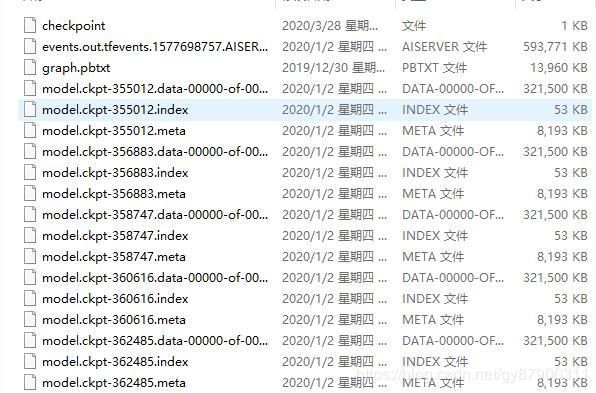

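There is a pitfall here: the model paths recorded inside the checkpoint file need to be changed. tf.train.latest_checkpoint reads that file to locate the newest checkpoint, so stale paths in it make the restore fail.

Be sure to set crop_size correctly, otherwise the model output will be wrong. During training crop_size was 801 and 609, because each dimension had to leave a remainder of 1 when divided by 16. For export the extra 1 is not needed, so 800 and 608 are used.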
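The arithmetic behind the two crop sizes can be checked in a few lines. This is only an illustrative sketch; the function name crop_remainders is made up for the example, and 16 is the output_stride from the arguments above.

```python
def crop_remainders(height, width, output_stride=16):
    """Return the remainder of each crop dimension divided by output_stride."""
    return {'height': height % output_stride, 'width': width % output_stride}


# Training crop size: each dimension is a multiple of 16 plus 1.
print(crop_remainders(801, 609))  # {'height': 1, 'width': 1}

# Export crop size: each dimension is an exact multiple of 16.
print(crop_remainders(800, 608))  # {'height': 0, 'width': 0}
```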

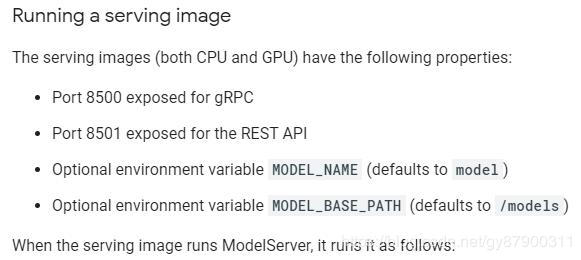

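2. Deploying the model with Docker

docker run --runtime=nvidia -p 8501:8501 -p 8500:8500 \
  --mount type=bind,source=/root/serving_model/serving/tensorflow_serving/servables/tensorflow/testdata/deeplab_two,target=/models/deeplab_two \
  -e CUDA_VISIBLE_DEVICES=0 -e MODEL_NAME=deeplab_two \
  -t tensorflow/serving:latest-gpu --per_process_gpu_memory_fraction=0.4 &

--per_process_gpu_memory_fraction=0.4 must go at the very end, after the image name, not in the middle. Everything before the image name is an option for docker run itself, while arguments after it are passed on to the model server inside the container.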
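To make the ordering rule harder to get wrong, the command can be assembled programmatically. The following is a minimal sketch under the assumption that the command is launched from Python; the function build_docker_command and its parameters are hypothetical, and only the docker options already shown above are used.

```python
def build_docker_command(model_name, model_dir,
                         image='tensorflow/serving:latest-gpu',
                         server_args=()):
    """Build a `docker run` argument list for TensorFlow Serving.

    Options for docker itself come before the image name; server_args are
    appended after it so they reach the model server in the container.
    """
    docker_options = [
        '--runtime=nvidia',
        '-p', '8501:8501',
        '-p', '8500:8500',
        '--mount',
        'type=bind,source={},target=/models/{}'.format(model_dir, model_name),
        '-e', 'CUDA_VISIBLE_DEVICES=0',
        '-e', 'MODEL_NAME={}'.format(model_name),
        '-t',
    ]
    return ['docker', 'run'] + docker_options + [image] + list(server_args)


cmd = build_docker_command(
    'deeplab_two',
    '/root/serving_model/serving/tensorflow_serving/servables/tensorflow/testdata/deeplab_two',
    server_args=['--per_process_gpu_memory_fraction=0.4'])
print(' '.join(cmd))
```

The resulting list can be handed to subprocess.run(cmd) if launching the container from Python is desired.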

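When deploying, make sure both port 8500 (gRPC) and port 8501 (REST) are published with -p. If only one is listed, only that port is opened and the other cannot be reached.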

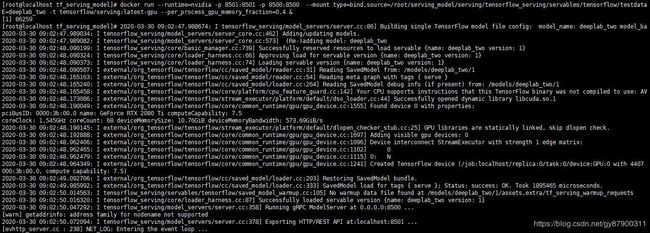

The model has started successfully.

Once the ports are open, the service is ready to handle requests.
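One way to confirm that the server is up is to query the model status endpoint of TensorFlow Serving's REST API on port 8501. The sketch below is not from the original post; the function names are made up, and localhost with the default port mapping from the command above is assumed.

```python
import json
import urllib.request


def model_status_url(model_name, host='localhost', rest_port=8501):
    """Return the REST URL that reports the status of a served model."""
    return 'http://{}:{}/v1/models/{}'.format(host, rest_port, model_name)


def fetch_model_status(model_name, host='localhost', rest_port=8501, timeout=5):
    """Query the model status endpoint and return the parsed JSON reply."""
    url = model_status_url(model_name, host, rest_port)
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode('utf-8'))
```

Running fetch_model_status('deeplab_two') on the Docker host should return a dictionary describing each loaded version; a version that is ready to serve is expected to report the state AVAILABLE.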

3. Requesting the service from a client

# encoding=utf-8
import sys
import time
import warnings

import matplotlib.pyplot as plt
import numpy as np
import tensorflow as tf
from grpc.beta import implementations
from PIL import Image
from tensorflow_serving.apis import predict_pb2
from tensorflow_serving.apis import prediction_service_pb2

warnings.filterwarnings("always")

# gRPC endpoint published by the container.
server = "localhost:8500"
host, port = server.split(':')

file = '/data/guoyin/Semantic_Segmentation_Deep/dataset/exp/train_on_trainval_set/infer_image/jxxyrb2019122502.jpg'
# The image must contain exactly 800 x 608 x 3 values (height x width x RGB).
im = np.array(Image.open(file))

channel = implementations.insecure_channel(host, int(port))
stub = prediction_service_pb2.beta_create_PredictionService_stub(channel)

request = predict_pb2.PredictRequest()
request.model_spec.name = "deeplab_two"  # MODEL_NAME given to the container
request.model_spec.signature_name = 'predict_image'  # key from the export script

start = time.perf_counter()
request.inputs["image"].CopyFrom(
    tf.contrib.util.make_tensor_proto(im.astype(dtype=np.float32), shape=[1, 800, 608, 3]))
response = stub.Predict(request, 30)  # 30-second timeout

# The labels come back as a flat int64 list; restore the image layout.
output = np.array(response.outputs['seg'].int64_val)
mask = np.reshape(output, (800, 608))
end = time.perf_counter()
print("==>TIME: ", end - start)

# Left: the input image. Right: the image with the mask overlaid.
plt.figure(figsize=(14, 10))
plt.subplot(1, 2, 1)
plt.imshow(im, 'gray', interpolation='none')
plt.subplot(1, 2, 2)
plt.imshow(im, 'gray', interpolation='none')
plt.imshow(mask, 'jet', interpolation='none', alpha=0.7)
plt.show()

A successful call produces output like the following.

Segmenting one image took about 0.55 seconds: ==>TIME: 0.5489408209687099
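Because the container also publishes the REST port 8501, the same predict_image signature can in principle be reached over HTTP instead of gRPC. The sketch below, which is not part of the original post, builds a request body in the columnar "inputs" format of TensorFlow Serving's REST API. The function names are hypothetical, and the JSON body for a full 800 x 608 image is large, so the gRPC client above is likely the more efficient choice.

```python
import json


def predict_url(model_name, host='localhost', rest_port=8501):
    """Return the REST predict URL for a served model."""
    return 'http://{}:{}/v1/models/{}:predict'.format(host, rest_port, model_name)


def build_predict_payload(image_rows, signature_name='predict_image',
                          input_key='image'):
    """Serialize one image as a JSON predict request.

    image_rows is a nested list shaped [height][width][3] of floats. A batch
    dimension of size 1 is added, matching the exported input shape
    [1, height, width, 3].
    """
    return json.dumps({
        'signature_name': signature_name,
        'inputs': {input_key: [image_rows]},
    })
```

With the NumPy image from the client above, the body would be build_predict_payload(im.astype(np.float32).tolist()), sent as an HTTP POST to predict_url('deeplab_two'); the predictions are expected under the "outputs" key of the JSON reply.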

4. Writing the result to an XML file

Just add the following code to the client code from step 3:

# Make the author's CreateXML module importable.
sys.path.append('/data/guoyin/Semantic_Segmentation_Deep_Four/dataset/exp/train_on_trainval_set')
from CreateXML import SaveXML

# Write the segmentation mask to an XML file.
mask = mask.astype(np.uint8)
save_xml_path = '/data/guoyin/Semantic_Segmentation_Deep/dataset/exp/train_on_trainval_set/save_xml/'
filepath = file.split('/')[-1]  # file name of the input image
SaveXML(mask, filepath, save_xml_path)

References:

https://blog.csdn.net/weixin_34343000/article/details/88118667

https://www.jianshu.com/p/675a31e135c1

https://github.com/kilimi/tensorflow_deeplabv3-_serving