Zookeeper——4、使用Curator操作Zookeeper

为了更好的实现Java操作zookeeper服务器,后来出现了Curator框架,非常的强大,目前已经是Apache的顶级项目,里面提供了更多丰富的操作,例如session超时重连、主从选举、分布式计数器、分布式锁等等适用于各种复杂的zookeeper场景的API封装。(zookeeper文章所需的jar包)

Curator所需的maven依赖:

org.apache.curator

curator-framework

3.2.1

org.apache.curator

curator-recipes

3.2.1

org.apache.curator

curator-client

3.2.1

1.使用CuratorFrameworkFactory的两个静态工厂方法(参数不同)来创建zookeeper客户端对象。

参数1:connectString,zookeeper服务器地址及端口号,多个zookeeper服务器地址以“,”分隔。

参数2:sessionTimeoutMs,会话超时时间,单位毫秒,默认为60000ms。

参数3:connectionTimeoutMs,连接超时时间,单位毫秒,默认为15000ms。

参数4:retryPolicy,重试连接策略,有四种实现,分别为:ExponentialBackoffRetry(重试指定的次数, 且每一次重试之间停顿的时间逐渐增加)、RetryNtimes(指定最大重试次数的重试策略)、RetryOneTimes(仅重试一次)、RetryUntilElapsed(一直重试直到达到规定的时间)

Curator的Helloworld入门:

public class CuratorHelloworld {

private static final String CONNECT_ADDR = "192.168.1.102:2181,192.168.1.104:2181,192.168.1.105:2181";

private static final int SESSION_TIMEOUT = 5000;

public static void main(String[] args) throws Exception {

//重试策略,初试时间1秒,重试10次

RetryPolicy policy = new ExponentialBackoffRetry(1000, 10);

//通过工厂创建Curator

CuratorFramework curator = CuratorFrameworkFactory.builder().connectString(CONNECT_ADDR)

.sessionTimeoutMs(SESSION_TIMEOUT).retryPolicy(policy).build();

//开启连接

curator.start();

ExecutorService executor = Executors.newCachedThreadPool();

/**创建节点,creatingParentsIfNeeded()方法的意思是如果父节点不存在,则在创建节点的同时创建父节点;

* withMode()方法指定创建的节点类型,跟原生的Zookeeper API一样,不设置默认为PERSISTENT类型。

* */

curator.create().creatingParentsIfNeeded().withMode(CreateMode.PERSISTENT)

.inBackground((framework, event) -> { //添加回调

System.out.println("Code:" + event.getResultCode());

System.out.println("Type:" + event.getType());

System.out.println("Path:" + event.getPath());

}, executor).forPath("/super/c1", "c1内容".getBytes());

Thread.sleep(5000); //为了能够看到回调信息

String data = new String(curator.getData().forPath("/super/c1")); //获取节点数据

System.out.println(data);

Stat stat = curator.checkExists().forPath("/super/c1"); //判断指定节点是否存在

System.out.println(stat);

curator.setData().forPath("/super/c1", "c1新内容".getBytes()); //更新节点数据

data = new String(curator.getData().forPath("/super/c1"));

System.out.println(data);

List children = curator.getChildren().forPath("/super"); //获取子节点

for(String child : children) {

System.out.println(child);

}

//放心的删除节点,deletingChildrenIfNeeded()方法表示如果存在子节点的话,同时删除子节点

curator.delete().guaranteed().deletingChildrenIfNeeded().forPath("/super");

curator.close();

}

} delete删除节点方法可选的链式项:deletingChildrenIfNeeded(是否同时删除子节点)、guaranteed(安全删除)、withVersion(版本检查)、forPath(删除的节点路径)

inBackground绑定异步回调方法。比如在创建节点时绑定一个回调方法,该回调方法可以输出服务器的状态码以及服务器的事件类型等信息,还可以加入一个线程池进行优化操作。

2.Curator的监听

1)NodeCache:监听节点的新增、修改操作。

public class CuratorWatcher1 {

private static final String CONNECT_ADDR = "192.168.1.102:2181,192.168.1.104:2181,192.168.1.105:2181";

private static final int SESSION_TIMEOUT = 5000;

public static void main(String[] args) throws Exception {

RetryPolicy policy = new ExponentialBackoffRetry(1000, 10);

CuratorFramework curator = CuratorFrameworkFactory.builder().connectString(CONNECT_ADDR)

.sessionTimeoutMs(SESSION_TIMEOUT).retryPolicy(policy).build();

curator.start();

//最后一个参数表示是否进行压缩

NodeCache cache = new NodeCache(curator, "/super", false);

cache.start(true);

//只会监听节点的创建和修改,删除不会监听

cache.getListenable().addListener(() -> {

System.out.println("路径:" + cache.getCurrentData().getPath());

System.out.println("数据:" + new String(cache.getCurrentData().getData()));

System.out.println("状态:" + cache.getCurrentData().getStat());

});

curator.create().forPath("/super", "1234".getBytes());

Thread.sleep(1000);

curator.setData().forPath("/super", "5678".getBytes());

Thread.sleep(1000);

curator.delete().forPath("/super");

Thread.sleep(5000);

curator.close();

}

}2)PathChildrenCache:监听子节点的新增、修改、删除操作。

public class CuratorWatcher2 {

private static final String CONNECT_ADDR = "192.168.1.102:2181,192.168.1.104:2181,192.168.1.105:2181";

private static final int SESSION_TIMEOUT = 5000;

public static void main(String[] args) throws Exception {

RetryPolicy policy = new ExponentialBackoffRetry(1000, 10);

CuratorFramework curator = CuratorFrameworkFactory.builder().connectString(CONNECT_ADDR)

.sessionTimeoutMs(SESSION_TIMEOUT).retryPolicy(policy).build();

curator.start();

//第三个参数表示是否接收节点数据内容

PathChildrenCache childrenCache = new PathChildrenCache(curator, "/super", true);

/**

* 如果不填写这个参数,则无法监听到子节点的数据更新

如果参数为PathChildrenCache.StartMode.BUILD_INITIAL_CACHE,则会预先创建之前指定的/super节点

如果参数为PathChildrenCache.StartMode.POST_INITIALIZED_EVENT,效果与BUILD_INITIAL_CACHE相同,只是不会预先创建/super节点

参数为PathChildrenCache.StartMode.NORMAL时,与不填写参数是同样的效果,不会监听子节点的数据更新操作

*/

childrenCache.start(PathChildrenCache.StartMode.POST_INITIALIZED_EVENT);

childrenCache.getListenable().addListener((framework, event) -> {

switch (event.getType()) {

case CHILD_ADDED:

System.out.println("CHILD_ADDED,类型:" + event.getType() + ",路径:" + event.getData().getPath() + ",数据:" +

new String(event.getData().getData()) + ",状态:" + event.getData().getStat());

break;

case CHILD_UPDATED:

System.out.println("CHILD_UPDATED,类型:" + event.getType() + ",路径:" + event.getData().getPath() + ",数据:" +

new String(event.getData().getData()) + ",状态:" + event.getData().getStat());

break;

case CHILD_REMOVED:

System.out.println("CHILD_REMOVED,类型:" + event.getType() + ",路径:" + event.getData().getPath() + ",数据:" +

new String(event.getData().getData()) + ",状态:" + event.getData().getStat());

break;

default:

break;

}

});

curator.create().forPath("/super", "123".getBytes());

curator.create().creatingParentsIfNeeded().withMode(CreateMode.PERSISTENT).forPath("/super/c1", "c1内容".getBytes());

//经测试,不会监听到本节点的数据变更,只会监听到指定节点下子节点数据的变更

curator.setData().forPath("/super", "456".getBytes());

curator.setData().forPath("/super/c1", "c1新内容".getBytes());

curator.delete().guaranteed().deletingChildrenIfNeeded().forPath("/super");

Thread.sleep(5000);

curator.close();

}

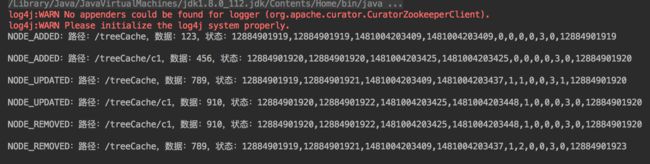

}3)TreeCache:既可以监听节点的状态,又可以监听子节点的状态。类似于上面两种Cache的组合。

public class CuratorWatcher3 {

private static final String CONNECT_ADDR = "192.168.3.58:2181,192.168.3.59:2181,192.168.3.66:2181";

private static final int SESSION_TIMEOUT = 5000;

public static void main(String[] args) throws Exception {

RetryPolicy policy = new ExponentialBackoffRetry(1000, 10);

CuratorFramework curator = CuratorFrameworkFactory.builder().connectString(CONNECT_ADDR).sessionTimeoutMs(SESSION_TIMEOUT)

.retryPolicy(policy).build();

curator.start();

TreeCache treeCache = new TreeCache(curator, "/treeCache");

treeCache.start();

treeCache.getListenable().addListener((curatorFramework, treeCacheEvent) -> {

switch (treeCacheEvent.getType()) {

case NODE_ADDED:

System.out.println("NODE_ADDED:路径:" + treeCacheEvent.getData().getPath() + ",数据:" + new String(treeCacheEvent.getData().getData())

+ ",状态:" + treeCacheEvent.getData().getStat());

break;

case NODE_UPDATED:

System.out.println("NODE_UPDATED:路径:" + treeCacheEvent.getData().getPath() + ",数据:" + new String(treeCacheEvent.getData().getData())

+ ",状态:" + treeCacheEvent.getData().getStat());

break;

case NODE_REMOVED:

System.out.println("NODE_REMOVED:路径:" + treeCacheEvent.getData().getPath() + ",数据:" + new String(treeCacheEvent.getData().getData())

+ ",状态:" + treeCacheEvent.getData().getStat());

break;

default:

break;

}

});

curator.create().forPath("/treeCache", "123".getBytes());

curator.create().creatingParentsIfNeeded().withMode(CreateMode.PERSISTENT).forPath("/treeCache/c1", "456".getBytes());

curator.setData().forPath("/treeCache", "789".getBytes());

curator.setData().forPath("/treeCache/c1", "910".getBytes());

curator.delete().forPath("/treeCache/c1");

curator.delete().forPath("/treeCache");

Thread.sleep(5000);

curator.close();

}

}

PS:Curator 2.4.2的jar包没有TreeCache,我升级到了3.2.1的版本。但是在运行时报java.lang.NoSuchMethodError:org.apache.zookeeper.server.quorum.flexible.QuorumMaj.

3.Curator应用场景

①分布式锁(该部分来自跟着实例学习ZooKeeper的用法: 分布式锁)

在分布式场景中,为了保证数据的一致性,经常在程序运行的某一个点需要进行同步操作(Java中提供了Synchronized和ReentrantLock实现)。我们使用Curator基于Zookeeper的特性提供的分布式锁来处理分布式场景的数据一致性。

可重入锁:InterProcessMutex(CuratorFramework client, String path)

通过acquire()获得锁,并提供超时机制;通过release()释放锁。makeRevocable(RevocationListener

首先创建一个模拟的公共资源,这个资源期望只能单线程的访问,否则会有并发问题。

public class FakeLimitedResource {

private final AtomicBoolean inUse = new AtomicBoolean(false);

public void use() throws Exception {

//这个例子在使用锁的情况下不会抛出非法并发异常IllegalStateException

//但是在无锁的情况下,由于sleep了一段时间,所以很容易抛出异常

if(!inUse.compareAndSet(false, true)) {

throw new IllegalStateException("Needs to be used by one client at a time");

}

try {

Thread.sleep((long) (3 * Math.random()));

} finally {

inUse.set(false);

}

}

}public class ExampleClientThatLocks {

private final InterProcessMutex lock;

//private final InterProcessSemaphoreMutex lock;

private final FakeLimitedResource resource;

private final String clientName;

public ExampleClientThatLocks(CuratorFramework framework, String path, FakeLimitedResource resource, String clientName) {

this.lock = new InterProcessMutex(framework, path);

//this.lock = new InterProcessSemaphoreMutex(framework, path);

this.resource = resource;

this.clientName = clientName;

}

public void doWork(long time, TimeUnit timeUnit) throws Exception {

if(!lock.acquire(time, timeUnit)) {

throw new IllegalStateException(clientName + " could not acquire the lock!");

}

System.out.println(clientName + " has the lock");

/*if(!lock.acquire(time, timeUnit)) {

throw new IllegalStateException(clientName + " could not acquire the lock!");

}

System.out.println(clientName + " has the lock");*/

try {

resource.use();

} finally {

System.out.println(clientName + " releasing the lock");

lock.release();

//lock.release();

}

}

}最后创建主程序来测试。

public class InterProcessMutexExample {

private static final int QTY = 5;

private static final int REPETITIONS = QTY * 10;

private static final String PATH = "/examples/locks";

private static final String CONNECT_ADDR = "192.168.3.58:2181,192.168.3.59:2181,192.168.3.66:2181";

public static void main(String[] args) throws Exception {

final FakeLimitedResource resource = new FakeLimitedResource();

ExecutorService executor = Executors.newFixedThreadPool(QTY);

try {

for(int i=0; i task = () -> {

CuratorFramework curator = CuratorFrameworkFactory.newClient(CONNECT_ADDR, new RetryNTimes(3, 1000));

curator.start();

try {

final ExampleClientThatLocks example = new ExampleClientThatLocks(curator, PATH, resource, "Client " + index);

for(int j=0; j 不可重入锁:InterProcessSemaphoreMutex

这个锁和可重入锁相比,就是少了Reentrant功能,也就意味着不能在同一个线程中重入,使用方法和上面的类似。将ExampleClientThatLocks修改成如下:

public class ExampleClientThatLocks {

//private final InterProcessMutex lock;

private final InterProcessSemaphoreMutex lock;

private final FakeLimitedResource resource;

private final String clientName;

public ExampleClientThatLocks(CuratorFramework framework, String path, FakeLimitedResource resource, String clientName) {

//this.lock = new InterProcessMutex(framework, path);

this.lock = new InterProcessSemaphoreMutex(framework, path);

this.resource = resource;

this.clientName = clientName;

}

public void doWork(long time, TimeUnit timeUnit) throws Exception {

if(!lock.acquire(time, timeUnit)) {

throw new IllegalStateException(clientName + " could not acquire the lock!");

}

System.out.println(clientName + " has the lock");

if(!lock.acquire(time, timeUnit)) {

throw new IllegalStateException(clientName + " could not acquire the lock!");

}

System.out.println(clientName + " has the lock");

try {

resource.use();

} finally {

System.out.println(clientName + " releasing the lock");

lock.release();

lock.release();

}

}

}注意我们也需要调用release两次。这和JDK的ReentrantLock用法一致。如果少调用一次release,则此线程依然拥有锁。 上面的代码没有问题,我们可以多次调用acquire,后续的acquire也不会阻塞。 将上面的InterProcessMutex换成不可重入锁InterProcessSemaphoreMutex,如果再运行上面的代码,结果就会发现线程被阻塞再第二个acquire上。 也就是此锁不是可重入的。

public class ExampleClientReadWriteLocks {

private final InterProcessReadWriteLock readWriteLock;

private final InterProcessMutex readLock;

private final InterProcessMutex writeLock;

private final FakeLimitedResource resource;

private final String clientName;

public ExampleClientReadWriteLocks(CuratorFramework client, String path, FakeLimitedResource resource, String clientName) {

this.readWriteLock = new InterProcessReadWriteLock(client, path);

this.readLock = readWriteLock.readLock();

this.writeLock = readWriteLock.writeLock();

this.resource = resource;

this.clientName = clientName;

}

public void doWork(long time, TimeUnit unit) throws Exception {

if(!writeLock.acquire(time, unit)) {

throw new IllegalStateException(clientName + " could not acquire the writeLock!");

}

System.out.println(clientName + " has the writeLock");

if(!readLock.acquire(time, unit)) {

throw new IllegalStateException(clientName + " could not acquire the readLock!");

}

System.out.println(clientName + " has the readLock");

try {

resource.use();

} finally {

readLock.release();

writeLock.release();

}

}

}这次调用acquire会返回一个租约对象。 客户端必须在finally中close这些租约对象,否则这些租约会丢失掉。 但是, 但是,如果客户端session由于某种原因比如crash丢掉, 那么这些客户端持有的租约会自动close, 这样其它客户端可以继续使用这些租约。 租约还可以通过下面的方式返还:

public void returnAll(Collection leases)

public void returnLease(Lease lease) public Lease acquire()

public Collection acquire(int qty)

public Lease acquire(long time, TimeUnit unit)

public Collection acquire(int qty, long time, TimeUnit unit) public class InterProcessSemaphoreExample {

private static final int MAX_LEASE = 10;

private static final String PATH = "/examples/locks";

public static void main(String[] args) throws Exception {

FakeLimitedResource resource = new FakeLimitedResource();

try (TestingServer server = new TestingServer()) {

CuratorFramework client = CuratorFrameworkFactory.newClient(server.getConnectString(), new ExponentialBackoffRetry(1000, 3));

client.start();

InterProcessSemaphoreV2 semaphore = new InterProcessSemaphoreV2(client, PATH, MAX_LEASE);

Collection leases = semaphore.acquire(5);

System.out.println("get " + leases.size() + " leases");

Lease lease = semaphore.acquire();

System.out.println("get another lease");

resource.use();

Collection leases2 = semaphore.acquire(5, 10, TimeUnit.SECONDS);

System.out.println("Should timeout and acquire return " + leases2);

System.out.println("return one lease");

semaphore.returnLease(lease);

System.out.println("return another 5 leases");

semaphore.returnAll(leases);

}

}

} public class InterProcessMultiLockExample {

private static final String PATH1 = "/examples/locks1";

private static final String PATH2 = "/examples/locks2";

public static void main(String[] args) throws Exception {

FakeLimitedResource resource = new FakeLimitedResource();

try (TestingServer server = new TestingServer()) {

CuratorFramework client = CuratorFrameworkFactory.newClient(server.getConnectString(), new ExponentialBackoffRetry(1000, 3));

client.start();

InterProcessLock lock1 = new InterProcessMutex(client, PATH1);

InterProcessLock lock2 = new InterProcessSemaphoreMutex(client, PATH2);

InterProcessMultiLock lock = new InterProcessMultiLock(Arrays.asList(lock1, lock2));

if (!lock.acquire(10, TimeUnit.SECONDS)) {

throw new IllegalStateException("could not acquire the lock");

}

System.out.println("has the lock");

System.out.println("has the lock1: " + lock1.isAcquiredInThisProcess());

System.out.println("has the lock2: " + lock2.isAcquiredInThisProcess());

try {

resource.use(); //access resource exclusively

} finally {

System.out.println("releasing the lock");

lock.release(); // always release the lock in a finally block

}

System.out.println("has the lock1: " + lock1.isAcquiredInThisProcess());

System.out.println("has the lock2: " + lock2.isAcquiredInThisProcess());

}

}

}一说到分布式计数器,你可能马上想到AtomicInteger这种经典的方式。如果是在同一个JVM下肯定没有问题,但是在分布式场景下,肯定会存在问题。所以就需要使用Curator框架的DistributedAtomicInteger了。

public class CuratorDistributedAtomicInteger {

private static final String CONNECT_ADDR = "192.168.1.102:2181,192.168.1.104:2181,192.168.1.105:2181";

private static final int SESSION_TIMEOUT = 5000;

public static void main(String[] args) throws Exception {

//重试策略,初试时间1秒,重试10次

RetryPolicy policy = new ExponentialBackoffRetry(1000, 10);

//通过工厂创建Curator

CuratorFramework curator = CuratorFrameworkFactory.builder().connectString(CONNECT_ADDR)

.sessionTimeoutMs(SESSION_TIMEOUT).retryPolicy(policy).build();

//开启连接

curator.start();

DistributedAtomicInteger atomicInteger = new DistributedAtomicInteger(curator, "/super", new RetryNTimes(3, 1000));

AtomicValue value = atomicInteger.add(1);

System.out.println(value.succeeded());

System.out.println(value.preValue()); //新值

System.out.println(value.postValue()); //旧值

curator.close();

}

} ③Barrier

分布式Barrier是这样一个类:它会阻塞所有节点上的等待进程,知道某一个被满足,然后所有的节点继续执行。比如赛马比赛中,等赛马陆续来到起跑线前,一声令下,所有的赛马都飞奔而出。

DistributedBarrier类实现了栏栅的功能,构造方法如下:

public DistributedBarrier(CuratorFramework client, String barrierPath)接下来看例子:

public class DistributedBarrierExample {

private static final String CONNECT_ADDR = "192.168.1.102:2181,192.168.1.104:2181,192.168.1.105:2181";

private static final int SESSION_TIMEOUT = 5000;

public static void main(String[] args) throws Exception {

CuratorFramework curator = CuratorFrameworkFactory.newClient(CONNECT_ADDR, new RetryNTimes(3, 1000));

curator.start();

ExecutorService executor = Executors.newFixedThreadPool(5);

DistributedBarrier controlBarrier = new DistributedBarrier(curator, "/example/barrier");

controlBarrier.setBarrier();

for(int i=0; i<5; i++) {

final DistributedBarrier barrier = new DistributedBarrier(curator, "/example/barrier");

final int index = i;

Callable task = () -> {

Thread.sleep((long) (3 * Math.random()));

System.out.println("Client#" + index + " wait on Barrier");

barrier.waitOnBarrier();

System.out.println("Client#" + index + " begins");

return null;

};

executor.submit(task);

}

Thread.sleep(5000);

controlBarrier.removeBarrier();

Thread.sleep(5000);

executor.shutdown();

curator.close();

}

} public DistributedDoubleBarrier(CuratorFramework client, String barrierPath, int memberQty)例子代码:

public class DistributedDoubleBarrierExample {

private static final String CONNECT_ADDR = "192.168.1.102:2181,192.168.1.104:2181,192.168.1.105:2181";

public static void main(String[] args) throws InterruptedException {

CuratorFramework curator = CuratorFrameworkFactory.newClient(CONNECT_ADDR, new RetryNTimes(3, 1000));

curator.start();

ExecutorService executor = Executors.newFixedThreadPool(5);

for(int i=0; i<5; i++) {

final DistributedDoubleBarrier barrier = new DistributedDoubleBarrier(curator, "/example/barrier", 5);

final int index = i;

Callable task = () -> {

Thread.sleep((long) (3000 * Math.random()));

System.out.println("Client#" + index + " enter");

barrier.enter();

System.out.println("Client#" + index + "begin");

Thread.sleep((long) (3000 * Math.random()));

barrier.leave();

System.out.println("Client#" + index + "left");

return null;

};

executor.submit(task);

}

executor.shutdown();;

executor.awaitTermination(10, TimeUnit.MINUTES);

curator.close();

}

}