$ vim /data/hadoop/etc/hadoop/yarn-site.xml

yarn.acl.enable

true

yarn.admin.acl

*

yarn.log-aggregation-enable

true

yarn.log-aggregation.retain-seconds

259200

yarn.resourcemanager.cluster-id

hadoop-test

yarn.resourcemanager.connect.retry-interval.ms

2000

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.resourcemanager.ha.enabled

true

yarn.resourcemanager.ha.automatic-failover.embedded

true

yarn.resourcemanager.ha.rm-ids

rm1,rm2

ha.zookeeper.quorum

192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181

yarn.resourcemanager.ha.automatic-failover.enabled

true

yarn.resourcemanager.hostname.rm1

192.168.233.65

yarn.resourcemanager.hostname.rm2

192.168.233.94

yarn.resourcemanager.zk-address

192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181

yarn.resourcemanager.recovery.enabled

true

yarn.resourcemanager.zk-state-store.address

192.168.233.17:2181,192.168.233.238:2181,192.168.233.157:2181

yarn.resourcemanager.store.class

org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore

yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms

5000

yarn.resourcemanager.address.rm1

192.168.233.65:8132

yarn.resourcemanager.scheduler.address.rm1

192.168.233.65:8130

yarn.resourcemanager.webapp.address.rm1

192.168.233.65:8188

yarn.resourcemanager.resource-tracker.address.rm1

192.168.233.65:8131

yarn.resourcemanager.admin.address.rm1

192.168.233.65:8033

yarn.resourcemanager.ha.admin.address.rm1

192.168.233.65:23142

yarn.resourcemanager.address.rm2

192.168.233.94:8132

yarn.resourcemanager.scheduler.address.rm2

192.168.233.94:8130

yarn.resourcemanager.webapp.address.rm2

192.168.233.94:8188

yarn.resourcemanager.resource-tracker.address.rm2

192.168.233.94:8131

yarn.resourcemanager.admin.address.rm2

192.168.233.94:8033

yarn.resourcemanager.ha.admin.address.rm2

192.168.233.94:23142

yarn.scheduler.fair.preemption

true

Enables resource preemption (the Fair Scheduler default is false).

yarn.scheduler.fair.user-as-default-queue

true

When a job names no queue, the submitting user's name is used as the queue name (defaults to true).

yarn.scheduler.fair.allow-undeclared-pools

false

yarn.scheduler.minimum-allocation-mb

512

yarn.scheduler.maximum-allocation-mb

4096

yarn.scheduler.minimum-allocation-vcores

1

yarn.scheduler.maximum-allocation-vcores

4

yarn.scheduler.increment-allocation-vcores

1

yarn.scheduler.increment-allocation-mb

512

yarn.resourcemanager.am.max-attempts

2

yarn.resourcemanager.container.liveness-monitor.interval-ms

600000

yarn.resourcemanager.nm.liveness-monitor.interval-ms

1000

yarn.nm.liveness-monitor.expiry-interval-ms

600000

yarn.resourcemanager.resource-tracker.client.thread-count

50

yarn.nodemanager.resource.memory-mb

6000

Memory on each node available to containers, in MB.

yarn.nodemanager.resource.cpu-vcores

2

yarn.nodemanager.pmem-check-enabled

false

yarn.nodemanager.vmem-check-enabled

false

yarn.resourcemanager.scheduler.class

org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler

yarn.resourcemanager.max-completed-applications

10000

yarn.client.failover-proxy-provider

org.apache.hadoop.yarn.client.ConfiguredRMFailoverProxyProvider

yarn.resourcemanager.ha.automatic-failover.zk-base-path

/yarn-leader-election

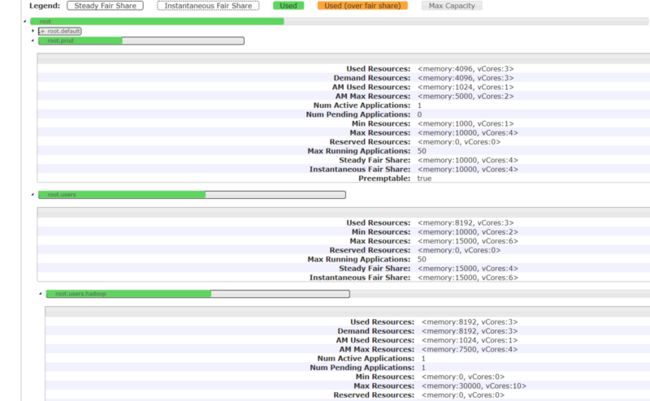
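In RM HA mode every per-RM setting carries one of the IDs listed in yarn.resourcemanager.ha.rm-ids (here rm1 and rm2) as a suffix, so a key missing for one RM, or a property defined twice, is easy to overlook in a file this long. The following is a minimal Python sketch, not part of Hadoop, that parses a yarn-site.xml string with the standard library, reports property names that appear more than once, and lists per-RM keys missing for any RM ID. The helper name check_rm_ha, the sample XML and the choice of which suffixed keys to require are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Per-RM keys this sketch expects for every ID in yarn.resourcemanager.ha.rm-ids.
# The list mirrors the suffixed properties configured above; it is an
# illustrative choice, not an official Hadoop requirement list.
PER_RM_KEYS = [
    "yarn.resourcemanager.hostname",
    "yarn.resourcemanager.address",
    "yarn.resourcemanager.scheduler.address",
    "yarn.resourcemanager.webapp.address",
    "yarn.resourcemanager.resource-tracker.address",
    "yarn.resourcemanager.admin.address",
]

# Small hypothetical excerpt: one duplicated property, rm1 mostly unconfigured.
SAMPLE_YARN_SITE = """<configuration>
  <property><name>yarn.resourcemanager.ha.rm-ids</name><value>rm1,rm2</value></property>
  <property><name>yarn.log-aggregation-enable</name><value>true</value></property>
  <property><name>yarn.log-aggregation-enable</name><value>true</value></property>
  <property><name>yarn.resourcemanager.hostname.rm1</name><value>192.168.233.65</value></property>
</configuration>"""


def check_rm_ha(xml_text):
    """Hypothetical helper: return (duplicate property names, missing per-RM keys)."""
    props = [
        (p.findtext("name", "").strip(), p.findtext("value", "").strip())
        for p in ET.fromstring(xml_text).iter("property")
    ]
    counts = Counter(name for name, _ in props)
    duplicates = sorted(name for name, n in counts.items() if n > 1)

    values = dict(props)  # for repeated names, the last value wins in this dict
    rm_ids = [
        r.strip()
        for r in values.get("yarn.resourcemanager.ha.rm-ids", "").split(",")
        if r.strip()
    ]
    missing = [
        f"{key}.{rm_id}"
        for rm_id in rm_ids
        for key in PER_RM_KEYS
        if f"{key}.{rm_id}" not in values
    ]
    return duplicates, missing


if __name__ == "__main__":
    dups, missing = check_rm_ha(SAMPLE_YARN_SITE)
    print("duplicates:", dups)
    print("missing:", missing)
```

Once both ResourceManagers are running, yarn rmadmin -getServiceState rm1 (and rm2) shows which one is active and which is standby.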

$ vim /data/hadoop/etc/hadoop/fair-scheduler.xml

$ cat /data/hadoop/etc/hadoop/fair-scheduler.xml

30

5120mb,5vcores

29000mb,10vcores

100

1.0

DRF

10000mb,2vcores

15000mb,6vcores

50

3

fair

hadoop,hdfs

hadoop

1000mb,1vcores

2000mb,2vcores

50

3

fair

hadoop

hadoop

1000mb,1vcores

10000mb,4vcores

50

3

fair

hadoop,hdfs

hadoop

20000mb,16vcores
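The DRF value in the queue definitions above selects Dominant Resource Fairness: a queue's usage is judged by whichever resource, memory or vcores, it consumes the largest fraction of. The sketch below parses resource strings in the "Nmb,Mvcores" form used in this fair-scheduler.xml and computes dominant shares. The cluster capacity (three NodeManagers at 6000 MB and 2 vcores each, matching yarn.nodemanager.resource.* above) and the per-queue usage figures are hypothetical, and the real Fair Scheduler also applies weights and minimum shares, which this sketch ignores.

```python
import re

# Matches Fair Scheduler resource strings such as "5120mb,5vcores";
# whitespace around the numbers and units is tolerated.
_RESOURCE_RE = re.compile(r"^\s*(\d+)\s*mb\s*,\s*(\d+)\s*vcores\s*$", re.IGNORECASE)


def parse_resources(text):
    """Parse "<N>mb,<M>vcores" into (memory_mb, vcores)."""
    match = _RESOURCE_RE.match(text)
    if match is None:
        raise ValueError(f"unrecognised resource string: {text!r}")
    return int(match.group(1)), int(match.group(2))


def dominant_share(usage, capacity):
    """Largest fraction of any single resource that `usage` takes from `capacity`."""
    (mem, cores), (total_mem, total_cores) = usage, capacity
    return max(mem / total_mem, cores / total_cores)


if __name__ == "__main__":
    # Hypothetical cluster: 3 NodeManagers, each 6000 MB and 2 vcores.
    capacity = (3 * 6000, 3 * 2)
    # Hypothetical current usage per queue.
    usage = {"prod": "2048mb,2vcores", "root.users.hadoop": "9000mb,1vcores"}
    shares = {q: dominant_share(parse_resources(r), capacity) for q, r in usage.items()}
    for queue, share in shares.items():
        print(f"{queue}: dominant share {share:.3f}")
    # Ignoring weights and min shares, DRF favours the queue with the smaller share.
    print("next container goes to:", min(shares, key=shares.get))
```

Here prod is CPU-dominant (2 of 6 vcores) and root.users.hadoop is memory-dominant (9000 of 18000 MB), so prod has the smaller dominant share even though it holds less memory.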

Testing the prod queue

$ spark-shell --master yarn --queue prod --executor-memory 1000m --total-executor-cores 1

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://hadoop-test-1:4040

Spark context available as 'sc' (master = yarn, app id = application_1592814747219_0002).

Spark session available as 'spark'.

Welcome to

      ____              __

     / __/__  ___ _____/ /__

    _\ \/ _ \/ _ `/ __/  '_/

   /___/ .__/\_,_/_/ /_/\_\   version 2.4.6

      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_231)

Type in expressions to have them evaluated.

Type :help for more information.

scala>

Testing the users parent queue

$ spark-shell --master yarn --queue root.users.hadoop --executor-memory 3000m --total-executor-cores 3

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://hadoop-test-2:4040

Spark context available as 'sc' (master = yarn, app id = application_1592814747219_0003).

Spark session available as 'spark'.

Welcome to

      ____              __

     / __/__  ___ _____/ /__

    _\ \/ _ \/ _ `/ __/  '_/

   /___/ .__/\_,_/_/ /_/\_\   version 2.4.6

      /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_231)

Type in expressions to have them evaluated.

Type :help for more information.

scala>
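The two sessions request 1000 MB and 3000 MB executors. On YARN, Spark 2.4 adds an overhead to each executor request (spark.executor.memoryOverhead, by default the larger of 10% of executor memory and 384 MB). The scheduler then rounds the total up to a multiple of yarn.scheduler.increment-allocation-mb (512 here), never below yarn.scheduler.minimum-allocation-mb, and rejects requests above yarn.scheduler.maximum-allocation-mb (4096). The Python sketch below reproduces that arithmetic under those assumptions. It is a simplified model, not Spark or YARN code, and it assumes a node must have both enough free memory and enough free vcores for each container. Note also that --total-executor-cores is documented for Spark standalone and Mesos only; on YARN the executor count and cores come from --num-executors and --executor-cores, which default to 2 executors with 1 core each in Spark 2.4.

```python
# Simplified model of how a Spark-on-YARN executor request becomes a container.
# Limits come from the yarn-site.xml above; the overhead rule is Spark 2.4's
# default for spark.executor.memoryOverhead. This is not Spark or YARN code.

MIN_ALLOC_MB = 512      # yarn.scheduler.minimum-allocation-mb
INCREMENT_MB = 512      # yarn.scheduler.increment-allocation-mb
MAX_ALLOC_MB = 4096     # yarn.scheduler.maximum-allocation-mb
NODE_MEMORY_MB = 6000   # yarn.nodemanager.resource.memory-mb
NODE_VCORES = 2         # yarn.nodemanager.resource.cpu-vcores


def spark_overhead_mb(executor_mb):
    """Default executor memory overhead: max(10% of executor memory, 384 MB)."""
    return max(int(executor_mb * 0.10), 384)


def container_mb(executor_mb):
    """Memory granted to one executor container; raises if over the maximum."""
    requested = executor_mb + spark_overhead_mb(executor_mb)
    if requested > MAX_ALLOC_MB:
        raise ValueError(f"{requested} MB requested, above the {MAX_ALLOC_MB} MB maximum")
    requested = max(requested, MIN_ALLOC_MB)
    # Round up to the next multiple of the increment, never above the maximum.
    return min(-(-requested // INCREMENT_MB) * INCREMENT_MB, MAX_ALLOC_MB)


def executors_per_node(executor_mb, executor_cores=1):
    """How many such executors fit on one NodeManager, limited by memory and vcores."""
    return min(NODE_MEMORY_MB // container_mb(executor_mb), NODE_VCORES // executor_cores)


if __name__ == "__main__":
    for mb in (1000, 3000):
        print(f"{mb}m executor -> {container_mb(mb)} MB container, "
              f"{executors_per_node(mb)} per node")
```

Under this model a 1000m executor occupies a 1536 MB container and a 3000m executor a 3584 MB one. With only 2 vcores per NodeManager, the vcore limit, not memory, caps the smaller executors at two per node.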