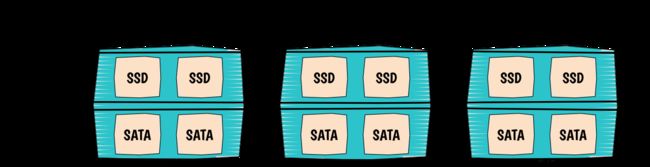

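Each server has two disks: one SSD (performance disk) and one SATA disk (capacity disk). There are three identical servers, and each one runs the control, compute and storage roles together (a converged deployment).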

/dev/sdb ssd

/dev/sdc sata

Configure the `globals.yml` file

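```yaml
# Build cache-tier OSDs from disks labelled KOLLA_CEPH_OSD_CACHE_BOOTSTRAP
ceph_enable_cache: "yes"
enable_ceph: "yes"
enable_cinder: "yes"
# Use Ceph as the Cinder backend whenever Ceph is enabled
cinder_backend_ceph: "{{ enable_ceph }}"
```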
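Before deploying, you can confirm that these four keys are actually set, and not commented out, in the file Kolla reads. The sketch below assumes Kolla's default location `/etc/kolla/globals.yml`. The function name `check_globals` is hypothetical and is not part of kolla-ansible.

```shell
#!/usr/bin/env bash
# check_globals: hypothetical helper, not part of kolla-ansible.
# Verifies that each required setting appears as a whole, uncommented line.
check_globals() {
  local file="${1:-/etc/kolla/globals.yml}"
  local missing=0 line
  for line in \
    'ceph_enable_cache: "yes"' \
    'enable_ceph: "yes"' \
    'enable_cinder: "yes"' \
    'cinder_backend_ceph: "{{ enable_ceph }}"'; do
    # -x: the whole line must match; -F: treat the pattern as a literal string
    if ! grep -qxF "$line" "$file"; then
      echo "missing: $line"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_globals && echo OK` prints `OK` only when all four lines are present.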

Modify the Cinder configuration (all of these changes are made on the deployment node)

```ini
[DEFAULT]
# rbd-1 is the Ceph backend Kolla generates by default; ssd is the new one
enabled_backends = rbd-1,ssd

[ssd]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
# Must match the volume_backend_name extra spec of the SSD volume type
volume_backend_name = ssd
# Pool that the ssd CRUSH rule places on the SSD OSDs
rbd_pool = volumes-cache
rbd_ceph_conf = /etc/ceph/ceph.conf
rbd_flatten_volume_from_snapshot = false
rbd_max_clone_depth = 5
rbd_store_chunk_size = 4
rados_connect_timeout = 5
rbd_user = cinder
# libvirt secret UUID (in Kolla: cinder_rbd_secret_uuid in passwords.yml)
rbd_secret_uuid = b89a2a40-c009-47da-ba5b-7b6414a1f759
report_discard_supported = True
image_upload_use_cinder_backend = True
```
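An alternative to editing Kolla's templates is to let Kolla merge extra INI settings from its custom-config directory. The sketch below assumes the default `node_custom_config` of `/etc/kolla/config` and a per-service override file `cinder/cinder-volume.conf`. The helper name `install_cinder_override` is hypothetical. It only copies a file that holds the `[DEFAULT]` and `[ssd]` sections shown above, and it refuses any file that lacks an `[ssd]` section.

```shell
#!/usr/bin/env bash
# install_cinder_override: hypothetical helper, not part of kolla-ansible.
# Copies an INI fragment to where Kolla is assumed to merge cinder-volume overrides.
install_cinder_override() {
  local fragment="$1"
  local confdir="${2:-/etc/kolla/config/cinder}"
  # Refuse fragments that do not define the [ssd] backend section
  if ! grep -qx '\[ssd\]' "$fragment"; then
    echo "no [ssd] section in $fragment" >&2
    return 1
  fi
  mkdir -p "$confdir"
  cp "$fragment" "$confdir/cinder-volume.conf"
}
```

Because this happens before `kolla-ansible deploy`, the deploy step picks the file up. On a cloud that is already running, `kolla-ansible reconfigure -i /root/multinode --tags cinder` should re-render the Cinder configuration.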

Label the disks

```shell
parted /dev/sdb -s -- mklabel gpt mkpart KOLLA_CEPH_OSD_BOOTSTRAP 1 -1
parted /dev/sdc -s -- mklabel gpt mkpart KOLLA_CEPH_OSD_CACHE_BOOTSTRAP 1 -1
```
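To check the labels before deploying, list the partition labels with `lsblk` and keep only Kolla's OSD bootstrap labels. The filter below is a hypothetical helper. It reads `lsblk -rno NAME,PARTLABEL` output (raw format, no header) on stdin and prints each labelled partition with its label.

```shell
#!/usr/bin/env bash
# kolla_osd_disks: hypothetical helper; reads "NAME PARTLABEL" lines on stdin
# and prints only partitions carrying one of Kolla's OSD bootstrap labels.
kolla_osd_disks() {
  awk '$2 == "KOLLA_CEPH_OSD_BOOTSTRAP" || $2 == "KOLLA_CEPH_OSD_CACHE_BOOTSTRAP" { print $1, $2 }'
}
```

Usage: `lsblk -rno NAME,PARTLABEL | kolla_osd_disks` should list one partition per label on each server.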

Start the deployment

```shell
kolla-ansible deploy -i /root/multinode
```

CRUSH map

Get the CRUSH map

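The commands for this step did not survive in this copy of the post. With the standard Ceph CLI, exporting the compiled CRUSH map usually looks like the sketch below. The file name `crushmap.bin` and the helpers `run` and `get_crushmap` are illustrative assumptions; `run` prints the command instead of executing it when `DRY_RUN=1`. Run the real command wherever a `ceph` client and an admin keyring are available. On a Kolla deployment that is typically inside the Ceph monitor container.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# get_crushmap: hypothetical helper; exports the compiled (binary) CRUSH map
get_crushmap() {
  local dst="${1:-crushmap.bin}"
  run ceph osd getcrushmap -o "$dst"
}
```

`DRY_RUN=1 get_crushmap` prints `ceph osd getcrushmap -o crushmap.bin` without touching the cluster.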

Decompile it; after this the CRUSH map file can be edited as text

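The decompile command is also missing from this copy. `crushtool -d` turns the binary map into editable text. The sketch below assumes the file names `crushmap.bin` and `crushmap.txt`, and the helper names are hypothetical. `run` prints the command instead of executing it when `DRY_RUN=1`.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# decompile_crushmap: hypothetical helper; binary CRUSH map -> editable text
decompile_crushmap() {
  local src="${1:-crushmap.bin}" dst="${2:-crushmap.txt}"
  run crushtool -d "$src" -o "$dst"
}
```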

Modify the CRUSH map

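Write the CRUSH map to match your actual hardware. In this example the capacity OSDs (osd.0 to osd.2) stay under the `default` root. Each SSD OSD (osd.3 to osd.5) gets its own `<host>-ssd` host bucket under a separate `ssd` root. Rule `ssd_releset` (ruleset 2) takes from the `ssd` root, so pools that use it are placed only on the SSDs.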

```text
# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable straw_calc_version 1

# devices
device 0 osd.0
device 1 osd.1
device 2 osd.2
device 3 osd.3
device 4 osd.4
device 5 osd.5

# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 region
type 10 root

# buckets
host 1.1.1.163 {
	id -2		# do not change unnecessarily
	# weight 0.990
	alg straw
	hash 0	# rjenkins1
	item osd.0 weight 0.990
}
host 1.1.1.161 {
	id -3		# do not change unnecessarily
	# weight 0.990
	alg straw
	hash 0	# rjenkins1
	item osd.1 weight 0.990
}
host 1.1.1.162 {
	id -4		# do not change unnecessarily
	# weight 0.990
	alg straw
	hash 0	# rjenkins1
	item osd.2 weight 0.990
}
root default {
	id -1		# do not change unnecessarily
	# weight 2.970
	alg straw
	hash 0	# rjenkins1
	item 1.1.1.163 weight 0.990
	item 1.1.1.161 weight 0.990
	item 1.1.1.162 weight 0.990
}
host 1.1.1.163-ssd {
	id -5		# do not change unnecessarily
	# weight 0.470
	alg straw
	hash 0	# rjenkins1
	item osd.4 weight 0.470
}
host 1.1.1.161-ssd {
	id -6		# do not change unnecessarily
	# weight 0.470
	alg straw
	hash 0	# rjenkins1
	item osd.3 weight 0.470
}
host 1.1.1.162-ssd {
	id -8		# do not change unnecessarily
	# weight 0.470
	alg straw
	hash 0	# rjenkins1
	item osd.5 weight 0.470
}
root ssd {
	id -7		# do not change unnecessarily
	# weight 1.410
	alg straw
	hash 0	# rjenkins1
	item 1.1.1.163-ssd weight 0.470
	item 1.1.1.161-ssd weight 0.470
	item 1.1.1.162-ssd weight 0.470
}

# rules
rule replicated_ruleset {
	ruleset 0
	type replicated
	min_size 1
	max_size 10
	step take default
	step chooseleaf firstn 0 type host
	step emit
}
rule disks {
	ruleset 1
	type replicated
	min_size 1
	max_size 10
	step take default
	step chooseleaf firstn 0 type host
	step emit
}
rule ssd_releset {
	ruleset 2
	type replicated
	min_size 1
	max_size 10
	step take ssd
	step chooseleaf firstn 0 type host
	step emit
}

# end crush map
```
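The three `-ssd` host buckets differ only in host name, bucket id, OSD and weight, so you can generate them instead of typing each one by hand. A minimal sketch follows. It assumes you choose bucket ids that are negative and not already used in the map (as -5, -6 and -8 are above). The function name is hypothetical, and the output copies the layout of crushtool's decompiled text.

```shell
#!/usr/bin/env bash
# ssd_host_bucket: hypothetical helper; prints one CRUSH host bucket in the
# same form as the hand-written "-ssd" buckets (straw algorithm, rjenkins1 hash).
# Arguments: host name, bucket id (negative, unused), OSD name, weight.
ssd_host_bucket() {
  local host="$1" id="$2" osd="$3" weight="$4"
  printf 'host %s-ssd {\n' "$host"
  printf '\tid %s\t\t# do not change unnecessarily\n' "$id"
  printf '\t# weight %s\n' "$weight"
  printf '\talg straw\n'
  printf '\thash 0\t# rjenkins1\n'
  printf '\titem %s weight %s\n' "$osd" "$weight"
  printf '}\n'
}
```

For example, `ssd_host_bucket 1.1.1.163 -5 osd.4 0.470` prints the `1.1.1.163-ssd` bucket from the map above.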

Recompile

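The recompile command is missing here too. A common approach is to compile the edited text with `crushtool -c`, then test the result offline with `crushtool --test` before it goes anywhere near the cluster. The file names and helpers below are assumptions, and `run` prints the command instead of executing it when `DRY_RUN=1`. The test maps inputs 0 to 9 through rule 2, the `ssd` rule in the map above, with 3 replicas.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# compile_crushmap: hypothetical helper. Compiles the edited map, then simulates
# placements for rule 2 offline (nothing in the cluster changes).
compile_crushmap() {
  local src="${1:-crushmap.txt}" dst="${2:-crushmap-new.bin}"
  run crushtool -c "$src" -o "$dst"
  run crushtool -i "$dst" --test --rule 2 --num-rep 3 --min-x 0 --max-x 9 --show-mappings
}
```

If the rule is correct, every printed mapping should contain only OSDs 3, 4 and 5, which are the OSDs under the `ssd` root.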

Import the new CRUSH map

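The import command did not survive either. With the standard Ceph CLI, the compiled map is injected with `ceph osd setcrushmap -i`. Placement groups may start remapping as soon as it runs, so test the map first. The file name and helpers are assumptions, and `run` prints the command instead of executing it when `DRY_RUN=1`.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# set_crushmap: hypothetical helper; injects the compiled CRUSH map into the cluster
set_crushmap() {
  local src="${1:-crushmap-new.bin}"
  run ceph osd setcrushmap -i "$src"
}
```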

Check the result

```text
# ceph osd tree
ID WEIGHT  TYPE NAME                UP/DOWN REWEIGHT PRIMARY-AFFINITY
-7 1.40996 root ssd
-5 0.46999     host 1.1.1.163-ssd
 4 0.46999         osd.4                 up  1.00000          1.00000
-6 0.46999     host 1.1.1.161-ssd
 3 0.46999         osd.3                 up  1.00000          1.00000
-8 0.46999     host 1.1.1.162-ssd
 5 0.46999         osd.5                 up  1.00000          1.00000
-1 2.96997 root default
-2 0.98999     host 1.1.1.163
 0 0.98999         osd.0                 up  1.00000          1.00000
-3 0.98999     host 1.1.1.161
 1 0.98999         osd.1                 up  1.00000          1.00000
-4 0.98999     host 1.1.1.162
 2 0.98999         osd.2                 up  1.00000          1.00000
```

Create the pool

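The pool-creation command is missing from this copy. The sketch below creates the pool named by `rbd_pool` in the `[ssd]` Cinder backend. It uses 64 placement groups, the `pg_num`/`pgp_num` value that `volumes-cache` shows in the `ceph osd dump` output later in this post. The helper names are hypothetical, and `run` prints the command instead of executing it when `DRY_RUN=1`.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# create_ssd_pool: hypothetical helper; creates the pool with pg_num = pgp_num
create_ssd_pool() {
  local pool="${1:-volumes-cache}" pgs="${2:-64}"
  run ceph osd pool create "$pool" "$pgs" "$pgs"
}
```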

Set the CRUSH ruleset for each pool; the pool lines of `ceph osd dump` then look like this

```text
pool 1 'images' replicated size 1 min_size 1 crush_ruleset 1 object_hash rjenkins pg_num 128 pgp_num 128 last_change 285 flags hashpspool stripe_width 0
pool 2 'volumes' replicated size 1 min_size 1 crush_ruleset 1 object_hash rjenkins pg_num 128 pgp_num 128 last_change 281 flags hashpspool stripe_width 0
pool 3 'backups' replicated size 1 min_size 1 crush_ruleset 1 object_hash rjenkins pg_num 128 pgp_num 128 last_change 165 flags hashpspool stripe_width 0
pool 4 'vms' replicated size 1 min_size 1 crush_ruleset 1 object_hash rjenkins pg_num 128 pgp_num 128 last_change 44 flags hashpspool stripe_width 0
pool 5 'gnocchi' replicated size 1 min_size 1 crush_ruleset 1 object_hash rjenkins pg_num 128 pgp_num 128 last_change 35 flags hashpspool stripe_width 0
pool 6 'volumes-cache' replicated size 3 min_size 2 crush_ruleset 2 object_hash rjenkins pg_num 64 pgp_num 64 last_change 62 flags hashpspool stripe_width 0
```
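Only the resulting pool list survived for this step. It shows `volumes-cache` on ruleset 2 (the `ssd` rule) and the other pools on ruleset 1 (`disks`). On a Jewel-era cluster like this one, where `ceph osd dump` still prints `crush_ruleset`, that assignment could be done as sketched below. The helper names are hypothetical, and `run` prints the command instead of executing it when `DRY_RUN=1`. On Luminous and later the pool option is called `crush_rule` and takes a rule name instead of a number.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# set_pool_rulesets: hypothetical helper; SSD pool -> ruleset 2, the rest -> ruleset 1
set_pool_rulesets() {
  run ceph osd pool set volumes-cache crush_ruleset 2
  local pool
  for pool in images volumes backups vms gnocchi; do
    run ceph osd pool set "$pool" crush_ruleset 1
  done
}
```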

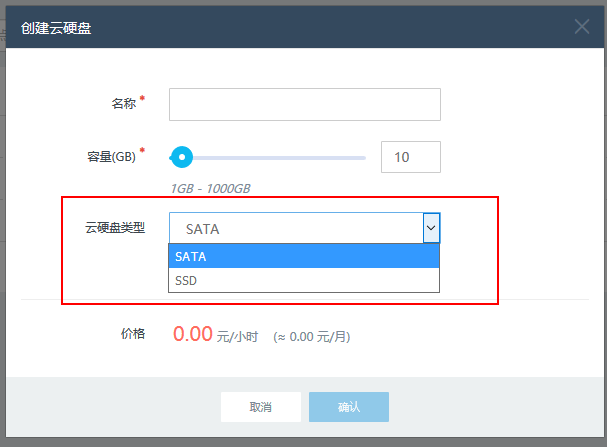

Create two Cinder volume types

```text
+--------------------------------------+------+-------------+-----------+
| ID                                   | Name | Description | Is_Public |
+--------------------------------------+------+-------------+-----------+
| 8c1079e5-90a3-4f6d-bdb7-2f25b70bc2c8 | SSD  |             | True      |
| a605c569-1e88-486d-bd8e-7aba43ce1ef2 | SATA |             | True      |
+--------------------------------------+------+-------------+-----------+
```
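The commands that created the two types are not shown. With the `cinder` client of that era, creating and listing them would look like the sketch below. The helper names are hypothetical, and `run` prints the command instead of executing it when `DRY_RUN=1`.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# create_volume_types: hypothetical helper; one type per storage tier
create_volume_types() {
  run cinder type-create SSD
  run cinder type-create SATA
  run cinder type-list
}
```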

Set the `volume_backend_name` key on each volume type so that it maps to a Cinder backend

```text
cinder type-key SSD set volume_backend_name=ssd
cinder type-key SATA set volume_backend_name=rbd-1

+--------------------------------------+------+----------------------------------+
| ID                                   | Name | extra_specs                      |
+--------------------------------------+------+----------------------------------+
| 8c1079e5-90a3-4f6d-bdb7-2f25b70bc2c8 | SSD  | {'volume_backend_name': 'ssd'}   |
| a605c569-1e88-486d-bd8e-7aba43ce1ef2 | SATA | {'volume_backend_name': 'rbd-1'} |
+--------------------------------------+------+----------------------------------+
```

Test: create one volume of each type to verify the setup

```text
+--------------------------------+--------------------------------------+
| Property                       | Value                                |
+--------------------------------+--------------------------------------+
| attachments                    | []                                   |
| availability_zone              | nova                                 |
| bootable                       | false                                |
| consistencygroup_id            | None                                 |
| created_at                     | 2017-11-30T02:58:17.000000           |
| description                    | None                                 |
| encrypted                      | False                                |
| id                             | e2df2b47-13ec-4503-a78b-2ab60cb5a34b |
| metadata                       | {}                                   |
| migration_status               | None                                 |
| multiattach                    | False                                |
| name                           | vol-ssd                              |
| os-vol-host-attr:host          | control@ssd#ssd                      |
| os-vol-mig-status-attr:migstat | None                                 |
| os-vol-mig-status-attr:name_id | None                                 |
| os-vol-tenant-attr:tenant_id   | 21c161dda51147fb9ff527aadfe1d81a     |
| replication_status             | None                                 |
| size                           | 1                                    |
| snapshot_id                    | None                                 |
| source_volid                   | None                                 |
| status                         | creating                             |
| updated_at                     | 2017-11-30T02:58:18.000000           |
| user_id                        | 68601348f1264a4cb69f8f8f162e3f2a     |
| volume_type                    | SSD                                  |
+--------------------------------+--------------------------------------+

+--------------------------------+--------------------------------------+
| Property                       | Value                                |
+--------------------------------+--------------------------------------+
| attachments                    | []                                   |
| availability_zone              | nova                                 |
| bootable                       | false                                |
| consistencygroup_id            | None                                 |
| created_at                     | 2017-11-30T02:59:07.000000           |
| description                    | None                                 |
| encrypted                      | False                                |
| id                             | f3f53733-348d-4ff9-a472-445aed77c111 |
| metadata                       | {}                                   |
| migration_status               | None                                 |
| multiattach                    | False                                |
| name                           | vol-sata                             |
| os-vol-host-attr:host          | None                                 |
| os-vol-mig-status-attr:migstat | None                                 |
| os-vol-mig-status-attr:name_id | None                                 |
| os-vol-tenant-attr:tenant_id   | 21c161dda51147fb9ff527aadfe1d81a     |
| replication_status             | None                                 |
| size                           | 1                                    |
| snapshot_id                    | None                                 |
| source_volid                   | None                                 |
| status                         | creating                             |
| updated_at                     | None                                 |
| user_id                        | 68601348f1264a4cb69f8f8f162e3f2a     |
| volume_type                    | SATA                                 |
+--------------------------------+--------------------------------------+

# rbd -p volumes-cache ls|grep e2df2b47-13ec-4503-a78b-2ab60cb5a34b
volume-e2df2b47-13ec-4503-a78b-2ab60cb5a34b
volume-f3f53733-348d-4ff9-a472-445aed77c111
```
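The create commands for the two test volumes are not shown. Judging from the output (names `vol-ssd` and `vol-sata`, size 1, types `SSD` and `SATA`), they would have been roughly as sketched below. Listing both pools afterwards shows where each RBD image landed. This assumes the default `rbd-1` backend uses Kolla's default `volumes` pool. The helper names are hypothetical, and `run` prints the command instead of executing it when `DRY_RUN=1`.

```shell
#!/usr/bin/env bash
# run: print the command instead of executing it when DRY_RUN=1
run() { if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi; }

# verify_volumes: hypothetical helper; one 1 GB volume per type, then list both pools
verify_volumes() {
  run cinder create --volume-type SSD --name vol-ssd 1
  run cinder create --volume-type SATA --name vol-sata 1
  run rbd -p volumes-cache ls
  run rbd -p volumes ls
}
```

In the output above, `os-vol-host-attr:host` reads `control@ssd#ssd` for `vol-ssd`, which already shows that the scheduler placed it on the `ssd` backend.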