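I finally found some time to catch up on recent technology, and discovered a framework Apple released last year that converts speech directly to text. It is seriously impressive and blows certain big companies' offerings out of the water. The drawback is that it only supports iOS 10 and later, but it is still a big step forward. Thumbs up.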

I. Development environment requirements

Xcode 8 or later. Speech.framework is only available in the SDKs that ship with Xcode 8 and newer.

II. Creating the project

1. Add Speech.framework (Build Phases → Link Binary With Libraries → +).

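2. Add the following entries to Info.plist:

Privacy - Speech Recognition Usage Description (raw key `NSSpeechRecognitionUsageDescription`): Use speech recognition

Privacy - Microphone Usage Description (raw key `NSMicrophoneUsageDescription`): Use the microphone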
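If either entry is missing, iOS terminates the app as soon as it asks for the corresponding permission. You can catch this during development with a check that runs early, for example from `viewDidLoad`, and is passed `[[NSBundle mainBundle] infoDictionary]`. The following is a minimal sketch of such a check. `HasSpeechUsageDescriptions` is a hypothetical helper, not a framework API.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: returns YES only if both privacy strings this article
// relies on are present and non-empty in the given Info.plist dictionary.
static BOOL HasSpeechUsageDescriptions(NSDictionary<NSString *, id> *info)
{
    NSArray<NSString *> *requiredKeys = @[
        @"NSSpeechRecognitionUsageDescription", // "Privacy - Speech Recognition Usage Description"
        @"NSMicrophoneUsageDescription"         // "Privacy - Microphone Usage Description"
    ];
    for (NSString *key in requiredKeys) {
        id value = info[key]; // nil if the key is missing (or if info itself is nil)
        if (![value isKindOfClass:[NSString class]] || [(NSString *)value length] == 0) {
            return NO;
        }
    }
    return YES;
}
```

Example usage: `NSCAssert(HasSpeechUsageDescriptions([[NSBundle mainBundle] infoDictionary]), @"Add the usage descriptions to Info.plist");`. `NSCAssert` is compiled out of typical release builds, so the check costs nothing in production.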

3. Approach one: recognizing a local audio file

```objc
#import "ViewController.h"
#import <Speech/Speech.h>

@interface ViewController () <SFSpeechRecognitionTaskDelegate>

@property (nonatomic, strong) SFSpeechRecognitionTask *recognitionTask;
@property (nonatomic, strong) SFSpeechRecognizer *speechRecognizer;
@property (nonatomic, strong) UILabel *recognizerLabel;

@end

@implementation ViewController

- (void)dealloc {
    // Stop any recognition still in progress when the controller goes away.
    [_recognitionTask cancel];
    _recognitionTask = nil;
}

- (void)viewDidLoad {
    [super viewDidLoad];
    self.view.backgroundColor = [UIColor whiteColor];

    // 1. Create the SFSpeechRecognizer for Chinese (zh_CN).
    //    Since iOS 9, @"zh" no longer means Simplified Chinese but Traditional Chinese (Taiwan).
    //    initWithLocale: returns nil if the locale is not supported.
    self.speechRecognizer = [[SFSpeechRecognizer alloc] initWithLocale:[[NSLocale alloc] initWithLocaleIdentifier:@"zh_CN"]];
    // [[SFSpeechRecognizer alloc] init] would follow the device's language setting instead.
    // To list the supported locales:
    // for (NSLocale *locale in [SFSpeechRecognizer supportedLocales]) {
    //     NSLog(@"countryCode:%@ languageCode:%@", locale.countryCode, locale.languageCode);
    // }

    // 2. Request permission; recognition starts only once it has been granted.
    [SFSpeechRecognizer requestAuthorization:^(SFSpeechRecognizerAuthorizationStatus status) {
        // This handler may run on a background queue, so continue on the main queue.
        dispatch_async(dispatch_get_main_queue(), ^{
            switch (status) {
                case SFSpeechRecognizerAuthorizationStatusAuthorized:
                    [self startRecognizingBundledFile];
                    break;
                case SFSpeechRecognizerAuthorizationStatusNotDetermined:
                case SFSpeechRecognizerAuthorizationStatusDenied:
                case SFSpeechRecognizerAuthorizationStatusRestricted:
                    NSLog(@"Speech recognition not authorized (status %ld)", (long)status);
                    break;
            }
        });
    }];
}

- (void)startRecognizingBundledFile {
    // 3. Create the recognition request from an audio file bundled with the app.
    NSString *path = [[NSBundle mainBundle] pathForResource:@"1122334455" ofType:@"mp3"];
    // Messaging a nil recognizer returns NO, so this also covers an unsupported locale.
    if (path == nil || !self.speechRecognizer.isAvailable) {
        NSLog(@"Audio file not found or speech recognizer unavailable");
        return;
    }
    SFSpeechURLRecognitionRequest *request = [[SFSpeechURLRecognitionRequest alloc] initWithURL:[NSURL fileURLWithPath:path]];

    // 4. Start the recognition task (delegate-based; recognitionTaskWithRequest0: is the block-based alternative).
    self.recognitionTask = [self recognitionTaskWithRequest1:request];
}

// Block-based variant: the handler is called for each partial result and for the final one.
- (SFSpeechRecognitionTask *)recognitionTaskWithRequest0:(SFSpeechURLRecognitionRequest *)request {
    return [self.speechRecognizer recognitionTaskWithRequest:request resultHandler:^(SFSpeechRecognitionResult * _Nullable result, NSError * _Nullable error) {
        if (!error) {
            NSLog(@"Recognition succeeded: %@", result.bestTranscription.formattedString);
        } else {
            NSLog(@"Recognition failed: %@", error);
        }
    }];
}

// Delegate-based variant: progress is reported through SFSpeechRecognitionTaskDelegate.
- (SFSpeechRecognitionTask *)recognitionTaskWithRequest1:(SFSpeechURLRecognitionRequest *)request {
    return [self.speechRecognizer recognitionTaskWithRequest:request delegate:self];
}

#pragma mark - SFSpeechRecognitionTaskDelegate

// Speech was detected in the audio.
- (void)speechRecognitionDidDetectSpeech:(SFSpeechRecognitionTask *)task {
}

// An intermediate (partial) transcription is available.
- (void)speechRecognitionTask:(SFSpeechRecognitionTask *)task didHypothesizeTranscription:(SFTranscription *)transcription {
}

// The final result is available: show it and size the label to fit.
- (void)speechRecognitionTask:(SFSpeechRecognitionTask *)task didFinishRecognition:(SFSpeechRecognitionResult *)recognitionResult {
    NSString *text = recognitionResult.bestTranscription.formattedString;
    // Measure with the label's own font so the computed size matches what is drawn.
    NSDictionary *attributes = @{ NSFontAttributeName: self.recognizerLabel.font };
    CGRect rect = [text boundingRectWithSize:CGSizeMake(self.view.bounds.size.width - 100, CGFLOAT_MAX)
                                     options:NSStringDrawingUsesLineFragmentOrigin
                                  attributes:attributes
                                     context:nil];
    self.recognizerLabel.text = text;
    self.recognizerLabel.frame = CGRectMake(50, 120, ceil(rect.size.width), ceil(rect.size.height));
}

// All audio has been read from the file.
- (void)speechRecognitionTaskFinishedReadingAudio:(SFSpeechRecognitionTask *)task {
}

- (void)speechRecognitionTaskWasCancelled:(SFSpeechRecognitionTask *)task {
}

// Called last; successfully is NO if the task failed or was cancelled.
- (void)speechRecognitionTask:(SFSpeechRecognitionTask *)task didFinishSuccessfully:(BOOL)successfully {
    if (successfully) {
        NSLog(@"Recognition of the whole file finished");
    }
}

#pragma mark - getter

- (UILabel *)recognizerLabel {
    if (!_recognizerLabel) {
        _recognizerLabel = [[UILabel alloc] initWithFrame:CGRectMake(50, 120, self.view.bounds.size.width - 100, 100)];
        _recognizerLabel.numberOfLines = 0;
        _recognizerLabel.font = [UIFont preferredFontForTextStyle:UIFontTextStyleBody];
        _recognizerLabel.adjustsFontForContentSizeCategory = YES;
        _recognizerLabel.textColor = [UIColor orangeColor];
        [self.view addSubview:_recognizerLabel];
    }
    return _recognizerLabel;
}

@end
```
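The block-based variant above (`recognitionTaskWithRequest0:`) calls its handler repeatedly, because `SFSpeechURLRecognitionRequest` reports partial results by default. If you only need the final text, the following minimal sketch wraps that pattern. `TranscribeAudioFile` is a hypothetical helper, not part of Speech.framework. It assumes authorization has already been granted and that the recognizer uses its default (main) callback queue.

```objc
#import <Foundation/Foundation.h>
#import <Speech/Speech.h>

// Hypothetical helper: transcribes an audio file and invokes `completion` at most
// once, with either the final text or an error. Returns nil (without calling
// `completion`) if there is nothing usable to start a task with.
static SFSpeechRecognitionTask *TranscribeAudioFile(SFSpeechRecognizer *recognizer,
                                                    NSURL *fileURL,
                                                    void (^completion)(NSString *text, NSError *error))
{
    if (recognizer == nil || fileURL == nil || completion == nil || !recognizer.isAvailable) {
        return nil;
    }
    SFSpeechURLRecognitionRequest *request = [[SFSpeechURLRecognitionRequest alloc] initWithURL:fileURL];
    // Skip intermediate hypotheses; only the final result is delivered.
    request.shouldReportPartialResults = NO;

    __block BOOL delivered = NO; // guards against a second callback (e.g. a late error)
    return [recognizer recognitionTaskWithRequest:request
                                    resultHandler:^(SFSpeechRecognitionResult *result, NSError *error) {
        if (delivered) {
            return;
        }
        if (error != nil) {
            delivered = YES;
            completion(nil, error);
        } else if (result.isFinal) {
            delivered = YES;
            completion(result.bestTranscription.formattedString, nil);
        }
    }];
}
```

In the view controller above, you could write `self.recognitionTask = TranscribeAudioFile(self.speechRecognizer, fileURL, ^(NSString *text, NSError *error) { ... });` in place of the delegate-based call. Keeping the returned task lets `dealloc` still cancel it.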

4. Approach two: recognizing live speech input

```objc
#import "ViewController.h"
#import <AVFoundation/AVFoundation.h>
#import <Speech/Speech.h>

@interface ViewController ()

@property (nonatomic, strong) AVAudioEngine *audioEngine;                         // audio engine that captures the microphone
@property (nonatomic, strong) SFSpeechRecognizer *speechRecognizer;               // speech recognizer
// atomic, because the audio tap reads this property on a background thread
@property (atomic, strong) SFSpeechAudioBufferRecognitionRequest *speechRequest;  // request fed with audio buffers
@property (nonatomic, strong) SFSpeechRecognitionTask *currentSpeechTask;         // current recognition task
@property (nonatomic, strong) UILabel *showLb;                                    // label that displays the result
@property (nonatomic, strong) UIButton *startBtn;                                 // start/stop button

@end

@implementation ViewController

- (void)dealloc
{
    // Stop capturing and discard any recognition still in progress.
    [_audioEngine stop];
    [_currentSpeechTask cancel];
}

- (void)viewDidLoad
{
    [super viewDidLoad];

    // Set up the AVAudioEngine; a new SFSpeechAudioBufferRecognitionRequest is created for each session.
    self.audioEngine = [AVAudioEngine new];
    // Without a locale argument, the recognizer uses the device's current language.
    self.speechRecognizer = [SFSpeechRecognizer new];
    self.startBtn.enabled = NO;

    __weak __typeof(self) weakSelf = self;
    [SFSpeechRecognizer requestAuthorization:^(SFSpeechRecognizerAuthorizationStatus status)
    {
        // The handler may run on a background queue; touch UIKit and the engine on the main queue.
        dispatch_async(dispatch_get_main_queue(), ^{
            ViewController *strongSelf = weakSelf;
            // If not authorized, return and leave the button disabled.
            if (strongSelf == nil || status != SFSpeechRecognizerAuthorizationStatusAuthorized) {
                return;
            }
            // Install a tap on the microphone input; every captured buffer goes to the current request.
            AVAudioInputNode *inputNode = strongSelf.audioEngine.inputNode;
            [inputNode installTapOnBus:0 bufferSize:1024 format:[inputNode outputFormatForBus:0] block:^(AVAudioPCMBuffer * _Nonnull buffer, AVAudioTime * _Nonnull when)
            {
                // Append the PCM buffer to the request (a no-op while speechRequest is nil).
                [weakSelf.speechRequest appendAudioPCMBuffer:buffer];
            }];
            // Prepare the engine (allocates the resources it needs in order to start).
            [strongSelf.audioEngine prepare];
            strongSelf.startBtn.enabled = YES;
        });
    }];
}

- (void)onStartBtnClicked
{
    // Toggle based on whether the microphone is currently being captured.
    if (self.audioEngine.isRunning)
    {
        [self.startBtn setTitle:@"Start recording" forState:UIControlStateNormal];
        // Stop speech recognition
        [self stopDictating];
    }
    else
    {
        [self.startBtn setTitle:@"Stop recording" forState:UIControlStateNormal];
        self.showLb.text = @"Waiting";
        // Start speech recognition
        [self startDictating];
    }
}

- (void)startDictating
{
    // Discard a previous task that may still be finishing.
    [self.currentSpeechTask cancel];
    self.currentSpeechTask = nil;

    // Create the request before audio starts flowing so no buffers are lost.
    self.speechRequest = [SFSpeechAudioBufferRecognitionRequest new];

    // Start the audio engine
    NSError *engineError = nil;
    if (![self.audioEngine startAndReturnError:&engineError]) {
        NSLog(@"Could not start the audio engine: %@", engineError);
        return;
    }

    // Recognize the audio fed into speechRequest
    __weak __typeof(self) weakSelf = self;
    self.currentSpeechTask = [self.speechRecognizer recognitionTaskWithRequest:self.speechRequest resultHandler:^(SFSpeechRecognitionResult * _Nullable result, NSError * _Nullable error)
    {
        // Called with partial results as the user speaks, then with the final result or an error.
        if (result == nil) return;
        weakSelf.showLb.text = result.bestTranscription.formattedString;
    }];
}

- (void)stopDictating
{
    // Stop capturing audio, then signal that no more audio is coming so the task can finish.
    [self.audioEngine stop];
    [self.speechRequest endAudio];
}

#pragma mark - getter

- (UILabel *)showLb {
    if (!_showLb) {
        _showLb = [[UILabel alloc] initWithFrame:CGRectMake(50, 180, self.view.bounds.size.width - 100, 100)];
        _showLb.numberOfLines = 0;
        _showLb.font = [UIFont preferredFontForTextStyle:UIFontTextStyleBody];
        _showLb.text = @"Waiting...";
        _showLb.adjustsFontForContentSizeCategory = YES;
        _showLb.textColor = [UIColor orangeColor];
        [self.view addSubview:_showLb];
    }
    return _showLb;
}

- (UIButton *)startBtn {
    if (!_startBtn) {
        _startBtn = [UIButton buttonWithType:UIButtonTypeCustom];
        _startBtn.frame = CGRectMake(50, 80, 80, 80);
        [_startBtn addTarget:self action:@selector(onStartBtnClicked) forControlEvents:UIControlEventTouchUpInside];
        [_startBtn setBackgroundColor:[UIColor redColor]];
        [_startBtn setTitle:@"Record" forState:UIControlStateNormal];
        [_startBtn setTitleColor:[UIColor whiteColor] forState:UIControlStateNormal];
        [self.view addSubview:_startBtn];
    }
    return _startBtn;
}

@end
```

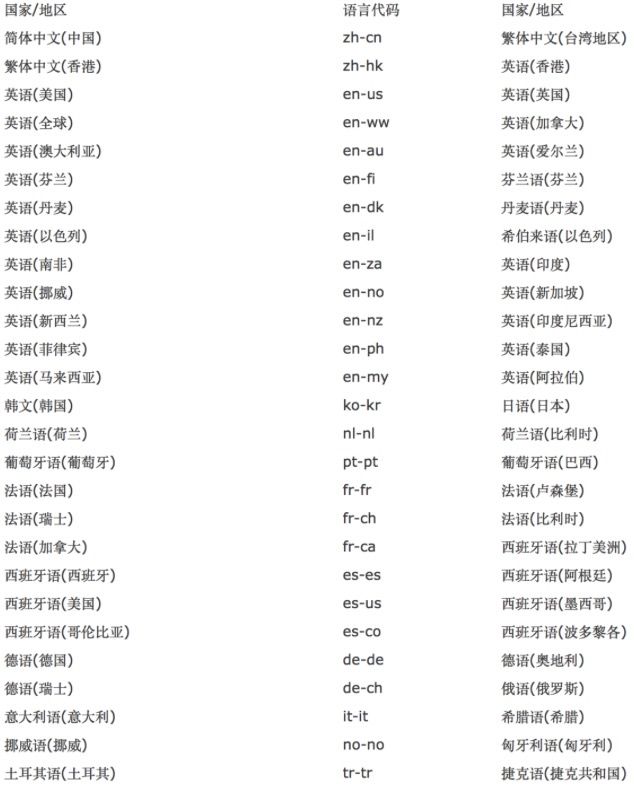
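The live-recognition example never configures the shared `AVAudioSession`. Apple's SpeakToMe sample puts the session into a recording configuration before starting `AVAudioEngine`. The following minimal sketch shows that step. It assumes iOS 10 or later (`setCategory:mode:options:error:` was added in iOS 10) and uses a hypothetical helper name, `ConfigureRecordingSession`, which you would call at the top of `-startDictating`.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (iOS only): configures the shared audio session for
// recording speech and activates it. Returns NO and fills *error on failure.
static BOOL ConfigureRecordingSession(NSError **error)
{
    AVAudioSession *session = [AVAudioSession sharedInstance];
    // Record-only category; measurement mode minimizes system-supplied signal
    // processing; DuckOthers lowers other apps' audio while we listen.
    if (![session setCategory:AVAudioSessionCategoryRecord
                         mode:AVAudioSessionModeMeasurement
                      options:AVAudioSessionCategoryOptionDuckOthers
                        error:error]) {
        return NO;
    }
    // Let other apps resume their audio when this session is later deactivated.
    return [session setActive:YES
                  withOptions:AVAudioSessionSetActiveOptionNotifyOthersOnDeactivation
                        error:error];
}
```

If it returns NO, it is probably best to skip `startAndReturnError:` and report the error, because the engine is unlikely to capture usable audio.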

5. Further reading: language codes for each country
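Rather than relying on a fixed table of language codes, you can ask the framework which locales it supports on the current device. The commented-out loop in the first example does the same with `NSLog`. The following minimal sketch collects the identifiers into a sorted array. `SortedLocaleIdentifiers` and `LogSupportedSpeechLocales` are hypothetical names.

```objc
#import <Foundation/Foundation.h>
#import <Speech/Speech.h>

// Hypothetical helper: turns a set of locales into an alphabetically sorted
// array of their identifiers (handy for logging or a locale picker).
static NSArray<NSString *> *SortedLocaleIdentifiers(NSSet<NSLocale *> *locales)
{
    NSMutableArray<NSString *> *identifiers = [NSMutableArray arrayWithCapacity:locales.count];
    for (NSLocale *locale in locales) {
        [identifiers addObject:locale.localeIdentifier];
    }
    [identifiers sortUsingSelector:@selector(compare:)];
    return [identifiers copy];
}

// Hypothetical usage: print every locale SFSpeechRecognizer supports on this device.
void LogSupportedSpeechLocales(void)
{
    for (NSString *identifier in SortedLocaleIdentifiers([SFSpeechRecognizer supportedLocales])) {
        NSLog(@"%@", identifier);
    }
}
```

Taking the set as a parameter keeps the sorting logic independent of the device, so it can be checked with locales you construct yourself.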

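6. Knowing the why, not just the how

Key classes in the Speech framework

SFSpeechRecognizer: the main entry point for speech recognition. It requests the user's speech-recognition permission, sets the recognition locale, configures the recognition mode (for example through its default task hint), and sends recognition requests to Apple's servers.

SFSpeechRecognitionTask: represents a recognition request in progress. Every request you submit is wrapped in an SFSpeechRecognitionTask instance, and the SFSpeechRecognitionTaskDelegate protocol defines callbacks for monitoring the task as it runs.

SFSpeechRecognitionRequest: the abstract base class for recognition requests. You instantiate one of its subclasses.

SFSpeechURLRecognitionRequest: creates a recognition request from an audio file URL.

SFSpeechAudioBufferRecognitionRequest: creates a recognition request from a stream of audio buffers.

SFSpeechRecognitionResult: the result of a recognition request.

SFTranscription: the text produced from the speech, i.e. the transcription itself.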
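The following small sketch shows how these classes fit together at runtime. It uses three hypothetical helpers, not framework API, and assumes iOS 10 or later:

* `MakeDictationRequest` configures an `SFSpeechAudioBufferRecognitionRequest` through properties inherited from `SFSpeechRecognitionRequest`.
* `TaskStateName` names each `SFSpeechRecognitionTaskState` that an `SFSpeechRecognitionTask` passes through. Calling `-finish` moves a task to finishing; `-cancel` discards it.
* `FormatSegments` walks the `SFTranscriptionSegment`s of a result's best `SFTranscription`. Each segment carries its own timing and confidence.

```objc
#import <Foundation/Foundation.h>
#import <Speech/Speech.h>

// Hypothetical helper: a buffer-based request configured for live dictation.
static SFSpeechAudioBufferRecognitionRequest *MakeDictationRequest(void)
{
    SFSpeechAudioBufferRecognitionRequest *request = [SFSpeechAudioBufferRecognitionRequest new];
    request.shouldReportPartialResults = YES;                // stream hypotheses while the user speaks (the default)
    request.taskHint = SFSpeechRecognitionTaskHintDictation; // tell the recognizer what kind of speech to expect
    return request;
}

// Hypothetical helper: human-readable name for a task's lifecycle state.
static NSString *TaskStateName(SFSpeechRecognitionTaskState state)
{
    switch (state) {
        case SFSpeechRecognitionTaskStateStarting:  return @"starting";
        case SFSpeechRecognitionTaskStateRunning:   return @"running";
        case SFSpeechRecognitionTaskStateFinishing: return @"finishing"; // no new audio; results may still arrive
        case SFSpeechRecognitionTaskStateCanceling: return @"canceling";
        case SFSpeechRecognitionTaskStateCompleted: return @"completed";
    }
    return @"unknown";
}

// Hypothetical helper: one line per segment of the best transcription.
// Returns an empty array for a nil result.
static NSArray<NSString *> *FormatSegments(SFSpeechRecognitionResult *result)
{
    NSMutableArray<NSString *> *lines = [NSMutableArray array];
    for (SFTranscriptionSegment *segment in result.bestTranscription.segments) {
        [lines addObject:[NSString stringWithFormat:@"%@ at %.2fs for %.2fs (confidence %.2f)",
                          segment.substring, segment.timestamp, segment.duration, segment.confidence]];
    }
    return lines;
}
```

In the live example, `NSLog(@"%@", FormatSegments(result))` inside the result handler would print the segments each time a new result arrives.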

7. Demo project

https://github.com/BeanMan/SpeechFramWork

References:

http://www.jianshu.com/p/c4de4ee2134d

http://www.jianshu.com/p/487147605e08

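These notes were only possible by standing on the shoulders of giants.

We all start as beginners on the way to becoming experts, so let's improve together. If you found this helpful, please give it a like or a follow.

Feel free to get in touch with any questions or to swap notes. Email: [email protected]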