Scraping all the girl photos from a certain site with a Python Scrapy spider: a treat for homebodies


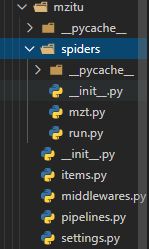

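1. Create a Scrapy project first

Run the following commands in cmd or the VS Code terminal. The project name is lowercase so that it matches the `mzitu.…` import paths used later, and `genspider` is given the bare domain rather than a full URL.

    scrapy startproject mzitu
    cd mzitu
    scrapy genspider mzt mzitu.com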

2. Now edit the contents of mzt.py

    # -*- coding: utf-8 -*-
    import scrapy

    from mzitu.items import MzituItem


    class MztSpider(scrapy.Spider):
        name = 'mzt'
        allowed_domains = ['mzitu.com']
        start_urls = ['https://www.mzitu.com/']

        def parse(self, response):
            # Every <li> on a listing page links to the first page of a gallery.
            for href in response.xpath(".//ul[@id='pins']/li/a/@href").extract():
                yield scrapy.Request(
                    url=href,
                    callback=self.img_parse,
                    dont_filter=True
                )

            # Follow the "下一页" (next page) link to the next listing page.
            next_link = response.xpath(".//div[@class='nav-links']/a[contains(text(), '下一页')]/@href").extract_first()
            if next_link is not None:
                yield scrapy.Request(
                    url=next_link,
                    callback=self.parse,
                    dont_filter=True
                )

        def img_parse(self, response):
            # Take the main image on this gallery page.
            image_url = response.xpath(".//div[@class='main-image']/p/a/img/@src").extract_first()
            if image_url is not None:
                item = MzituItem()
                item['image_urls'] = [image_url]
                # Last three URL segments; z is the file name.
                x, y, z = image_url.split('/')[-3:]
                item['image_name'] = z
                yield item

            # Follow the "下一页" (next page) link to the next image in this gallery.
            next_link = response.xpath(".//span[contains(text(), '下一页')]/parent::a/@href").extract_first()
            if next_link is not None:
                yield scrapy.Request(
                    url=next_link,
                    callback=self.img_parse,
                    dont_filter=True
                )
            else:
                self.logger.info('Reached the last page of the gallery: %s', response.url)
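The `split('/')[-3:]` step in `img_parse` is easy to check in isolation. Here is a minimal sketch that uses only the standard library. The helper name `split_image_url` and the sample URL are hypothetical, chosen purely for illustration, and the real site's URL layout may differ. Unlike the plain `split`, it looks only at the URL path and returns `None` when the URL is missing or too short.

```python
from urllib.parse import urlparse


def split_image_url(image_url):
    """Return the last three path segments of an image URL, or None.

    Hypothetical helper: mirrors image_url.split('/')[-3:] from the
    spider, but tolerates a missing URL or a path that is too short.
    """
    if not image_url:
        return None
    segments = [s for s in urlparse(image_url).path.split('/') if s]
    if len(segments) < 3:
        return None
    return tuple(segments[-3:])


# Made-up URL, used only to show the shape of the result.
print(split_image_url('https://img.example.com/2019/08/01a01.jpg'))
# → ('2019', '08', '01a01.jpg')
print(split_image_url(None))
# → None
```

The third element is what the spider stores in `image_name`, and all three together form the relative path used by `file_path` in the pipeline.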

3. Configure items.py

    # -*- coding: utf-8 -*-
    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/items.html
    import scrapy


    class MzituItem(scrapy.Item):
        image_results = scrapy.Field()
        images = scrapy.Field()
        image_urls = scrapy.Field()
        image_name = scrapy.Field()
        image_paths = scrapy.Field()

4. Configure the pipelines.py pipeline file

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import scrapy

from scrapy.pipelines.images import ImagesPipeline

from scrapy import Request

from mzitu.items import MzituItem

from scrapy.exceptions import DropItem

#from scrapy.pipelines.images import ImagesPipeline

headers={

'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',

'Accept-Language': 'en',

"Referer": "https://www.mzitu.com/",

}

class MzituPipeline():

def process_item(self, item, spider):

print(item['image_urls'])

return item

class ImageDownload(ImagesPipeline):

def get_image(self, item, info):

for image_url in item['image_urls']:

yield Request(image_url, meta={'item': item},headers=headers)

# def item_completed(self, results, item, info):

# os.rename(IMGS + '/' + results[0][1]['path'], IMGS + '/' + item['img_name'])

def item_completed(self, results, item, info):

''' 处理对象:每组item中的图片 '''

image_path = [x['path'] for ok,x in results if ok]

if not image_path:

raise DropItem('Item contains no images')

item['image_paths'] = image_path

return item

# def __del__(self):

# #完成后删除full目录

# os.removedirs(IMGS + '/' + 'full')

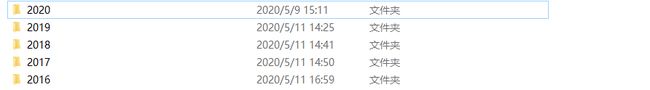

def file_path(self, request, response=None, info=None):

print('----'*20)

#image_guid = hashlib.sha1(to_bytes(request.url)).hexdigest()

x,y,z=request.url.split('/')[-3:]

image_guid='{}/{}/{}'.format(x,y,z)

#print(path)

return image_guid
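`item_completed` receives `results` as a list of `(success, info)` two-tuples, one per requested image. The filtering step can be tried without Scrapy. In this sketch the function name `successful_paths` and the sample data are invented, and a plain `Exception` stands in for the failure object Scrapy would pass.

```python
def successful_paths(results):
    """Collect the stored path of every image that downloaded successfully."""
    return [info['path'] for ok, info in results if ok]


# Invented sample shaped like the results argument of item_completed.
sample_results = [
    (True, {'url': 'https://img.example.com/2019/08/01a01.jpg',
            'path': '2019/08/01a01.jpg',
            'checksum': 'placeholder'}),
    (False, Exception('download failed')),  # stand-in for a failure object
]

paths = successful_paths(sample_results)
print(paths)  # → ['2019/08/01a01.jpg']

# An empty list is the case in which the pipeline raises DropItem.
print(successful_paths([(False, Exception('download failed'))]))  # → []
```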

5. Finally, configure settings.py

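The following settings need to change. Find each one in the file and edit it:

    USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36 Edge/18.17763'
    ROBOTSTXT_OBEY = False
    DOWNLOAD_DELAY = 1
    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
        'Referer': 'https://www.mzitu.com/',
    }
    # Lower numbers run first, so ImageDownload (299) runs before MzituPipeline (300).
    ITEM_PIPELINES = {
        'mzitu.pipelines.MzituPipeline': 300,
        'mzitu.pipelines.ImageDownload': 299,
    }
    # Directory where the downloaded images are stored.
    IMAGES_STORE = 'image2'
    # Not one of Scrapy's documented image settings; kept from the original configuration.
    IMAGES_NAME_FIELD = "image_name"
    IMAGES_URLS_FIELD = 'image_urls'

You can create a run.py in the spiders directory with the following code to make the crawl easier to start: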

    from scrapy import cmdline

    # Guard: Scrapy imports every module in spiders/ when loading spiders.
    if __name__ == '__main__':
        cmdline.execute("scrapy crawl mzt".split())
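Scrapy imports the modules under spiders/ when it looks for spider classes, so a run.py placed there should start the crawl only when it is executed directly. The sketch below shows the effect of an `if __name__ == "__main__"` guard using the standard library alone. The module name `run_demo` and its contents are made up for the demonstration, and a list append stands in for the real `cmdline.execute` call.

```python
import importlib.util
import tempfile
from pathlib import Path

# Stand-in for run.py: main() records a call instead of starting a crawl.
SOURCE = '''
calls = []

def main():
    calls.append("crawl started")

if __name__ == "__main__":
    main()
'''


def import_from_path(path):
    """Import a source file as a module, the way a package scan would."""
    spec = importlib.util.spec_from_file_location("run_demo", path)
    module = importlib.util.module_from_spec(spec)
    spec.loader.exec_module(module)
    return module


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "run_demo.py"
    path.write_text(SOURCE, encoding="utf-8")
    module = import_from_path(path)

# Importing did not trigger main(), because __name__ was "run_demo".
print(module.calls)  # → []
```

Without the guard, the same import would run `main()` immediately, which in run.py means starting a second crawl from inside the first.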