FFmpeg Learning Journey, Part 3

Starting from FFmpeg's official example hw_decode.c, I modified it to hardware-decode video with DXVA2 and then play the decoded frames with SDL.
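This is a minimal sketch of the format conversion that sits between those two steps.

After av_hwframe_transfer_data() copies a DXVA2 surface into system memory, an 8-bit frame is typically NV12. That means a full-resolution Y plane followed by one half-resolution plane of interleaved U/V bytes. The SDL texture used below (SDL_PIXELFORMAT_IYUV) expects planar YUV420P, with separate U and V planes, which is why the program runs sws_scale() with an NV12-to-YUV420P context.

The helper name nv12_to_i420 is hypothetical. It assumes even dimensions and tightly packed rows (stride == width). It only illustrates the rearrangement and does not replace sws_scale(), which also handles strides, odd sizes and other formats.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: rearranges one tightly packed NV12 image into a tightly
// packed YUV420P (I420) image laid out as Y plane, then U plane, then V plane.
// Assumes even width/height and no row padding (stride == width).
std::vector<uint8_t> nv12_to_i420(const std::vector<uint8_t>& nv12, int width, int height)
{
    assert(width > 0 && height > 0 && width % 2 == 0 && height % 2 == 0);
    const size_t y_size = static_cast<size_t>(width) * height;
    const size_t c_size = y_size / 4;            // one chroma plane: (w/2) * (h/2)
    assert(nv12.size() == y_size + 2 * c_size);

    std::vector<uint8_t> i420(y_size + 2 * c_size);
    // The luma plane has the same layout in both formats, so copy it unchanged.
    std::memcpy(i420.data(), nv12.data(), y_size);

    const uint8_t* uv = nv12.data() + y_size;    // interleaved U0 V0 U1 V1 ...
    uint8_t* u = i420.data() + y_size;           // planar U (Cb)
    uint8_t* v = u + c_size;                     // planar V (Cr)
    for (size_t i = 0; i < c_size; ++i) {
        u[i] = uv[2 * i];                        // even bytes are U
        v[i] = uv[2 * i + 1];                    // odd bytes are V
    }
    return i420;
}
```

For a 4x2 image, the NV12 chroma bytes {1, 2, 3, 4} become U = {1, 3} and V = {2, 4}. The luma bytes pass through untouched.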

Code changes

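1. The AVFrame variables in decode_write became global static variables. They are allocated once in main and freed at exit, instead of being allocated and freed over and over.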

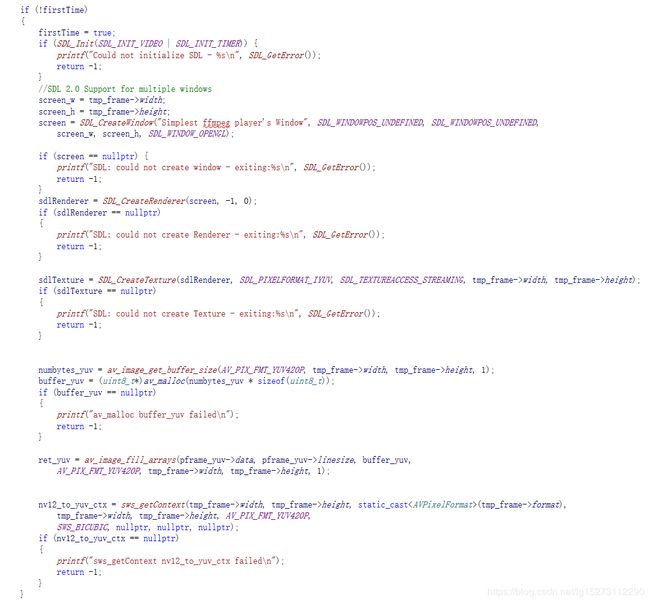

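2. The SDL resources are initialized inside decode_write, because that is where the video's width and height first become available. Since this only needs to happen once, a flag variable guards it.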
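The YUV420P buffer that feeds the SDL texture is sized from the frame's width and height, which is another reason this setup has to wait for the first decoded frame.

This is a minimal sketch of the layout that av_image_get_buffer_size() and av_image_fill_arrays() produce for AV_PIX_FMT_YUV420P with align = 1, as used in the code. The struct and function names are hypothetical and exist only for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of a tightly packed YUV420P (I420) buffer,
// mirroring av_image_fill_arrays(..., AV_PIX_FMT_YUV420P, w, h, 1).
struct Yuv420pLayout {
    size_t y_offset, u_offset, v_offset;   // byte offset of each plane in the buffer
    int y_linesize, c_linesize;            // bytes per row (align = 1, no padding)
    size_t total_size;                     // matches av_image_get_buffer_size(..., 1)
};

Yuv420pLayout yuv420p_layout(int width, int height)
{
    assert(width > 0 && height > 0);
    // Chroma is subsampled by 2 horizontally and vertically, rounding up for odd sizes.
    const int cw = (width + 1) / 2;
    const int ch = (height + 1) / 2;
    Yuv420pLayout l{};
    l.y_linesize = width;
    l.c_linesize = cw;
    l.y_offset = 0;
    l.u_offset = static_cast<size_t>(width) * height;
    l.v_offset = l.u_offset + static_cast<size_t>(cw) * ch;
    l.total_size = l.v_offset + static_cast<size_t>(cw) * ch;
    return l;
}
```

For the 480x272 sample this gives a 195840-byte buffer. The U plane starts at offset 130560 and the V plane at offset 163200, each 240 bytes per row.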

Running the program

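1. Set the command-line arguments in Visual Studio (Project Properties > Configuration Properties > Debugging > Command Arguments), for example dxva2 bigbuckbunny_480x272.hevc.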

2. Run it from a cmd prompt, replacing *.exe with the name of the built executable:

start *.exe dxva2 bigbuckbunny_480x272.hevc

Code

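// ffmpegDecode.cpp : Defines the entry point for the console application.

//

#include "stdafx.h"

// Must come before the FFmpeg headers (and after stdafx.h, because MSVC's precompiled-header mode skips everything above that include).

#define __STDC_CONSTANT_MACROS

#include "config.h"

extern "C"

{

#include "libavcodec/avcodec.h"

#include "libavformat/avformat.h"

#include "libavutil/pixfmt.h"

#include "libswscale/swscale.h"

#include "libavutil/opt.h"

#include "libavutil/imgutils.h"

#include "SDL2/SDL.h"

#include "libavutil/frame.h"

#include "libavutil/mem.h"

// Needed for av_hwdevice_ctx_create() / av_hwframe_transfer_data() and fprintf().

#include "libavutil/hwcontext.h"

#include <stdio.h>

}

#pragma comment(lib, "legacy_stdio_definitions.lib")

// Workaround for linking with VS2015 or later against libraries (e.g. a prebuilt SDL2) built with an older MSVC CRT that still references __iob_func.

extern "C" { FILE __iob_func[3] = { *stdin,*stdout,*stderr }; }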

static AVBufferRef *hw_device_ctx = NULL;

static enum AVPixelFormat hw_pix_fmt;

//static FILE *output_file = NULL;

static AVFrame *frame = nullptr;

static AVFrame *sw_frame = nullptr;

static AVFrame *pframe_yuv = nullptr;

static uint8_t *buffer_yuv = nullptr;

static SwsContext *nv12_to_yuv_ctx = nullptr;

int screen_w = 0, screen_h = 0;

SDL_Window *screen = nullptr;

SDL_Renderer* sdlRenderer = nullptr;

SDL_Texture* sdlTexture = nullptr;

static int hw_decoder_init(AVCodecContext *ctx, const enum AVHWDeviceType type)

{

int err = 0;

if ((err = av_hwdevice_ctx_create(&hw_device_ctx, type,

NULL, NULL, 0)) < 0) {

fprintf(stderr, "Failed to create specified HW device.\n");

return err;

}

ctx->hw_device_ctx = av_buffer_ref(hw_device_ctx);

return err;

}

static enum AVPixelFormat get_hw_format(AVCodecContext *ctx,

const enum AVPixelFormat *pix_fmts)

{

const enum AVPixelFormat *p;

for (p = pix_fmts; *p != -1; p++) {

if (*p == hw_pix_fmt)

return *p;

}

fprintf(stderr, "Failed to get HW surface format.\n");

return AV_PIX_FMT_NONE;

}

static int decode_write(AVCodecContext *avctx, AVPacket *packet)

{

//static AVFrame *frame = av_frame_alloc();

//static AVFrame *sw_frame = av_frame_alloc();

AVFrame *tmp_frame = NULL;

//uint8_t *buffer = NULL;

//int size;

int ret = 0;

static bool firstTime = false;

//static AVFrame *pframe_yuv = nullptr;

//static uint8_t *buffer_yuv = nullptr;

//static SwsContext *nv12_to_yuv_ctx = nullptr;

int ret_yuv = 0;

int numbytes_yuv = 0;

ret = avcodec_send_packet(avctx, packet);

if (ret < 0) {

fprintf(stderr, "Error during decoding\n");

return ret;

}

while (1) {

if ((frame == nullptr) || (sw_frame == nullptr)) {

fprintf(stderr, "Can not alloc frame\n");

ret = AVERROR(ENOMEM);

goto fail;

}

ret = avcodec_receive_frame(avctx, frame);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF) {

//av_frame_free(&frame);

//av_frame_free(&sw_frame);

return 0;

}

else if (ret < 0) {

fprintf(stderr, "Error while decoding\n");

goto fail;

}

if (frame->format == hw_pix_fmt) {

/* retrieve data from GPU to CPU */

if ((ret = av_hwframe_transfer_data(sw_frame, frame, 0)) < 0) {

fprintf(stderr, "Error transferring the data to system memory\n");

goto fail;

}

tmp_frame = sw_frame;

}

else

tmp_frame = frame;

if (!firstTime)

{

firstTime = true;

if (SDL_Init(SDL_INIT_VIDEO | SDL_INIT_TIMER)) {

printf("Could not initialize SDL - %s\n", SDL_GetError());

return -1;

}

//SDL 2.0 Support for multiple windows

screen_w = tmp_frame->width;

screen_h = tmp_frame->height;

screen = SDL_CreateWindow("Simplest ffmpeg player's Window", SDL_WINDOWPOS_UNDEFINED, SDL_WINDOWPOS_UNDEFINED,

screen_w, screen_h, SDL_WINDOW_OPENGL);

if (screen == nullptr) {

printf("SDL: could not create window - exiting:%s\n", SDL_GetError());

return -1;

}

sdlRenderer = SDL_CreateRenderer(screen, -1, 0);

if (sdlRenderer == nullptr)

{

printf("SDL: could not create Renderer - exiting:%s\n", SDL_GetError());

return -1;

}

sdlTexture = SDL_CreateTexture(sdlRenderer, SDL_PIXELFORMAT_IYUV, SDL_TEXTUREACCESS_STREAMING, tmp_frame->width, tmp_frame->height);

if (sdlTexture == nullptr)

{

printf("SDL: could not create Texture - exiting:%s\n", SDL_GetError());

return -1;

}

numbytes_yuv = av_image_get_buffer_size(AV_PIX_FMT_YUV420P, tmp_frame->width, tmp_frame->height, 1);

buffer_yuv = (uint8_t*)av_malloc(numbytes_yuv * sizeof(uint8_t));

if (buffer_yuv == nullptr)

{

printf("av_malloc buffer_yuv failed\n");

return -1;

}

ret_yuv = av_image_fill_arrays(pframe_yuv->data, pframe_yuv->linesize, buffer_yuv,

AV_PIX_FMT_YUV420P, tmp_frame->width, tmp_frame->height, 1);

nv12_to_yuv_ctx = sws_getContext(tmp_frame->width, tmp_frame->height, static_cast<AVPixelFormat>(tmp_frame->format),

tmp_frame->width, tmp_frame->height, AV_PIX_FMT_YUV420P,

SWS_BICUBIC, nullptr, nullptr, nullptr);

if (nv12_to_yuv_ctx == nullptr)

{

printf("sws_getContext nv12_to_yuv_ctx failed\n");

return -1;

}

}

//size = av_image_get_buffer_size(static_cast<AVPixelFormat>(tmp_frame->format), tmp_frame->width,

// tmp_frame->height, 1);

//buffer = (uint8_t *)av_malloc(size);

//if (!buffer) {

// fprintf(stderr, "Can not alloc buffer\n");

// ret = AVERROR(ENOMEM);

// goto fail;

//}

//ret = av_image_copy_to_buffer(buffer, size,

// (const uint8_t * const *)tmp_frame->data,

// (const int *)tmp_frame->linesize, static_cast<AVPixelFormat>(tmp_frame->format),

// tmp_frame->width, tmp_frame->height, 1);

//if (ret < 0) {

// fprintf(stderr, "Can not copy image to buffer\n");

// goto fail;

//}

//if ((ret = fwrite(buffer, 1, size, output_file)) < 0) {

// fprintf(stderr, "Failed to dump raw data.\n");

// goto fail;

//}

ret = sws_scale(nv12_to_yuv_ctx, tmp_frame->data, tmp_frame->linesize,

0, tmp_frame->height, pframe_yuv->data, pframe_yuv->linesize);

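// The IYUV texture is planar Y/U/V: pass each plane with its own pitch instead of relying on the planes being packed back to back in buffer_yuv.

SDL_UpdateYUVTexture(sdlTexture, nullptr, pframe_yuv->data[0], pframe_yuv->linesize[0], pframe_yuv->data[1], pframe_yuv->linesize[1], pframe_yuv->data[2], pframe_yuv->linesize[2]);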

SDL_RenderClear(sdlRenderer);

SDL_RenderCopy(sdlRenderer, sdlTexture, nullptr, nullptr);

SDL_RenderPresent(sdlRenderer);

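// Fixed 20 ms delay, i.e. roughly 50 fps; it is not derived from the stream's actual frame rate.

SDL_Delay(20);

// Process pending window events so the window stays responsive (otherwise Windows may mark it "Not Responding").

SDL_PumpEvents();

SDL_ShowWindow(screen);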

fail:

//av_frame_free(&frame);

//av_frame_free(&sw_frame);

//av_freep(&buffer);

if (ret < 0)

return ret;

}

}

int main(int argc, char *argv[])

{

AVFormatContext *input_ctx = NULL;

int video_stream, ret;

AVStream *video = NULL;

AVCodecContext *decoder_ctx = NULL;

AVCodec *decoder = NULL;

AVPacket packet;

enum AVHWDeviceType type = AV_HWDEVICE_TYPE_NONE;

int i;

if (argc < 3) {

fprintf(stderr, "Usage: %s <device type> <input file>\n", argv[0]);

return -1;

}

type = av_hwdevice_find_type_by_name(argv[1]);

if (type == AV_HWDEVICE_TYPE_NONE) {

fprintf(stderr, "Device type %s is not supported.\n", argv[1]);

fprintf(stderr, "Available device types:");

while ((type = av_hwdevice_iterate_types(type)) != AV_HWDEVICE_TYPE_NONE)

fprintf(stderr, " %s", av_hwdevice_get_type_name(type));

fprintf(stderr, "\n");

return -1;

}

/* open the input file */

if (avformat_open_input(&input_ctx, argv[2], NULL, NULL) != 0) {

fprintf(stderr, "Cannot open input file '%s'\n", argv[2]);

return -1;

}

if (avformat_find_stream_info(input_ctx, NULL) < 0) {

fprintf(stderr, "Cannot find input stream information.\n");

return -1;

}

/* find the video stream information */

ret = av_find_best_stream(input_ctx, AVMEDIA_TYPE_VIDEO, -1, -1, &decoder, 0);

if (ret < 0) {

fprintf(stderr, "Cannot find a video stream in the input file\n");

return -1;

}

video_stream = ret;

for (i = 0;; i++) {

const AVCodecHWConfig *config = avcodec_get_hw_config(decoder, i);

if (!config) {

fprintf(stderr, "Decoder %s does not support device type %s.\n",

decoder->name, av_hwdevice_get_type_name(type));

return -1;

}

if (config->methods & AV_CODEC_HW_CONFIG_METHOD_HW_DEVICE_CTX &&

config->device_type == type) {

hw_pix_fmt = config->pix_fmt;

break;

}

}

if (!(decoder_ctx = avcodec_alloc_context3(decoder)))

return AVERROR(ENOMEM);

video = input_ctx->streams[video_stream];

if (avcodec_parameters_to_context(decoder_ctx, video->codecpar) < 0)

return -1;

decoder_ctx->get_format = get_hw_format;

if (hw_decoder_init(decoder_ctx, type) < 0)

return -1;

decoder_ctx->thread_count = 2;

decoder_ctx->thread_type = FF_THREAD_FRAME;

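// Most of these keys are not decoder options. probesize, fflags, max_delay and rtsp_transport belong to the demuxer (avformat_open_input), and preset/tune are encoder options (e.g. libx264), so they do not speed up decoding here. avcodec_open2() leaves unused entries in pOptions.

AVDictionary *pOptions = nullptr;

av_dict_set(&pOptions, "max_delay", "100", 0);

av_dict_set(&pOptions, "preset", "ultrafast", 0);

av_dict_set(&pOptions, "tune", "zerolatency", 0);

av_dict_set(&pOptions, "probesize", "1024", 0);

av_dict_set(&pOptions, "max_analyze_duration", "10", 0);

av_dict_set(&pOptions, "fflags", "nobuffer", 0);

av_dict_set(&pOptions, "start_time_realtime", 0, 0);

av_dict_set(&pOptions, "rtsp_transport", "tcp", 0);

ret = avcodec_open2(decoder_ctx, decoder, &pOptions);

// Free whatever entries the codec did not consume.

av_dict_free(&pOptions);

if (ret < 0) {

fprintf(stderr, "Failed to open codec for stream #%d\n", video_stream);

return -1;

}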

frame = av_frame_alloc();

if (frame == nullptr)

{

fprintf(stderr, "Failed to av_frame_alloc for frame \n");

return -1;

}

sw_frame = av_frame_alloc();

if (sw_frame == nullptr)

{

fprintf(stderr, "Failed to av_frame_alloc for sw_frame \n");

return -1;

}

pframe_yuv = av_frame_alloc();

if (pframe_yuv == nullptr)

{

fprintf(stderr, "Failed to av_frame_alloc for pframe_yuv \n");

return -1;

}

/* open the file to dump raw data */

//output_file = fopen(argv[3], "w+");

/* actual decoding and dump the raw data */

while (ret >= 0) {

if ((ret = av_read_frame(input_ctx, &packet)) < 0)

break;

if (video_stream == packet.stream_index)

ret = decode_write(decoder_ctx, &packet);

av_packet_unref(&packet);

}

/* flush the decoder */

packet.data = NULL;

packet.size = 0;

ret = decode_write(decoder_ctx, &packet);

av_packet_unref(&packet);

//if (output_file)

// fclose(output_file);

avcodec_free_context(&decoder_ctx);

avformat_close_input(&input_ctx);

av_buffer_unref(&hw_device_ctx);

if (frame != nullptr)

{

av_frame_free(&frame);

frame = nullptr;

}

if (pframe_yuv != nullptr)

{

av_frame_free(&pframe_yuv);

pframe_yuv = nullptr;

}

if (sw_frame != nullptr)

{

av_frame_free(&sw_frame);

sw_frame = nullptr;

}

if (buffer_yuv != nullptr)

{

av_freep(&buffer_yuv);

buffer_yuv = nullptr;

}

if (nv12_to_yuv_ctx != nullptr)

{

sws_freeContext(nv12_to_yuv_ctx);

nv12_to_yuv_ctx = nullptr;

}

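if (sdlTexture != nullptr)

SDL_DestroyTexture(sdlTexture);

if (sdlRenderer != nullptr)

SDL_DestroyRenderer(sdlRenderer);

if (screen != nullptr)

SDL_DestroyWindow(screen);

SDL_Quit();

return 0;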

}
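The loop above paces playback with a fixed SDL_Delay(20), which corresponds to about 50 frames per second whatever the file's real rate is.

This is a minimal sketch of deriving the delay from the stream's frame rate instead. It assumes the rate is available as a num/den pair; in FFmpeg it could come from av_guess_frame_rate() or the stream's avg_frame_rate, but that wiring is not shown. The helper name frame_delay_ms is hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper: milliseconds to wait between frames for a frame rate
// given as the rational num/den (e.g. 25/1 or 30000/1001).
// Falls back to the original fixed 20 ms when the rate is unknown.
uint32_t frame_delay_ms(int num, int den)
{
    if (num <= 0 || den <= 0)
        return 20;
    // One frame lasts den/num seconds; round to the nearest millisecond.
    const int64_t ms = (1000LL * den + num / 2) / num;
    assert(ms >= 0);
    return static_cast<uint32_t>(ms);
}
```

For 25 fps this returns 40 ms, and for 30000/1001 (about 29.97 fps) it returns 33 ms. A constant per-frame delay still ignores decode and render time. Syncing each frame to its pts is the more accurate approach.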