Flume installation and configuration: collecting logs into Hadoop storage

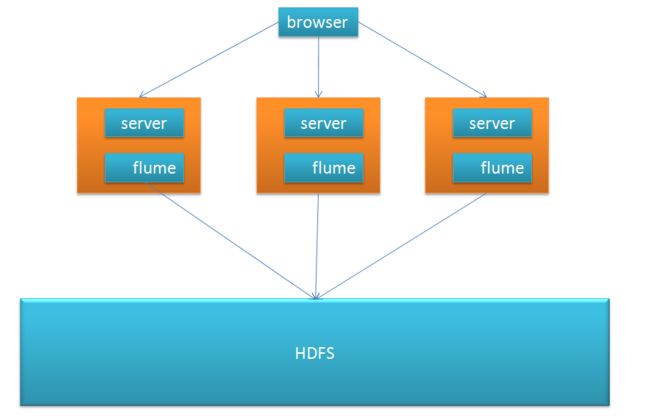

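I. Overall architecture

Flume is essentially a log-collection agent. A Flume agent is installed on each application server and collects logs in real time into the HDFS cluster for storage, so that they can later be analyzed with big-data tools such as Hive or Pig. The results can then be exported to MySQL for operations queries or for analyzing user behavior.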

II. Flume and Hadoop cluster plan (for the Hadoop cluster installation, see http://blog.csdn.net/liangjianyong007/article/details/52893234)

Cluster plan: Hive only needs to be installed on one node (hadoop3).

| Hostname | IP | Installed software | Running processes |
|---|---|---|---|
| hadoop1 | 192.168.31.10 | jdk, hadoop | NameNode, DFSZKFailoverController |
| hadoop2 | 192.168.31.20 | jdk, hadoop, hive, mysql | NameNode, DFSZKFailoverController, hive, mysql |
| hadoop3 | 192.168.31.30 | jdk, hadoop, flume | ResourceManager, flume |
| hadoop4 | 192.168.31.40 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| hadoop5 | 192.168.31.50 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |
| hadoop6 | 192.168.31.60 | jdk, hadoop, zookeeper | DataNode, NodeManager, JournalNode, QuorumPeerMain |

III. Download and install Flume

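1. Download Flume: http://archive.apache.org/dist/flume/

2. Install

tar -zxvf apache-flume-1.5.0-bin.tar.gz -C /usr/cloud/flume

# The archive unpacks to apache-flume-1.5.0-bin; rename it so that it matches FLUME_HOME below

mv /usr/cloud/flume/apache-flume-1.5.0-bin /usr/cloud/flume/apache-flume-1.5.0

vim /etc/profile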

export JAVA_HOME=/usr/cloud/java/jdk1.6.0_24

export HADOOP_HOME=/usr/cloud/hadoop/hadoop-2.2.0

export HBASE_HOME=/usr/cloud/hbase/hbase-0.96.2

export FLUME_HOME=/usr/cloud/flume/apache-flume-1.5.0

export PATH=$PATH:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$HBASE_HOME/bin:$FLUME_HOME/bin

source /etc/profile

3. Copy the hdfs-site.xml and core-site.xml of the configured Hadoop cluster into $FLUME_HOME/conf

# Copy the HDFS configuration

scp $HADOOP_HOME/etc/hadoop/core-site.xml $FLUME_HOME/conf

scp $HADOOP_HOME/etc/hadoop/hdfs-site.xml $FLUME_HOME/conf

sudo vim $FLUME_HOME/conf/flume-env.sh

# Set JAVA_HOME

JAVA_HOME=/usr/cloud/java/jdk1.6.0_24

# Copy the Hadoop jars that the HDFS sink depends on into Flume's lib directory

scp $HADOOP_HOME/share/hadoop/common/hadoop-common-2.2.0.jar $FLUME_HOME/lib/

scp $HADOOP_HOME/share/hadoop/common/lib/hadoop-auth-2.2.0.jar $FLUME_HOME/lib/

scp $HADOOP_HOME/share/hadoop/common/lib/commons-configuration-1.6.jar $FLUME_HOME/lib/
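The copy steps above are easy to get partly wrong, so a quick check can help before moving on. The following is a minimal sketch, not part of Flume: `check_flume_files` is a hypothetical helper that takes the Flume home directory and reports which of the five files copied above are missing.

```shell
#!/usr/bin/env bash
# Sketch: report which of the files copied in the steps above are missing.
# check_flume_files is a hypothetical helper, not something Flume ships.
check_flume_files() {
  local flume_home="$1"
  local missing=0
  local f
  for f in \
    conf/core-site.xml \
    conf/hdfs-site.xml \
    lib/hadoop-common-2.2.0.jar \
    lib/hadoop-auth-2.2.0.jar \
    lib/commons-configuration-1.6.jar
  do
    if [ ! -f "$flume_home/$f" ]; then
      echo "missing: $f"
      missing=$((missing + 1))
    fi
  done
  if [ "$missing" -eq 0 ]; then
    echo "all files present"
  fi
  # Exit status is the number of missing files (0 means success)
  return "$missing"
}
```

It would be called as `check_flume_files "$FLUME_HOME"`. Running `flume-ng version` afterwards is a simple way to confirm that `$FLUME_HOME/bin` is on the PATH.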

IV. Configure the Flume source, channel, and sink: create a new file flume.conf under conf

vim $FLUME_HOME/conf/flume.conf

# Define the agent name and the names of the source, channel, and sink

a4.sources = r1

a4.channels = c1

a4.sinks = k1

# Define the source

a4.sources.r1.type = spooldir

a4.sources.r1.spoolDir = /usr/cloud/flume/log

# Define the channel

a4.channels.c1.type = memory

a4.channels.c1.capacity = 10000

a4.channels.c1.transactionCapacity = 100

# Define an interceptor that adds a timestamp to each event

a4.sources.r1.interceptors = i1

a4.sources.r1.interceptors.i1.type = org.apache.flume.interceptor.TimestampInterceptor$Builder

# Define the sink

a4.sinks.k1.type = hdfs

a4.sinks.k1.hdfs.path = hdfs://ns1/flume/%Y%m%d

a4.sinks.k1.hdfs.filePrefix = events-

a4.sinks.k1.hdfs.fileType = DataStream

# Do not roll files based on the number of events

a4.sinks.k1.hdfs.rollCount = 0

# Roll to a new file when the current HDFS file reaches 128 MB

a4.sinks.k1.hdfs.rollSize = 134217728

# Roll to a new file when the current HDFS file has been open for 60 seconds

a4.sinks.k1.hdfs.rollInterval = 60

# Wire the source, channel, and sink together

a4.sources.r1.channels = c1

a4.sinks.k1.channel = c1

V. Start Flume (make sure HDFS is already running)

# Write the script start-flume.sh
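Before starting the agent, it can help to sanity-check two values from the configuration above: the `hdfs.rollSize` value, which is given in bytes, and the directory that the `%Y%m%d` escape in `hdfs.path` resolves to for a given day. The sketch below uses two hypothetical helpers, `roll_size_bytes` and `flume_day_path`; they only do the arithmetic and string formatting and do not talk to Flume or HDFS.

```shell
#!/usr/bin/env bash
# Convert a size in MB to bytes, the unit hdfs.rollSize expects.
roll_size_bytes() {
  echo $(( $1 * 1024 * 1024 ))
}

# Directory that events of a given day land in, assuming
# hdfs.path = hdfs://ns1/flume/%Y%m%d as configured above.
# The day is passed as YYYY-MM-DD so the function does not depend on date(1) options.
flume_day_path() {
  local day="$1"
  echo "hdfs://ns1/flume/$(echo "$day" | sed 's/-//g')"
}
```

For example, `roll_size_bytes 128` gives the 134217728 used for `rollSize`, and `flume_day_path "$(date +%Y-%m-%d)"` gives today's target directory. The date used by the sink comes from the timestamp header that the interceptor attaches to each event.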

vim $FLUME_HOME/bin/start-flume.sh

$FLUME_HOME/bin/flume-ng agent -n a4 -c $FLUME_HOME/conf -f $FLUME_HOME/conf/flume.conf -Dflume.root.logger=INFO,console

# Start Flume

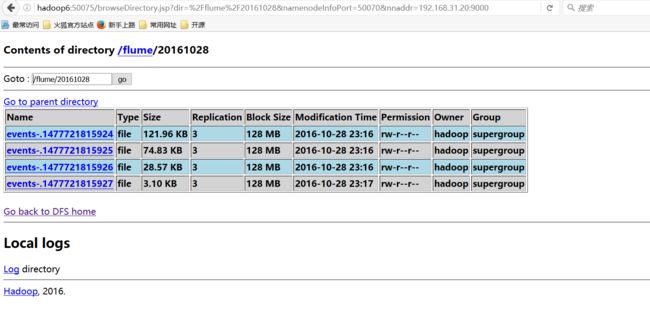

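sh $FLUME_HOME/bin/start-flume.sh

Check whether the files from the configured directory have been uploaded to HDFS.

Appendix:

Store a file named biz.log in /usr/cloud/flume/log with the following content: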
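One way to do this check from the agent's side is to look at the spool directory: by default the spooldir source renames each file it has fully ingested by appending `.COMPLETED`. The sketch below assumes that default suffix has not been changed; `pending_spool_files` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# List files in the spool directory that Flume has not finished ingesting yet.
# Assumes the spooldir source's default fileSuffix (.COMPLETED).
pending_spool_files() {
  local dir="$1"
  local f
  for f in "$dir"/*; do
    # Skip directories and the unexpanded glob of an empty directory
    [ -f "$f" ] || continue
    case "$f" in
      *.COMPLETED) ;;            # already ingested
      *) basename "$f" ;;        # still pending
    esac
  done
}
```

Calling `pending_spool_files /usr/cloud/flume/log` should print nothing once everything has been picked up. On the HDFS side, `hdfs dfs -ls /flume/$(date +%Y%m%d)` lists the files written today; a file the sink is still writing to carries a `.tmp` suffix by default until it is rolled.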

ERROR [2016-10-27 14:44:02,482] com.alibaba.scm.common.monitor.log.ScmBizLogger:30 - |<>|traceId==>UUID:3ff0f75f-0ea8-466b-9c15-b96747b8d636<||>bizType==>3000<||>T==>107<||>SUCC==>true<||>bizName==>createInboundOrder_begain<||>IN==>{"appointmentDate":"Oct 27, 2016 2:43:52 PM","sellId":3693710345,"sellName":"天猫国际012","storeCode":"STORE_230095","customsInfoNo":"ASN00105244708","purchaseOrderNo":"PO160822222226","supplierId":300000000001402,"consignOrderNo":"CO160323143157012226","abroad":false,"demandDate":"Oct 27, 2016 2:43:52 PM","saleType":2,"shipType":0,"consignOrderItemDTOList":[{"scItemId":2100771461843,"itemId":2100770298741,"quantity":100,"gmtExpired":"Oct 10, 2016 12:00:00 AM","rejectDays":10,"managerType":3,"rowVersion":0}],"bizType":3000,"rowVersion":0} <||>bizCode==>CO160323143157012226<||>CM==>InboundOrderClientImpl.createInboundOrder<||>OUT==>{"success":true,"isRetry":false}<||>

ERROR [2016-10-27 14:44:03,716] com.alibaba.scm.common.monitor.log.ScmBizLogger:30 - |<>|traceId==>UUID:eb51567f-f83a-4dfb-8d38-9b6b58d84021<||>bizType==>3000<||>T==>1359<||>SUCC==>true<||>bizName==>createInboundOrder_end<||>IN==>{"appointmentDate":"Oct 27, 2016 2:43:52 PM","sellId":3693710345,"sellName":"天猫国际012","storeCode":"STORE_230095","customsInfoNo":"ASN00105244708","purchaseOrderNo":"PO160822222226","supplierId":300000000001402,"consignOrderNo":"CO160323143157012226","abroad":false,"demandDate":"Oct 27, 2016 2:43:52 PM","saleType":2,"shipType":0,"consignOrderItemDTOList":[{"scItemId":2100771461843,"itemId":2100770298741,"quantity":100,"gmtExpired":"Oct 10, 2016 12:00:00 AM","rejectDays":10,"managerType":3,"rowVersion":0}],"bizType":3000,"rowVersion":0} <||>bizCode==>CO160323143157012226<||>CM==>InboundOrderClientImpl.createInboundOrder<||>OUT==>{"success":true,"isRetry":false,"model":{"purchaseOrderNo":"PO160822222226","inboundNo":"IO16102714440300373001","consignOrderNo":"CO160323143157012226","storeCode":"STORE_230095","supplierId":300000000001402,"sendQuantity":100,"preArrival":"Oct 27, 2016 2:43:52 PM","status":10,"demandDate":"Oct 27, 2016 2:43:52 PM","inboundItemDOList":[{"inboundNo":"IO16102714440300373001","inboundId":373001,"scItemId":2100771461843,"itemId":2100770298741,"sendQuantity":100,"status":1,"rejectDays":10,"managerType":3,"gmtExpired":"Oct 10, 2016 12:00:00 AM","storeCode":"STORE_230095","purchaseOrderNo":"PO160822222226","bizType":3000,"attribute":"MXSDUEDATE:20161010","attributeMap":{"MXSDUEDATE":"20161010"}}],"id":373001,"bizType":3000}}<||>
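For later analysis it helps to see how such a line breaks down: after the `|<>|` marker, fields are separated by `<||>`, and each field has the form `key==>value`. The following is a minimal sketch of pulling one field out with awk. `biz_field` is a hypothetical helper, and the parsing rules are inferred only from the two sample lines above.

```shell
#!/usr/bin/env bash
# Print the value of one key from ScmBizLogger-style lines read on stdin.
# Layout assumed from the samples: prefix |<>| key==>value <||> key==>value <||> ...
biz_field() {
  local key="$1"
  awk -v key="$key" '
    {
      # Drop the log prefix up to and including the |<>| marker
      sub(/^[^|]*[|]<>[|]/, "")
      n = split($0, fields, "<[|][|]>")
      for (i = 1; i <= n; i++) {
        pos = index(fields[i], "==>")
        if (pos == 0) continue
        if (substr(fields[i], 1, pos - 1) == key) {
          print substr(fields[i], pos + 3)
        }
      }
    }'
}
```

For instance, `biz_field T < biz.log` prints the `T` value of each line (107 and 1359 for the two sample lines), and `biz_field bizName < biz.log` prints the business step names.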