KUBERNETES-1-15-Dashboard Authentication and Tiered Authorization

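1. Download the manifest with wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml. Open it with vim kubernetes-dashboard.yaml and change the image to one hosted on a mirror registry in China (otherwise the image pull is very slow). Then confirm the change with cat kubernetes-dashboard.yaml | grep -i image.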

[root@master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

--2018-12-17 23:35:37-- https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.228.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.228.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 4577 (4.5K) [text/plain]

Saving to: ‘kubernetes-dashboard.yaml’

100%[=============================================================================================>] 4,577 8.59KB/s in 0.5s

2018-12-17 23:35:38 (8.59 KB/s) - ‘kubernetes-dashboard.yaml’ saved [4577/4577]

[root@master ~]# ll | grep dashboard

-rw-r--r--. 1 root root 4577 Dec 17 23:35 kubernetes-dashboard.yaml

[root@master ~]# vim kubernetes-dashboard.yaml

[root@master ~]# cat kubernetes-dashboard.yaml | grep -i image

image: registry.cn-hangzhou.aliyuncs.com/k8sth/kubernetes-dashboard-amd64:v1.10.0

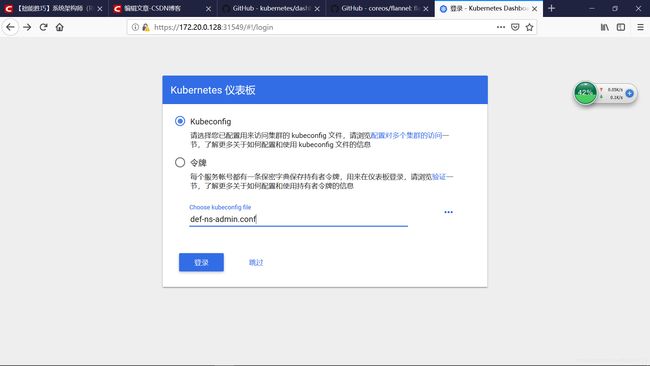
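You can also script the vim edit in step 1. Below is a minimal Python sketch. Python is used for every example here because the post itself contains only shell sessions.

- It assumes the upstream manifest references k8s.gcr.io/kubernetes-dashboard-amd64. That repository name is an assumption, so check the image line in your own copy.
- The mirror path is the one shown in the grep output above.
- MIRROR and rewrite_images are hypothetical names.

```python
import re
from typing import Dict

# Hypothetical mapping from upstream repository to mirror repository.
# The upstream name is an assumption; the mirror path is the one used above.
MIRROR: Dict[str, str] = {
    "k8s.gcr.io/kubernetes-dashboard-amd64":
        "registry.cn-hangzhou.aliyuncs.com/k8sth/kubernetes-dashboard-amd64",
}

# Matches "image: REPOSITORY[:TAG]" and keeps the leading indentation.
IMAGE_LINE = re.compile(r"^(\s*image:\s*)(\S+?)(:[\w.\-]+)?\s*$")


def rewrite_images(manifest: str, mirror: Dict[str, str]) -> str:
    """Swap image repositories listed in `mirror`, keeping tags and indentation."""
    out = []
    for line in manifest.splitlines():
        m = IMAGE_LINE.match(line)
        if m and m.group(2) in mirror:
            line = m.group(1) + mirror[m.group(2)] + (m.group(3) or "")
        out.append(line)
    return "\n".join(out) + "\n"
```

To use it, read kubernetes-dashboard.yaml, pass its text through rewrite_images(text, MIRROR), and write the result back before running kubectl apply. The regex leaves digest-pinned images (repo@sha256:...) untouched.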

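2. Create the resources with kubectl apply -f kubernetes-dashboard.yaml. Check the Pod with kubectl get pods -n kube-system | grep -i dash and the Service with kubectl get svc -n kube-system -o wide | grep -i dash. At this point the Service is of type ClusterIP, so no node port is exposed yet.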

[root@master ~]# kubectl apply -f kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

[root@master ~]# kubectl get pods -n kube-system | grep -i dash

kubernetes-dashboard-78895ccdb5-g288c 1/1 Running 0 29s

[root@master ~]# kubectl get svc -n kube-system -o wide| grep -i dash

kubernetes-dashboard ClusterIP 10.104.129.139

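3. Change the Service type to NodePort with kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kube-system. Then run kubectl get svc -n kube-system -o wide | grep -i dash again to see which node port was assigned. Open https://NODE_IP:NODE_PORT in a browser on the physical machine. The browser shows a security warning; ignore it and continue to the page.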

[root@master ~]# kubectl patch svc kubernetes-dashboard -p '{"spec":{"type":"NodePort"}}' -n kube-system

service/kubernetes-dashboard patched

[root@master ~]# kubectl get svc -n kube-system -o wide| grep -i dash

kubernetes-dashboard NodePort 10.104.129.139

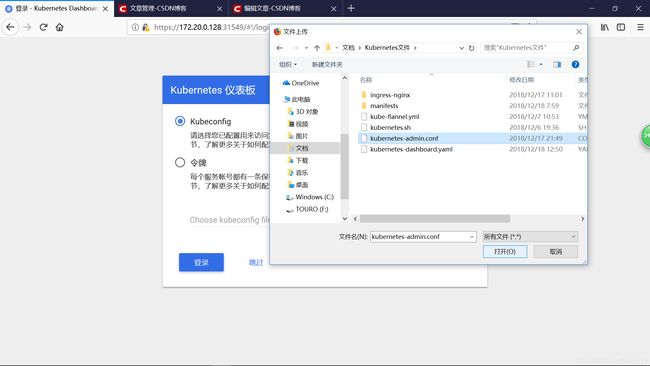

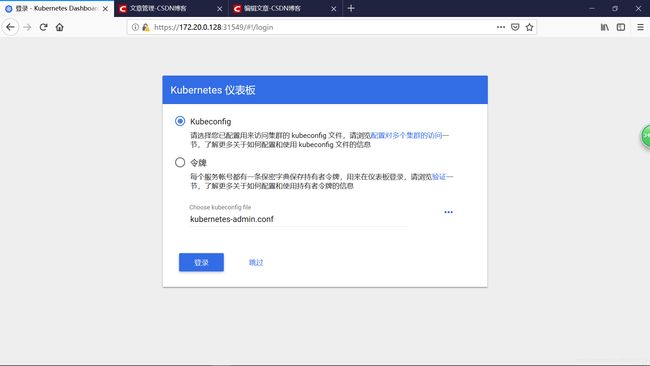

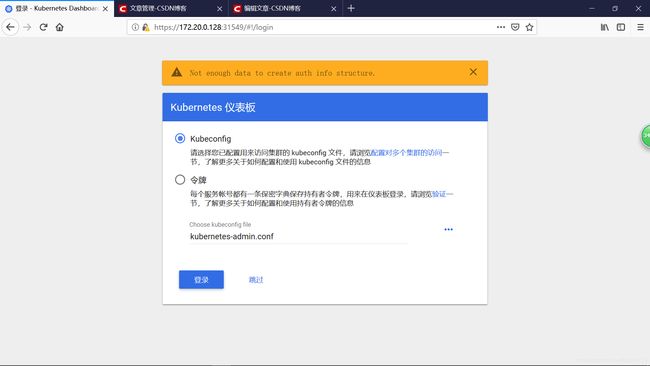
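The patch in step 3 and the resulting URL can be expressed as a short Python sketch. The Service dict mirrors what kubectl get svc kubernetes-dashboard -n kube-system -o json returns.

The example values are assumptions, because the actual port is cut off in the output above:

- nodePort 30443 is made up.
- The master address 172.20.0.128 (taken from step 12) is used as the node IP.

```python
import json


def nodeport_patch() -> str:
    """The patch body passed to `kubectl patch svc ... -p` in step 3."""
    return json.dumps({"spec": {"type": "NodePort"}}, separators=(",", ":"))


def dashboard_url(service: dict, node_ip: str) -> str:
    """Build the browser URL from a Service object as printed by
    `kubectl get svc kubernetes-dashboard -n kube-system -o json`."""
    spec = service["spec"]
    if spec.get("type") != "NodePort":
        raise ValueError("Service is not of type NodePort yet")
    # The Dashboard serves HTTPS, hence the certificate warning in the browser.
    node_port = spec["ports"][0]["nodePort"]
    return f"https://{node_ip}:{node_port}/"
```

Any node's IP works here, because a NodePort Service listens on the same port on every node.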

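4. Log in to the web UI from the physical machine and choose Kubeconfig authentication. The login fails because nothing has been set up on the server side for it yet. The credential presented here belongs to a user account rather than a ServiceAccount, so it cannot be authenticated.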

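5. Change to the certificate directory with cd /etc/kubernetes/pki/. Then run three openssl commands:
- Generate a private key with (umask 077; openssl genrsa -out dashboard.key 2048).
- Create a certificate signing request with openssl req -new -key dashboard.key -out dashboard.csr -subj "/O=student/CN=dashboard".
- Sign the request with the cluster CA using openssl x509 -req -in dashboard.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out dashboard.crt -days 365. This produces the certificate dashboard.crt, valid for 365 days.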

[root@master ~]# cd /etc/kubernetes/pki/

[root@master pki]# (umask 077; openssl genrsa -out dashboard.key 2048)

Generating RSA private key, 2048 bit long modulus

.+++

.........................................................................................................................................+++

e is 65537 (0x10001)

[root@master pki]# openssl req -new -key dashboard.key -out dashboard.csr -subj "/O=student/CN=dashboard"

[root@master pki]# ll | grep -i dash

-rw-r--r--. 1 root root 915 Dec 18 05:34 dashboard.csr

-rw-------. 1 root root 1675 Dec 18 05:33 dashboard.key

[root@master pki]# openssl x509 -req -in dashboard.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out dashboard.crt -days 365

Signature ok

subject=/O=student/CN=dashboard

Getting CA Private Key
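Suppose a certificate like dashboard.crt is presented to the API server as a client certificate. Kubernetes then uses the subject's CN as the user name and each O as a group. The Python sketch below illustrates that mapping for the subject used in step 5.

identity_from_subject is a hypothetical helper. It deliberately ignores escaped slashes and multi-valued RDNs.

```python
from typing import Dict, List, Optional


def identity_from_subject(subject: str) -> Dict[str, object]:
    """Map an OpenSSL-style subject such as "/O=student/CN=dashboard"
    to the identity the API server derives from a client certificate:
    CN -> user name, every O -> one group."""
    # Accept both the -subj form and the "subject=..." line printed by openssl x509.
    if subject.startswith("subject="):
        subject = subject.split("=", 1)[1]
    user: Optional[str] = None
    groups: List[str] = []
    for part in subject.strip("/").split("/"):
        key, _, value = part.partition("=")
        if key == "CN":
            user = value
        elif key == "O":
            groups.append(value)
    return {"user": user, "groups": groups}
```

For the certificate above, this yields user "dashboard" in group "student".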

6. Store the certificate and key in a Secret with kubectl create secret generic dashboard-cert -n kube-system --from-file=dashboard.crt=./dashboard.crt --from-file=dashboard.key=./dashboard.key. Then list it with kubectl get secret -n kube-system | grep -i dash.

[root@master pki]# kubectl create secret generic dashboard-cert -n kube-system --from-file=dashboard.crt=./dashboard.crt --from-file=dashboard.key=./dashboard.key

secret/dashboard-cert created

[root@master pki]# kubectl get secret -n kube-system | grep -i dash

dashboard-cert Opaque 2 29s

kubernetes-dashboard-certs Opaque 0 5h

kubernetes-dashboard-key-holder Opaque 2 5h

kubernetes-dashboard-token-skwnj kubernetes.io/service-account-token 3 5h
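kubectl create secret generic --from-file=KEY=PATH stores each file's bytes base64-encoded under .data.KEY. That is why the DATA column shows 2 for dashboard-cert.

Here is a Python sketch of the equivalent Secret object. generic_secret is a hypothetical helper. Its result could be dumped to JSON and piped to kubectl apply -f -.

```python
import base64
from typing import Dict


def generic_secret(name: str, namespace: str, files: Dict[str, bytes]) -> dict:
    """Build the Secret object that `kubectl create secret generic
    --from-file=KEY=PATH` produces: one data key per file, base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {key: base64.b64encode(content).decode("ascii")
                 for key, content in files.items()},
    }
```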

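7. Download the flannel manifest with wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml. Inspect it with cat kube-flannel.yml, then apply it with kubectl apply -f kube-flannel.yml. Every object reports configured or unchanged, which means flannel was already running on this cluster.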

[root@master manifests]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

--2018-12-18 05:52:09-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.108.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 10599 (10K) [text/plain]

Saving to: ‘kube-flannel.yml’

100%[=============================================================================================>] 10,599 --.-K/s in 0.004s

2018-12-18 05:52:10 (2.59 MB/s) - ‘kube-flannel.yml’ saved [10599/10599]

[root@master manifests]# cat kube-flannel.yml

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: arm
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: ppc64le
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-s390x
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: s390x
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.10.0-s390x
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

[root@master manifests]# kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel configured

clusterrolebinding.rbac.authorization.k8s.io/flannel configured

serviceaccount/flannel unchanged

configmap/kube-flannel-cfg unchanged

daemonset.extensions/kube-flannel-ds-amd64 unchanged

daemonset.extensions/kube-flannel-ds-arm64 unchanged

daemonset.extensions/kube-flannel-ds-arm unchanged

daemonset.extensions/kube-flannel-ds-ppc64le unchanged

daemonset.extensions/kube-flannel-ds-s390x unchanged
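The net-conf.json in the flannel ConfigMap defines the whole Pod network. Flannel runs with --kube-subnet-mgr, so each node gets its own slice of that network. This sketch assumes each slice is a /24, which is the kube-controller-manager default --node-cidr-mask-size.

The Python sketch below shows how 10.244.0.0/16 divides into per-node subnets. node_subnets is a hypothetical helper.

```python
import ipaddress
import json
from typing import List

# net-conf.json from the kube-flannel-cfg ConfigMap above.
NET_CONF = '{"Network": "10.244.0.0/16", "Backend": {"Type": "vxlan"}}'


def node_subnets(net_conf: str, prefix: int = 24) -> List[ipaddress.IPv4Network]:
    """Split the flannel Network into per-node subnets of size /prefix."""
    network = ipaddress.ip_network(json.loads(net_conf)["Network"])
    return list(network.subnets(new_prefix=prefix))
```

Under that assumption there are 256 node subnets, from 10.244.0.0/24 to 10.244.255.0/24.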

8. Create a ServiceAccount with kubectl create serviceaccount dashboard-admin -n kube-system, and confirm it exists with kubectl get sa -n kube-system | grep -i dashboard.

[root@master pki]# kubectl create serviceaccount dashboard-admin -n kube-system

serviceaccount/dashboard-admin created

[root@master pki]# kubectl get sa -n kube-system | grep -i dashboard

dashboard-admin 1 1m

kubernetes-dashboard 1 5h
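A ServiceAccount appears in two spellings in the steps that follow:

- NAMESPACE:NAME in the --serviceaccount flag.
- system:serviceaccount:NAMESPACE:NAME as the user name the API server assigns to requests carrying its token. The same string appears as the sub claim of the token in step 9.

Here is a small Python sketch converting between them. Both function names are hypothetical.

```python
from typing import Tuple


def parse_sa_flag(value: str) -> Tuple[str, str]:
    """Split the NAMESPACE:NAME argument of --serviceaccount."""
    namespace, sep, name = value.partition(":")
    if not sep or not namespace or not name:
        raise ValueError(f"expected NAMESPACE:NAME, got {value!r}")
    return namespace, name


def sa_username(namespace: str, name: str) -> str:
    """User name the API server assigns to this ServiceAccount's token."""
    return f"system:serviceaccount:{namespace}:{name}"
```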

9. Bind the ServiceAccount to the cluster-admin ClusterRole with kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin. Find its token Secret (dashboard-admin-token-4slqf) with kubectl get secret -n kube-system | grep -i dash. Then print the token with kubectl describe secret dashboard-admin-token-4slqf -n kube-system | grep token -A8.

[root@master pki]# kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

clusterrolebinding.rbac.authorization.k8s.io/dashboard-cluster-admin created

[root@master pki]# kubectl get secret -n kube-system | grep -i dash

dashboard-admin-token-4slqf kubernetes.io/service-account-token 3 3m

dashboard-cert Opaque 2 22m

kubernetes-dashboard-certs Opaque 0 5h

kubernetes-dashboard-key-holder Opaque 2 5h

kubernetes-dashboard-token-skwnj kubernetes.io/service-account-token 3 5h

[root@master pki]# kubectl describe secret dashboard-admin-token-4slqf -n kube-system | grep token -A8

Name: dashboard-admin-token-4slqf

Namespace: kube-system

Labels:

Annotations: kubernetes.io/service-account.name=dashboard-admin

kubernetes.io/service-account.uid=ab124099-02b3-11e9-b463-000c290c9b7a

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tNHNscWYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiYWIxMjQwOTktMDJiMy0xMWU5LWI0NjMtMDAwYzI5MGM5YjdhIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.PLO7qw_MmwXSMZFiJjE-RSNP1MIzrBH5_XnY3a01t9H7-DEG66rENdXpNzODaTXa-zwt1XYOIpoBiD3wGG2HMM75vuizsMoylUJz7XmG41yhF0b1QL8I5SPc0-7GCqM8jFWQWBnXUV8Z2-4zt34uRt6OxnofZ428cURezrsk60JUzBc8wctthDmMmAon7Itb3xxuPhF9atyDgOrIT7Ko39foZ7Kxkzc-mUt15j2XsYG2XLLRQQf_PFTih4tSODEKApKuEouhpEgovEdYaF9wqYRXOl7n17R3iQN3qXvbFLFVRDsKXoaPqtKQfjypibi9h4-pFPpv81gOvDvseLmNew

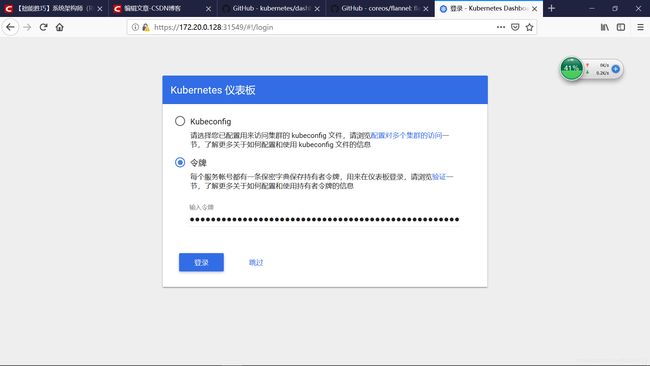
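On this cluster the token controller automatically created a Secret named SA_NAME-token-SUFFIX for each ServiceAccount. Kubernetes 1.24 and later no longer create these Secrets automatically.

Instead of reading the grep output by eye, you can pick the Secret programmatically. Below is a Python sketch over the plain kubectl get secret table. find_token_secret is a hypothetical helper.

```python
from typing import Optional


def find_token_secret(get_secret_output: str, sa_name: str) -> Optional[str]:
    """Return the auto-generated token Secret name for `sa_name` from
    plain `kubectl get secret` output (NAME TYPE DATA AGE columns)."""
    prefix = f"{sa_name}-token-"
    for line in get_secret_output.splitlines():
        cols = line.split()
        if (len(cols) >= 2 and cols[0].startswith(prefix)
                and cols[1] == "kubernetes.io/service-account-token"):
            return cols[0]
    return None
```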

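10. Confirm that the kubernetes-dashboard Service is up with kubectl get svc -o wide -n kube-system. Return to the login page, choose Token, and paste the token obtained above. Once logged in, the Dashboard can manage resources in every namespace.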

[root@master pki]# kubectl get svc -o wide -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kube-dns ClusterIP 10.96.0.10

kubernetes-dashboard NodePort 10.104.129.139
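The token pasted into the login page is a JWT. Its middle segment is base64url-encoded JSON that names the ServiceAccount.

Below is a minimal Python sketch that decodes this segment without verifying the signature. Use it for inspection only; the API server does the real verification. jwt_claims is a hypothetical helper.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode the payload (second segment) of a JWT without verifying it."""
    try:
        payload = token.split(".")[1]
    except IndexError:
        raise ValueError("not a JWT: expected header.payload.signature") from None
    payload += "=" * (-len(payload) % 4)  # JWTs strip base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))
```

Applied to the dashboard-admin token from step 9, jwt_claims(token)["sub"] should give system:serviceaccount:kube-system:dashboard-admin.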

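11. Create a ServiceAccount in the default namespace with kubectl create serviceaccount def-ns-admin -n default. Then bind it to the admin ClusterRole with kubectl create rolebinding def-ns-admin --clusterrole=admin --serviceaccount=default:def-ns-admin. Because this is a RoleBinding, those permissions apply only in the default namespace. Find the token Secret with kubectl get secret -o wide | grep -i def-ns and view the token with kubectl describe secret def-ns-admin-token-sm7bd. When you log in with this token, you can manage resources only in the default namespace.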

[root@master pki]# kubectl create serviceaccount def-ns-admin -n default

serviceaccount/def-ns-admin created

[root@master pki]# kubectl create rolebinding def-ns-admin --clusterrole=admin --serviceaccount=default:def-ns-admin

rolebinding.rbac.authorization.k8s.io/def-ns-admin created

[root@master pki]# kubectl get secret -o wide | grep -i def-ns

def-ns-admin-token-sm7bd kubernetes.io/service-account-token 3 47m

[root@master pki]# kubectl describe secret def-ns-admin-token-sm7bd

Name: def-ns-admin-token-sm7bd

Namespace: default

Labels:

Annotations: kubernetes.io/service-account.name=def-ns-admin

kubernetes.io/service-account.uid=68f86bbb-02b5-11e9-b463-000c290c9b7a

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi1zbTdiZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2OGY4NmJiYi0wMmI1LTExZTktYjQ2My0wMDBjMjkwYzliN2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.O5y3ot8I4c7AiaxcyOQtPyzWVKlD4t2srJNfvFYJohNveJPel1bbaXTVOoe3hNH6-y_zn0HNGV_QRv04csi-DX9TH3vnGFcNMjlzuSMDCNzCeYhRkF297kbsSURjQ_d1iNcuYFx9X0UkEcWp9z6F1CGmbHg-x1bLWYFz22YPC1mivkh7S8bH0psDAvlCBYXfaM7pzETw_e4gbu9JQe_W9k_UDTUdYts3MC2-Ix1SGqEWp_dHaLHG_D5ZTL0zmZ1K5SfnMAVdSKYfkrH3DHXH2faN1sT7GtfcZNO16wqWJaPAcYD_KUHFqNLjkoSp2IEEbrP0Y64gZYcLJmFUozuohg

ca.crt: 1025 bytes

namespace: 7 bytes
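The difference between steps 9 and 11 is the binding kind, not the role: a ClusterRoleBinding grants its role in every namespace, while a RoleBinding grants it only in its own namespace.

Below is a simplified Python model of the two objects the kubectl commands create. It ignores the role's actual rules and verbs. binding and applies_in are hypothetical helpers.

```python
from typing import Dict


def binding(kind: str, name: str, role: str, sa: str, namespace: str = "") -> Dict:
    """RBAC object created by `kubectl create clusterrolebinding` (kind=
    "ClusterRoleBinding") or `kubectl create rolebinding` (kind="RoleBinding")
    with --clusterrole=ROLE --serviceaccount=NS:NAME."""
    sa_ns, sa_name = sa.split(":", 1)
    meta: Dict[str, str] = {"name": name}
    if kind == "RoleBinding":
        meta["namespace"] = namespace
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": kind,
        "metadata": meta,
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "ClusterRole", "name": role},
        "subjects": [{"kind": "ServiceAccount", "name": sa_name,
                      "namespace": sa_ns}],
    }


def applies_in(b: Dict, namespace: str) -> bool:
    """Scope check only: cluster-wide for ClusterRoleBinding, own namespace for RoleBinding."""
    if b["kind"] == "ClusterRoleBinding":
        return True
    return b["metadata"]["namespace"] == namespace
```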

12. Write the cluster entry (API server address and CA certificate) into a new kubeconfig file with kubectl config set-cluster kubernetes --certificate-authority=./ca.crt --server="https://172.20.0.128:6443" --embed-certs=true --kubeconfig=/root/def-ns-admin.conf. View it with kubectl config view --kubeconfig=/root/def-ns-admin.conf.

[root@master pki]# kubectl config set-cluster kubernetes --certificate-authority=./ca.crt --server="https://172.20.0.128:6443" --embed-certs=true --kubeconfig=/root/def-ns-admin.conf

Cluster "kubernetes" set.

[root@master pki]# kubectl config view --kubeconfig=/root/def-ns-admin.conf

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: REDACTED
    server: https://172.20.0.128:6443
  name: kubernetes
contexts: []
current-context: ""
kind: Config
preferences: {}
users: []
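With --embed-certs=true, the CA file is not referenced by path. Its bytes are stored base64-encoded in certificate-authority-data, so the kubeconfig can be copied to another machine on its own.

Below is a Python sketch of that cluster entry. cluster_entry is a hypothetical helper.

```python
import base64
from typing import Dict


def cluster_entry(name: str, server: str, ca_pem: bytes) -> Dict:
    """Kubeconfig `clusters` item as written by `kubectl config set-cluster
    --embed-certs=true`: CA bytes inline instead of a file path."""
    return {
        "name": name,
        "cluster": {
            "server": server,
            "certificate-authority-data": base64.b64encode(ca_pem).decode("ascii"),
        },
    }
```

kubectl config view prints REDACTED for this field; adding --raw shows the encoded value.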

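13. Find the Secret with kubectl get secret | grep def-ns. Print its token field with kubectl get secret def-ns-admin-token-sm7bd -o json | grep \"token\" -A1. In the JSON output the token is base64-encoded, so decode it with echo ENCODED_TOKEN | base64 -d. Store the decoded value in a variable: DEF_NS_ADMIN_TOKEN=DECODED_TOKEN.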

[root@master pki]# kubectl get secret | grep def-ns

def-ns-admin-token-sm7bd kubernetes.io/service-account-token 3 1h

[root@master pki]# kubectl get secret def-ns-admin-token-sm7bd -o json | grep \"token\" -A1

"token": "ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklpSjkuZXlKcGMzTWlPaUpyZFdKbGNtNWxkR1Z6TDNObGNuWnBZMlZoWTJOdmRXNTBJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5dVlXMWxjM0JoWTJVaU9pSmtaV1poZFd4MElpd2lhM1ZpWlhKdVpYUmxjeTVwYnk5elpYSjJhV05sWVdOamIzVnVkQzl6WldOeVpYUXVibUZ0WlNJNkltUmxaaTF1Y3kxaFpHMXBiaTEwYjJ0bGJpMXpiVGRpWkNJc0ltdDFZbVZ5Ym1WMFpYTXVhVzh2YzJWeWRtbGpaV0ZqWTI5MWJuUXZjMlZ5ZG1salpTMWhZMk52ZFc1MExtNWhiV1VpT2lKa1pXWXRibk10WVdSdGFXNGlMQ0pyZFdKbGNtNWxkR1Z6TG1sdkwzTmxjblpwWTJWaFkyTnZkVzUwTDNObGNuWnBZMlV0WVdOamIzVnVkQzUxYVdRaU9pSTJPR1k0Tm1KaVlpMHdNbUkxTFRFeFpUa3RZalEyTXkwd01EQmpNamt3WXpsaU4yRWlMQ0p6ZFdJaU9pSnplWE4wWlcwNmMyVnlkbWxqWldGalkyOTFiblE2WkdWbVlYVnNkRHBrWldZdGJuTXRZV1J0YVc0aWZRLk81eTNvdDhJNGM3QWlheGN5T1F0UHl6V1ZLbEQ0dDJzckpOZnZGWUpvaE52ZUpQZWwxYmJhWFRWT29lM2hOSDYteV96bjBITkdWX1FSdjA0Y3NpLURYOVRIM3ZuR0ZjTk1qbHp1U01EQ056Q2VZaFJrRjI5N2tic1NVUmpRX2QxaU5jdVlGeDlYMFVrRWNXcDl6NkYxQ0dtYkhnLXgxYkxXWUZ6MjJZUEMxbWl2a2g3UzhiSDBwc0RBdmxDQllYZmFNN3B6RVR3X2U0Z2J1OUpRZV9XOWtfVURUVWRZdHMzTUMyLUl4MVNHcUVXcF9kSGFMSEdfRDVaVEwwem1aMUs1U2ZuTUFWZFNLWWZrckgzREhYSDJmYU4xc1Q3R3RmY1pOTzE2d3FXSmFQQWNZRF9LVUhGcU5MamtvU3AySUVFYnJQMFk2NGdaWWNMSm1GVW96dW9oZw=="

},

[root@master pki]# echo ZXlKaGJHY2lPaUpTVXpJMU5pSXNJbXRwWkNJNklpSjkuZXlKcGMzTWlPaUpyZFdKbGNtNWxkR1Z6TDNObGNuWnBZMlZoWTJOdmRXNTBJaXdpYTNWaVpYSnVaWFJsY3k1cGJ5OXpaWEoyYVdObFlXTmpiM1Z1ZEM5dVlXMWxjM0JoWTJVaU9pSmtaV1poZFd4MElpd2lhM1ZpWlhKdVpYUmxjeTVwYnk5elpYSjJhV05sWVdOamIzVnVkQzl6WldOeVpYUXVibUZ0WlNJNkltUmxaaTF1Y3kxaFpHMXBiaTEwYjJ0bGJpMXpiVGRpWkNJc0ltdDFZbVZ5Ym1WMFpYTXVhVzh2YzJWeWRtbGpaV0ZqWTI5MWJuUXZjMlZ5ZG1salpTMWhZMk52ZFc1MExtNWhiV1VpT2lKa1pXWXRibk10WVdSdGFXNGlMQ0pyZFdKbGNtNWxkR1Z6TG1sdkwzTmxjblpwWTJWaFkyTnZkVzUwTDNObGNuWnBZMlV0WVdOamIzVnVkQzUxYVdRaU9pSTJPR1k0Tm1KaVlpMHdNbUkxTFRFeFpUa3RZalEyTXkwd01EQmpNamt3WXpsaU4yRWlMQ0p6ZFdJaU9pSnplWE4wWlcwNmMyVnlkbWxqWldGalkyOTFiblE2WkdWbVlYVnNkRHBrWldZdGJuTXRZV1J0YVc0aWZRLk81eTNvdDhJNGM3QWlheGN5T1F0UHl6V1ZLbEQ0dDJzckpOZnZGWUpvaE52ZUpQZWwxYmJhWFRWT29lM2hOSDYteV96bjBITkdWX1FSdjA0Y3NpLURYOVRIM3ZuR0ZjTk1qbHp1U01EQ056Q2VZaFJrRjI5N2tic1NVUmpRX2QxaU5jdVlGeDlYMFVrRWNXcDl6NkYxQ0dtYkhnLXgxYkxXWUZ6MjJZUEMxbWl2a2g3UzhiSDBwc0RBdmxDQllYZmFNN3B6RVR3X2U0Z2J1OUpRZV9XOWtfVURUVWRZdHMzTUMyLUl4MVNHcUVXcF9kSGFMSEdfRDVaVEwwem1aMUs1U2ZuTUFWZFNLWWZrckgzREhYSDJmYU4xc1Q3R3RmY1pOTzE2d3FXSmFQQWNZRF9LVUhGcU5MamtvU3AySUVFYnJQMFk2NGdaWWNMSm1GVW96dW9oZw== | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi1zbTdiZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2OGY4NmJiYi0wMmI1LTExZTktYjQ2My0wMDBjMjkwYzliN2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.O5y3ot8I4c7AiaxcyOQtPyzWVKlD4t2srJNfvFYJohNveJPel1bbaXTVOoe3hNH6-y_zn0HNGV_QRv04csi-DX9TH3vnGFcNMjlzuSMDCNzCeYhRkF297kbsSURjQ_d1iNcuYFx9X0UkEcWp9z6F1CGmbHg-x1bLWYFz22YPC1mivkh7S8bH0psDAvlCBYXfaM7pzETw_e4gbu9JQe_W9k_UDTUdYts3MC2-Ix1SGqEWp_dHaLHG_D5ZTL0zmZ1K5SfnMAVdSKYfkrH3DHXH2faN1sT7GtfcZNO16wqWJaPAcYD_KUHFqNLjkoSp2IEEbrP0Y64gZYcLJmFUozuohg

[root@master pki]# DEF_NS_ADMIN_TOKEN=eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi1zbTdiZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2OGY4NmJiYi0wMmI1LTExZTktYjQ2My0wMDBjMjkwYzliN2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.O5y3ot8I4c7AiaxcyOQtPyzWVKlD4t2srJNfvFYJohNveJPel1bbaXTVOoe3hNH6-y_zn0HNGV_QRv04csi-DX9TH3vnGFcNMjlzuSMDCNzCeYhRkF297kbsSURjQ_d1iNcuYFx9X0UkEcWp9z6F1CGmbHg-x1bLWYFz22YPC1mivkh7S8bH0psDAvlCBYXfaM7pzETw_e4gbu9JQe_W9k_UDTUdYts3MC2-Ix1SGqEWp_dHaLHG_D5ZTL0zmZ1K5SfnMAVdSKYfkrH3DHXH2faN1sT7GtfcZNO16wqWJaPAcYD_KUHFqNLjkoSp2IEEbrP0Y64gZYcLJmFUozuohg
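The grep-and-copy in step 13 can be replaced by parsing the Secret JSON directly. Below is a Python sketch; token_from_secret_json is a hypothetical helper.

```python
import base64
import json


def token_from_secret_json(secret_json: str) -> str:
    """Return the usable bearer token from `kubectl get secret NAME -o json`.
    Values under .data are base64-encoded, hence the `base64 -d` in step 13."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"]["token"]).decode("utf-8")
```

A shell equivalent that avoids copying the long string by hand is kubectl get secret def-ns-admin-token-sm7bd -o jsonpath='{.data.token}' | base64 -d.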

14. Add the user credentials to the file with kubectl config set-credentials def-ns-admin --token=$DEF_NS_ADMIN_TOKEN --kubeconfig=/root/def-ns-admin.conf. View the configuration with kubectl config view --kubeconfig=/root/def-ns-admin.conf.

[root@master pki]# kubectl config set-credentials def-ns-admin --token=$DEF_NS_ADMIN_TOKEN --kubeconfig=/root/def-ns-admin.conf

User "def-ns-admin" set.

[root@master pki]# kubectl config view --kubeconfig=/root/def-ns-admin.conf

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: REDACTED
    server: https://172.20.0.128:6443
  name: kubernetes
contexts: []
current-context: ""
kind: Config
preferences: {}
users:
- name: def-ns-admin
  user:
    token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImRlZi1ucy1hZG1pbi10b2tlbi1zbTdiZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJkZWYtbnMtYWRtaW4iLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI2OGY4NmJiYi0wMmI1LTExZTktYjQ2My0wMDBjMjkwYzliN2EiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6ZGVmYXVsdDpkZWYtbnMtYWRtaW4ifQ.O5y3ot8I4c7AiaxcyOQtPyzWVKlD4t2srJNfvFYJohNveJPel1bbaXTVOoe3hNH6-y_zn0HNGV_QRv04csi-DX9TH3vnGFcNMjlzuSMDCNzCeYhRkF297kbsSURjQ_d1iNcuYFx9X0UkEcWp9z6F1CGmbHg-x1bLWYFz22YPC1mivkh7S8bH0psDAvlCBYXfaM7pzETw_e4gbu9JQe_W9k_UDTUdYts3MC2-Ix1SGqEWp_dHaLHG_D5ZTL0zmZ1K5SfnMAVdSKYfkrH3DHXH2faN1sT7GtfcZNO16wqWJaPAcYD_KUHFqNLjkoSp2IEEbrP0Y64gZYcLJmFUozuohg
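A common mistake at this step is passing the still-base64-encoded Secret value to --token instead of the decoded JWT. The encoded value contains no dots, because "." is not in the base64 alphabet. A decoded token has three dot-separated parts whose first part decodes to a JSON header.

Here is a heuristic Python check; looks_like_jwt is a hypothetical helper.

```python
import base64
import binascii
import json


def looks_like_jwt(value: str) -> bool:
    """Heuristic: True for header.payload.signature with a JSON header
    containing "alg"; False for the still-encoded Secret value."""
    parts = value.split(".")
    if len(parts) != 3:
        return False
    header = parts[0] + "=" * (-len(parts[0]) % 4)
    try:
        return "alg" in json.loads(base64.urlsafe_b64decode(header))
    except (binascii.Error, ValueError, TypeError):
        return False
```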

15. Create a context with kubectl config set-context def-ns-admin@kubernetes --cluster=kubernetes --user=def-ns-admin --kubeconfig=/root/def-ns-admin.conf. Make it the current context with kubectl config use-context def-ns-admin@kubernetes --kubeconfig=/root/def-ns-admin.conf. Check the result with kubectl config view --kubeconfig=/root/def-ns-admin.conf | grep context.

[root@master pki]# kubectl config set-context def-ns-admin@kubernetes --cluster=kubernetes --user=def-ns-admin --kubeconfig=/root/def-ns-admin.conf

Context "def-ns-admin@kubernetes" created.

[root@master pki]# kubectl config use-context def-ns-admin@kubernetes --kubeconfig=/root/def-ns-admin.conf

Switched to context "def-ns-admin@kubernetes".

[root@master pki]# kubectl config view --kubeconfig=/root/def-ns-admin.conf | grep context

contexts:

- context:

current-context: def-ns-admin@kubernetes
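Steps 12 to 15 build the kubeconfig piece by piece with kubectl config. Below is a Python sketch of the structure they end up with: a cluster, a user, a context tying the two together, and current-context pointing at it.

build_kubeconfig and write_kubeconfig are hypothetical helpers. Writing JSON rather than YAML is also an assumption. JSON is valid YAML, so the file should load as a kubeconfig, but kubectl itself writes YAML.

```python
import base64
import json


def build_kubeconfig(cluster: str, server: str, ca_pem: bytes,
                     user: str, token: str) -> dict:
    """Same structure that set-cluster, set-credentials, set-context and
    use-context produce in steps 12 to 15."""
    context = f"{user}@{cluster}"
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "preferences": {},
        "clusters": [{"name": cluster, "cluster": {
            "server": server,
            "certificate-authority-data": base64.b64encode(ca_pem).decode("ascii")}}],
        "users": [{"name": user, "user": {"token": token}}],
        "contexts": [{"name": context,
                      "context": {"cluster": cluster, "user": user}}],
        "current-context": context,
    }


def write_kubeconfig(cfg: dict, path: str) -> None:
    """Write the config as JSON (a subset of YAML)."""
    with open(path, "w") as f:
        json.dump(cfg, f, indent=2)
```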

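16. Copy def-ns-admin.conf to the physical machine and select it as the kubeconfig file on the Dashboard login page. After logging in, you can operate on resources in the default namespace.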