Advanced Deep Learning: A Complete Dogs vs. Cats Project (Part 1)

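I have been studying deep learning for about five months, and I've noticed that the material available online is polarized. At one end are simple tutorials such as handwritten-digit recognition, or plugging your own data into someone else's network. At the other end are cutting-edge resources such as the public GitHub source code of top-conference papers and paper walkthroughs. The simple material is too simple, the advanced material is hard to follow, and there is no intermediate path. As a result, many people, myself included, cannot progress further once they are past setting up environments and debugging other people's code. I kept looking for an intermediate-level tutorial, and recently I found the book "Practitioner Bundle of Deep Learning for Computer Vision with Python". Its Dogs vs. Cats project is a very good fit. It covers the following: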

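1. Download the dataset. It contains nothing but raw cat and dog images, so we have to preprocess it ourselves by writing a preprocessing script and a dataset-splitting script.

2. Unlike small datasets such as MNIST, this dataset is too large to load into memory all at once. We need to learn how to process data at this scale and how to save the training results.

3. Implement AlexNet in Keras and train it from scratch.

4. Evaluate the network and learn hyperparameter-tuning techniques.

5. Use transfer learning with a ResNet pre-trained on the ImageNet dataset to improve the results enough to reach the top 25 of the leaderboard.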

I will certainly run into plenty of pitfalls. I plan to spend about a week on it.

I. Downloading the Dataset

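I first tried to download it from the official site, but registration was too much hassle. A Baidu search for "dogs vs cats dataset" (猫狗大战数据集) turns up plenty of copies. You only need train.zip. test1.zip is used to produce the submission results.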
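After downloading, it is worth checking that the archive unpacked correctly and that both classes are present. Below is a minimal sketch using only the standard library. It assumes the image file names follow the `cat.0.jpg` / `dog.0.jpg` pattern, which is the same convention the build script later uses to derive labels. The helper name `extract_and_count` is hypothetical.

```python
import os
import zipfile
from collections import Counter


def extract_and_count(zip_path, dest_dir):
    """Extract zip_path into dest_dir and count images per class.

    Hypothetical helper: the class is taken from the file-name prefix,
    e.g. "cat.0.jpg" -> "cat".
    """
    counts = Counter()
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest_dir)
        for name in zf.namelist():
            base = os.path.basename(name)
            # skip directory entries and anything that is not a JPEG
            if not base.lower().endswith(".jpg"):
                continue
            counts[base.split(".")[0]] += 1
    return counts
```

For example, call `extract_and_count("train.zip", "../datasets/kaggle_dogs_vs_cats")` and compare the counts against what you expect. Afterwards, make sure the extracted images end up in the directory that `IMAGES_PATH` points to.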

II. Environment

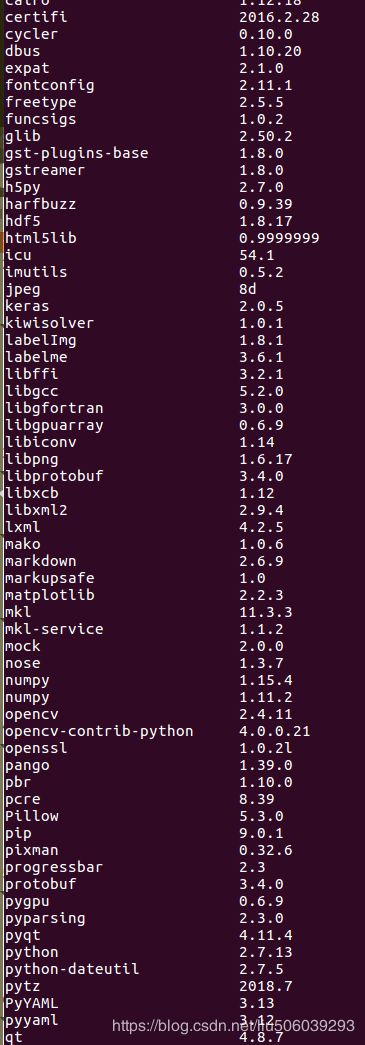

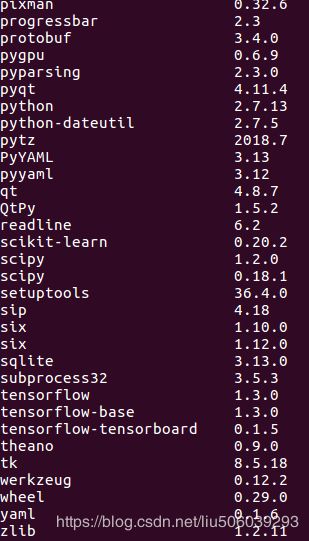

GPU: GTX 1060. Environment: Ubuntu 16.04 + Anaconda + Python 2.7 + tensorflow-gpu. For configuring OpenCV, I followed this blog post: https://blog.csdn.net/liu506039293/article/details/85268412
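Before running the scripts, a quick check that every package they import is actually installed can save some debugging. Below is a minimal sketch. The module list is an assumption based on the imports used in this post. The helper `report_versions` is hypothetical, and it works on both Python 2.7 and 3.

```python
import importlib


def report_versions(modules=("cv2", "h5py", "numpy", "sklearn", "imutils",
                             "progressbar", "tensorflow", "keras")):
    """Return {module_name: version string, or None if it fails to import}."""
    found = {}
    for name in modules:
        try:
            mod = importlib.import_module(name)
        except Exception:
            # catch broadly: some packages (e.g. tensorflow) can fail to
            # import with errors other than ImportError
            found[name] = None
            continue
        # most packages expose __version__; fall back to "unknown"
        found[name] = getattr(mod, "__version__", "unknown")
    return found
```

Calling `report_versions()` on the training machine and printing the result shows at a glance which dependency is missing (its value is `None`).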

III. Converting the Dataset to HDF5

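The image data is large, so keeping it as JPEG files would seriously slow down training. There are two reasons: file I/O is slow, and JPEG is a compressed format, so every image has to be decoded again during training. HDF5 is optimized for I/O and can speed up training considerably, so we first convert the dataset to HDF5.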

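About HDF5: HDF5 (Hierarchical Data Format) is designed for storing large volumes of data. It supports optional compression, which can save a lot of disk space at some cost in read performance. Generally, the higher the compression ratio, the slower the reads. Note that the HDF5DatasetWriter used in this post creates its datasets without compression.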
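To see the compression option and partial reads in practice, here is a small sketch using h5py and NumPy. Both are assumed to be installed, since the writer script in this post also depends on h5py. The helper name `hdf5_roundtrip` is hypothetical.

```python
import h5py
import numpy as np


def hdf5_roundtrip(path, images, compression=None):
    """Write an (N, H, W, C) array to HDF5 and read rows 1..2 back.

    compression=None stores the data uncompressed; compression="gzip"
    trades read speed for disk space.
    """
    with h5py.File(path, "w") as db:
        db.create_dataset("images", data=images, compression=compression)
    with h5py.File(path, "r") as db:
        # slicing reads only the requested rows from disk, not the whole file
        return db["images"][1:3]
```

The slice returned is a regular NumPy array. This ability to read just a few rows is what makes HDF5 usable for a dataset that does not fit in memory.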

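3.1 First, create a Python file to serve as the configuration file. The build script imports it as `config/dogs_vs_cats_config.py`. It stores our file paths and other settings.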

```python
# define the paths to the images directory
IMAGES_PATH = "../datasets/kaggle_dogs_vs_cats/train"

# since we do not have validation data or access to the testing
# labels we need to take a number of images from the training
# data and use them instead
NUM_CLASSES = 2
NUM_VAL_IMAGES = 1250 * NUM_CLASSES
NUM_TEST_IMAGES = 1250 * NUM_CLASSES

# define the path to the output training, validation, and testing
# HDF5 files
TRAIN_HDF5 = "../datasets/kaggle_dogs_vs_cats/hdf5/train.hdf5"
VAL_HDF5 = "../datasets/kaggle_dogs_vs_cats/hdf5/val.hdf5"
TEST_HDF5 = "../datasets/kaggle_dogs_vs_cats/hdf5/test.hdf5"

# path to the output model file
MODEL_PATH = "output/alexnet_dogs_vs_cats.model"

# define the path to the dataset mean
DATASET_MEAN = "output/dogs_vs_cats_mean.json"

# define the path to the output directory used for storing plots,
# classification reports, etc.
OUTPUT_PATH = "output"
```
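The numbers in this config make it easy to estimate both the split sizes and the on-disk size of the HDF5 files. The sketch below makes two assumptions, labeled in the code:

* The Kaggle train.zip holds 25,000 labeled images (12,500 per class).
* Each stored value takes 8 bytes, because HDF5DatasetWriter creates its image dataset with `dtype="float"` (float64).

`split_sizes` and `dataset_bytes` are hypothetical helpers.

```python
NUM_CLASSES = 2
NUM_VAL_IMAGES = 1250 * NUM_CLASSES
NUM_TEST_IMAGES = 1250 * NUM_CLASSES
TOTAL_IMAGES = 25000  # assumed: number of images in the Kaggle train.zip


def split_sizes(total, num_val, num_test):
    """Return (train, val, test) image counts after both splits."""
    return total - num_test - num_val, num_val, num_test


def dataset_bytes(num_images, height=256, width=256, channels=3, itemsize=8):
    """Bytes needed for num_images images; itemsize=8 for float64."""
    return num_images * height * width * channels * itemsize


train, val, test = split_sizes(TOTAL_IMAGES, NUM_VAL_IMAGES, NUM_TEST_IMAGES)
print(train, val, test)  # 20000 2500 2500
print(round(dataset_bytes(train) / 1024.0 ** 3, 1))  # 29.3 (GiB as float64)
print(round(dataset_bytes(train, itemsize=1) / 1024.0 ** 3, 1))  # 3.7 (GiB as uint8)
```

At roughly 29 GiB for the training file alone, check that the target disk has enough room. Storing uint8 would need about one eighth of that space. However, changing the dtype would also affect any code that reads the file back.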

3.2 Conversion Scripts

Three scripts are used. Adjust the paths to match your own project layout. The code is thoroughly commented.

build_dogs_vs_cats.py

```python
# USAGE
# python build_dogs_vs_cats.py

# import the necessary packages
from config import dogs_vs_cats_config as config
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import train_test_split
from pyimagesearch.preprocessing import AspectAwarePreprocessor
# HDF5DatasetWriter buffers images and labels and writes them to an HDF5 file
from pyimagesearch.io import HDF5DatasetWriter
from imutils import paths
import numpy as np
import progressbar
import json
import cv2
import os

# grab the paths to the images
trainPaths = list(paths.list_images(config.IMAGES_PATH))

# file names look like "cat.0.jpg" / "dog.0.jpg", so the class name
# is the part of the file name before the first "."
trainLabels = [p.split(os.path.sep)[-1].split(".")[0]
    for p in trainPaths]

# encode the string labels as integers (cat -> 0, dog -> 1);
# LabelEncoder produces integer labels, not one-hot vectors
le = LabelEncoder()
trainLabels = le.fit_transform(trainLabels)

# perform stratified sampling from the training set to build the
# testing split from the training data
split = train_test_split(trainPaths, trainLabels,
    test_size=config.NUM_TEST_IMAGES, stratify=trainLabels,
    random_state=42)
(trainPaths, testPaths, trainLabels, testLabels) = split

# perform another stratified sampling, this time to build the
# validation data
split = train_test_split(trainPaths, trainLabels,
    test_size=config.NUM_VAL_IMAGES, stratify=trainLabels,
    random_state=42)
(trainPaths, valPaths, trainLabels, valLabels) = split

# construct a list pairing the training, validation, and testing
# image paths along with their corresponding labels and output HDF5
# files
datasets = [
    ("train", trainPaths, trainLabels, config.TRAIN_HDF5),
    ("val", valPaths, valLabels, config.VAL_HDF5),
    ("test", testPaths, testLabels, config.TEST_HDF5)]

# initialize the image preprocessor (resize to 256x256 while keeping
# the aspect ratio) and the lists of RGB channel averages
aap = AspectAwarePreprocessor(256, 256)
(R, G, B) = ([], [], [])

# loop over the dataset tuples; the loop variable is named imagePaths
# so it does not shadow the imported imutils `paths` module
for (dType, imagePaths, labels, outputPath) in datasets:
    # create HDF5 writer (the hdf5/ output directory must already exist)
    print("[INFO] building {}...".format(outputPath))
    writer = HDF5DatasetWriter((len(imagePaths), 256, 256, 3), outputPath)

    # initialize the progress bar
    widgets = ["Building Dataset: ", progressbar.Percentage(), " ",
        progressbar.Bar(), " ", progressbar.ETA()]
    pbar = progressbar.ProgressBar(maxval=len(imagePaths),
        widgets=widgets).start()

    # loop over the image paths
    for (i, (path, label)) in enumerate(zip(imagePaths, labels)):
        # load the image and process it
        image = cv2.imread(path)
        image = aap.preprocess(image)

        # if we are building the training dataset, compute the mean of
        # each channel in the image and update the respective lists.
        # Note that we compute the training set's mean RGB values here
        # because they are needed later in training. OpenCV stores
        # images in BGR order, hence (b, g, r).
        if dType == "train":
            (b, g, r) = cv2.mean(image)[:3]
            R.append(r)
            G.append(g)
            B.append(b)

        # add the image and label to the HDF5 dataset
        writer.add([image], [label])
        pbar.update(i)

    # close the HDF5 writer
    pbar.finish()
    writer.close()

# construct a dictionary of averages, then serialize the means to a
# JSON file
print("[INFO] serializing means...")
D = {"R": np.mean(R), "G": np.mean(G), "B": np.mean(B)}
with open(config.DATASET_MEAN, "w") as f:
    f.write(json.dumps(D))
```
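The script computes the per-channel means because they are needed later in training. A common use is mean subtraction: subtract the stored training-set mean from every input image. Below is a minimal sketch that assumes NumPy and the JSON layout written above. `load_means` and `subtract_mean` are hypothetical names, not code from the book.

```python
import json

import numpy as np


def load_means(json_path):
    """Read the {"R": ..., "G": ..., "B": ...} dict written by the build script."""
    with open(json_path) as f:
        return json.load(f)


def subtract_mean(image, means):
    """Subtract per-channel means from a BGR image (OpenCV channel order).

    Casts to float32 first so uint8 pixels do not wrap around when the
    result goes negative.
    """
    image = image.astype("float32")
    # channel 0 is B, 1 is G, 2 is R in OpenCV's BGR layout
    offsets = np.array([means["B"], means["G"], means["R"]], dtype="float32")
    return image - offsets  # broadcasts over the last (channel) axis
```

The cast before subtraction matters. Subtracting 30 from a uint8 pixel value of 10 would wrap around to 236 instead of giving -20.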

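AspectAwarePreprocessor: this script resizes images to 256×256 while preserving the aspect ratio. It first resizes along the shorter side, then center-crops the longer side.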

```python
# import the necessary packages
import imutils  # convenience wrappers around common OpenCV operations
import cv2

class AspectAwarePreprocessor:
    def __init__(self, width, height, inter=cv2.INTER_AREA):
        # store the target image width, height, and interpolation
        # method used when resizing
        self.width = width
        self.height = height
        self.inter = inter

    def preprocess(self, image):
        # grab the dimensions of the image and then initialize
        # the deltas to use when cropping
        (h, w) = image.shape[:2]
        dW = 0
        dH = 0

        # if the width is smaller than the height, then resize
        # along the width (i.e., the smaller dimension) and then
        # update the deltas to crop the height to the desired
        # dimension
        if w < h:
            image = imutils.resize(image, width=self.width,
                inter=self.inter)
            dH = int((image.shape[0] - self.height) / 2.0)

        # otherwise, the height is smaller than the width so
        # resize along the height and then update the deltas
        # to crop along the width
        else:
            image = imutils.resize(image, height=self.height,
                inter=self.inter)
            dW = int((image.shape[1] - self.width) / 2.0)

        # now that our images have been resized, we need to
        # re-grab the width and height, followed by performing
        # the crop
        (h, w) = image.shape[:2]
        image = image[dH:h - dH, dW:w - dW]

        # finally, resize the image to the provided spatial
        # dimensions to ensure our output image is always a fixed
        # size
        return cv2.resize(image, (self.width, self.height),
            interpolation=self.inter)
```
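The final `cv2.resize` is not redundant. When the resized side exceeds the target by an odd number of pixels, the symmetric crop leaves one extra pixel. The sketch below replays the size arithmetic of `preprocess` without touching any pixels, so the effect is easy to see. `aspect_aware_plan` is a hypothetical helper. It reproduces how `imutils.resize` truncates the computed side with `int()`.

```python
def aspect_aware_plan(w, h, width=256, height=256):
    """Replay the size arithmetic of AspectAwarePreprocessor.preprocess.

    Returns (resized_w, resized_h, crop_w, crop_h), i.e. the shape after
    the aspect-preserving resize and after the center crop, before the
    final cv2.resize.
    """
    if w < h:
        # resize along the width; the height scales by the same ratio
        r = width / float(w)
        new_w, new_h = width, int(h * r)
        dW, dH = 0, int((new_h - height) / 2.0)
    else:
        # resize along the height; the width scales by the same ratio
        r = height / float(h)
        new_w, new_h = int(w * r), height
        dW, dH = int((new_w - width) / 2.0), 0
    # the crop removes dW (or dH) pixels from both sides
    return new_w, new_h, new_w - 2 * dW, new_h - 2 * dH
```

For a 500×375 (width × height) photo, `aspect_aware_plan(500, 375)` returns `(341, 256, 257, 256)`. The crop is 257 pixels wide, and only the last resize brings it to exactly 256×256.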

hdf5datasetwriter.py: this script writes images and labels to an HDF5 file, buffering them and flushing to disk in batches.

```python
# import the necessary packages
import h5py
import os

class HDF5DatasetWriter:
    def __init__(self, dims, outputPath, dataKey="images",
        bufSize=1000):
        # check to see if the output path exists, and if so, raise
        # an exception
        if os.path.exists(outputPath):
            raise ValueError("The supplied `outputPath` already "
                "exists and cannot be overwritten. Manually delete "
                "the file before continuing.", outputPath)

        # open the HDF5 database for writing and create two datasets:
        # one to store the images/features and another to store the
        # class labels. Note that dtype="float" means float64 (8 bytes
        # per value) and that no compression is applied.
        self.db = h5py.File(outputPath, "w")
        self.data = self.db.create_dataset(dataKey, dims,
            dtype="float")
        self.labels = self.db.create_dataset("labels", (dims[0],),
            dtype="int")

        # store the buffer size, then initialize the buffer itself
        # along with the index into the datasets
        self.bufSize = bufSize
        self.buffer = {"data": [], "labels": []}
        self.idx = 0

    def add(self, rows, labels):
        # add the rows and labels to the buffer
        self.buffer["data"].extend(rows)
        self.buffer["labels"].extend(labels)

        # check to see if the buffer needs to be flushed to disk
        if len(self.buffer["data"]) >= self.bufSize:
            self.flush()

    def flush(self):
        # write the buffers to disk then reset the buffer
        i = self.idx + len(self.buffer["data"])
        self.data[self.idx:i] = self.buffer["data"]
        self.labels[self.idx:i] = self.buffer["labels"]
        self.idx = i
        self.buffer = {"data": [], "labels": []}

    def storeClassLabels(self, classLabels):
        # create a dataset to store the actual class label names,
        # then store the class labels
        dt = h5py.special_dtype(vlen=str)  # `vlen=unicode` for Py2.7
        labelSet = self.db.create_dataset("label_names",
            (len(classLabels),), dtype=dt)
        labelSet[:] = classLabels

    def close(self):
        # check to see if there are any other entries in the buffer
        # that need to be flushed to disk
        if len(self.buffer["data"]) > 0:
            self.flush()

        # close the dataset
        self.db.close()
```
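Writing the data is only half of the job. Since train.hdf5 is too large to load into memory, training code has to read it back in batches. Below is a minimal sketch of such a reader. It assumes the dataset keys used by HDF5DatasetWriter ("images" and "labels"). `iterate_batches` is a hypothetical helper, not code from the book.

```python
import h5py


def iterate_batches(db_path, batch_size, data_key="images"):
    """Yield (images, labels) batches from an HDF5 file written by
    HDF5DatasetWriter, reading only batch_size rows at a time."""
    with h5py.File(db_path, "r") as db:
        num_images = db["labels"].shape[0]
        for start in range(0, num_images, batch_size):
            end = min(start + batch_size, num_images)
            # slicing an h5py dataset returns a NumPy array for just these rows
            yield db[data_key][start:end], db["labels"][start:end]
```

Memory use is bounded by one batch rather than the whole file. The last batch is simply smaller when the image count is not a multiple of `batch_size`.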