【Federated Learning】Setting Up and Using KubeFATE

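Because KubeFATE packages FATE in containers, it has the following advantages over a traditional installation and deployment:

- Easy to use: no more trouble with missing dependency packages.

- Easy to configure: a single configuration file can deploy several clusters.

- Suited to cloud environments.

- Flexible to manage: clusters can be scaled up or down on demand.

KubeFATE currently supports two ways of deploying and managing FATE clusters, Docker-Compose and Kubernetes, aimed at development/testing and at production respectively.

The KubeFATE workflow has two main parts:

1). Generate the startup files for the FATE cluster from the user-defined configuration file.

2). Copy the startup files to the specified machines and start the containers with the docker-compose command.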

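1. Installing Ubuntu

- Install Ubuntu 18.04 in VMware Workstation. The image downloads slowly, so a download manager such as IDM is recommended.

- Install VMware Tools for hardware acceleration and for copying data between the host and the guest.

- Switch the apt mirror and update the software: sudo gedit /etc/apt/sources.list

- Installing SSH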

apt-get install openssh-server

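# After installation, check that the ssh service is running

ps -e | grep ssh

# Start

/etc/init.d/ssh start

# Restart

/etc/init.d/ssh restart

# or

service ssh restart

# Stop

/etc/init.d/ssh stop

# or

service ssh stop

# Log in to a host

ssh username@hostIp

# Different users on the same host also need to exchange keys with each other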

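# 1. Open login with password authentication

vim /etc/ssh/sshd_config

# This opens the sshd configuration file; change the following entries:

PermitRootLogin yes

PasswordAuthentication yes

ChallengeResponseAuthentication yes

# Make sure these lines are uncommented and set to yes, then restart ssh so the change takes effect

# 2. Passwordless login with an RSA key (mind which user you run these commands as)

# To give root a password, run sudo passwd, then enter and confirm the new password; it becomes the root password. After that, run su root and enter the new password.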

ssh-keygen -t rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# Then set the permissions

chmod 600 ~/.ssh/authorized_keys

# Copy the public key to the other host

ssh-copy-id -i ~/.ssh/id_rsa.pub username@hostIp

2. Installing Docker

$ sudo apt-get update

$ sudo apt-get install \

apt-transport-https \

ca-certificates \

curl \

gnupg-agent \

software-properties-common

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

$ sudo add-apt-repository \

"deb [arch=amd64] https://download.docker.com/linux/ubuntu \

$(lsb_release -cs) \

stable"

# install the latest stable version

$ sudo apt-get update

$ sudo apt-get install docker-ce docker-ce-cli containerd.io

# For more details, see https://docs.docker.com/engine/install/ubuntu/

3. Installing Docker Compose

# download the current stable release of Docker Compose:

$ sudo curl -L "https://github.com/docker/compose/releases/download/1.25.4/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

#Apply executable permissions to the binary:

$ sudo chmod +x /usr/local/bin/docker-compose

# verify install

$ docker-compose --version

docker-compose version 1.25.4, build 1110ad01

# Alternative download from a mirror in China (note that this URL fetches 1.25.5 rather than 1.25.4)

sudo curl -L "https://get.daocloud.io/docker/compose/releases/download/1.25.5/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

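【Option 1】Deploying with docker-compose

Deploy one FATE cluster that contains all the components described in the "FATE overall architecture". The deployment architecture is shown below:

Single-machine experiment (local IP: 192.168.30.120)

# Download the image bundle from the mirror in China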

$ wget https://webank-ai-1251170195.cos.ap-guangzhou.myqcloud.com/fate_1.3.0-images.tar.gz

# Load the local image bundle into Docker

$ docker load -i fate_1.3.0-images.tar.gz

# List the images

$ docker images

REPOSITORY TAG

federatedai/egg 1.3.0-release

federatedai/fateboard 1.3.0-release

federatedai/meta-service 1.3.0-release

federatedai/python 1.3.0-release

federatedai/roll 1.3.0-release

federatedai/proxy 1.3.0-release

federatedai/federation 1.3.0-release

federatedai/serving-server 1.2.2-release

federatedai/serving-proxy 1.2.2-release

redis 5

mysql 8

# On the deployment machine, download kubefate-docker-compose.tar.gz

$ wget https://github.com/FederatedAI/KubeFATE/releases/download/v1.3.0-a/kubefate-docker-compose.tar.gz

$ tar -zxvf kubefate-docker-compose.tar.gz

# Go to the docker-deploy directory

cd docker-deploy

# Edit parties.conf as follows:

user=root

dir=/data/projects/fate

partylist=(10000)

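partyiplist=(192.168.30.120) # replace with the IP of the target machine

servingiplist=(192.168.30.120) # replace with the IP of the target machine

exchangeip=

$ bash generate_config.sh # generate the deployment files

$ bash docker_deploy.sh all

# Or deploy only one part: bash docker_deploy.sh all --training, or bash docker_deploy.sh all --serving

# Meaning of each field in parties.conf, shown with a two-party example:

user=root # user that runs the FATE containers

dir=/data/projects/fate # docker-compose deployment directory

partylist=(10000 9999) # party (organization) ids

partyiplist=(192.168.7.1 192.168.7.2) # IP of the training cluster for each id

servingiplist=(192.168.7.1 192.168.7.2) # IP of the online inference (serving) cluster for each id

exchangeip= # address of the exchange (communication) component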
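
The fields above pair up by position: the n-th id in partylist goes with the n-th IP in partyiplist and servingiplist. As a rough sanity check before running generate_config.sh, the file can be parsed and the list lengths compared. The sketch below is only an illustration; the helper names parse_parties_conf and check_parties are hypothetical and not part of KubeFATE, and the parser only handles the simple key=value and key=(a b) forms shown here.

```python
def parse_parties_conf(text):
    """Parse the bash-style assignments used in parties.conf into a dict."""
    conf = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        value = value.strip()
        if value.startswith("(") and value.endswith(")"):
            conf[key.strip()] = value[1:-1].split()  # bash array -> list
        else:
            conf[key.strip()] = value
    return conf


def check_parties(conf):
    """Return a list of problems; an empty list means the lists line up."""
    problems = []
    expected = len(conf.get("partylist", []))
    for key in ("partyiplist", "servingiplist"):
        actual = len(conf.get(key, []))
        if actual != expected:
            problems.append(f"{key} has {actual} entries, expected {expected}")
    return problems


SAMPLE = """\
user=root
dir=/data/projects/fate
partylist=(10000 9999)
partyiplist=(192.168.7.1 192.168.7.2)
servingiplist=(192.168.7.1 192.168.7.2)
exchangeip=
"""

conf = parse_parties_conf(SAMPLE)
print(conf["partylist"], check_parties(conf))
```

An empty problem list only says the lists have matching lengths; it does not check that the IPs are reachable.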

- Verify the cluster status on the target machine:

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f8ae11a882ba fatetest/fateboard:1.3.0-release "/bin/sh -c 'cd /dat…" 5 days ago Up 5 days 0.0.0.0:8080->8080/tcp confs-10000_fateboard_1

d72995355962 fatetest/python:1.3.0-release "/bin/bash -c 'sourc…" 5 days ago Up 5 days 9360/tcp, 9380/tcp confs-10000_python_1

dffc70fc68ac fatetest/egg:1.3.0-release "/bin/sh -c 'cd /dat…" 7 days ago Up 7 days 7778/tcp, 7888/tcp, 50001-50004/tcp confs-10000_egg_1

dc23d75692b0 fatetest/roll:1.3.0-release "/bin/sh -c 'cd roll…" 7 days ago Up 7 days 8011/tcp confs-10000_roll_1

7e52b1b06d1a fatetest/meta-service:1.3.0-release "/bin/sh -c 'java -c…" 7 days ago Up 7 days 8590/tcp confs-10000_meta-service_1

50a6323f5cb8 fatetest/proxy:1.3.0-release "/bin/sh -c 'cd /dat…" 7 days ago Up 7 days 0.0.0.0:9370->9370/tcp confs-10000_proxy_1

4526f8e57004 redis:5 "docker-entrypoint.s…" 7 days ago Up 7 days 6379/tcp confs-10000_redis_1

586f3f2fe191 fatetest/federation:1.3.0-release "/bin/sh -c 'cd /dat…" 7 days ago Up 7 days 9394/tcp confs-10000_federation_1

ec434dcbbff1 mysql:8 "docker-entrypoint.s…" 7 days ago Up 7 days 3306/tcp, 33060/tcp confs-10000_mysql_1

68b1d6c68b6c federatedai/serving-proxy:1.2.2-release "/bin/sh -c 'java -D…" 32 hours ago Up 32 hours 0.0.0.0:8059->8059/tcp, 0.0.0.0:8869->8869/tcp, 8879/tcp serving-10000_serving-proxy_1

7937ecf2974e redis:5 "docker-entrypoint.s…" 32 hours ago Up 32 hours 6379/tcp serving-10000_redis_1

00a8d98021a6 federatedai/serving-server:1.2.2-release "/bin/sh -c 'java -c…" 32 hours ago Up 32 hours 0.0.0.0:8000->8000/tcp serving-10000_serving-server_1- 在目标机上验证集群是否正确安装

#在192.168.30.120上执行下列命令

$ docker exec -it confs-10000_python_1 bash #进入python组件容器内部

$ cd /data/projects/fate/python/examples/toy_example #toy_example目录

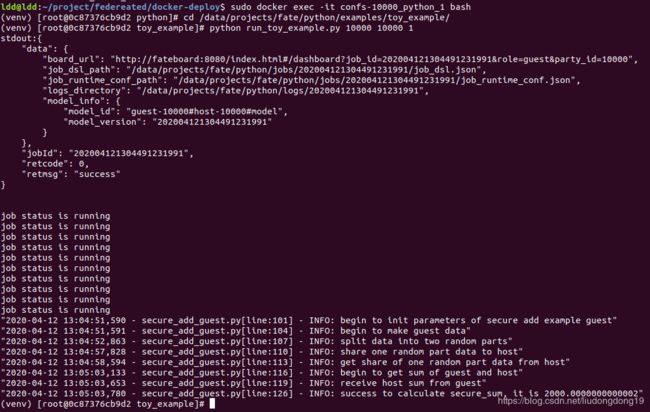

$ python run_toy_example.py 10000 10000 1 #验证- 如果测试通过,屏幕将显示类似如下消息:

- 根据jobid 查询状态 python fate_flow_client.py -f query_task -j 202004121304491231991
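
The query_task output is JSON, so the status check can be scripted instead of read by eye. The sketch below is a minimal illustration: it assumes only that each task's status appears somewhere in the response under the key "f_status", and it searches the whole structure for that key rather than relying on a particular layout. The SAMPLE response is a hypothetical, heavily trimmed stand-in, not real FATE output.

```python
import json


def collect_status(node, key="f_status"):
    """Recursively collect every value stored under `key` in parsed JSON."""
    found = []
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                found.append(v)
            else:
                found.extend(collect_status(v, key))
    elif isinstance(node, list):
        for item in node:
            found.extend(collect_status(item, key))
    return found


def all_succeeded(output_text):
    """True only if at least one status was found and all are 'success'."""
    statuses = collect_status(json.loads(output_text))
    return bool(statuses) and all(s == "success" for s in statuses)


# Hypothetical trimmed response, used only to exercise the helper.
SAMPLE = '{"data": [{"f_status": "success"}, {"f_status": "running"}], "retcode": 0}'
print(all_succeeded(SAMPLE))
```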

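# Removing the deployment

# 1. On the deployment machine, the following command stops all FATE clusters:

bash docker_deploy.sh --delete all

# 2. To remove FATE completely from the machines it runs on, log in to each node and run:

$ cd /data/projects/fate/confs-<id>/ # <id> is the party id, 10000 or 9999 in this example

$ docker-compose down

$ rm -rf ../confs-<id>/ # delete the docker-compose deployment files

Multi-machine experiment: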

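How FATE clusters are networked: a federated learning training job needs several participants. As shown in Figure 1, each party node is one participant, and each party node has its own FATE cluster. Party nodes can discover each other in two ways: point-to-point or star. By default, multi-party clusters deployed with KubeFATE are networked point-to-point, but KubeFATE can also deploy a separate Exchange service to support a star topology.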

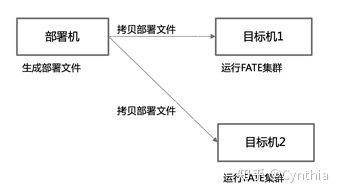
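
To make the difference between the two topologies concrete, the sketch below lists the connections each one needs. It is purely illustrative: the function names and the hub label "exchange" are assumptions made for the example and do not correspond to any KubeFATE API. In point-to-point mode every pair of parties needs a direct route; in star mode each party only needs a route to the Exchange.

```python
from itertools import combinations


def point_to_point_links(party_ids):
    """Every pair of parties is connected directly."""
    return list(combinations(sorted(party_ids), 2))


def star_links(party_ids, hub="exchange"):
    """Every party is connected only to the central hub."""
    return [(hub, party) for party in sorted(party_ids)]


parties = [10000, 9999, 8888]  # 8888 is a made-up third party for illustration
print(point_to_point_links(parties))
print(star_links(parties))
```

With n parties, point-to-point needs n(n-1)/2 links while star needs n, which is why a separate Exchange becomes more attractive as the number of parties grows.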

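Using KubeFATE has two parts: the first generates the startup file for the FATE cluster (docker-compose.yaml), and the second starts the FATE cluster with docker-compose. Logically, the machines that perform these two parts are called the deployment machine and the target machine, respectively.

Two hosts are needed (physical or virtual machines running Ubuntu or CentOS 7, with root login allowed), referred to here as workspace1 and workspace2. workspace1 serves as both the deployment machine and a target machine, while workspace2 is a target machine only; each machine runs one FATE instance. Here the two hosts have the IPs 192.168.7.1 and 192.168.7.2; adjust them to your environment. The deployment architecture is shown in Figure 2.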

1. On workspace1, as the root user, modify the configuration file

Go to the docker-deploy directory

# cd docker-deploy/

Edit parties.conf as follows

# vi parties.conf

user=root

dir=/data/projects/fate

partylist=(10000 9999)

partyiplist=(192.168.7.1 192.168.7.2)

servingiplist=(192.168.7.1 192.168.7.2)

exchangeip=

$ bash generate_config.sh

$ bash docker_deploy.sh all

$ docker exec -it confs-10000_python_1 bash

$ cd /data/projects/fate/python/examples/toy_example

$ python run_toy_example.py 10000 9999 1

2. Verifying Serving inference

The following steps are performed on workspace1.

Enter the python container

# docker exec -it confs-10000_python_1 bash

Enter the fate_flow directory

# cd fate_flow

Edit examples/upload_host.json

# vi examples/upload_host.json

{

"file": "examples/data/breast_a.csv",

"head": 1,

"partition": 10,

"work_mode": 1,

"namespace": "fate_flow_test_breast",

"table_name": "breast"

}

Upload "breast_a.csv" to the system

# python fate_flow_client.py -f upload -c examples/upload_host.json

The following steps are performed on workspace2.

Enter the python container

# docker exec -it confs-9999_python_1 bash

Enter the fate_flow directory

# cd fate_flow

Edit examples/upload_guest.json

# vi examples/upload_guest.json

{

"file": "examples/data/breast_b.csv",

"head": 1,

"partition": 10,

"work_mode": 1,

"namespace": "fate_flow_test_breast",

"table_name": "breast"

}

Upload "breast_b.csv" to the system

# python fate_flow_client.py -f upload -c examples/upload_guest.json
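
The two upload files differ only in the "file" entry; the namespace and table name are deliberately the same on both sides, because the job configuration refers to them by those names. A small generator makes that explicit. This is a sketch only: upload_conf is a hypothetical helper, and the files it describes would still have to be written to examples/ inside each party's python container.

```python
import json


def upload_conf(csv_name, work_mode=1):
    """Build an upload config like upload_host.json / upload_guest.json."""
    return {
        "file": f"examples/data/{csv_name}",
        "head": 1,
        "partition": 10,
        "work_mode": work_mode,  # 1 is the value used throughout this walkthrough
        "namespace": "fate_flow_test_breast",
        "table_name": "breast",
    }


host_conf = upload_conf("breast_a.csv")    # used on workspace1 (party 10000)
guest_conf = upload_conf("breast_b.csv")   # used on workspace2 (party 9999)
print(json.dumps(guest_conf, indent=2))
```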

Edit examples/test_hetero_lr_job_conf.json

# vi examples/test_hetero_lr_job_conf.json

{

"initiator": {

"role": "guest",

"party_id": 9999

},

"job_parameters": {

"work_mode": 1

},

"role": {

"guest": [9999],

"host": [10000],

"arbiter": [10000]

},

"role_parameters": {

"guest": {

"args": {

"data": {

"train_data": [{"name": "breast", "namespace": "fate_flow_test_breast"}]

}

},

"dataio_0":{

"with_label": [true],

"label_name": ["y"],

"label_type": ["int"],

"output_format": ["dense"]

}

},

"host": {

"args": {

"data": {

"train_data": [{"name": "breast", "namespace": "fate_flow_test_breast"}]

}

},

"dataio_0":{

"with_label": [false],

"output_format": ["dense"]

}

}

},

....

}

Submit the job to train on the uploaded datasets

$ python fate_flow_client.py -f submit_job -d examples/test_hetero_lr_job_dsl.json -c exam

Output:

{

"data": {

"board_url": "http://fateboard:8080/index.html#/dashboard?job_id=202003060553168191842&role=guest&party_id=9999",

"job_dsl_path": "/data/projects/fate/python/jobs/202003060553168191842/job_dsl.json",

"job_runtime_conf_path": "/data/projects/fate/python/jobs/202003060553168191842/job_runtime_conf.json",

"logs_directory": "/data/projects/fate/python/logs/202003060553168191842",

"model_info": {

"model_id": "arbiter-10000#guest-9999#host-10000#model",

"model_version": "202003060553168191842"

}

},

"jobId": "202003060553168191842",

"retcode": 0,

"retmsg": "success"

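}

The trained model is stored on the EGG node and can be located with the "model_id" and "model_version" from the output above. Both the load and the bind operations of FATE Serving require the user to supply these two values.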
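
Since the later steps need "jobId", "model_id" and "model_version", it can help to pull them out of the submit_job output programmatically rather than copying them by hand. The sketch below parses a response with the fields shown above; model_info is a hypothetical helper name, and SAMPLE is a shortened copy of that output.

```python
import json


def model_info(submit_output):
    """Return (job_id, model_id, model_version) from submit_job output."""
    reply = json.loads(submit_output)
    if reply.get("retcode") != 0:
        raise RuntimeError(f"submit_job failed: {reply.get('retmsg')}")
    info = reply["data"]["model_info"]
    return reply["jobId"], info["model_id"], info["model_version"]


# Shortened copy of the output shown above.
SAMPLE = """
{
  "data": {
    "model_info": {
      "model_id": "arbiter-10000#guest-9999#host-10000#model",
      "model_version": "202003060553168191842"
    }
  },
  "jobId": "202003060553168191842",
  "retcode": 0,
  "retmsg": "success"
}
"""

job_id, model_id, model_version = model_info(SAMPLE)
print(job_id, model_id, model_version)
```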

Check the job status until "f_status" is success, placing the "jobId" from the previous output after "-j".

# python fate_flow_client.py -f query_task -j 202003060553168191842 | grep f_status

Output:

"f_status": "success",

"f_status": "success",

Edit the model-loading configuration: replace the "model_id" and "model_version" in the file with the values from the submit_job output above.

# vi examples/publish_load_model.json

{

"initiator": {

"party_id": "9999",

"role": "guest"

},

"role": {

"guest": ["9999"],

"host": ["10000"],

"arbiter": ["10000"]

},

"job_parameters": {

"work_mode": 1,

"model_id": "arbiter-10000#guest-9999#host-10000#model",

"model_version": "202003060553168191842"

}

}
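
The load configuration above and the bind configuration used in the next step share the same initiator, role and job_parameters blocks; the bind file only adds a "service_id". Under that reading, both can be produced from one function. This is a sketch with a hypothetical helper name (model_conf); the party ids are the ones used in this walkthrough.

```python
import json


def model_conf(model_id, model_version, service_id=None):
    """Build the load config, or the bind config when service_id is given."""
    conf = {
        "initiator": {"party_id": "9999", "role": "guest"},
        "role": {"guest": ["9999"], "host": ["10000"], "arbiter": ["10000"]},
        "job_parameters": {
            "work_mode": 1,
            "model_id": model_id,
            "model_version": model_version,
        },
    }
    if service_id is not None:
        conf = {"service_id": service_id, **conf}
    return conf


MODEL_ID = "arbiter-10000#guest-9999#host-10000#model"
MODEL_VERSION = "202003060553168191842"

load_conf = model_conf(MODEL_ID, MODEL_VERSION)
bind_conf = model_conf(MODEL_ID, MODEL_VERSION, service_id="test")
print(json.dumps(bind_conf, indent=2))
```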

Load the model

# python fate_flow_client.py -f load -c examples/publish_load_model.json

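Edit the model-binding configuration: replace "model_id" and "model_version", and set "service_id" to "test". The "service_id" identifies an inference service and is associated with one model. Requests sent to FATE Serving must carry the "service_id" so that FATE Serving knows which model to use for the inference request.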

# vi examples/bind_model_service.json

{

"service_id": "test",

"initiator": {

"party_id": "9999",

"role": "guest"

},

"role": {

"guest": ["9999"],

"host": ["10000"],

"arbiter": ["10000"]

},

"job_parameters": {

"work_mode": 1,

"model_id": "arbiter-10000#guest-9999#host-10000#model",

"model_version": "202003060553168191842"

}

}

Bind the model

# python fate_flow_client.py -f bind -c examples/bind_model_service.json

Online test: send the following request with curl

curl -X POST -H 'Content-Type: application/json' -d '{"head":{"serviceId":"test"},"body":{"featureData": {"x0": 0.254879,"x1": -1.046633,"x2": 0.209656,"x3": 0.074214,"x4": -0.441366,"x5": -0.377645,"x6": -0.485934,"x7": 0.347072,"x8": -0.287570,"x9": -0.733474}}}' 'http://192.168.7.2:8059/federation/v1/inference'

Output:

{"flag":0,"data":{"prob":0.30684422824464636,"retmsg":"success","retcode":0}

If the output looks like the above, the serving service is working correctly. The result indicates that a person with these features has a probability of about 31% of a positive diagnosis.
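
The same request can be built from Python with only the standard library, which avoids quoting problems in the shell. The sketch below constructs the request object and prints its method and URL; actually sending it requires a running serving-proxy, so that part is left commented out. build_request is a hypothetical helper name; the URL, service id and feature values are the ones used above.

```python
import json
import urllib.request


def build_request(url, service_id, features):
    """Build the POST request for the inference endpoint."""
    payload = {"head": {"serviceId": service_id}, "body": {"featureData": features}}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


FEATURES = {
    "x0": 0.254879, "x1": -1.046633, "x2": 0.209656, "x3": 0.074214,
    "x4": -0.441366, "x5": -0.377645, "x6": -0.485934, "x7": 0.347072,
    "x8": -0.287570, "x9": -0.733474,
}

req = build_request("http://192.168.7.2:8059/federation/v1/inference", "test", FEATURES)
print(req.get_method(), req.full_url)

# To send it against a live deployment:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(json.load(resp))
```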

【Install Minikube for Kubernetes and deploy the KubeFATE service on it】


1. kubectl

$ curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.17.3/bin/linux/amd64/kubectl

$ chmod +x ./kubectl

$ sudo mv ./kubectl /usr/bin

# Check that the installation succeeded

$ kubectl version

# Output:

Client Version: version.Info{Major:"1", Minor:"17", GitVersion:"v1.17.3", GitCommit:"06ad960bfd03b39c8310aaf92d1e7c12ce618213", GitTreeState:"clean", BuildDate:"2020-02-11T18:14:22Z", GoVersion:"go1.13.6", Compiler:"gc", Platform:"linux/amd64"}

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# The refused connection is expected at this point, because no Kubernetes cluster is running yet.

2. Minikube

curl -LO https://github.com/kubernetes/minikube/releases/download/v1.7.3/minikube-linux-amd64 && mv minikube-linux-amd64 minikube && chmod +x minikube && sudo mv ./minikube /usr/bin

# Verify

$ minikube version

minikube version: v1.7.3

commit: 436667c819c324e35d7e839f8116b968a2d0a3ff

3. KubeFATE

# Download the KubeFATE release

$ curl -LO https://github.com/FederatedAI/KubeFATE/releases/download/v1.3.0-a/kubefate-k8s-v1.3.0-a.tar.gz

$ tar -xzf ./kubefate-k8s-v1.3.0-a.tar.gz

# Verify

$ ls

# Output: cluster.yaml config.yaml kubefate kubefate-k8s-v1.3.0-a.tar.gz kubefate.yaml rbac-config.yaml

# Move the kubefate executable to a directory on the PATH

chmod +x ./kubefate && sudo mv ./kubefate /usr/bin

# Verify (the connection error is expected until the KubeFATE service is deployed in step 5)

$ kubefate version

* kubefate service connection error, Get http://kubefate.net/v1/version: dial tcp: lookup kubefate.net: no such host

* kubefate commandLine version=v1.0.2

4. Install Kubernetes with Minikube

$ sudo minikube start --vm-driver=none

$ sudo minikube status

host: Running

kubelet: Running

apiserver: Running

kubeconfig: Configured

$ sudo minikube addons enable ingress

5. Deploy the KubeFATE service

# Create the kube-fate namespace and the account for the KubeFATE service

$ kubectl apply -f ./rbac-config.yaml

# Deploy the KubeFATE service to the kube-fate namespace

$ kubectl apply -f ./kubefate.yaml

# Verify that KubeFATE has been deployed successfully

$ kubectl get all,ingress -n kube-fate

NAME READY STATUS RESTARTS AGE

pod/kubefate-6d576d6c88-mz6ds 1/1 Running 0 16s

pod/mongo-56684d6c86-4ff5m 1/1 Running 0 16s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubefate ClusterIP 10.111.165.189 <none> 8080/TCP 16s

service/mongo ClusterIP 10.98.194.57 <none> 27017/TCP 16s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/kubefate 1/1 1 1 16s

deployment.apps/mongo 1/1 1 1 16s

NAME DESIRED CURRENT READY AGE

replicaset.apps/kubefate-6d576d6c88 1 1 1 16s

replicaset.apps/mongo-56684d6c86 1 1 1 16s

NAME HOSTS ADDRESS PORTS AGE

ingress.extensions/kubefate kubefate.net 10.160.112.145 80 16s

# Add kubefate.net to the hosts file, using the ingress ADDRESS shown above

$ sudo -- sh -c "echo \"10.160.112.145 kubefate.net\" >> /etc/hosts"

# verify

$ ping -c 2 kubefate.net

PING kubefate.net (10.160.112.145) 56(84) bytes of data.

64 bytes from kubefate.net (10.160.112.145): icmp_seq=1 ttl=64 time=0.080 ms

64 bytes from kubefate.net (10.160.112.145): icmp_seq=2 ttl=64 time=0.054 ms

--- kubefate.net ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1006ms

rtt min/avg/max/mdev = 0.054/0.067/0.080/0.013 ms

# KubeFATE service version

$ kubefate version

* kubefate service version=v1.0.2

* kubefate commandLine version=v1.0.2

6. Install two FATE parties

# Prepare two namespaces: fate-9999 for party 9999 and fate-10000 for party 10000

$ kubectl create namespace fate-9999

$ kubectl create namespace fate-10000

# Copy the cluster.yaml sample in the working folder, once per party

$ cp ./cluster.yaml fate-9999.yaml && cp ./cluster.yaml fate-10000.yaml

# Modify the config file for each party

# Install these two FATE clusters via KubeFATE

$ kubefate cluster install -f ./fate-9999.yaml

create job success, job id=a3dd184f-084f-4d98-9841-29927bdbf627

$ kubefate cluster install -f ./fate-10000.yaml

create job success, job id=370ed79f-637e-482c-bc6a-7bf042b64e67

# verify

$ kubefate job ls

7. Verify the deployment

# Use toy_example to verify the deployment

# Find the python pod of party 10000 with kubectl

$ kubectl get pod -n fate-10000 | grep python

python-dc94c9786-8jsgh 2/2 Running 0 3m13s

# enter the python pod

$ kubectl exec -it python-dc94c9786-8jsgh -n fate-10000 -- /bin/bash

# run the toy_example

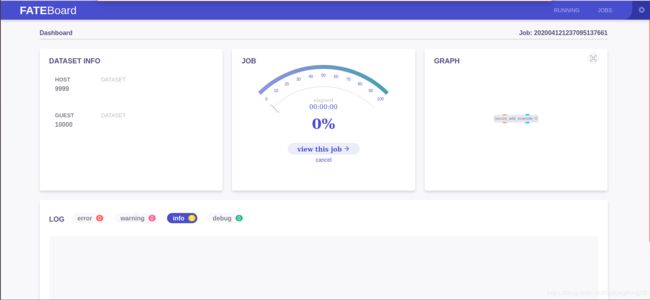

$ cd examples/toy_example/ && python run_toy_example.py 10000 9999 18. check the Fate-Dashboard

# the FATE-Dashboard is exposed as `http://${party_id}.fateboard.${serviceurl}` in KubeFATE deployments.

# Party 9999 FATE-Dashboard: http://9999.fateboard.kubefate.net/

# Party 10000 FATE-Dashboard: http://10000.fateboard.kubefate.net/

# If DNS is not configured for these names, add them to /etc/hosts

$ sudo -- sh -c "echo \"10.160.112.145 9999.fateboard.kubefate.net\" >> /etc/hosts"

$ sudo -- sh -c "echo \"10.160.112.145 10000.fateboard.kubefate.net\" >> /etc/hosts"
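
All of the /etc/hosts entries in this walkthrough follow one pattern: the ingress address, then either the service URL itself or `${party_id}.fateboard.${serviceurl}`. The sketch below generates those lines for a list of party ids so they can be reviewed before being appended to /etc/hosts. hosts_entries is a hypothetical helper name, and the IP is the ingress ADDRESS from the example output, which will differ on other machines.

```python
def hosts_entries(ingress_ip, party_ids, service_url="kubefate.net"):
    """Return /etc/hosts lines for the KubeFATE service and each FATE-Dashboard."""
    names = [service_url]
    names += [f"{party_id}.fateboard.{service_url}" for party_id in party_ids]
    return [f"{ingress_ip} {name}" for name in names]


for line in hosts_entries("10.160.112.145", [9999, 10000]):
    print(line)
```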

【Error log】

1. The following packages have unmet dependencies:

software-properties-common : Depends: python3-software-properties (= 0.92.37.8) but it is not going to be installed

E: Unable to correct problems, you have held broken packages.

Solution: switching to the Aliyun apt mirror resolved the problem.