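Implementing Custom Rate Limiting in Spring Cloud Gateway

Contents

Overview

1. How rate limiting works

2. Implementing custom rate limiting in code

3. Using the rate limiter

A complex example and the complete code

Troubleshooting 1: rate limiting has no effect

Troubleshooting 2: the rate limiter cannot read the values that HeaderDealFilter put into the header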

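Overview

There are plenty of write-ups on rate limiting in Spring Cloud Gateway, and the official documentation is detailed too. What I want to cover is giving each rate-limit key its own rate-limit parameters, that is, custom rate limiting.

The usual example goes like this: you define a KeyResolver, then configure the two rate-limit parameters in yml: redis-rate-limiter.replenishRate (the refill rate) and redis-rate-limiter.burstCapacity (the token bucket size).

That is all it takes.

I won't paste that example here.

This kind of rate limiting takes the value produced by your KeyResolver (a URL, an IP, a system name, a user parameter; in the end it is just a string, the rate-limit key or rate-limit dimension) and limits it with the configured replenishRate and burstCapacity.

The problem: I want each concrete value returned by the KeyResolver to have its own replenishRate and burstCapacity,

rather than the single pair hard-coded in yml. For example, to limit by IP you define your KeyResolver as: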

@Bean

public KeyResolver hostAddrKeyResolver2() {

return exchange -> Mono.just(exchange.getRequest().getRemoteAddress().getHostName());

}

and the rate-limit parameters as:

redis-rate-limiter.replenishRate: 1

redis-rate-limiter.burstCapacity: 2

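Then every requesting IP shares this one rate-limit configuration.

I want custom rate limiting instead. For example, still limiting by IP, but the limit for 192.168.2.11 is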

redis-rate-limiter.replenishRate: 1

redis-rate-limiter.burstCapacity: 2

the limit for 192.168.2.12 is

redis-rate-limiter.replenishRate: 100

redis-rate-limiter.burstCapacity: 200

and the limit for 192.168.2.13 is

redis-rate-limiter.replenishRate: 500

redis-rate-limiter.burstCapacity: 2000

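This is what customers actually ask for in real-world scenarios. So how do we implement it?

That is the custom rate limiting I want to talk about.

The article is structured as follows, essentials only: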

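1. How rate limiting works

The common approach today is the token bucket algorithm, which has two key parameters: replenishRate (the refill rate) and burstCapacity (the token bucket size).

The refill rate is how many tokens are put into the bucket per second.

The bucket size is the maximum number of tokens the bucket can hold.

The core idea: every incoming request must take one token from the bucket, and only a request holding this pass gets through.

Picture a pipe dripping water into a bucket at a constant rate (2 drops per second), where the bucket holds 100 drops.

Each request has to take one drop from the bucket to pass; a request that finds no water is rejected.

In this setup the bucket is not strictly required, because requests could take water straight from the pipe. That is essentially the leaky bucket idea, where traffic can never exceed the fixed rate.

Adding the bucket makes it possible to save up a bucketful of water. When requests are sparse, the bucket holds more water than the pipe delivers in a second (2 drops).

This leads to the following behaviour: at the start the bucket may be full (100 drops), and then a large number of requests arrive at once.

In that first second the token bucket can hand out 100 drops instead of 2. If requests keep pouring in after that, the water available depends only on the flow rate of the pipe (each new drop is taken immediately), and this is the phase where limiting actually kicks in.

In this sense the token bucket is an improvement on the leaky bucket algorithm.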
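To make the pipe-and-bucket picture concrete, here is a minimal in-memory sketch in Java. The class name TokenBucket and the injected clock are assumptions made for this illustration only; they are not part of Spring Cloud Gateway, and the sketch is not how the gateway stores its state.

```java
import java.util.function.LongSupplier;

/** Illustrative in-memory token bucket; the clock is injected so the behaviour is easy to check. */
final class TokenBucket {
    private final long replenishRate;        // tokens added per second (the pipe)
    private final long burstCapacity;        // maximum tokens the bucket can hold
    private final LongSupplier clockSeconds; // hypothetical time source, in whole seconds
    private long tokens;
    private long lastRefillSecond;

    TokenBucket(long replenishRate, long burstCapacity, LongSupplier clockSeconds) {
        this.replenishRate = replenishRate;
        this.burstCapacity = burstCapacity;
        this.clockSeconds = clockSeconds;
        this.tokens = burstCapacity;                     // the bucket starts full
        this.lastRefillSecond = clockSeconds.getAsLong();
    }

    /** Takes one token if one is available; returns false when the request should be rejected. */
    synchronized boolean tryAcquire() {
        long now = clockSeconds.getAsLong();
        long elapsed = Math.max(0, now - lastRefillSecond);
        // Refill for the elapsed time, but never beyond the bucket size.
        tokens = Math.min(burstCapacity, tokens + elapsed * replenishRate);
        lastRefillSecond = now;
        if (tokens >= 1) {
            tokens -= 1;
            return true;
        }
        return false;
    }
}
```

With a rate of 2 and a capacity of 100, a full bucket lets the first 100 requests through in the same second; after that only 2 requests per second pass, which matches the burst-then-limit behaviour described above.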

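Back to the code: how is this principle implemented?

First, to rate-limit requests, the obvious place is a filter.

Requests that meet the condition pass; the rest are rejected.

Next, the rate-limit dimension.

Since we want to limit by different characteristics of a request, that information has to come from the request.

So anything that can be read from the request can serve as a rate-limit dimension.

For example, you can read the IP address, URL, parameters and cookies from the request, as well as values from the headers.

All of these can be used as rate-limit dimensions.

Finally, the token bucket has to run its pipe and bucket for each concrete value of the rate-limit key.

Where does this transient state live?

The token bucket algorithm is fixed, but whether the bucket currently holds any water changes in real time. It has to be stored so that when the next request arrives we can decide whether it passes.

There are many options for storing this transient state.

For example, in Java memory, in a database, or in a file.

Or in Redis.

These options have different consequences.

In a multi-instance distributed deployment, if the state lives in memory and is not synchronised, each instance's in-memory token bucket works on its own.

The refill rate and bucket size you define then apply per instance, one set each.

If I want one shared limit on the total requests across all instances, the token bucket must live in a shared place,

so that requests from any instance queue at the same bucket; that is a global rate limit.

The common choice today is Redis. It is fast and is usually already present in a distributed architecture as the session and cache server, so the common rate limiters all depend on Redis.

With that said, let's look at how the Spring Cloud Gateway filter does it: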

# - name: RequestRateLimiter

# args:

# key-resolver: "#{@uriKeyResolverMy}"

# redis-rate-limiter.replenishRate: 1

# redis-rate-limiter.burstCapacity: 2

This filter is actually RequestRateLimiterGatewayFilterFactory.

Source code:

/*

* Copyright 2013-2017 the original author or authors.

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*

*/

package org.springframework.cloud.gateway.filter.factory;

import org.springframework.cloud.gateway.filter.GatewayFilter;

import org.springframework.cloud.gateway.filter.ratelimit.KeyResolver;

import org.springframework.cloud.gateway.filter.ratelimit.RateLimiter;

import org.springframework.cloud.gateway.route.Route;

import org.springframework.cloud.gateway.support.ServerWebExchangeUtils;

import org.springframework.http.HttpStatus;

import java.util.Map;

/**

* User Request Rate Limiter filter. See https://stripe.com/blog/rate-limiters and

*/

public class RequestRateLimiterGatewayFilterFactory extends AbstractGatewayFilterFactory<RequestRateLimiterGatewayFilterFactory.Config> {

public static final String KEY_RESOLVER_KEY = "keyResolver";

private final RateLimiter defaultRateLimiter;

private final KeyResolver defaultKeyResolver;

public RequestRateLimiterGatewayFilterFactory(RateLimiter defaultRateLimiter,

KeyResolver defaultKeyResolver) {

super(Config.class);

this.defaultRateLimiter = defaultRateLimiter;

this.defaultKeyResolver = defaultKeyResolver;

}

public KeyResolver getDefaultKeyResolver() {

return defaultKeyResolver;

}

public RateLimiter getDefaultRateLimiter() {

return defaultRateLimiter;

}

@SuppressWarnings("unchecked")

@Override

public GatewayFilter apply(Config config) {

KeyResolver resolver = (config.keyResolver == null) ? defaultKeyResolver : config.keyResolver;

RateLimiter ...

The key code is:

@SuppressWarnings("unchecked")

@Override

public GatewayFilter apply(Config config) {

KeyResolver resolver = (config.keyResolver == null) ? defaultKeyResolver : config.keyResolver;

RateLimiter ...

Next, let's look at the implementation of this limiter.isAllowed:

package org.springframework.cloud.gateway.filter.ratelimit;

import java.time.Instant;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.HashMap;

import java.util.List;

import java.util.concurrent.atomic.AtomicBoolean;

import javax.validation.constraints.Min;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.jetbrains.annotations.NotNull;

import reactor.core.publisher.Flux;

import reactor.core.publisher.Mono;

import org.springframework.beans.BeansException;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.ApplicationContext;

import org.springframework.context.ApplicationContextAware;

import org.springframework.data.redis.core.ReactiveRedisTemplate;

import org.springframework.data.redis.core.script.RedisScript;

import org.springframework.validation.Validator;

import org.springframework.validation.annotation.Validated;

/**

* See https://stripe.com/blog/rate-limiters and

* https://gist.github.com/ptarjan/e38f45f2dfe601419ca3af937fff574d#file-1-check_request_rate_limiter-rb-L11-L34

*

* @author Spencer Gibb

*/

@ConfigurationProperties("spring.cloud.gateway.redis-rate-limiter")

public class RedisRateLimiter extends AbstractRateLimiter<RedisRateLimiter.Config> implements ApplicationContextAware {

@Deprecated

public static final String REPLENISH_RATE_KEY = "replenishRate";

@Deprecated

public static final String BURST_CAPACITY_KEY = "burstCapacity";

public static final String CONFIGURATION_PROPERTY_NAME = "redis-rate-limiter";

public static final String REDIS_SCRIPT_NAME = "redisRequestRateLimiterScript";

public static final String REMAINING_HEADER = "X-RateLimit-Remaining";

public static final String REPLENISH_RATE_HEADER = "X-RateLimit-Replenish-Rate";

public static final String BURST_CAPACITY_HEADER = "X-RateLimit-Burst-Capacity";

private Log log = LogFactory.getLog(getClass());

private ReactiveRedisTemplate<String, String> redisTemplate;

private RedisScript<List<Long>> script;

private AtomicBoolean initialized = new AtomicBoolean(false);

private Config defaultConfig;

// configuration properties

/** Whether or not to include headers containing rate limiter information, defaults to true. */

private boolean includeHeaders = true;

/** The name of the header that returns number of remaining requests during the current second. */

private String remainingHeader = REMAINING_HEADER;

/** The name of the header that returns the replenish rate configuration. */

private String replenishRateHeader = REPLENISH_RATE_HEADER;

/** The name of the header that returns the burst capacity configuration. */

private String burstCapacityHeader = BURST_CAPACITY_HEADER;

public RedisRateLimiter(ReactiveRedisTemplate<String, String> redisTemplate,

RedisScript<List<Long>> script, Validator validator) {

super(Config.class, CONFIGURATION_PROPERTY_NAME, validator);

this.redisTemplate = redisTemplate;

this.script = script;

initialized.compareAndSet(false, true);

}

public RedisRateLimiter(int defaultReplenishRate, int defaultBurstCapacity) {

super(Config.class, CONFIGURATION_PROPERTY_NAME, null);

this.defaultConfig = new Config()

.setReplenishRate(defaultReplenishRate)

.setBurstCapacity(defaultBurstCapacity);

}

public boolean isIncludeHeaders() {

return includeHeaders;

}

public void setIncludeHeaders(boolean includeHeaders) {

this.includeHeaders = includeHeaders;

}

public String getRemainingHeader() {

return remainingHeader;

}

public void setRemainingHeader(String remainingHeader) {

this.remainingHeader = remainingHeader;

}

public String getReplenishRateHeader() {

return replenishRateHeader;

}

public void setReplenishRateHeader(String replenishRateHeader) {

this.replenishRateHeader = replenishRateHeader;

}

public String getBurstCapacityHeader() {

return burstCapacityHeader;

}

public void setBurstCapacityHeader(String burstCapacityHeader) {

this.burstCapacityHeader = burstCapacityHeader;

}

@Override

@SuppressWarnings("unchecked")

public void setApplicationContext(ApplicationContext context) throws BeansException {

if (initialized.compareAndSet(false, true)) {

this.redisTemplate = context.getBean("stringReactiveRedisTemplate", ReactiveRedisTemplate.class);

this.script = context.getBean(REDIS_SCRIPT_NAME, RedisScript.class);

if (context.getBeanNamesForType(Validator.class).length > 0) {

this.setValidator(context.getBean(Validator.class));

}

}

}

/* for testing */ Config getDefaultConfig() {

return defaultConfig;

}

/**

* This uses a basic token bucket algorithm and relies on the fact that Redis scripts

* execute atomically. No other operations can run between fetching the count and

* writing the new count.

*/

@Override

@SuppressWarnings("unchecked")

public Mono<Response> isAllowed(String routeId, String id) {

if (!this.initialized.get()) {

throw new IllegalStateException("RedisRateLimiter is not initialized");

}

Config routeConfig = getConfig().getOrDefault(routeId, defaultConfig);

if (routeConfig == null) {

throw new IllegalArgumentException("No Configuration found for route " + routeId);

}

// How many requests per second do you want a user to be allowed to do?

int replenishRate = routeConfig.getReplenishRate();

// How much bursting do you want to allow?

int burstCapacity = routeConfig.getBurstCapacity();

try {

List<String> keys = getKeys(id);

// The arguments to the LUA script. time() returns unixtime in seconds.

List<String> scriptArgs = Arrays.asList(replenishRate + "", burstCapacity + "",

Instant.now().getEpochSecond() + "", "1");

// allowed, tokens_left = redis.eval(SCRIPT, keys, args)

Flux<List<Long>> flux = this.redisTemplate.execute(this.script, keys, scriptArgs);

// .log("redisratelimiter", Level.FINER);

return flux.onErrorResume(throwable -> Flux.just(Arrays.asList(1L, -1L)))

.reduce(new ArrayList<Long>(), (longs, l) -> {

longs.addAll(l);

return longs;

}) .map(results -> {

boolean allowed = results.get(0) == 1L;

Long tokensLeft = results.get(1);

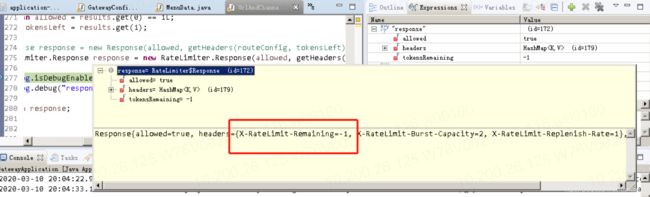

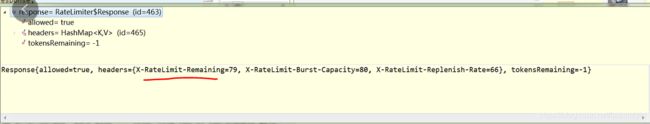

Response response = new Response(allowed, getHeaders(routeConfig, tokensLeft));

if (log.isDebugEnabled()) {

log.debug("response: " + response);

}

return response;

});

}

catch (Exception e) {

/*

* We don't want a hard dependency on Redis to allow traffic. Make sure to set

* an alert so you know if this is happening too much. Stripe's observed

* failure rate is 0.01%.

*/

log.error("Error determining if user allowed from redis", e);

}

return Mono.just(new Response(true, getHeaders(routeConfig, -1L)));

}

@NotNull

public HashMap<String, String> getHeaders(Config config, Long tokensLeft) {

HashMap<String, String> headers = new HashMap<>();

headers.put(this.remainingHeader, tokensLeft.toString());

headers.put(this.replenishRateHeader, String.valueOf(config.getReplenishRate()));

headers.put(this.burstCapacityHeader, String.valueOf(config.getBurstCapacity()));

return headers;

}

static List<String> getKeys(String id) {

// use `{}` around keys to use Redis Key hash tags

// this allows for using redis cluster

// Make a unique key per user.

String prefix = "request_rate_limiter.{" + id;

// You need two Redis keys for Token Bucket.

String tokenKey = prefix + "}.tokens";

String timestampKey = prefix + "}.timestamp";

return Arrays.asList(tokenKey, timestampKey);

}

@Validated

public static class Config {

@Min(1)

private int replenishRate;

@Min(1)

private int burstCapacity = 1;

public int getReplenishRate() {

return replenishRate;

}

public Config setReplenishRate(int replenishRate) {

this.replenishRate = replenishRate;

return this;

}

public int getBurstCapacity() {

return burstCapacity;

}

public Config setBurstCapacity(int burstCapacity) {

this.burstCapacity = burstCapacity;

return this;

}

@Override

public String toString() {

return "Config{" +

"replenishRate=" + replenishRate +

", burstCapacity=" + burstCapacity +

'}';

}

}

}

This default implementation is RedisRateLimiter.

Key code:

@Override

@SuppressWarnings("unchecked")

public Mono<Response> isAllowed(String routeId, String id) {

if (!this.initialized.get()) {

throw new IllegalStateException("RedisRateLimiter is not initialized");

}

Config routeConfig = getConfig().getOrDefault(routeId, defaultConfig);

if (routeConfig == null) {

throw new IllegalArgumentException("No Configuration found for route " + routeId);

}

// How many requests per second do you want a user to be allowed to do?

int replenishRate = routeConfig.getReplenishRate();

// How much bursting do you want to allow?

int burstCapacity = routeConfig.getBurstCapacity();

try {

List<String> keys = getKeys(id);

// The arguments to the LUA script. time() returns unixtime in seconds.

List<String> scriptArgs = Arrays.asList(replenishRate + "", burstCapacity + "",

Instant.now().getEpochSecond() + "", "1");

// allowed, tokens_left = redis.eval(SCRIPT, keys, args)

Flux<List<Long>> flux = this.redisTemplate.execute(this.script, keys, scriptArgs);

// .log("redisratelimiter", Level.FINER);

return flux.onErrorResume(throwable -> Flux.just(Arrays.asList(1L, -1L)))

.reduce(new ArrayList<Long>(), (longs, l) -> {

longs.addAll(l);

return longs;

}) .map(results -> {

boolean allowed = results.get(0) == 1L;

Long tokensLeft = results.get(1);

Response response = new Response(allowed, getHeaders(routeConfig, tokensLeft));

if (log.isDebugEnabled()) {

log.debug("response: " + response);

}

return response;

});

}

catch (Exception e) {

/*

* We don't want a hard dependency on Redis to allow traffic. Make sure to set

* an alert so you know if this is happening too much. Stripe's observed

* failure rate is 0.01%.

*/

log.error("Error determining if user allowed from redis", e);

}

return Mono.just(new Response(true, getHeaders(routeConfig, -1L)));

}

Do you see the key rate-limit parameters?

int replenishRate = routeConfig.getReplenishRate();

int burstCapacity = routeConfig.getBurstCapacity();

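These parameters are passed into the Redis script. The token bucket algorithm itself is implemented in that Redis script, which we don't need to study here.

// The arguments to the LUA script. time() returns unixtime in seconds.

List<String> scriptArgs = Arrays.asList(replenishRate + "", burstCapacity + "",

Instant.now().getEpochSecond() + "", "1");

// allowed, tokens_left = redis.eval(SCRIPT, keys, args)

Flux<List<Long>> flux = this.redisTemplate.execute(this.script, keys, scriptArgs);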

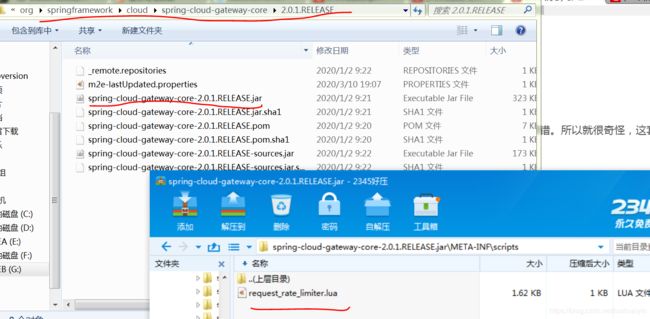

This Redis script is tucked away in spring-cloud-gateway-core-2.0.1.RELEASE.jar under \META-INF\scripts

request_rate_limiter.lua

I won't paste its code.

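The parameters routeId and id of the method public Mono<Response> isAllowed(String routeId, String id)

are passed in by its caller, the class RequestRateLimiterGatewayFilterFactory,

like this:

limiter.isAllowed(route.getId(), key).flatMap...

Here route.getId() is the id of the route (in this setup, the name of our microservice), and key is the return value of the KeyResolver we defined ourselves.

So now you know why rate limiting requires a KeyResolver.

We are free to write this KeyResolver according to our own business logic; it defines what we limit by.

At this point we understand the rate-limiting algorithm and how it is implemented in code.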
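To see how the KeyResolver value turns into one bucket per key, the small Java sketch below mirrors the getKeys method from the RedisRateLimiter listing above. The wrapper class name RateLimitKeys is made up for this illustration; only the key format comes from the source.

```java
import java.util.Arrays;
import java.util.List;

/** Illustrative helper showing the two Redis keys derived from one rate-limit key. */
final class RateLimitKeys {
    static List<String> keysFor(String id) {
        // The braces form a Redis hash tag, so both keys hash to the same cluster slot.
        String prefix = "request_rate_limiter.{" + id;
        return Arrays.asList(prefix + "}.tokens", prefix + "}.timestamp");
    }

    public static void main(String[] args) {
        // One pair of keys per distinct KeyResolver value, i.e. one bucket per key.
        System.out.println(keysFor("192.168.2.11"));
        System.out.println(keysFor("192.168.2.12"));
    }
}
```

Each distinct string returned by the KeyResolver therefore gets its own token count and timestamp in Redis, while the rate and capacity still come from the per-route configuration.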

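Next we modify this implementation to get custom rate limiting.

2. Implementing custom rate limiting in code

In terms of the principle, the token bucket needs three key inputs: replenishRate (the refill rate), burstCapacity (the token bucket size),

and the rate-limit dimension (also called the rate-limit key).

You can think of each pipe-and-bucket set described above as one rate-limit dimension.

For example, if you limit by the request IP, the rate-limit dimension is the IP.

In practice, when requests come in, one token bucket is created per IP address.

It is like buying tickets where the windows are divided by region. Assume every ticket clerk works at the same speed and can serve only 2 people per second (the refill rate). Every window is the same.

People from Hebei queue at one window and people from Shanxi at another.

Hebei and Shanxi are your request IPs, and each window is one token bucket.

Spring Cloud Gateway supports queuing by any dimension out of the box; all we have to do is define a KeyResolver.

You can define it however you like, for example queuing by gender, by age, by occupation and so on.

There are plenty of examples of this online; the core is simply defining the KeyResolver.

For example: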

@Bean

public KeyResolver hostAddrKeyResolver2() {

return exchange -> Mono.just(exchange.getRequest().getRemoteAddress().getHostName());

}

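Back to custom rate limiting.

Queuing by any type already works. What custom rate limiting has to do is:

change the clerk's capacity depending on who is queuing.

When an ordinary person buys a ticket, the clerk still serves only 2 people per second.

When celebrities, soldiers, or people with urgent business come to buy tickets, a separate window is opened for them,

and the capacity of the clerk at that window can differ too. For example, the clerk at the soldiers' window serves 5 people per second.

In code, custom rate limiting requires changes in 3 places:

1. The rate-limit dimension (apply business logic to data from the request to build a string that serves as the rate-limit key, and put it in a header so it is easy to read later)

2. Reading the rate-limit dimension, i.e. defining the KeyResolver

3. Changing the RedisRateLimiter implementation by overriding public Mono<Response> isAllowed(String routeId, String id)

1. Write a GlobalFilter, HeaderDealFilter, that puts a string into the header to serve as our custom rate-limit key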

package com.example.gate.filter;

import java.util.HashMap;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import java.util.function.Consumer;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cloud.gateway.filter.GatewayFilterChain;

import org.springframework.cloud.gateway.filter.GlobalFilter;

import org.springframework.core.Ordered;

import org.springframework.http.HttpHeaders;

import org.springframework.http.server.reactive.ServerHttpRequest;

import org.springframework.util.MultiValueMap;

import org.springframework.web.server.ServerWebExchange;

import reactor.core.publisher.Mono;

/**

* Implements the business logic; in the end it puts a channel identifier (channel) and the request IP address into the header

*

* @author lsy

*

*/

public class HeaderDealFilter implements GlobalFilter, Ordered {

Logger logger = LoggerFactory.getLogger(HeaderDealFilter.class);

@Override

public int getOrder() {

// TODO Auto-generated method stub

return 1;

}

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

logger.info("HeaderDealFilter start............");

String channelParam = exchange.getRequest().getQueryParams().getFirst("channelParam");// read from the query params to make testing easier

String IPParam = exchange.getRequest().getQueryParams().getFirst("IPParam");// read from the query params to make testing easier

logger.info("HeaderDealFilter channelParam=="+channelParam+",IPParam=="+IPParam);

String urlPath = exchange.getRequest().getURI().getPath();

String urlPath2=urlPath.replace("/", "_");// /one/hello becomes _one_hello

String urlandchannel = urlPath2+"@"+channelParam;

// The following does not work: the request headers are read-only

// exchange.getRequest().getHeaders().add("channel", channelParam);

Consumer<HttpHeaders> httpHeadersNew = httpHeader -> {

httpHeader.set("channel", channelParam);

httpHeader.set("IP-Address", IPParam);

httpHeader.set("urlandchannel", urlandchannel);// _one_hello@channelA

};

ServerHttpRequest serverHttpRequestNew = exchange.getRequest().mutate().headers(httpHeadersNew).build();// build the new headers

// ServerHttpRequest serverHttpRequestNew = exchange.getRequest().mutate().header("channel", channelParam).build();// alternative: add a single value

// wrap the mutated request in a new exchange object

ServerWebExchange changeNew = exchange.mutate().request(serverHttpRequestNew).build();

logger.info("HeaderDealFilter channel identifier put into header: "+channelParam);

logger.info("HeaderDealFilter IP identifier put into header: "+IPParam);

logger.info("HeaderDealFilter url-plus-channel identifier put into header: "+urlandchannel);

// return chain.filter(exchange);

return chain.filter(changeNew);

}

}

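Define the bean

@Bean

public GlobalFilter headerDealFilter() {// handles the header-related logic of the request

return new HeaderDealFilter();

}

In the code above I created 3 keys so that limiting by channel, by IP, and by URL plus channel are all possible.

Say I want to limit by channel. Next I define the KeyResolver.

2. Define the KeyResolver that reads the rate-limit dimension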

@Bean

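public KeyResolver selfChannelKeyResolver() {// rate-limit dimension: the channel header, set by the filter above

// Note: the KeyResolver must not return an empty value, otherwise the request does not go through. Hence the null check, which guarantees that a string is returned.

return exchange -> Mono.just(exchange.getRequest().getHeaders().getFirst("channel")==null?"default":exchange.getRequest().getHeaders().getFirst("channel"));

}

3. Rewrite the rate-limit logic to implement custom rate limiting.

First, I define my rate-limit configuration in bootstrap.yml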

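# Rate-limit parameter format: systemName_limitKey: refillRate@bucketSize. A separate switch parameter was also added to make changing the configuration easier.

selfratelimiter:

  rateLimitChannel:

    default: 5@10

    one_channelA: 2@3

    one_channelB: 1@2

    two_channelA: 1@2

    two_channelB: 2@4

Explanation: one_channelA: 2@3

one is the application name, channelA is channel A, 2 is the refill rate and 3 is the token bucket size.

Some requests may carry no channel, so there is a default configuration for them: default: 5@10

The values channelA and default come from the filter and the KeyResolver defined earlier.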
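As a small illustration of the value format, the Java sketch below parses a string such as 2@3 into a refill rate and a bucket size. The class name LimitSpec and its validation rules are assumptions for this example; the reader class and the actual lookup used in this article appear further down.

```java
/** Illustrative holder for one "refillRate@bucketSize" entry from the yml above. */
final class LimitSpec {
    final int replenishRate;
    final int burstCapacity;

    LimitSpec(int replenishRate, int burstCapacity) {
        this.replenishRate = replenishRate;
        this.burstCapacity = burstCapacity;
    }

    /** Parses values such as "2@3"; rejects anything that is not two positive integers. */
    static LimitSpec parse(String value) {
        if (value == null) {
            throw new IllegalArgumentException("limit value is missing");
        }
        String[] parts = value.trim().split("@");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected rate@capacity but got: " + value);
        }
        int rate;
        int capacity;
        try {
            rate = Integer.parseInt(parts[0].trim());
            capacity = Integer.parseInt(parts[1].trim());
        } catch (NumberFormatException e) {
            throw new IllegalArgumentException("rate and capacity must be integers: " + value, e);
        }
        if (rate < 1 || capacity < 1) {
            throw new IllegalArgumentException("rate and capacity must be at least 1: " + value);
        }
        return new LimitSpec(rate, capacity);
    }
}
```

Rejecting malformed values early, rather than falling back silently, is one possible design choice; it makes a typo in the yml visible on the first request that hits that key.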

The class that reads this configuration:

package com.example.gate.limit.self;

import java.util.Iterator;

import java.util.Map;

import java.util.concurrent.ConcurrentHashMap;

import javax.annotation.PostConstruct;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.stereotype.Component;

/**

* Properties class that reads the yml configuration

*

* @author lsy

*

*/

@Component

@ConfigurationProperties(prefix = "selfratelimiter")

public class RateLimiterConfig {

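public static final String SPILT_KEY="_";// separator inside the rate-limit key

public static final String SPILT_SPEED="@";// separator between the rate-limit parameters

// refill rate@token bucket size

// custom rate limits per business channel

private Map<String, String> rateLimitChannel = new ConcurrentHashMap<>();

public Map<String, String> getRateLimitChannel() {

return rateLimitChannel;

}

public void setRateLimitChannel(Map<String, String> rateLimitChannel) {

this.rateLimitChannel = rateLimitChannel;

}

}

The yml settings are now loaded into this class.

Rewriting the rate-limit logic: write a new class, ChannelRedisRateLimiter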

package com.example.gate.limit.self;

import java.time.Instant;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import java.util.concurrent.atomic.AtomicBoolean;

import javax.validation.constraints.Min;

import javax.validation.constraints.NotNull;

import org.apache.commons.logging.Log;

import org.apache.commons.logging.LogFactory;

import org.springframework.beans.BeansException;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.cloud.gateway.filter.ratelimit.AbstractRateLimiter;

import org.springframework.cloud.gateway.filter.ratelimit.RateLimiter;

import org.springframework.cloud.gateway.filter.ratelimit.RateLimiter.Response;

import org.springframework.context.ApplicationContext;

import org.springframework.context.ApplicationContextAware;

import org.springframework.data.redis.core.ReactiveRedisTemplate;

import org.springframework.data.redis.core.script.RedisScript;

import org.springframework.validation.Validator;

import org.springframework.validation.annotation.Validated;

import reactor.core.publisher.Flux;

import reactor.core.publisher.Mono;

/**

* See https://stripe.com/blog/rate-limiters and

* https://gist.github.com/ptarjan/e38f45f2dfe601419ca3af937fff574d#file-1-check_request_rate_limiter-rb-L11-L34

*

* @author lsy

*

* Implements custom rate limiting driven by business logic

*

*/

//@ConfigurationProperties("spring.cloud.gateway.redis-rate-limiter")

public class ChannelRedisRateLimiter extends AbstractRateLimiter<ChannelRedisRateLimiter.Config> implements ApplicationContextAware {

// @Deprecated

public static final String REPLENISH_RATE_KEY = "replenishRate";// modified

// @Deprecated

public static final String BURST_CAPACITY_KEY = "burstCapacity";// modified

public static final String CONFIGURATION_PROPERTY_NAME = "channel-redis-rate-limiter";// modified

public static final String REDIS_SCRIPT_NAME = "redisRequestRateLimiterScript";

public static final String REMAINING_HEADER = "X-RateLimit-Remaining";

public static final String REPLENISH_RATE_HEADER = "X-RateLimit-Replenish-Rate";

public static final String BURST_CAPACITY_HEADER = "X-RateLimit-Burst-Capacity";

private Log log = LogFactory.getLog(getClass());

private ReactiveRedisTemplate<String, String> redisTemplate;

private RedisScript<List<Long>> script;

private AtomicBoolean initialized = new AtomicBoolean(false);

private Config defaultConfig;

// configuration properties

/** Whether or not to include headers containing rate limiter information, defaults to true. */

private boolean includeHeaders = true;

/** The name of the header that returns number of remaining requests during the current second. */

private String remainingHeader = REMAINING_HEADER;

/** The name of the header that returns the replenish rate configuration. */

private String replenishRateHeader = REPLENISH_RATE_HEADER;

/** The name of the header that returns the burst capacity configuration. */

private String burstCapacityHeader = BURST_CAPACITY_HEADER;

public ChannelRedisRateLimiter(ReactiveRedisTemplate<String, String> redisTemplate,

RedisScript<List<Long>> script, Validator validator) {

super(Config.class, CONFIGURATION_PROPERTY_NAME, validator);

this.redisTemplate = redisTemplate;

this.script = script;

initialized.compareAndSet(false, true);

}

public ChannelRedisRateLimiter(int defaultReplenishRate, int defaultBurstCapacity) {

super(Config.class, CONFIGURATION_PROPERTY_NAME, null);

this.defaultConfig = new Config()

.setReplenishRate(defaultReplenishRate)

.setBurstCapacity(defaultBurstCapacity);

}

public boolean isIncludeHeaders() {

return includeHeaders;

}

public void setIncludeHeaders(boolean includeHeaders) {

this.includeHeaders = includeHeaders;

}

public String getRemainingHeader() {

return remainingHeader;

}

public void setRemainingHeader(String remainingHeader) {

this.remainingHeader = remainingHeader;

}

public String getReplenishRateHeader() {

return replenishRateHeader;

}

public void setReplenishRateHeader(String replenishRateHeader) {

this.replenishRateHeader = replenishRateHeader;

}

public String getBurstCapacityHeader() {

return burstCapacityHeader;

}

public void setBurstCapacityHeader(String burstCapacityHeader) {

this.burstCapacityHeader = burstCapacityHeader;

}

private RateLimiterConfig rateLimiterConfig;// configuration for custom rate limiting

@Override

@SuppressWarnings("unchecked")

public void setApplicationContext(ApplicationContext context) throws BeansException {

log.info("setApplicationContext custom rate-limit config: RateLimiterConf is null=="+(rateLimiterConfig==null));

this.rateLimiterConfig = context.getBean(RateLimiterConfig.class);

if (initialized.compareAndSet(false, true)) {

this.redisTemplate = context.getBean("stringReactiveRedisTemplate", ReactiveRedisTemplate.class);

this.script = context.getBean(REDIS_SCRIPT_NAME, RedisScript.class);

if (context.getBeanNamesForType(Validator.class).length > 0) {

this.setValidator(context.getBean(Validator.class));

}

}

}

/* for testing */ Config getDefaultConfig() {

return defaultConfig;

}

/**

* Looks up the custom rate-limit configuration

*

* @param routeId

* @param id

* @return

*/

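public Map<String, Integer> getConfigFromMap(String routeId, String id){

Map<String, Integer> res=new HashMap<>();

res.put("replenishRate", -1);

res.put("burstCapacity", -1);

int replenishRate = -1;

int burstCapacity = -1;

/*********************custom rate limiting: start**************************/

// The rate-limit key is the parameter id. To give different keys different limits, this is where we look up our configuration by id, including replenishRate (the refill rate) and burstCapacity (the token bucket size).

// This class limits by "channel", a field defined by the business. The logic that obtains the channel lives in the earlier filter and is passed on through the KeyResolver, so the value is available here.

// Custom rate limiting means each concrete key has its own replenishRate and burstCapacity; a configuration mapping here is all it takes.

// log.info("ChannelRedisRateLimiter routeId["+routeId+"],id["+id+"]"+" initial config: replenishRate=="+replenishRate+",burstCapacity=="+burstCapacity);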

if(rateLimiterConfig==null) {

log.error("RedisRateLimiter rateLimiterConfig is not initialized");

throw new IllegalStateException("RedisRateLimiter rateLimiterConfig is not initialized");

}

Map<String, String> rateLimitConfigMap = rateLimiterConfig.getRateLimitChannel();

if(rateLimitConfigMap==null) {

log.error("RedisRateLimiter rateLimitConfigMap is not initialized");

throw new IllegalStateException("RedisRateLimiter rateLimitConfigMap is not initialized");

}

int speed = -1;

int capacity = -1;

String configKey=routeId+RateLimiterConfig.SPILT_KEY+id;

// If id is empty or "default", the rate-limit key could not be read from the request, so the default configuration (the default entry in yml) is used

if(id==null || id.trim().equals("")|| id.trim().equals("default")) {

configKey = "default";

log.error("RedisRateLimiter id is null or default,use the default value for configKey...");

}

String configValue=(String)rateLimitConfigMap.get(configKey);

String configDefaultValue=(String)rateLimitConfigMap.get("default");

if(configValue==null || configValue.trim().equals("")) {

log.error("RedisRateLimiter configValue is not match ,use the default value...configDefaultValue=="+configDefaultValue);

if(configDefaultValue==null || configDefaultValue.trim().equals("")) {

log.error("RedisRateLimiter is not match ,and the default value is also null");

throw new IllegalStateException("RedisRateLimiter is not match ,and the default value is also null");

}else {

configValue = configDefaultValue;// no matching entry found, fall back to the default configuration

}

}

String[] defValues = configValue.split(RateLimiterConfig.SPILT_SPEED);

if(defValues == null || defValues.length<2) {// the configured value is malformed

log.error("RedisRateLimiter rateLimitConfigMap defValues is not initialized");

throw new IllegalStateException("RedisRateLimiter rateLimitConfigMap defValues is not initialized");

}

try {

// one_channelA: 2@3

speed = Integer.valueOf(defValues[0].trim());

capacity = Integer.valueOf(defValues[1].trim());

} catch (Exception e) {

log.error(e.getMessage()+defValues[0]+","+defValues[1]);

throw new IllegalStateException("RedisRateLimiter rateLimitConfigMap defValues is not valided!");

}

replenishRate = speed;

burstCapacity = capacity;

log.info("ChannelRedisRateLimiter routeId["+routeId+"],id["+id+"]"+" matched config: replenishRate=="+replenishRate+",burstCapacity=="+burstCapacity);

/*********************custom rate limiting: end**************************/

// Extra guard so that a misconfigured yml does not cause an error

if(replenishRate==-1 || burstCapacity==-1) {

replenishRate=Integer.MAX_VALUE;

burstCapacity=Integer.MAX_VALUE;

}

res.put("replenishRate", replenishRate);

res.put("burstCapacity", burstCapacity);

return res;

}

/**

* This uses a basic token bucket algorithm and relies on the fact that Redis scripts

* execute atomically. No other operations can run between fetching the count and

* writing the new count.

*/

@Override

@SuppressWarnings("unchecked")

public Mono<Response> isAllowed(String routeId, String id) {

if (!this.initialized.get()) {

throw new IllegalStateException("RedisRateLimiter is not initialized");

}

// modified: commented out

// Config routeConfig = getConfig().getOrDefault(routeId, defaultConfig);

//

// if (routeConfig == null) {

// throw new IllegalArgumentException("No Configuration found for route " + routeId);

// }

//

// // How many requests per second do you want a user to be allowed to do?

// int replenishRate = routeConfig.getReplenishRate();

//

// // How much bursting do you want to allow?

// int burstCapacity = routeConfig.getBurstCapacity();

// modified: look up the custom rate-limit parameters for this key

Map<String, Integer> configMap = this.getConfigFromMap(routeId, id);

int replenishRate = configMap.get("replenishRate");

int burstCapacity = configMap.get("burstCapacity");

if(replenishRate==-1 || burstCapacity==-1) {

log.error("RedisRateLimiter rateLimiterConfig values is not valid...");

throw new IllegalStateException("RedisRateLimiter rateLimiterConfig values is not valid...");

}

try {

List<String> keys = getKeys(id);

// The arguments to the LUA script. time() returns unixtime in seconds.

List<String> scriptArgs = Arrays.asList(replenishRate + "", burstCapacity + "",

Instant.now().getEpochSecond() + "", "1");

// allowed, tokens_left = redis.eval(SCRIPT, keys, args)

Flux<List<Long>> flux = this.redisTemplate.execute(this.script, keys, scriptArgs);

// .log("redisratelimiter", Level.FINER);

return flux.onErrorResume(throwable -> Flux.just(Arrays.asList(1L, -1L)))

.reduce(new ArrayList<Long>(), (longs, l) -> {

longs.addAll(l);

return longs;

}) .map(results -> {

boolean allowed = results.get(0) == 1L;

Long tokensLeft = results.get(1);

// Response response = new Response(allowed, getHeaders(routeConfig, tokensLeft));// modified: commented out

RateLimiter.Response response = new RateLimiter.Response(allowed, getHeaders(replenishRate , burstCapacity , tokensLeft));// modified

if (log.isDebugEnabled()) {

log.debug("response: " + response);

}

return response;

});

}

catch (Exception e) {

/*

* We don't want a hard dependency on Redis to allow traffic. Make sure to set

* an alert so you know if this is happening too much. Stripe's observed

* failure rate is 0.01%.

*/

log.error("Error determining if user allowed from redis", e);

}

// return Mono.just(new Response(true, getHeaders(routeConfig, -1L)));// modified: commented out

return Mono.just(new RateLimiter.Response(true, getHeaders(replenishRate , burstCapacity , -1L)));// modified

}

// modified: commented out

// @NotNull

// public HashMap getHeaders(Config config, Long tokensLeft) {

// HashMap headers = new HashMap<>();

// headers.put(this.remainingHeader, tokensLeft.toString());

// headers.put(this.replenishRateHeader, String.valueOf(config.getReplenishRate()));

// headers.put(this.burstCapacityHeader, String.valueOf(config.getBurstCapacity()));

// return headers;

// }

// modified

public HashMap<String, String> getHeaders(Integer replenishRate, Integer burstCapacity, Long tokensLeft) {

HashMap<String, String> headers = new HashMap<>();

headers.put(this.remainingHeader, tokensLeft.toString());

headers.put(this.replenishRateHeader, String.valueOf(replenishRate));

headers.put(this.burstCapacityHeader, String.valueOf(burstCapacity));

return headers;

}

static List<String> getKeys(String id) {

// use `{}` around keys to use Redis Key hash tags

// this allows for using redis cluster

// Make a unique key per user.

// String prefix = "request_rate_limiter.{" + id;

String prefix = "request_channel_rate_limiter.{" + id; // modified

// You need two Redis keys for Token Bucket.

String tokenKey = prefix + "}.tokens";

String timestampKey = prefix + "}.timestamp";

return Arrays.asList(tokenKey, timestampKey);

}

@Validated

public static class Config {

@Min(1)

private int replenishRate;

@Min(1)

private int burstCapacity = 1;

public int getReplenishRate() {

return replenishRate;

}

public Config setReplenishRate(int replenishRate) {

this.replenishRate = replenishRate;

return this;

}

public int getBurstCapacity() {

return burstCapacity;

}

public Config setBurstCapacity(int burstCapacity) {

this.burstCapacity = burstCapacity;

return this;

}

@Override

public String toString() {

return "Config{" +

"replenishRate=" + replenishRate +

", burstCapacity=" + burstCapacity +

'}';

}

}

}

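The key piece of logic is getConfigFromMap, the method that looks up the configuration.

Because the key I defined is the channel value that I put into the header myself, this gives rate limiting per channel.

Note that the rate limit configuration must have a default value: if no configuration can be found for a key, an error is thrown.

For example, some requests carry no channel. Those fall back to a default configuration, the one defined in my yml as default: 5@10

The essential thing our code does is look up the replenishRate and burstCapacity that belong to each rate-limit key, instead of the single pair of values that the stock setup only lets you configure in yml. That is how custom rate limiting is achieved.

For example, one_channelA: 2@3

Requests from channel channelA to microservice one get a replenish rate of 2 and a token bucket size of 3.

one_channelB: 1@2

Requests from channel channelB to microservice one get a replenish rate of 1 and a token bucket size of 2.

If the channel cannot be determined, the default configuration applies:

default: 5@10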
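To make the lookup rule concrete, here is a minimal sketch of resolving a `rate@capacity` string with a fallback to `default`. The class and method names (`RateLimitLookup`, `resolve`) are hypothetical and are not the author's getConfigFromMap; only the `2@3` format and the `default` key come from the configuration above.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper: resolves "rate@capacity" strings such as "2@3" for a rate-limit key. */
class RateLimitLookup {
    static final String DEFAULT_KEY = "default";
    static final String SPLIT_SPEED = "@";

    /** Returns {replenishRate, burstCapacity} for the key, falling back to the "default" entry. */
    static int[] resolve(Map<String, String> limits, String key) {
        String raw = (key == null) ? null : limits.get(key);
        if (raw == null) {
            raw = limits.get(DEFAULT_KEY); // unknown or missing key: use the default limit
        }
        if (raw == null) {
            throw new IllegalStateException("no rate limit for key " + key + " and no default configured");
        }
        String[] parts = raw.split(SPLIT_SPEED);
        if (parts.length != 2) {
            throw new IllegalStateException("expected rate@capacity but got " + raw);
        }
        return new int[] { Integer.parseInt(parts[0].trim()), Integer.parseInt(parts[1].trim()) };
    }

    public static void main(String[] args) {
        Map<String, String> limits = new HashMap<>();
        limits.put("default", "5@10");
        limits.put("one_channelA", "2@3");
        limits.put("one_channelB", "1@2");

        int[] a = resolve(limits, "one_channelA");
        System.out.println("one_channelA -> rate " + a[0] + ", capacity " + a[1]);
        int[] d = resolve(limits, "one_unknown");
        System.out.println("one_unknown  -> rate " + d[0] + ", capacity " + d[1]);
    }
}
```

Failing loudly when even the default is missing matches the IllegalStateException that the modified limiter throws for invalid values.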

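3. Using the rate limit testing tools

Above I wrote an example of rate limiting by channel (how the channel is obtained and defined is business-specific, so you need to write your own logic for obtaining it and putting it into the header).

To test and simulate whether requests from different channels get different rate limit configurations, my logic for obtaining the channel is simple: read a parameter from the request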

String channelParam = exchange.getRequest().getQueryParams().getFirst("channelParam");

Put it into the header:

Consumer<HttpHeaders> httpHeadersNew = httpHeader -> {

httpHeader.set("channel", channelParam);

httpHeader.set("IP-Address", IPParam);

httpHeader.set("urlandchannel", urlandchannel);// _one_hello@channelA

};

ServerHttpRequest serverHttpRequestNew = exchange.getRequest().mutate().headers(httpHeadersNew).build(); // build a request with the new headers

// ServerHttpRequest serverHttpRequestNew = exchange.getRequest().mutate().header("channel", channelParam).build(); // alternative: add a single value

// wrap the new request in a new exchange object

ServerWebExchange changeNew = exchange.mutate().request(serverHttpRequestNew).build();

To test rate limiting, I built a gateway application, gate, and a microservice, one.

In one, define an ordinary GET endpoint:

package com.example.one.controller;

import java.time.LocalDateTime;

import java.time.format.DateTimeFormatter;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

@RestController

@RequestMapping("/limit")

public class LimitTestController {

@Value("${spring.cloud.client.ip-address}")

private String ip;

@Value("${spring.application.name}")

private String servername;

@Value("${server.port}")

private String port;

@GetMapping(value="/sprint")

public String sprint() {

String[] colorArr=new String[] {"red","blue","green","pink","gray"};

DateTimeFormatter df = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");//LocalDateTime startTime = LocalDateTime.now();

String timeNow=LocalDateTime.now().format(df);

String message="sprint ! I am ["+servername+":"+ip+":"+port+"]"+"..."+timeNow;

System.out.println(message);

return message;

}

@GetMapping(value="/sprint2")

public String sprint2() {

String[] colorArr=new String[] {"red","blue","green","pink","gray"};

DateTimeFormatter df = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");//LocalDateTime startTime = LocalDateTime.now();

String timeNow=LocalDateTime.now().format(df);

String message="sprint ! I am ["+servername+":"+ip+":"+port+"]"+"..."+timeNow;

System.out.println(message);

return message;

}

}

These are just two simple GET endpoints: when a request arrives, each prints one line and returns one line.

gateway:
  routes:
  - id: one
    uri: lb://one
    predicates:
    - Path=/one/**
    filters:
    - StripPrefix=1
    - name: RequestRateLimiter
      args:
        rate-limiter: "#{@channelRedisRateLimiter}"
        key-resolver: "#{@selfChannelKeyResolver}"

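Now the testing can begin.

I use two load-testing tools: jmeter and http_load.

There are many tools for load-testing HTTP; postman works too.

http_load is tiny, only a few MB. jmeter is more polished, with a GUI and powerful features, and is still only about 60 MB.

First, how to use http_load:

After unzipping, put cygwin1.dll into C:\Windows\system and http_load.exe into C:\Windows\System32.

Open a cmd prompt and type http_load. If the usage text is displayed, the setup works.

Usage:

http_load -p 10 -s 60 -r 3 -f 100 C:\urllist.txt

Meaning of the parameters:

-parallel (short form -p): number of concurrent user processes

-fetches (short form -f): total number of requests

-rate (short form -r): requests per second

-seconds (short form -s): total duration of the run, in seconds

Prepare the URL file (C:\urllist.txt in the commands here), with one URL per line. For example https://www.baidu.com/

The content of my test URL file:

http://localhost:8888/one/limit/sprint?channelParam=channelA

http_load -p 10 -s 60 -r 5 -f 100 C:\urllist.txt

This starts 10 threads to simulate 10 concurrent users, finishing within 60 seconds at 5 requests per second, 100 requests in total.

Open a cmd window, enter the command, and start the load test: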

C:\Users\lsy>

C:\Users\lsy>http_load -p 10 -s 60 -r 5 -f 100 C:\urllist.txt

cygwin warning:

MS-DOS style path detected: C:\urllist.txt

Preferred POSIX equivalent is: /cygdrive/c/urllist.txt

CYGWIN environment variable option "nodosfilewarning" turns off this warning.

Consult the user's guide for more details about POSIX paths:

http://cygwin.com/cygwin-ug-net/using.html#using-pathnames

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

http://localhost:8888/one/limit/sprint?channelParam=channelA: byte count wrong

100 fetches, 5 max parallel, 4085 bytes, in 20.0281 seconds

40.85 mean bytes/connection

4.99297 fetches/sec, 203.963 bytes/sec

msecs/connect: 0.76004 mean, 3 max, 0 min

msecs/first-response: 53.9731 mean, 941.054 max, 10.001 min

57 bad byte counts

HTTP response codes:

code 200 -- 43

code 429 -- 57

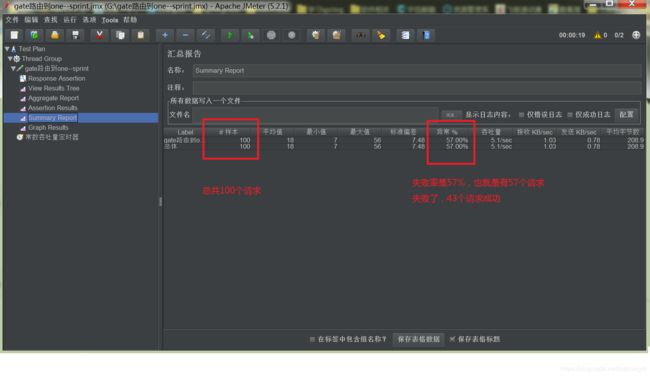

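C:\Users\lsy>

Of the 100 requests, 43 succeeded and 57 failed.

The rate limit defined for channel A is one_channelA: 2@3

That is 2 per second, with a token bucket of at most 3.

So only 2 requests per second from channel A can be handled: of the 5 requests sent each second, 2 pass and 3 fail, over 100 requests in total.

By that arithmetic 2/5 of them should pass, which is 40 requests. Why did 43 pass?

This is the effect of the token bucket described earlier. At the start the bucket is full and holds 3 tokens. When the requests arrive, it is drained at once.

After that, throughput depends on the refill rate, that is, replenishRate.

The backend log shows the same thing: the 3 banked tokens let extra requests through at the start (5 lines in the first second).

After that, requests pass at 2 per second.

Request log: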

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:36

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:36

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:36

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:36

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:36

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:37

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:37

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:38

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:38

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:39

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:39

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:40

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:40

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:41

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:41

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:42

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:42

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:43

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:43

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:44

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:44

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:45

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:45

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:46

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:46

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:47

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:47

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:48

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:48

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:49

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:49

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:50

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:50

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:51

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:51

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:52

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:52

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:53

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:53

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:54

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:54

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:55

sprint ! I am [one:192.168.124.17:8901]...2020-03-09 22:05:55

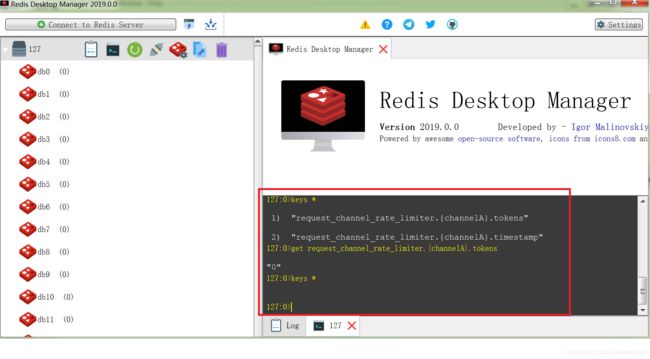
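The 43-versus-40 arithmetic can be checked with a small simulation. This is a simplified sketch, not the limiter's actual Lua script: it assumes the bucket starts full, refills `rate` tokens per elapsed whole second, and is capped at `capacity`. The class name `TokenBucketSim` and its parameters are made up for illustration.

```java
/** Simplified token bucket model that, by assumption, works on whole-second timestamps. */
class TokenBucketSim {

    /**
     * Sends totalRequests requests spaced intervalMs apart, the first one at startOffsetMs,
     * and returns how many of them are allowed.
     */
    static int simulate(int rate, int capacity, int totalRequests, int intervalMs, int startOffsetMs) {
        long tokens = capacity;                      // the bucket starts full
        long lastRefreshed = startOffsetMs / 1000;   // second in which the previous request arrived
        int allowed = 0;
        for (int i = 0; i < totalRequests; i++) {
            long nowSec = (startOffsetMs + (long) i * intervalMs) / 1000;
            long delta = Math.max(0, nowSec - lastRefreshed);
            long filled = Math.min(capacity, tokens + delta * rate); // refill, capped at capacity
            if (filled >= 1) {
                allowed++;
                tokens = filled - 1;                 // this request takes one token
            } else {
                tokens = filled;                     // rejected, nothing is taken
            }
            lastRefreshed = nowSec;
        }
        return allowed;
    }

    public static void main(String[] args) {
        // 100 requests, one every 200 ms (5 per second), limit 2@3
        System.out.println(simulate(2, 3, 100, 200, 0));   // run aligned to a second boundary
        System.out.println(simulate(2, 3, 100, 200, 500)); // run starting mid-second
    }
}
```

Under these assumptions the aligned run allows 41 requests (3 from the full bucket, then 2 in each of the remaining 19 seconds) and the mid-second run allows 43, because it touches 21 distinct seconds. The exact count in a real test therefore depends on how the requests line up with second boundaries, but it always sits a little above the naive 40.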

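While the requests are running, you can look in Redis to see whether the rate-limit keys exist. The keys disappear shortly after the requests stop, because they expire.

This confirms that the rate limiting really depends on Redis, so Redis must be configured in your project and must also be running.

If you are interested, the Lua script is worth studying.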

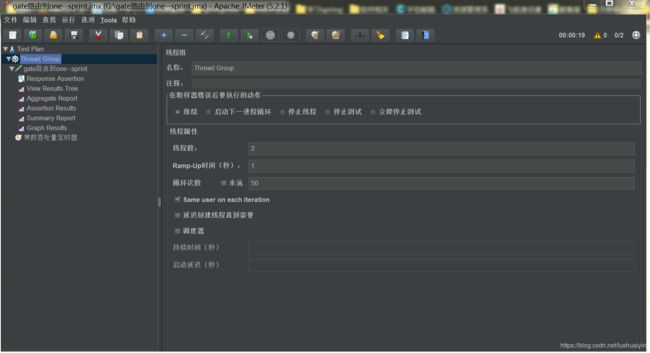

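Now for the other HTTP load-testing tool, jmeter.

It can be downloaded directly from the official site https://jmeter.apache.org/

After unzipping, double-click jmeter.bat

The tool supports multiple languages, including Simplified Chinese, and offers several look-and-feel themes.

First switch to Chinese: click the menu Options -- Choose Language -- Chinese (Simplified)

The theme is under Options -- Look and Feel; pick whichever you like.

There are plenty of jmeter tutorials online, so I will only cover it briefly here.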

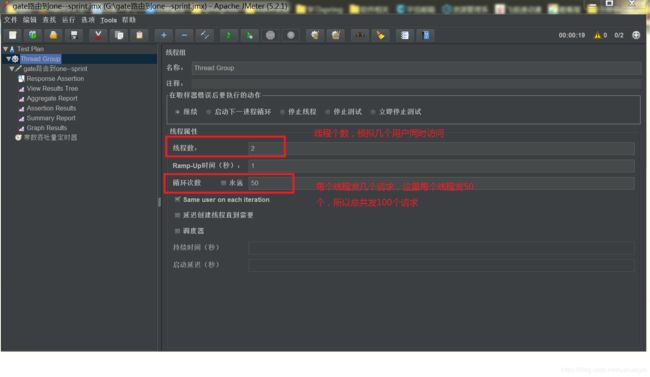

First create a thread group: a test plan exists by default, so right-click it and choose Add -- Threads (Users) -- Thread Group

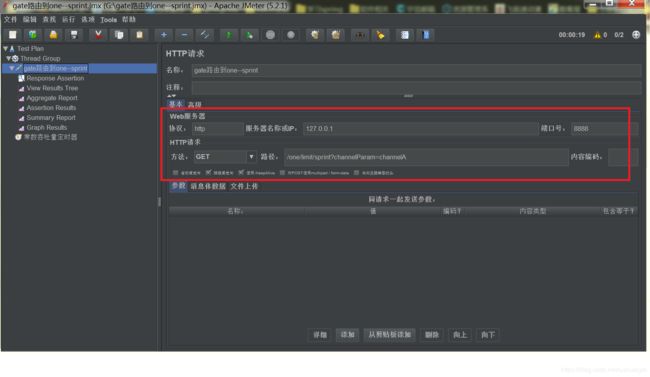

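Next add the request to send: Add -- Sampler -- HTTP Request

Nothing much to explain here; these are just the details the request needs.

Next, bring up a few result reports. After the load test we want to look at the results, and jmeter provides many report-like tools for viewing and analyzing them.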

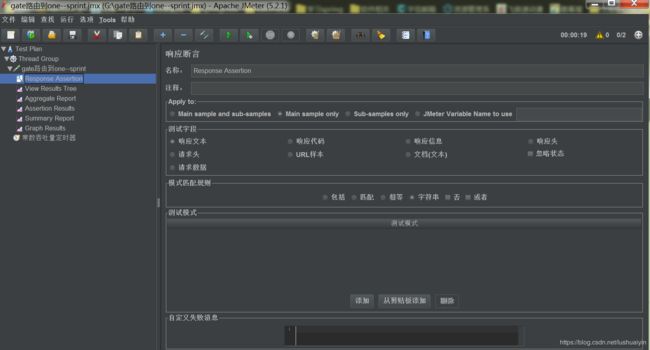

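Add an assertion (this one is required; it filters the request results. Just add it with the default configuration.)

Add Listeners: View Results Tree, Assertion Results, Aggregate Report, Generate Summary Results

I will not go into detail here. The tool is powerful, and these report-like views are worth exploring on your own.

I mainly look at the results tree and the summary results.

One more important item: Add -- Timer -- Constant Throughput Timer.

We use 2 threads to send 100 requests. Once started, those 100 requests may all be sent within 1 second. It is very fast.

To test rate limiting, what we actually want is to send a few requests per second. For example, my rate limit is 2 requests per second. To check that the limit takes effect,

the load test has to send more than 2 requests per second, ideally a fixed N per second.

Here I want 5 requests per second. To make jmeter send a steady 5 per second instead of firing everything at once, a timer has to be configured.

The timer is set in requests per minute, so 5 requests per second means 300 requests per minute.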

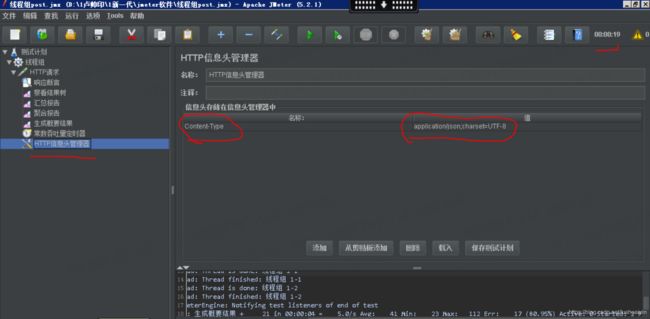

Another important feature is setting the request headers.

I set the request's Content-Type.

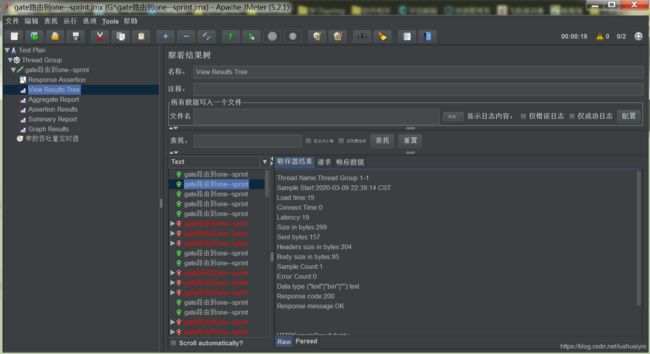

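Once everything is set, click the Start button. There is a timer in the upper-right corner that stops when all requests have been sent. Click the yellow exclamation mark to show summary information at the bottom.

When sending is finished, look at the results tree, which has the details of every request: green means success, red means failure.

Then look at the statistics in the summary results:

The result matches what we got with http_load.

With that, custom rate limiting in springcloudgateway is complete.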

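A more complex example and the complete code

Next, I will add a real rate limiting requirement from my own business.

The requirement is to limit by the combination of the request url and the channel the request comes from. Take a request to microservice one: /one/limit/sprint

The same service url gets a different limit for each requesting channel.

Requests from channel A are limited to 1 per second, with a token bucket of 2.

Requests from channel B are limited to 2 per second, with a token bucket of 3.

Following the approach above, we need 3 steps:

Write a filter that builds the rate-limit dimension and writes that string into a header, so the rate limiting component can use it later

Define a KeyResolver that reads this rate-limit key

Override RedisRateLimiter at the point where it obtains replenishRate and burstCapacity, adding our own logic that reads the configuration from our config file and replaces these 2 values, which gives custom rate limiting.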
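As a small illustration of the first step, the combined key can be built by replacing the slashes in the path and appending the channel, the same shape that HeaderDealFilter writes into the urlandchannel header. The helper class `LimitKeys` is hypothetical and only isolates that one string operation.

```java
/** Hypothetical helper that isolates how the combined url-and-channel key is built. */
class LimitKeys {

    /** "/one/limit/sprint" + "channelA" becomes "_one_limit_sprint@channelA". */
    static String urlAndChannelKey(String path, String channel) {
        return path.replace("/", "_") + "@" + channel;
    }

    public static void main(String[] args) {
        // prints _one_limit_sprint@channelA
        System.out.println(urlAndChannelKey("/one/limit/sprint", "channelA"));
    }
}
```

Keeping the key a plain string means the KeyResolver in step two only has to read one header value and return it.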

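There are 2 applications: the gateway gate and the microservice one.

First, define a controller for testing in one.

Most of the configuration is on the gateway side:

filter: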

package com.example.gate.config;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cloud.gateway.filter.GlobalFilter;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import com.example.gate.filter.HeaderDealFilter;

import com.example.gate.filter.PostBodyFilter;

import com.example.gate.filter.PrintFilter;

/**

* Configuration class; only Filter-related beans are configured here

*

* @author lsy

*

*/

@Configuration

public class FilterConfig {

Logger logger = LoggerFactory.getLogger(FilterConfig.class);

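    /* The filters are declared in execution order, which makes them easier to read */
    @Bean
    public GlobalFilter postBodyFilter() { // reads the POST request body and stores it in Attributes so later filters and microservices can use it
        return new PostBodyFilter();
    }

    @Bean
    public GlobalFilter headerDealFilter() { // handles the header-related logic of the request
        return new HeaderDealFilter();
    }

    // @Bean
    // public GlobalFilter tokenFilter() { // a simple token-validation example
    //     return new TokenFilter();
    // }

    @Bean
    public GlobalFilter printFilter() { // prints what the earlier filters produced, for verification
        return new PrintFilter();
    }

    // Same effect as the yml configuration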

// @Bean

// public RouteLocator urlFilterRouteLocator(RouteLocatorBuilder builder) {

// logger.info("FilterConfig---urlFilterRouteLocator---");

// return builder.routes()

// .route(r -> r.path("/one/**")

// .filters(f -> f.stripPrefix (1).filter(new UrlFilter())

// .addResponseHeader("urlFilterFlag", "pass"))

// .uri("lb://one")

// .order(0)

// .id("one")

// )

// .build();

// }

}

package com.example.gate.filter;

import java.util.HashMap;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import java.util.function.Consumer;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cloud.gateway.filter.GatewayFilterChain;

import org.springframework.cloud.gateway.filter.GlobalFilter;

import org.springframework.core.Ordered;

import org.springframework.http.HttpHeaders;

import org.springframework.http.server.reactive.ServerHttpRequest;

import org.springframework.util.MultiValueMap;

import org.springframework.web.server.ServerWebExchange;

import reactor.core.publisher.Mono;

/**

* Implements the business logic; in the end it puts a channel identifier (channel) and the request's IP address into the header

*

* @author lsy

*

*/

public class HeaderDealFilter implements GlobalFilter, Ordered {

Logger logger = LoggerFactory.getLogger(HeaderDealFilter.class);

@Override

public int getOrder() {

// TODO Auto-generated method stub

return 1;

}

@Override

    public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {
        logger.info("HeaderDealFilter start............");
        // For easy testing, the channel and the IP are read from query parameters here
        String channelParam = exchange.getRequest().getQueryParams().getFirst("channelParam");
        String IPParam = exchange.getRequest().getQueryParams().getFirst("IPParam");
        logger.info("HeaderDealFilter channelParam==" + channelParam + ",IPParam==" + IPParam);

        String urlPath = exchange.getRequest().getURI().getPath();
        String urlPath2 = urlPath.replace("/", "_"); // /one/hello becomes _one_hello
        String urlandchannel = urlPath2 + "@" + channelParam;

        // The following does not work, because the headers of the incoming request are read-only:
        // exchange.getRequest().getHeaders().add("channel", channelParam);
        Consumer<HttpHeaders> httpHeadersNew = httpHeader -> {
            httpHeader.set("channel", channelParam);
            httpHeader.set("IP-Address", IPParam);
            httpHeader.set("urlandchannel", urlandchannel); // _one_hello@channelA
        };
        // Build a new request that carries the extra headers
        ServerHttpRequest serverHttpRequestNew = exchange.getRequest().mutate().headers(httpHeadersNew).build();
        // Alternative that adds a single value:
        // ServerHttpRequest serverHttpRequestNew = exchange.getRequest().mutate().header("channel", channelParam).build();

        // Wrap the new request in a new exchange object
        ServerWebExchange changeNew = exchange.mutate().request(serverHttpRequestNew).build();
        logger.info("HeaderDealFilter put channel into header: " + channelParam);
        logger.info("HeaderDealFilter put IP into header: " + IPParam);
        logger.info("HeaderDealFilter put url plus channel into header: " + urlandchannel);

        // return chain.filter(exchange);
        return chain.filter(changeNew);
    }

}

There is one more filter, which only prints what the earlier filters put into the header. It is not really needed.

package com.example.gate.filter;

import java.net.InetSocketAddress;

import java.net.URI;

import java.util.HashMap;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cloud.gateway.filter.GatewayFilterChain;

import org.springframework.cloud.gateway.filter.GlobalFilter;

import org.springframework.core.Ordered;

import org.springframework.http.HttpHeaders;

import org.springframework.http.HttpMethod;

import org.springframework.http.server.RequestPath;

import org.springframework.http.server.reactive.ServerHttpRequest;

import org.springframework.util.MultiValueMap;

import org.springframework.web.server.ServerWebExchange;

import reactor.core.publisher.Mono;

/**

* The last of the pre-filters; it prints some of the data handled by the earlier filters

*

*

* @author lsy

*

*/

public class PrintFilter implements GlobalFilter, Ordered {

Logger logger = LoggerFactory.getLogger(PrintFilter.class);

@Override

public int getOrder() {

// TODO Auto-generated method stub

return 100;

}

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

if(false) {

URI urii = exchange.getRequest().getURI();

logger.info("PrintFilter开始...........getURI.URI==="+urii.toString());

String getPath = exchange.getRequest().getURI().getPath();

logger.info("PrintFilter开始.........getURI...getPath==="+getPath);

HttpMethod httpMethod = exchange.getRequest().getMethod();

logger.info("PrintFilter开始............HttpMethod==="+httpMethod.toString());

String methodValue= exchange.getRequest().getMethodValue();

logger.info("PrintFilter开始............getMethodValue==="+methodValue);

RequestPath getPath2 =exchange.getRequest().getPath();

logger.info("PrintFilter开始............getPath2==="+getPath2.toString());

InetSocketAddress inetSocketAddress =exchange.getRequest().getRemoteAddress();

logger.info("PrintFilter开始............getRemoteAddress==="+inetSocketAddress.toString());

String getHostString = exchange.getRequest().getRemoteAddress().getHostString();

logger.info("PrintFilter开始............getRemoteAddress-getHostString==="+getHostString);

String getHostName = exchange.getRequest().getRemoteAddress().getHostName();

logger.info("PrintFilter开始............getRemoteAddress-getHostName==="+getHostName);

}

logger.info("PrintFilter开始...........打印header所有内容。。。.");

getAllHeadersRequest(exchange.getRequest());

logger.info("");

String channel = exchange.getRequest().getHeaders().getFirst("channel");

String IPAddress = exchange.getRequest().getHeaders().getFirst("IP-Address"); // read back the header set by HeaderDealFilter

logger.info("PrintFilter header values...........channel=="+channel+",IP-Address=="+IPAddress);

// nothing blocked the request, so let it through

return chain.filter(exchange);

}

    private Map<String, String> getAllParamtersRequest(ServerHttpRequest request) {
        logger.info("PrintFilter getAllParamtersRequest start............");
        Map<String, String> map = new HashMap<>();
        MultiValueMap<String, String> paramNames = request.getQueryParams();
        Iterator<String> it = paramNames.keySet().iterator();
        while (it.hasNext()) {
            String paramName = it.next();
            List<String> paramValues = paramNames.get(paramName);
            if (paramValues.size() >= 1) {
                String paramValue = paramValues.get(0);
                logger.info("request parameter, first value: " + paramName + ", value: " + paramValue);
                map.put(paramName, paramValue);
                for (int i = 0; i < paramValues.size(); i++) {
                    String paramValueTmp = paramValues.get(i);
                    if (i >= 1) {
                        logger.info("request parameter, size==" + paramValues.size() + "...key==" + paramName + ", value: " + paramValueTmp);
                    }
                }
            }
        }
        return map;
    }

    private Map<String, String> getAllHeadersRequest(ServerHttpRequest request) {
        logger.info("PrintFilter getAllHeadersRequest start............");
        Map<String, String> map = new HashMap<>();
        HttpHeaders hearders = request.getHeaders();
        Iterator<String> it = hearders.keySet().iterator();
        while (it.hasNext()) {
            String keyName = it.next();
            List<String> headValues = hearders.get(keyName);
            if (headValues.size() >= 1) {
                String kvalue = headValues.get(0);
                logger.info("request header, first value, key: " + keyName + ", value: " + kvalue);
                map.put(keyName, kvalue);
                for (int i = 0; i < headValues.size(); i++) {
                    String kvalueTmp = headValues.get(i);
                    if (i >= 1) {
                        logger.info("request header size==" + headValues.size() + "...key: " + keyName + ", value: " + kvalueTmp);
                    }
                }
            }
        }
        return map;
    }

/**

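* An earlier filter read the body of the POST request and stored it in Attributes:
* exchange.getAttributes().put("PostBodyData", bodyStr);
* so it can simply be fetched here.
*
*
* Getting the parameters of a POST request is more awkward, because gateway wraps the request body the webflux way.
* The two common content-types for POST parameters are application/x-www-form-urlencoded and application/json, and the two behave differently.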

*

*

* @param exchange

* @return

*/

private Object getPostBodyData(ServerWebExchange exchange) {

logger.info("PrintFilter getPostBodyData开始............");

Object res=exchange.getAttributes().get("PostBodyData");

if(res!=null) {

logger.info("getPostBodyData获取前面filter放入Attributes中的数据为========\r\n"+res.toString());

}else {

logger.info("getPostBodyData获取前面filter放入Attributes中的数据为null");

}

return res;

}

}

The other GlobalFilter solves the problem of reading the POST request body; it has nothing to do with rate limiting either.

package com.example.gate.filter;

import java.io.IOException;

import java.io.InputStream;

import java.io.UnsupportedEncodingException;

import java.net.InetSocketAddress;

import java.net.URI;

import java.nio.CharBuffer;

import java.nio.charset.Charset;

import java.nio.charset.StandardCharsets;

import java.util.Arrays;

import java.util.HashMap;

import java.util.Map;

import java.util.Objects;

import java.util.concurrent.atomic.AtomicReference;

import java.util.stream.Collectors;

import org.apache.commons.io.IOUtils;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.cloud.gateway.filter.GatewayFilterChain;

import org.springframework.cloud.gateway.filter.GlobalFilter;

import org.springframework.cloud.gateway.support.BodyInserterContext;

import org.springframework.cloud.gateway.support.CachedBodyOutputMessage;

import org.springframework.cloud.gateway.support.DefaultServerRequest;

import org.springframework.core.Ordered;

import org.springframework.core.io.buffer.DataBuffer;

import org.springframework.core.io.buffer.DataBufferUtils;

import org.springframework.core.io.buffer.NettyDataBufferFactory;

import org.springframework.http.HttpHeaders;

import org.springframework.http.HttpMethod;

import org.springframework.http.MediaType;

import org.springframework.http.server.RequestPath;

import org.springframework.http.server.reactive.ServerHttpRequest;

import org.springframework.http.server.reactive.ServerHttpRequestDecorator;

import org.springframework.web.reactive.function.BodyInserter;

import org.springframework.web.reactive.function.BodyInserters;

import org.springframework.web.reactive.function.server.ServerRequest;

import org.springframework.web.server.ServerWebExchange;

import io.netty.buffer.ByteBufAllocator;

import io.netty.buffer.UnpooledByteBufAllocator;

import reactor.core.publisher.Flux;

import reactor.core.publisher.Mono;

/**

*

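* Ordered controls the order of the filters: the smaller the number, the higher the priority and the earlier the filter runs.
*
* GatewayFilter: configured under a specific route via spring.cloud.gateway.routes[*].filters, in which case it
* applies only to that route, or globally via spring.cloud.gateway.default-filters, in which case it applies to all routes.
* It can also be configured in code, through a RouteLocator that holds the route definitions.
*
* GlobalFilter:
* A global filter needs no entry in the configuration file and applies to all routes. It is wrapped by GatewayFilterAdapter into a filter that GatewayFilterChain can recognize.
* It is the core kind of filter that turns the request and the route URI into the address of the real backend service. It needs no configuration, is loaded at system startup, and applies to every route.
* In code, you declare a GlobalFilter object.
*
*
* Within one application, GatewayFilter and GlobalFilter are treated alike and are invoked in the order of their order values, so do not give two filters the same order!
*
*
* Getting the parameters of a POST request is more awkward, because springcloudgateway wraps the request body the webflux way.
* The two common content-types for POST parameters are application/x-www-form-urlencoded and application/json, and the two behave differently.
*
* The key to fixing a POST body that cannot be read, or is read incorrectly:
* ModifyRequestBodyGatewayFilterFactory
*
* The key to modifying the response body: ModifyResponseBodyGatewayFilterFactory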

*

*

* @author lsy

*

*/

public class PostBodyFilter implements GlobalFilter, Ordered {

Logger logger = LoggerFactory.getLogger(PostBodyFilter.class);

public static final String PostBodyData = "PostBodyData";

public static final String PostBodyByteData = "PostBodyByteData";

@Override

public int getOrder() {

return 0;

}

@Override

public Mono<Void> filter(ServerWebExchange exchange, GatewayFilterChain chain) {

logger.info("PostBodyFilter开始............");

// URI urii = exchange.getRequest().getURI();

// logger.info("PostBodyFilter开始...........getURI.URI==="+urii.toString());

//

// String getPath = exchange.getRequest().getURI().getPath();

// logger.info("PostBodyFilter开始.........getURI...getPath==="+getPath);

//

// HttpMethod httpMethod = exchange.getRequest().getMethod();

// logger.info("PostBodyFilter开始............HttpMethod==="+httpMethod.toString());

//

// String methodValue= exchange.getRequest().getMethodValue();

// logger.info("PostBodyFilter开始............getMethodValue==="+methodValue);

//

//

// RequestPath getPath2 =exchange.getRequest().getPath();

// logger.info("PostBodyFilter开始............getPath2==="+getPath2.toString());

//

// InetSocketAddress inetSocketAddress =exchange.getRequest().getRemoteAddress();

// logger.info("PostBodyFilter开始............getRemoteAddress==="+inetSocketAddress.toString());

//

// String getHostString = exchange.getRequest().getRemoteAddress().getHostString();

// logger.info("PostBodyFilter开始............getRemoteAddress-getHostString==="+getHostString);

//

// String getHostName = exchange.getRequest().getRemoteAddress().getHostName();

// logger.info("PostBodyFilter开始............getRemoteAddress-getHostName==="+getHostName);

ServerRequest serverRequest = new DefaultServerRequest(exchange);

// mediaType

MediaType mediaType = exchange.getRequest().getHeaders().getContentType();

if(mediaType!=null) {

logger.info("PostBodyFilter3.....getType==="+mediaType.getType());

}

// read & modify body

Mono<String> modifiedBody = serverRequest.bodyToMono(String.class).flatMap(body -> {

logger.info("PostBodyFilter3.....原始length==="+body.length()+",内容==="+body);

String method = exchange.getRequest().getMethodValue();

if ("POST".equals(method)) {

// if (MediaType.APPLICATION_FORM_URLENCODED.isCompatibleWith(mediaType)) {

//

// // origin body map

// Map bodyMap = decodeBody(body);

//

// //TODO

//

// // new body map

// Map newBodyMap = new HashMap<>();

// return Mono.just(encodeBody(newBodyMap));

// }

// intercept bodies whose content type is application/json;charset=UTF-8

if (MediaType.APPLICATION_JSON.isCompatibleWith(mediaType)

|| MediaType.APPLICATION_JSON_UTF8.isCompatibleWith(mediaType)) {

String newBody;

try {

newBody = body; // the request body could be modified here

} catch (Exception e) {

return processError(e.getMessage());

}

logger.info("PostBodyFilter3.....newBody长度==="+newBody.length()+",newBody内容====\r\n"+newBody);

exchange.getAttributes().put(PostBodyData, newBody); // stored in exchange.getAttributes() so later filters can read it directly

return Mono.just(newBody);

}

}

logger.info("PostBodyFilter3.....empty or just haha===");

// return Mono.empty();

return Mono.just(body);

});

BodyInserter bodyInserter = BodyInserters.fromPublisher(modifiedBody, String.class);

HttpHeaders headers = new HttpHeaders();

headers.putAll(exchange.getRequest().getHeaders());

// the new content type will be computed by bodyInserter

// and then set in the request decorator

headers.remove(HttpHeaders.CONTENT_LENGTH);

CachedBodyOutputMessage outputMessage = new CachedBodyOutputMessage(exchange, headers);

return bodyInserter.insert(outputMessage, new BodyInserterContext()).then(Mono.defer(() -> {

ServerHttpRequestDecorator decorator = new ServerHttpRequestDecorator(exchange.getRequest()) {

public HttpHeaders getHeaders() {

long contentLength = headers.getContentLength();

HttpHeaders httpHeaders = new HttpHeaders();

httpHeaders.putAll(super.getHeaders());

if (contentLength > 0) {

httpHeaders.setContentLength(contentLength);

} else {

httpHeaders.set(HttpHeaders.TRANSFER_ENCODING, "chunked");

}

return httpHeaders;

}

public Flux<DataBuffer> getBody() {

return outputMessage.getBody();

}

};

return chain.filter(exchange.mutate().request(decorator).build());

}));

}

private Map<String, String> decodeBody(String body) {

return Arrays.stream(body.split("&")).map(s -> s.split("="))

.collect(Collectors.toMap(arr -> arr[0], arr -> arr[1]));

}

private String encodeBody(Map<String, String> map) {

return map.entrySet().stream().map(e -> e.getKey() + "=" + e.getValue()).collect(Collectors.joining("&"));

}

private Mono processError(String message) {

/*

* exchange.getResponse().setStatusCode(HttpStatus.UNAUTHORIZED); return

* exchange.getResponse().setComplete();

*/

logger.error(message);

return Mono.error(new Exception(message));

}

}

bootstrap.yml:

# Rate limit parameter format: systemName_limitKey: replenishRate@burstCapacity. A separate switch parameter was also added to make config changes easier.
selfratelimiter:
  rateLimitChannel:
    default: 5@10
    one_channelA: 2@3
    one_channelB: 1@2
    two_channelA: 1@2
    two_channelB: 2@4
  rateLimitIP:
    default: 55@70
    one_192.168.124.17: 2@3
    two_192.168.124.18: 1@2
    two_192.168.124.19: 5@10
  rateLimitUrlAndChannel:
    default: 66@80
    url_1: /one/limit/sprint
    channel_url_1: channelA
    limit_url_1: 1@2
    url_2: /one/limit/sprint2
    channel_url_2: channelA
    limit_url_2: 2@3
    url_3: /one/limit/sprint
    channel_url_3: channelB
    limit_url_3: 3@4

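A note here: the structure of rateLimitUrlAndChannel differs from the ones above.

Because I limit by url and channel, the url and the channel have to be combined into one key.

But I found that slashes cause problems in map keys, so I split the url and the channel apart. Limiting one url together with one channel therefore takes

3 lines of configuration:

url_1: /one/limit/sprint # request url

channel_url_1: channelA # request channel

limit_url_1: 1@2 # replenish rate@token bucket size

This structure is convenient to write in the yml file but inconvenient for the program to read.

The rate-limit key passed in by the code always has the form url plus channel,

and a lookup is only fast if the map is keyed by that form; otherwise you have to iterate over the map, which is inefficient.

So the goal is to get this structure into the map:

key : value

url plus channel : replenish rate@token bucket size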

我必须在程序读取yml配置文件后对这个原始map进行数据结构重构。代码:

RateLimiterConfig

package com.example.gate.limit.self;

import java.util.Iterator;

import java.util.Map;

import java.util.concurrent.ConcurrentHashMap;

import javax.annotation.PostConstruct;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.stereotype.Component;

/**

* Properties class that reads the yml configuration

*

* @author lsy

*

*/

@Component

@ConfigurationProperties(prefix = "selfratelimiter")

public class RateLimiterConfig {

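public static final String SPILT_KEY="_";// separator inside the rate-limit key

public static final String SPILT_SPEED="@";// separator between the two limit parameters

// value format: replenish rate@bucket capacity

// custom rate limiting by business channel

private Map<String, String> rateLimitChannel = new ConcurrentHashMap<>();

// custom rate limiting by IP (unlike the single global setting, each IP can have its own limit parameters)

private Map<String, String> rateLimitIP = new ConcurrentHashMap<>();

// custom rate limiting by url together with its business channel

private Map<String, String> rateLimitUrlAndChannel = new ConcurrentHashMap<>();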

public Map<String, String> getRateLimitChannel() {

return rateLimitChannel;

}

public void setRateLimitChannel(Map<String, String> rateLimitChannel) {

this.rateLimitChannel = rateLimitChannel;

}

public Map<String, String> getRateLimitIP() {

return rateLimitIP;

}

public void setRateLimitIP(Map<String, String> rateLimitIP) {

this.rateLimitIP = rateLimitIP;

}

public Map<String, String> getRateLimitUrlAndChannel() {

return rateLimitUrlAndChannel;

}

public void setRateLimitUrlAndChannel(Map<String, String> rateLimitUrlAndChannel) {

this.rateLimitUrlAndChannel = rateLimitUrlAndChannel;

}

@Override

public String toString() {

return "RateLimiterConfig [rateLimitChannel=" + rateLimitChannel + ", rateLimitIP=" + rateLimitIP

+ ", rateLimitUrlAndChannel=" + rateLimitUrlAndChannel + "]";

}

/**

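* The data layout in the yml is not the layout our code needs, so the map is restructured after the bean has been initialized.

*

Data as defined in the yml:

rateLimitUrlAndChannel:

default: 66@80

url_1: /one/limit/sprint

channel_url_1: channelA

limit_url_1: 1@2

The structure we actually need in the map:

default: 66@80

_one_limit_sprint@channelA : 1@2

Slashes in the url cause problems in the map, so they are replaced with _.

The rate-limit key therefore has the form: url with / replaced by _, then @, then the channel.

The corresponding limit parameters are still separated by @.

Since we defined the rate-limit key as _one_limit_sprint@channelA=1@2,

the KeyResolver has to build its key in the same form.

Before the KeyResolver runs, a filter also has to store the data in this form.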

*/

@PostConstruct

public void init() {

Map<String, String> resMap = new ConcurrentHashMap<>();

Map<String, String> rawMap = this.getRateLimitUrlAndChannel();

if (rawMap != null) {

for (Map.Entry<String, String> entry : rawMap.entrySet()) {

String key = entry.getKey();

String value = entry.getValue();

if (key.trim().equals("default")) {

resMap.put(key, value);// default : 66@80

} else if (key.trim().startsWith("url_")) {// a specific url-plus-channel limit, e.g. url_1

String channelValue = rawMap.get("channel_" + key);// channel_url_1 -> channelA

String limitValue = rawMap.get("limit_" + key);// limit_url_1 -> 1@2

if (channelValue == null || limitValue == null) {

continue;// incomplete entry; ConcurrentHashMap does not accept null values

}

String urlKey = value.replace("/", "_");// /one/limit/sprint becomes _one_limit_sprint

resMap.put(urlKey + "@" + channelValue, limitValue);// _one_limit_sprint@channelA : 1@2

}

}// end for

rawMap.clear();

rawMap.putAll(resMap);

}

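System.out.println("Initialized rateLimitUrlAndChannel data structure: " + this.toString());

}

}

This is done with the @PostConstruct annotation, so the map ends up with our restructured layout rather than the layout written in the yml.

After the system started, I printed the contents of this configuration class:

Initialized rateLimitUrlAndChannel data structure: RateLimiterConfig [rateLimitChannel={default=5@10, one_channelB=1@2,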

one_channelA=2@3, two_channelB=2@4, two_channelA=1@2}, rateLimitIP={default=55@70, two_192.168.124.19=5@10,

one_192.168.124.17=2@3, two_192.168.124.18=1@2}, rateLimitUrlAndChannel={_one_limit_sprint2@channelA=2@3,

default=66@80, _one_limit_sprint@channelA=1@2, _one_limit_sprint@channelB=3@4}]

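As you can see, the keys and values of the rateLimitUrlAndChannel map now look like this:

_one_limit_sprint2@channelA 2@3

This layout matches the per-channel limit configuration above.

The slashes in the url have been replaced with underscores.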
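The lookup side of this restructured map can be sketched as follows. The class UrlChannelKey and its methods are hypothetical names used only for illustration, and falling back to the "default" entry is an assumption of the sketch. The key is built with the same rule as in init(): slashes become underscores, followed by @ and the channel.

```java
import java.util.Map;

// Hypothetical helper for illustration; not part of the original project.
final class UrlChannelKey {

    private UrlChannelKey() {
    }

    // "/one/limit/sprint" + "channelA" -> "_one_limit_sprint@channelA"
    static String of(String path, String channel) {
        return path.replace("/", "_") + "@" + channel;
    }

    // Single map lookup; assumption: unknown combinations fall back to "default".
    static String limitFor(Map<String, String> limits, String path, String channel) {
        String limit = limits.get(of(path, channel));
        return limit != null ? limit : limits.get("default");
    }
}
```

One caveat of this key scheme: two different urls such as /one/limit and /one_limit collapse into the same key, so it only works when the configured urls do not differ merely by / versus _.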

Rate-limit keys: LimitConfig

package com.example.gate.config;

import java.util.HashMap;

import java.util.List;

import java.util.Map;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.cloud.gateway.filter.ratelimit.KeyResolver;

import org.springframework.cloud.gateway.filter.ratelimit.RedisRateLimiter;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.context.annotation.Primary;

import org.springframework.data.redis.core.ReactiveRedisTemplate;

import org.springframework.data.redis.core.script.RedisScript;

import org.springframework.validation.Validator;

import com.example.gate.limit.self.ChannelRedisRateLimiter;

import com.example.gate.limit.self.IPRedisRateLimiter;

import com.example.gate.limit.self.UrlAndChannelRedisRateLimiter;

import reactor.core.publisher.Mono;

/**

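* Rate-limiting configuration

*

*

*RequestRateLimiter, i.e.

*RequestRateLimiterGatewayFilterFactory

*Key class: RedisRateLimiter

*

*It has to be configured with

*KeyResolver (the rate-limit key, i.e. the dimension to limit by)

*RateLimiter (the two limit parameters: (replenishRate=50,burstCapacity=100) )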

*

*

*org.springframework.cloud.gateway.config.GatewayAutoConfiguration

*HTTP 429 - Too Many Requests

*

* @author lsy

*

*/

@Configuration

public class LimitConfig {

Logger logger = LoggerFactory.getLogger(LimitConfig.class);

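//--------- custom rate limiting by channel

@Bean

public KeyResolver selfChannelKeyResolver() {// limit dimension: the "channel" header, set by an earlier filter

// Note that the KeyResolver must not return a null value; if it does, the request does not go through. Hence the null check, which guarantees that a string is returned.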

return exchange -> Mono.just(exchange.getRequest().getHeaders().getFirst("channel")==null?"default":exchange.getRequest().getHeaders().getFirst("channel"));

}

@Bean

// @Primary

ChannelRedisRateLimiter channelRedisRateLimiter(ReactiveRedisTemplate<String, String> redisTemplate,

@Qualifier(ChannelRedisRateLimiter.REDIS_SCRIPT_NAME) RedisScript<List<Long>> script, Validator validator) {

return new ChannelRedisRateLimiter(redisTemplate, script, validator);// use our own rate limiter class

}

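//----------- custom rate limiting by IP

@Bean

public KeyResolver selfIPKeyResolver() {// limit dimension: the "IP-Address" header, set by an earlier filter

// Note that the KeyResolver must not return a null value; if it does, the request does not go through. Hence the null check, which guarantees that a string is returned.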

return exchange -> Mono.just(exchange.getRequest().getHeaders().getFirst("IP-Address")==null?"default":exchange.getRequest().getHeaders().getFirst("IP-Address"));

}

@Bean(name="iPRedisRateLimiter")

// use our own rate limiter class

IPRedisRateLimiter iPRedisRateLimiter(ReactiveRedisTemplate<String, String> redisTemplate,

@Qualifier(IPRedisRateLimiter.REDIS_SCRIPT_NAME) RedisScript<List<Long>> script, Validator validator) {

return new IPRedisRateLimiter(redisTemplate, script, validator);

}

//--------- slightly more complex: custom rate limiting by url plus channel

@Bean

public KeyResolver selfUrlAndChannelKeyResolver() {// limit dimension: the "urlandchannel" header, set by an earlier filter