Example: monitoring logs with Flume, cleaning the log data with a regular expression, and collecting it into HBase in real time

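Today I learned the basic usage of Flume, and along the way thought about how to clean standard-format log data in real time and store it centrally.

This post shows how to use a regular expression to clean data in real time and store it in HBase. The simpler case, which stores each event without splitting it into columns, comes first; for that one I just paste the configuration.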

1. Using Flume's HBase sink with a simple serializer (AsyncHBaseSink with SimpleAsyncHbaseEventSerializer)

The configuration is as follows:

###define agent

a5_hbase.sources = r5

a5_hbase.channels = c5

a5_hbase.sinks = k5

#define sources

a5_hbase.sources.r5.type = exec

a5_hbase.sources.r5.command = tail -f /opt/module/cdh/hive-0.13.1-cdh5.3.6/logs/hive.log

a5_hbase.sources.r5.checkperiodic = 50

#define channels

a5_hbase.channels.c5.type = file

a5_hbase.channels.c5.checkpointDir = /opt/module/cdh/flume-1.5.0-cdh5.3.6/flume_file/checkpoint

a5_hbase.channels.c5.dataDirs = /opt/module/cdh/flume-1.5.0-cdh5.3.6/flume_file/data

#define sinks

a5_hbase.sinks.k5.type = org.apache.flume.sink.hbase.AsyncHBaseSink

a5_hbase.sinks.k5.table = flume_table5

a5_hbase.sinks.k5.columnFamily = hivelog_info

a5_hbase.sinks.k5.serializer = org.apache.flume.sink.hbase.SimpleAsyncHbaseEventSerializer

a5_hbase.sinks.k5.serializer.payloadColumn = hiveinfo

#bind

a5_hbase.sources.r5.channels = c5

a5_hbase.sinks.k5.channel = c5

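With this agent, each new line appended to hive.log is stored in the hiveinfo column. To see exactly what is stored, and in what format the value is written, run a few HQL statements and then inspect the table.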

2. Parsing log data with RegexHbaseEventSerializer

The log format is shown below; two entries are used for the experiment.

"27.38.5.159" "-" "31/Aug/2015:00:04:37 +0800" "GET /course/view.php?id=27 HTTP/1.1" "303" "440" - "http://www.ibeifeng.com/user.php?act=mycourse" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "-" "learn.ibeifeng.com"

"27.38.5.159" "-" "31/Aug/2015:00:04:37 +0800" "GET /login/index.php HTTP/1.1" "303" "465" - "http://www.ibeifeng.com/user.php?act=mycourse" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "-" "learn.ibeifeng.com"

For this log format, the agent configuration, including the regular expression, is as follows:

###define agent

a6_hive.sources = r6

a6_hive.channels = c6

a6_hive.sinks = k6

#define sources

a6_hive.sources.r6.type = exec

a6_hive.sources.r6.command = tail -f /opt/module/cdh/hive-0.13.1-cdh5.3.6/logs/hive.log

a6_hive.sources.r6.checkperiodic = 50

#define channels

a6_hive.channels.c6.type = file

a6_hive.channels.c6.checkpointDir = /opt/module/cdh/flume-1.5.0-cdh5.3.6/flume_file/checkpoint

a6_hive.channels.c6.dataDirs = /opt/module/cdh/flume-1.5.0-cdh5.3.6/flume_file/data

#define sinks

a6_hive.sinks.k6.type = org.apache.flume.sink.hbase.HBaseSink

#a6_hive.sinks.k6.type = hbase

a6_hive.sinks.k6.table = flume_table_regx2

a6_hive.sinks.k6.columnFamily = log_info

a6_hive.sinks.k6.serializer = org.apache.flume.sink.hbase.RegexHbaseEventSerializer

a6_hive.sinks.k6.serializer.regex = \\"(.*?)\\"\\ \\"(.*?)\\"\\ \\"(.*?)\\"\\ \\"(.*?)\\"\\ \\"(.*?)\\"\\ \\"(.*?)\\"\\ (.*?)\\ \\"(.*?)\\"\\ \\"(.*?)\\"\\ \\"(.*?)\\"\\ \\"(.*?)\\"

a6_hive.sinks.k6.serializer.colNames = ip,x1,date_now,web,statu1,statu2,user,web2,type,user2,web3

#bind

a6_hive.sources.r6.channels = c6

a6_hive.sinks.k6.channel = c6

First start this Flume agent and keep its console output open for monitoring:

bin/flume-ng agent --name a6_hive --conf conf --conf-file conf/a6_hive.conf -Dflume.root.logger=DEBUG,console

Then append a line to hive.log:

echo '"27.38.5.159" "-" "31/Aug/2015:00:04:37 +0800" "GET /login/index.php HTTP/1.1" "303" "465" - "http://www.ibeifeng.com/user.php?act=mycourse" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36" "-" "learn.ibeifeng.com"' >> /opt/module/cdh/hive-0.13.1-cdh5.3.6/logs/hive.log

The monitoring console now shows the data being parsed:

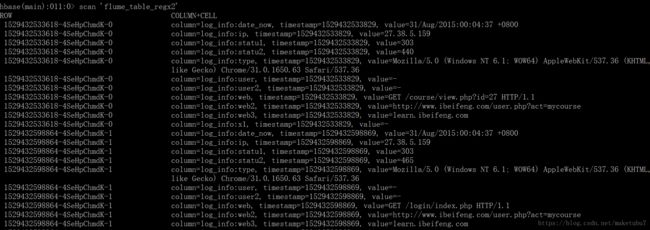
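To see how the regex splits a line into columns without running the agent, the matching step can be reproduced offline. The following is a minimal Java sketch, not Flume's own code: the class name `RegexColumnCheck` and the `parse` helper are hypothetical, and the pattern is the `serializer.regex` value written as I assume it looks once the properties file has collapsed each double backslash. It requires the whole line to match and then pairs capture group i with the i-th name from `serializer.colNames`.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for checking the regex offline; not part of Flume.
class RegexColumnCheck {
    // Column names copied from serializer.colNames in the agent configuration.
    static final String[] COL_NAMES =
        "ip,x1,date_now,web,statu1,statu2,user,web2,type,user2,web3".split(",");

    // Assumed equivalent of serializer.regex after properties-file unescaping:
    // six quoted fields, one bare field, then four quoted fields, single-space separated.
    static final Pattern LINE = Pattern.compile(
        "\"(.*?)\" \"(.*?)\" \"(.*?)\" \"(.*?)\" \"(.*?)\" \"(.*?)\" (.*?) "
        + "\"(.*?)\" \"(.*?)\" \"(.*?)\" \"(.*?)\"");

    // Returns column name -> value, or an empty map when the whole line does not match.
    static Map<String, String> parse(String line) {
        Map<String, String> cols = new LinkedHashMap<>();
        Matcher m = LINE.matcher(line);
        if (m.matches() && m.groupCount() == COL_NAMES.length) {
            for (int i = 0; i < COL_NAMES.length; i++) {
                cols.put(COL_NAMES[i], m.group(i + 1)); // groups are 1-based
            }
        }
        return cols;
    }

    public static void main(String[] args) {
        // The second sample line from the post.
        String sample = "\"27.38.5.159\" \"-\" \"31/Aug/2015:00:04:37 +0800\" "
            + "\"GET /login/index.php HTTP/1.1\" \"303\" \"465\" - "
            + "\"http://www.ibeifeng.com/user.php?act=mycourse\" "
            + "\"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 "
            + "(KHTML, like Gecko) Chrome/31.0.1650.63 Safari/537.36\" "
            + "\"-\" \"learn.ibeifeng.com\"";
        parse(sample).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

In this sketch a line that does not match the pattern in full yields an empty map. Ordinary Hive log lines in the tailed file would fall into that case, so it is worth checking how the serializer in your Flume version treats non-matching events.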

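Next, check the data in the HBase table. The stored data matches what we wanted, with each field in its own column. From here, applying functions to these columns gives the final results. That analysis is of course done in Hive; for reference, see: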

https://blog.csdn.net/maketubu7/article/details/80513072

That's all.