A web crawler built with Selenium

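Following a rule template that you design, the crawler performs the scraping actions and returns the results as a JSON object shaped by the configuration file in use. The complete code has been uploaded to https://git.oschina.net/newkdd/Crawler; improvements are welcome.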

Because browser support differs between Selenium versions, the example was built and run in this environment:

- Selenium 2.53.1

- Firefox 47.0.2 (64-bit)

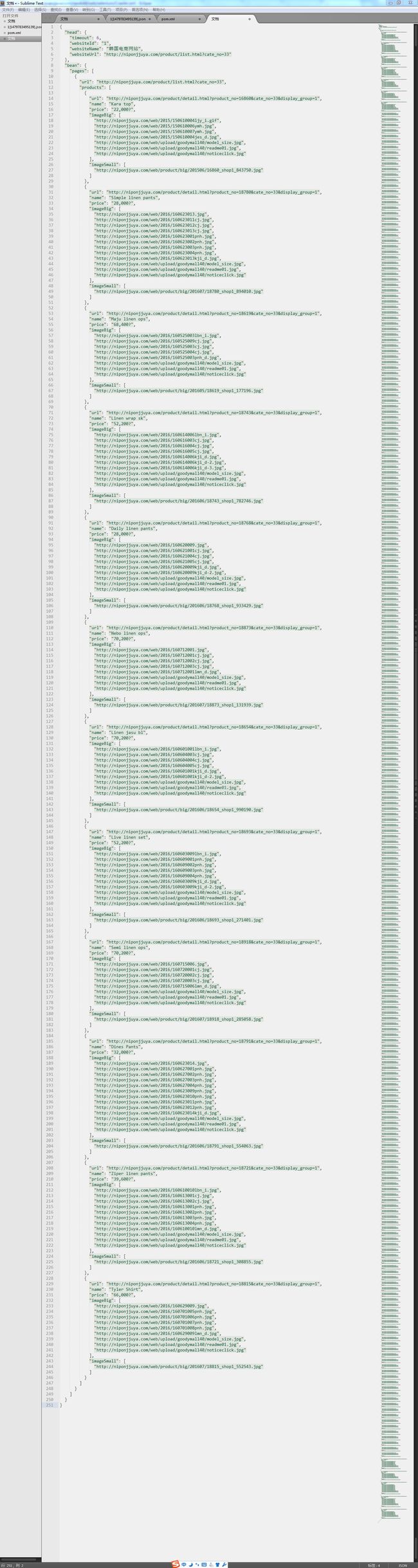

Crawl results

Step 1: configure the crawl rules

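The rules are stored in Crawler.xml. Its head section sets the site ID to 1, the site name to 韩国电商网站 (a Korean e-commerce site), the start URL to http://niponjjuya.com/product/list.html?cate_no=33 and the page-load timeout to 6 seconds. Its content section defines the rule nodes to crawl; their values are read from location, location, text, text, src and src.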
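The layout of Crawler.xml can be inferred from the parsing code in the following steps: CrawlerHead reads id, name, url and timeout from a head element, and every element under content becomes a CrawlerNode whose tag name is the output property, whose type, xpath, method and distinct attributes control how it is crawled, and whose text says where the value comes from (location, text, or an attribute such as src). The sketch below is a hypothetical rule file in that shape (the root tag crawler, the rule names and the XPaths are assumptions, not the author's file), read with the JDK's built-in DOM parser instead of dom4j so that it runs without extra jars. It only lists the rules; it does not crawl anything.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

public class RuleFileSketch {

    // Hypothetical rule file: the root tag, rule names and XPaths are made up for illustration.
    static final String SAMPLE =
          "<crawler>"
        + "<head><id>1</id><url>http://niponjjuya.com/product/list.html?cate_no=33</url>"
        + "<timeout>6</timeout></head>"
        + "<content>"
        + "<page>location</page>"
        + "<products type=\"list\" xpath=\"//ul/li/a\" method=\"redirect\" distinct=\"href\">"
        + "<url>location</url>"
        + "<title xpath=\"//h2\">text</title>"
        + "<images type=\"list\" xpath=\"//img\">src</images>"
        + "</products>"
        + "</content>"
        + "</crawler>";

    /** Describes every rule under parent as "path type=... xpath=... value=...", depth first. */
    static void describe(Element parent, String path, List<String> out) {
        NodeList children = parent.getChildNodes();
        for (int i = 0; i < children.getLength(); i++) {
            Node node = children.item(i);
            if (node.getNodeType() != Node.ELEMENT_NODE) {
                continue; // skip text and whitespace nodes
            }
            Element rule = (Element) node;
            String name = path + "/" + rule.getTagName();
            // getAttribute returns "" for a missing attribute, matching CrawlerNode's "" defaults
            out.add(name + " type=" + rule.getAttribute("type")
                    + " xpath=" + rule.getAttribute("xpath")
                    + " value=" + ownText(rule));
            describe(rule, name, out);
        }
    }

    /** Text directly inside the element, ignoring child elements, trimmed. */
    static String ownText(Element element) {
        StringBuilder sb = new StringBuilder();
        NodeList children = element.getChildNodes();
        for (int i = 0; i < children.getLength(); i++) {
            if (children.item(i).getNodeType() == Node.TEXT_NODE) {
                sb.append(children.item(i).getNodeValue());
            }
        }
        return sb.toString().trim();
    }

    /** Parses SAMPLE and returns one description line per rule under content. */
    static List<String> describeSample() {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(SAMPLE.getBytes(StandardCharsets.UTF_8)));
            Element content = (Element) doc.getDocumentElement()
                    .getElementsByTagName("content").item(0);
            List<String> out = new ArrayList<String>();
            describe(content, "", out);
            return out;
        } catch (Exception e) {
            throw new IllegalStateException("cannot parse the sample rules", e);
        }
    }

    public static void main(String[] args) {
        for (String line : describeSample()) {
            System.out.println(line);
        }
    }
}
```

Running main prints one line per rule, for example /products/title type= xpath=//h2 value=text.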

Step 2: define the configuration classes

package com.newkdd.web.selenium;

import java.util.ArrayList;
import java.util.List;

/**
 * Shared constants for the web crawler.
 *
 * @author Mike
 */
public class CrawlerConfig {

    /** Default page-load timeout, in seconds */
    public final static Integer TIME_OUT_DEFAULT = 10;

    /** Firefox installation path (passed to webdriver.firefox.bin) */
    public final static String WEB_DRIVER_PATH = "C:/Program Files/Mozilla Firefox/firefox.exe";

    /** URL from which jQuery is injected */
    public final static String JQUERY_PATH = "http://code.jquery.com/jquery-1.8.0.min.js";

    /** Node types */
    public static enum NODE_TYPE {
        LIST, STRING
    }

    /** Where a node's value is read from */
    public static enum NODE_VALUE_FROM {
        HREF, SRC, TEXT, LOCATION, VALUE
    }

    /** Ignored tags (upper case); they are not added to the returned JSON */
    public static List<String> NODE_IGNORE = new ArrayList<String>();

    static {
        NODE_IGNORE.add("SCRIPT");
    }
}
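The crawler compares configuration strings with these enums and with NODE_IGNORE by upper-casing them, for example NODE_VALUE_FROM.TEXT.toString().equals(value.toUpperCase()). A minimal sketch of the same lookup gathered in one place, assuming you want it centralized (RuleLookupSketch and its methods are hypothetical names; the enum copies NODE_VALUE_FROM). It uses toUpperCase(Locale.ROOT) because the no-argument toUpperCase() follows the default locale: under a Turkish locale "script" becomes "SCRİPT" (dotted capital I) and would no longer match the ignored tag SCRIPT.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

public class RuleLookupSketch {

    /** Copy of CrawlerConfig.NODE_VALUE_FROM for this sketch. */
    enum ValueFrom { HREF, SRC, TEXT, LOCATION, VALUE }

    /** Copy of CrawlerConfig.NODE_IGNORE: upper-case names of tags that are never parsed. */
    static final Set<String> IGNORED =
            Collections.unmodifiableSet(new HashSet<String>(Arrays.asList("SCRIPT")));

    /**
     * Returns the constant matching a configured value such as "text" or " Src ",
     * or null when the value is blank or is some other attribute name (e.g. "data-src"),
     * which the crawler would read with getAttribute instead.
     */
    static ValueFrom valueFrom(String configured) {
        if (configured == null || configured.trim().isEmpty()) {
            return null;
        }
        String key = configured.trim().toUpperCase(Locale.ROOT);
        for (ValueFrom candidate : ValueFrom.values()) {
            if (candidate.name().equals(key)) {
                return candidate;
            }
        }
        return null;
    }

    /** Locale-independent check against the ignore list. */
    static boolean isIgnored(String tagName) {
        return IGNORED.contains(tagName.toUpperCase(Locale.ROOT));
    }

    public static void main(String[] args) {
        System.out.println(valueFrom("text"));     // TEXT
        System.out.println(valueFrom("data-src")); // null
        System.out.println(isIgnored("script"));   // true
    }
}
```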

package com.newkdd.web.selenium;

import org.apache.commons.lang3.StringUtils;
import org.dom4j.Element;

/**
 * Information about the site being crawled.
 *
 * @author Mike
 */
public class CrawlerHead {

    /** Site ID */
    private String websiteId;

    /** Site URL */
    private String websiteUrl;

    /** Site name */
    private String websiteName;

    /** Page-load timeout, in seconds */
    private Integer timeout;

    public String getWebsiteId() {
        return websiteId;
    }

    public void setWebsiteId(String websiteId) {
        this.websiteId = websiteId;
    }

    public String getWebsiteUrl() {
        return websiteUrl;
    }

    public void setWebsiteUrl(String websiteUrl) {
        this.websiteUrl = websiteUrl;
    }

    public String getWebsiteName() {
        return websiteName;
    }

    public void setWebsiteName(String websiteName) {
        this.websiteName = websiteName;
    }

    public Integer getTimeout() {
        return timeout;
    }

    public void setTimeout(Integer timeout) {
        this.timeout = timeout;
    }

    /**
     * Parses the head section of the rules. If no valid timeout is configured,
     * the default timeout is used.
     *
     * @param element root element of the rule file
     * @return the site information
     */
    public static CrawlerHead parse(Element element) {
        CrawlerHead headNode = new CrawlerHead();
        Element head = element.element("head");
        // Site ID
        String websiteId = head.element("id").getTextTrim();
        // Site name
        String websiteName = head.element("name").getTextTrim();
        // Site URL
        String websiteUrl = head.element("url").getTextTrim();
        // Start from the default timeout and override it only with a valid number
        Integer timeout = CrawlerConfig.TIME_OUT_DEFAULT;
        Element timeoutElement = head.element("timeout");
        if (timeoutElement != null && StringUtils.isNotBlank(timeoutElement.getText())) {
            try {
                timeout = Integer.valueOf(timeoutElement.getTextTrim());
            } catch (NumberFormatException e) {
                // Not a number: keep the default timeout
            }
        }
        headNode.setWebsiteId(websiteId);
        headNode.setWebsiteName(websiteName);
        headNode.setWebsiteUrl(websiteUrl);
        headNode.setTimeout(timeout);
        return headNode;
    }
}
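CrawlerHead.parse falls back to CrawlerConfig.TIME_OUT_DEFAULT whenever the timeout element is missing, blank or not a number. The same rule pulled out into a plain function, as a sketch (parseTimeout is a hypothetical helper name). It trims the text first: Integer.valueOf and Integer.parseInt reject surrounding whitespace, so a timeout written on its own indented line in the XML would otherwise fall back to the default without any warning.

```java
public class TimeoutSketch {

    /** Default page-load timeout in seconds, as in CrawlerConfig.TIME_OUT_DEFAULT. */
    static final int TIME_OUT_DEFAULT = 10;

    /**
     * Returns the configured timeout, or the default when the text is missing,
     * blank or not an integer. Trims first, because Integer.parseInt(" 6")
     * throws NumberFormatException.
     */
    static int parseTimeout(String raw) {
        if (raw == null || raw.trim().isEmpty()) {
            return TIME_OUT_DEFAULT;
        }
        try {
            return Integer.parseInt(raw.trim());
        } catch (NumberFormatException e) {
            return TIME_OUT_DEFAULT;
        }
    }

    public static void main(String[] args) {
        System.out.println(parseTimeout("6"));    // 6
        System.out.println(parseTimeout(" 6\n")); // 6
        System.out.println(parseTimeout(null));   // 10
        System.out.println(parseTimeout("abc"));  // 10
    }
}
```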

package com.newkdd.web.selenium;

import org.dom4j.Element;

/**
 * Rule configuration for a single crawler node.
 *
 * @author Mike
 */
public class CrawlerNode {

    /** Value type [list: array; empty: single string] */
    private String type;

    /** XPath used to locate the element */
    private String xpath;

    /** Property name in the output, e.g. for [sex: male] the name is sex */
    private String name;

    /** Where the value comes from [src, href, text, value, location] */
    private String value;

    /** Action associated with the element [redirect] */
    private String method;

    /** Script to execute (e.g. for paging) */
    private String script;

    /** Attribute used to de-duplicate elements */
    private String distinct;

    /** The XML element this rule was parsed from */
    private Element element;

    public String getType() {
        return type;
    }

    public void setType(String type) {
        this.type = type;
    }

    public String getXpath() {
        return xpath;
    }

    public void setXpath(String xpath) {
        this.xpath = xpath;
    }

    public String getName() {
        return name;
    }

    public void setName(String name) {
        this.name = name;
    }

    public String getValue() {
        return value;
    }

    public void setValue(String value) {
        this.value = value;
    }

    public String getMethod() {
        return method;
    }

    public void setMethod(String method) {
        this.method = method;
    }

    public String getDistinct() {
        return distinct;
    }

    public void setDistinct(String distinct) {
        this.distinct = distinct;
    }

    /**
     * Builds a rule from its XML element; missing attributes become "".
     *
     * @param element rule element
     * @return the parsed rule
     */
    public static CrawlerNode parse(Element element) {
        CrawlerNode crawlerNode = new CrawlerNode();
        crawlerNode.setElement(element);
        crawlerNode.setType(attributeOrEmpty(element, "type"));
        crawlerNode.setXpath(attributeOrEmpty(element, "xpath"));
        // The tag name becomes the property name in the output JSON
        crawlerNode.setName(element.getName());
        // getTextTrim() drops the whitespace around child elements
        crawlerNode.setValue(element.getTextTrim());
        crawlerNode.setMethod(attributeOrEmpty(element, "method"));
        Element scriptElement = element.element("script");
        crawlerNode.setScript(scriptElement == null ? "" : scriptElement.getText());
        crawlerNode.setDistinct(attributeOrEmpty(element, "distinct"));
        return crawlerNode;
    }

    /** Returns the attribute value, or "" when the attribute is absent. */
    private static String attributeOrEmpty(Element element, String attributeName) {
        String attributeValue = element.attributeValue(attributeName);
        return attributeValue == null ? "" : attributeValue;
    }

    public String getScript() {
        return script;
    }

    public void setScript(String script) {
        this.script = script;
    }

    public Element getElement() {
        return element;
    }

    public void setElement(Element element) {
        this.element = element;
    }

    @Override
    public String toString() {
        return "CrawlerNode [type=" + type + ", xpath=" + xpath + ", name=" + name + ", value=" + value
                + ", method=" + method + ", distinct=" + distinct + "]";
    }
}

Step 3: parse the configuration file and crawl the data
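At its core this step is a recursive walk over the rule tree: parse(Element, JSONObject) skips ignored tags, turns list nodes into arrays, reads every other leaf as a single value and recurses into nodes with child rules to build nested objects. The sketch below models only that walk, without a browser, under stated assumptions: Rule is a hypothetical stand-in for CrawlerNode holding a name, a type and child rules; a Function<Rule, Object> replaces the Selenium lookups done by htmlValue and htmlValues; plain LinkedHashMap and ArrayList replace json-lib's JSONObject and JSONArray; and the lambda needs Java 8. Lists with child rules (the XPath/redirect and script-paging cases) are not modelled.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

public class RuleWalkSketch {

    /** Hypothetical stand-in for CrawlerNode: property name, optional "list" type, child rules. */
    static final class Rule {
        final String name;
        final String type;
        final List<Rule> children;

        Rule(String name, String type, Rule... children) {
            this.name = name;
            this.type = type;
            this.children = Arrays.asList(children);
        }
    }

    /** Walks the rules the way CrawlerMain.parse(Element, JSONObject) does, filling target in place. */
    static Map<String, Object> walk(List<Rule> rules, Map<String, Object> target,
                                    Function<Rule, Object> lookup) {
        for (Rule rule : rules) {
            // Ignored tags (CrawlerConfig.NODE_IGNORE) are skipped entirely
            if ("script".equalsIgnoreCase(rule.name)) {
                continue;
            }
            if ("list".equalsIgnoreCase(rule.type)) {
                // Leaf list, like htmlValues: copy every value the lookup returns
                List<Object> items = new ArrayList<Object>();
                Object found = lookup.apply(rule);
                if (found instanceof List) {
                    items.addAll((List<?>) found);
                }
                target.put(rule.name, items);
            } else if (rule.children.isEmpty()) {
                // Single value, like htmlValue
                target.put(rule.name, lookup.apply(rule));
            } else {
                // Nested object, like parseObject: recurse into the child rules
                target.put(rule.name, walk(rule.children, new LinkedHashMap<String, Object>(), lookup));
            }
        }
        return target;
    }

    /** Runs the walk against fake page data keyed by rule name. */
    static Map<String, Object> demo() {
        Map<String, Object> page = new LinkedHashMap<String, Object>();
        page.put("url", "http://example.com/p/1");
        page.put("title", "Sample product");
        page.put("images", Arrays.asList("a.jpg", "b.jpg"));
        List<Rule> rules = Arrays.asList(
                new Rule("url", ""),
                new Rule("product", "",
                        new Rule("title", ""),
                        new Rule("images", "list"),
                        new Rule("script", "")));
        return walk(rules, new LinkedHashMap<String, Object>(), rule -> page.get(rule.name));
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

demo() returns {url=http://example.com/p/1, product={title=Sample product, images=[a.jpg, b.jpg]}}; the script rule is dropped, just as NODE_IGNORE drops it in the crawler.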

package com.newkdd.web.selenium;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStreamWriter;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.text.SimpleDateFormat;
import java.util.ArrayList;
import java.util.Date;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Map.Entry;
import java.util.concurrent.TimeUnit;

import org.apache.commons.lang3.StringUtils;
import org.dom4j.Document;
import org.dom4j.Element;
import org.dom4j.io.SAXReader;
import org.openqa.selenium.By;
import org.openqa.selenium.JavascriptExecutor;
import org.openqa.selenium.TimeoutException;
import org.openqa.selenium.WebDriver;
import org.openqa.selenium.WebDriverException;
import org.openqa.selenium.WebElement;
import org.openqa.selenium.firefox.FirefoxDriver;

import net.sf.json.JSONArray;
import net.sf.json.JSONObject;

public class CrawlerMain {

    /** Timestamp formatter (HH = 24-hour clock; hh would be 12-hour) */
    private final static SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

    /** Browser driver */
    private static WebDriver driver;

    /** Crawl result */
    private static JSONObject resultJson = new JSONObject();

    /** URLs already crawled for the current site */
    private static List<String> urls = new ArrayList<String>();

    /** Site configuration */
    private static CrawlerHead headNode;

    /**
     * Test entry point.
     *
     * @param args unused
     */
    public static void main(String[] args) {
        System.out.println("// crawl start time \t start:" + dateFormat.format(new Date()));
        try {
            parse("Crawler.xml");
        } catch (Exception e) {
            e.printStackTrace();
        }
        System.out.println("// crawl end time \t end:" + dateFormat.format(new Date()));
    }

    public static void parse(String xml) throws Exception {
        // Read the rule file with dom4j (resolved relative to this class's package)
        SAXReader reader = new SAXReader();
        Document document = reader.read(CrawlerMain.class.getResource(xml));
        // Root element
        Element root = document.getRootElement();
        try {
            headNode = CrawlerHead.parse(root);
            resultJson.put("head", JSONObject.fromObject(headNode));
            // Start the browser
            System.setProperty("webdriver.firefox.bin", CrawlerConfig.WEB_DRIVER_PATH);
            driver = new FirefoxDriver();
            // Load the site's start page
            load(headNode.getWebsiteUrl());
            // Reset the URL cache used for de-duplication
            urls = new ArrayList<String>();
            // Apply the rules under <content>
            Element content = root.element("content");
            resultJson = parse(content, resultJson);
            System.out.println(resultJson.toString());
            write(resultJson.toString());
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            // quit() ends the whole WebDriver session; close() would only close the current window
            if (driver != null) {
                driver.quit();
            }
        }
    }

    /**
     * Writes the JSON result as UTF-8 to a file named siteId[timestamp].json in the classpath root.
     *
     * @param json the JSON text
     */
    public static void write(String json) {
        File jsonFile = new File(CrawlerMain.class.getResource("/").getPath()
                + headNode.getWebsiteId() + "[" + System.currentTimeMillis() + "].json");
        // FileOutputStream creates or truncates the file; UTF-8 keeps non-ASCII text intact
        try (Writer writer = new OutputStreamWriter(new FileOutputStream(jsonFile), StandardCharsets.UTF_8)) {
            writer.write(json);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /**
     * Recursively applies the rules under parentElement.
     *
     * @param parentElement rule configuration
     * @param inputJson JSON assembled so far
     * @return inputJson with the parsed properties added
     */
    @SuppressWarnings("unchecked")
    public static JSONObject parse(Element parentElement, JSONObject inputJson) {
        // Iterate over the child rule elements
        for (Iterator<Element> it = parentElement.elementIterator(); it.hasNext();) {
            Element element = it.next();
            // Read this node's rule
            CrawlerNode crawlerNode = CrawlerNode.parse(element);
            // Ignored nodes are not parsed
            if (CrawlerConfig.NODE_IGNORE.contains(crawlerNode.getName().toUpperCase())) {
                continue;
            }
            if (CrawlerConfig.NODE_TYPE.LIST.toString().equalsIgnoreCase(crawlerNode.getType())) {
                // Array property
                inputJson.put(crawlerNode.getName(), parseList(crawlerNode));
            } else {
                // Single-valued property (also the default when no type is configured)
                inputJson.put(crawlerNode.getName(), parseObject(crawlerNode).get(crawlerNode.getName()));
            }
        }
        return inputJson;
    }

    /**
     * Parses a single-valued property.
     *
     * @param crawlerNode rule configuration
     * @return a JSON object holding the value under the node's name
     */
    private static JSONObject parseObject(CrawlerNode crawlerNode) {
        // Read the node's own value
        JSONObject jsonObject = htmlValue(crawlerNode);
        // Parse child properties into the nested object
        if (crawlerNode.getElement().elements().size() > 0) {
            parse(crawlerNode.getElement(), jsonObject.getJSONObject(crawlerNode.getName()));
        }
        return jsonObject;
    }

    /**
     * Parses an array property.
     *
     * @param crawlerNode rule configuration
     * @return the collected array
     */
    private static JSONArray parseList(CrawlerNode crawlerNode) {
        JSONArray jsonArray = new JSONArray();
        if (crawlerNode.getElement().elements().size() > 0) {
            if (StringUtils.isNotBlank(crawlerNode.getXpath())) {
                // The elements can be located directly by XPath
                parseListByXpath(jsonArray, crawlerNode);
            } else if (StringUtils.isNotBlank(crawlerNode.getScript())) {
                // The elements are not known up front (e.g. paging): step through them with a script
                parseListByScript(jsonArray, crawlerNode);
            }
        } else {
            // Leaf list: collect the values of all matching elements
            jsonArray = htmlValues(crawlerNode);
        }
        return jsonArray;
    }

    /**
     * Parses a collection located by XPath.
     *
     * @param jsonArray array to fill
     * @param crawlerNode rule configuration
     */
    private static void parseListByXpath(JSONArray jsonArray, CrawlerNode crawlerNode) {
        Map<String, WebElement> webElements = getWebElements(crawlerNode.getXpath(), crawlerNode.getDistinct());
        for (Entry<String, WebElement> entry : webElements.entrySet()) {
            // With method="redirect" the map key is opened as a URL, so "distinct"
            // must name a URL-valued attribute such as href
            if ("redirect".equalsIgnoreCase(crawlerNode.getMethod())) {
                if (urls.contains(entry.getKey())) {
                    continue;
                }
                urls.add(entry.getKey());
                load(entry.getKey());
            }
            JSONObject jsonObject = parse(crawlerNode.getElement(), new JSONObject());
            jsonArray.add(jsonObject);
        }
    }

    /**
     * Parses a collection by running a paging script until it stops returning true.
     *
     * @param jsonArray array to fill
     * @param crawlerNode rule configuration
     */
    private static void parseListByScript(JSONArray jsonArray, CrawlerNode crawlerNode) {
        // URLs of the pages visited by the paging script
        List<String> tempURLs = new ArrayList<String>();
        while (true) {
            if (!tempURLs.contains(driver.getCurrentUrl())) {
                tempURLs.add(driver.getCurrentUrl());
            }
            // The script moves to the next page and must return true while more pages remain
            boolean hasNext;
            try {
                Object result = ((JavascriptExecutor) driver).executeScript(crawlerNode.getScript());
                hasNext = Boolean.TRUE.equals(result);
            } catch (WebDriverException e) {
                hasNext = false;
            }
            // Paging finished (false, null or any non-boolean result): leave the loop
            if (!hasNext) {
                break;
            }
        }
        // Parse every collected page
        for (String url : tempURLs) {
            load(url);
            JSONObject jsonObject = parse(crawlerNode.getElement(), new JSONObject());
            jsonArray.add(jsonObject);
            urls.add(url);
        }
    }

    /**
     * Loads a URL with the site's page-load timeout
     * (CrawlerConfig.TIME_OUT_DEFAULT, 10 seconds, when none is configured).
     *
     * @param url address to load
     */
    public static void load(String url) {
        try {
            driver.manage().timeouts().pageLoadTimeout(headNode.getTimeout(), TimeUnit.SECONDS);
            driver.get(url);
        } catch (TimeoutException e) {
            System.out.println("time out of " + headNode.getTimeout() + " S :" + url);
            // Stop loading and work with what has loaded so far
            ((JavascriptExecutor) driver).executeScript("window.stop()");
        } finally {
            injectjQueryIfNeeded();
        }
    }

    /**
     * Loads a URL with an explicit page-load timeout.
     *
     * @param url address to load
     * @param timeout page-load timeout in seconds; null means the site's configured timeout
     */
    public static void load(String url, Integer timeout) {
        if (timeout == null) {
            timeout = headNode.getTimeout();
        }
        try {
            driver.manage().timeouts().pageLoadTimeout(timeout, TimeUnit.SECONDS);
            driver.get(url);
        } catch (TimeoutException e) {
            System.out.println("time out of " + timeout + " S :" + url);
            // Stop the browser from loading further after the timeout
            ((JavascriptExecutor) driver).executeScript("window.stop()");
        }
    }

    /**
     * Reads a single value.
     *
     * @param crawlerNode rule configuration
     * @return a JSON object holding the value under the node's name
     */
    public static JSONObject htmlValue(CrawlerNode crawlerNode) {
        JSONObject jsonObject = new JSONObject();
        String value = crawlerNode.getValue();
        if (StringUtils.isBlank(value)) {
            // No value source configured: the node is a nested object
            jsonObject.put(crawlerNode.getName(), new JSONObject());
        } else if (CrawlerConfig.NODE_VALUE_FROM.LOCATION.toString().equalsIgnoreCase(value)) {
            // The current page URL
            jsonObject.put(crawlerNode.getName(), driver.getCurrentUrl());
        } else if (StringUtils.isNotBlank(crawlerNode.getXpath())) {
            try {
                WebElement webElement = driver.findElement(By.xpath(crawlerNode.getXpath()));
                if (CrawlerConfig.NODE_VALUE_FROM.TEXT.toString().equalsIgnoreCase(value)) {
                    jsonObject.put(crawlerNode.getName(), webElement.getText());
                } else {
                    // Any other value is used as an attribute name (src, href, value, ...)
                    jsonObject.put(crawlerNode.getName(), webElement.getAttribute(value));
                }
            } catch (WebDriverException e) {
                // Element not found: leave the property out
            }
        } else {
            jsonObject.put(crawlerNode.getName(), new JSONObject());
        }
        return jsonObject;
    }

    /**
     * Reads the values of all elements matching the node's XPath, without duplicates.
     *
     * @param crawlerNode rule configuration
     * @return the collected values
     */
    public static JSONArray htmlValues(CrawlerNode crawlerNode) {
        JSONArray result = new JSONArray();
        if (StringUtils.isNotBlank(crawlerNode.getXpath())) {
            try {
                List<String> collection = new ArrayList<String>();
                List<WebElement> webElements = driver.findElements(By.xpath(crawlerNode.getXpath()));
                for (WebElement webElement : webElements) {
                    if (StringUtils.isBlank(crawlerNode.getValue())) {
                        // No value source configured: add a placeholder per element
                        collection.add("");
                        continue;
                    }
                    // TEXT reads the visible text; anything else is read as an attribute
                    String value = CrawlerConfig.NODE_VALUE_FROM.TEXT.toString().equalsIgnoreCase(crawlerNode.getValue())
                            ? webElement.getText()
                            : webElement.getAttribute(crawlerNode.getValue());
                    if (!collection.contains(value)) {
                        collection.add(value);
                    }
                }
                result = JSONArray.fromObject(collection);
            } catch (WebDriverException e) {
                // Lookup failed: return an empty array
            }
        }
        return result;
    }

    /**
     * Finds the elements matching an XPath, de-duplicated by an attribute value.
     *
     * @param xpath element XPath
     * @param distinctValue attribute used as the de-duplication key; when blank, elements are keyed "1", "2", ...
     * @return the elements keyed by de-duplication value, in page order
     */
    public static Map<String, WebElement> getWebElements(String xpath, String distinctValue) {
        // LinkedHashMap keeps page order; a HashMap would iterate in arbitrary order
        Map<String, WebElement> map = new LinkedHashMap<String, WebElement>();
        if (StringUtils.isNotBlank(xpath)) {
            List<WebElement> webElements = driver.findElements(By.xpath(xpath));
            int index = 0;
            for (WebElement webElement : webElements) {
                if (StringUtils.isBlank(distinctValue)) {
                    index++;
                    map.put(String.valueOf(index), webElement);
                } else {
                    map.put(webElement.getAttribute(distinctValue), webElement);
                }
            }
        }
        return map;
    }

    /** Loads the required plugin [jQuery] if the page does not have it yet. */
    public static void injectjQueryIfNeeded() {
        if (!jQueryLoaded()) {
            injectjQuery();
        }
    }

    /**
     * Checks whether jQuery is available on the current page.
     *
     * @return true if jQuery is loaded
     */
    public static Boolean jQueryLoaded() {
        try {
            Object result = ((JavascriptExecutor) driver).executeScript("return typeof jQuery !== 'undefined'");
            return Boolean.TRUE.equals(result);
        } catch (WebDriverException e) {
            return false;
        }
    }

    /**
     * Injects jQuery from CrawlerConfig.JQUERY_PATH by appending a script tag to the page head.
     * The script loads asynchronously, so jQuery may not be usable immediately afterwards.
     */
    public static void injectjQuery() {
        ((JavascriptExecutor) driver).executeScript("var headID = document.getElementsByTagName('head')[0];"
                + "var newScript = document.createElement('script');"
                + "newScript.type = 'text/javascript';"
                + "newScript.src = '" + CrawlerConfig.JQUERY_PATH + "';"
                + "headID.appendChild(newScript);");
    }

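}

Finally, the POM. The project coordinates are com.newkdd.web:Crawler:0.0.1-SNAPSHOT (model version 4.0.0), with these dependencies:

- org.seleniumhq.selenium:selenium-java:2.53.1

- dom4j:dom4j:1.6.1

- xml-apis:xml-apis:1.4.01

- net.sf.json-lib:json-lib:2.4 (classifier jdk15)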
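Two details of the list handling in CrawlerMain are worth isolating. getWebElements keys the matched elements by the distinct attribute, so duplicates collapse into one entry, and the choice of map decides the order: a HashMap iterates in an arbitrary order, a LinkedHashMap in page order. parseListByScript keeps calling the paging script until it stops returning true, so a script that never reports the last page would loop forever. The sketch below shows both ideas without a browser, using plain strings and suppliers as hypothetical stand-ins for WebElement and executeScript; the maxSteps cap is an added safety assumption, not part of the original code, and the lambdas need Java 8.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

public class ListHelpersSketch {

    /**
     * Maps each distinct key to its index in first-seen order, like getWebElements with a
     * LinkedHashMap: a repeated key keeps its original position but its value is overwritten.
     */
    static Map<String, Integer> distinctByKey(List<String> keys) {
        Map<String, Integer> map = new LinkedHashMap<String, Integer>();
        for (int i = 0; i < keys.size(); i++) {
            map.put(keys.get(i), i);
        }
        return map;
    }

    /**
     * Records the current URL, then asks nextPage to advance, like parseListByScript.
     * Only Boolean.TRUE continues; null or any other result ends paging.
     * maxSteps bounds the loop even if the paging step keeps answering true.
     */
    static List<String> collectPages(Supplier<String> currentUrl, Supplier<Object> nextPage, int maxSteps) {
        List<String> visited = new ArrayList<String>();
        for (int step = 0; step < maxSteps; step++) {
            String url = currentUrl.get();
            if (!visited.contains(url)) {
                visited.add(url);
            }
            if (!Boolean.TRUE.equals(nextPage.get())) {
                break;
            }
        }
        return visited;
    }

    /** Fake three-page listing: the paging step advances until page 3, then returns null. */
    static List<String> demoPaging() {
        final int[] page = {1};
        Supplier<String> current = () -> "list.html?page=" + page[0];
        Supplier<Object> next = () -> {
            if (page[0] < 3) {
                page[0]++;
                return Boolean.TRUE;
            }
            return null;
        };
        return collectPages(current, next, 50);
    }

    public static void main(String[] args) {
        System.out.println(distinctByKey(Arrays.asList("/p/1", "/p/2", "/p/1", "/p/3")).keySet());
        System.out.println(demoPaging());
    }
}
```

main prints [/p/1, /p/2, /p/3] and then [list.html?page=1, list.html?page=2, list.html?page=3]; a paging step that always returns true on the same URL stops after maxSteps iterations with a single entry.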

Full code download: https://git.oschina.net/newkdd/Crawler