《Machine Learning(Tom M. Mitchell)》读书笔记——11、第十章

1. Introduction (about machine learning)

2. Concept Learning and the General-to-Specific Ordering

3. Decision Tree Learning

4. Artificial Neural Networks

5. Evaluating Hypotheses

6. Bayesian Learning

7. Computational Learning Theory

8. Instance-Based Learning

9. Genetic Algorithms

10. Learning Sets of Rules

11. Analytical Learning

12. Combining Inductive and Analytical Learning

13. Reinforcement Learning

10. Learning Sets of Rules

One of the most expressive and human readable representations for learned hypothe- ses is sets of if-then rules. This chapter explores several algorithms for learning such sets of rules.

10.1 INTRODUCTION

As shown in Chapter 3, one way to learn sets of rules is to first learn a decision tree, then translate the tree into an equivalent set of rules-one rule for each leaf node in the tree. A second method, illustrated in Chapter 9, is to use a genetic algorithm that encodes each rule set as a bit string and uses genetic search operators to explore this hypothesis space. In this chapter we explore a variety of algorithms that directly learn rule sets and that differ from these algorithms in two key respects. First, they are designed to learn sets of first-order rules(一阶规则) that contain variables. This is significant because first-order rules are much more expressive than propositional rules(命题规则). Second, the algorithms discussed here use sequential covering algorithms(序列覆盖算法) that learn one rule at a time to incrementally grow the final set of rules.

In this chapter we begin by considering algorithms that learn sets of propositional rules; that is, rules without variables. Algorithms for searching the hypothesis space to learn disjunctive sets of rules are most easily understood in this setting. We then consider extensions of these algorithms to learn first-order rules. Two general approaches to inductive logic programming(归纳逻辑编程) are then considered, and the fundamental relationship between inductive and deductive inference(归纳推理和演绎推理) is explored.

10.2 SEQUENTIAL COVERING ALGORITHMS

Here we consider a family of algorithms for learning rule sets based on the strategy of learning one rule, removing the data it covers, then iterating this process. Such algorithms are called sequential covering algorithms. A prototypical sequential covering algorithm is described in Table 10.1.

This sequential covering algorithm is one of the most widespread approaches to learning disjunctive sets of rules. It reduces the problem of learning a disjunctive set of rules to a sequence of simpler problems, each requiring that a single conjunctive rule be learned. Because it performs a greedy search, formulating a sequence of rules without backtracking, it is not guaranteed to find the smallest or best set of rules that cover the training examples.

10.2.1 General to Specific Beam Search(一般到特殊柱状搜索)

10.3 LEARNING RULE SETS: SUMMARY

This section considers several key dimensions(关键维度) in the design space of such rule learning algorithms.

First, sequential covering algorithms learn one rule at a time, removing the covered examples and repeating the process on the remaining examples. In contrast, decision tree algorithms such as ID3 learn the entire set of disjuncts simultaneously as part of the single search for an acceptable decision tree. We might, therefore, call algorithms such as ID3 simultaneous covering algorithms(并行覆盖算法), in contrast to sequential covering algorithms such as CN2. Which should we prefer? The key difference occurs in the choice made at the most primitive step in the search. At each search step ID3 chooses among alternative attributes by comparing the partitions of the data they generate. In contrast, CN2 chooses among alternative attribute-value pairs, by comparing the subsets of data they cover.

A second dimension along which approaches vary is the direction of the search in LEARN-ONE-RUILn E. the algorithm described above, the search is from general to specijic hypotheses. Other algorithms we have discussed (e.g., FIND-S from Chapter 2) search from specijic to general.

A third dimension is whether the LEARN-ONE-RULE search is a generate then test search through the syntactically legal hypotheses, as it is in our suggested implementation, or whether it is example-driven so that individual training examples constrain the generation of hypotheses. One important advantage of the generate and test approach is that each choice in the search is based on the hypothesis performance over many examples, so that the impact of noisy data is minimized. In contrast, example-driven algorithms that refine the hypothesis based on individual examples are more easily misled by a single noisy training example and are therefore less robust to errors in the training data.

A fourth dimension is whether and how rules are post-pruned.

A final dimension is the particular definition of rule PERFORMANCE used to guide the search in LEARN-ONE-RULE.

Relative frequency(相对频率): Nc / N

m-estimate of accuracy(精度的m-估计): (Nc + M*p) / (N + M)

(negative of) Entropy.

10.4 LEARNING FIRST-ORDER RULES

In the previous sections we discussed algorithms for learning sets of propositional (i.e., variable-free) rules. In this section, we consider learning rules that contain variables-in particular, learning first-order Horn theories. Our motivation for considering such rules is that they are much more expressive than propositional rules.

10.4.1 First-Order Horn Clauses

The problem is that propositional representations offer no general way to describe the essential relations among the values of the attributes.

First-order Horn clauses may also refer to variables in the preconditions that do not occur in the postconditions.

It is also possible to use the same predicates in the rule postconditions and preconditions, enabling the description of recursive rules.

10.4.2 Terminology(术语)

常量、变量、谓词、函数

项

文字、基本文字、负文字、正文字

子句、be universally quantified(被全称量化)、be existentially quantified(被存在量化)

Horn子句

置换

合一置换

10.5 LEARNING SETS OF FIRST-ORDER RULES: FOIL

The FOIL algorithm is summarized in Table 10.4.

Notice the outer loop corresponds to a variant of the SEQUENTIAL-COVERING algorithm discussed earlier; that is, it learns new rules one at a time, removing the positive examples covered by the latest rule before attempting to learn the next rule. The inner loop corresponds to a variant of our earlier LEARN-ONE-RULE algorithm, extended to accommodate first-order rules.

The hypothesis space search performed by FOIL is best understood by viewing it hierarchically(层次的). Each iteration through FOIL'S outer loop adds a new rule to its disjunctive hypothesis, Learned_rules. The effect of each new rule is to generalize the current disjunctive hypothesis (i.e., to increase the number of instances it classifies as positive), by adding a,new disjunct. Viewed at this level, the search is a specific-to-general search through the space of hypotheses, beginning with the most specific empty disjunction and terminating when the hypothesis is sufficiently general to cover all positive training examples. The inner loop of FOIL performs a finer-grained search to determine the exact definition of each new rule. This inner loop searches a second hypothesis space, consisting of conjunctions of literals, to find a conjunction that will form the preconditions for the new rule. Within this hypothesis space, it conducts a general-to-specific, hill-climbing search, beginning with the most general preconditions possible (the empty precondition), then adding literals one at a time to specialize the rule until it avoids all negative examples.

10.5.1 Generating Candidate Specializations in FOIL: Candidate_literals

The negation of either of the above forms of literals.

10.5.2 Guiding the Search in FOIL: Foil_Gain(L, R)

10.5.3 Learning Recursive Rule Sets

...

10.5.4 Summary of FOIL

In the case of noise-free training data, FOIL may continue adding new literals to the rule until it covers no negative examples. To handle noisy data, the search is continued until some tradeoff occurs between rule accuracy, coverage, and complexity. FOIL uses a minimum description length approach to halt the growth of rules, in which new literals are added only when their description length is shorter than the description length of the training data they explain. The details of this strategy are given in Quinlan (1990). In addition, FOIL post-prunes each rule it learns, using the same rule post-pruning strategy used for decision trees (Chapter 3).

10.6 INDUCTION AS INVERTED DEDUCTION(作为逆演绎的归纳)

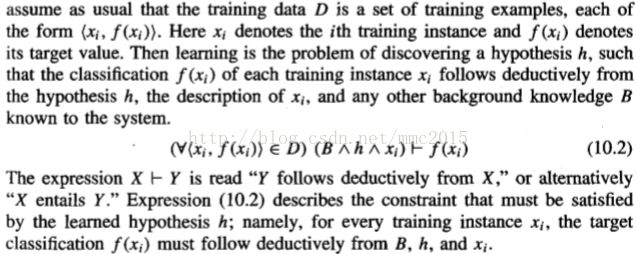

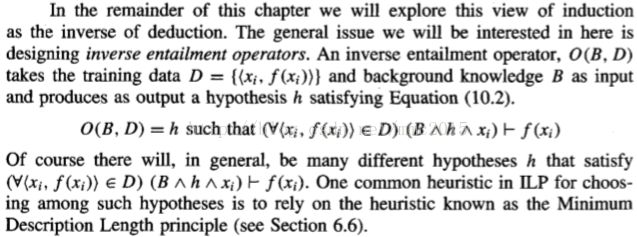

each training instance xi follows deductively from(由h演绎派生) the hypothesis h; X entails(蕴涵) Y

Research on inductive logic programing following this formulation has encountered several practical difficulties: noisy training data, the number of hy- potheses is so large, the complexity of the hypothesis space search increases as background knowledge B is increased.

In the following section, we examine one quite general inverse entailment operator that constructs hypotheses by inverting a deductive inference rule.

10.7 INVERTING RESOLUTION(逆归纳)

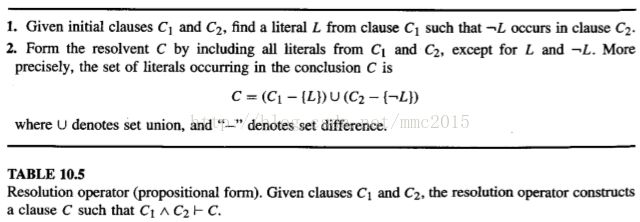

Resolution operator(归纳算子) of propositional form:

Inverse resolution operator (propositional form).:

10.7.1 First-Order Resolution(一阶归纳)

The resolution rule extends easily to first-order expressions. As in the propositional case, it takes two clauses as input and produces a third clause as output. The key difference from the propositional case is that the process is now based on the notion of unifying substitutions(合一置换).

10.7.2 Inverting Resolution(逆归纳): First-Order Case

10.7.4 Generalization, 8-Subsumption, and Entailment

10.8 SUMMARY AND FURTHER READING

The sequential covering algorithm learns a disjunctive set of rules by first learning a single accurate rule, then removing the positive examples covered by this rule and iterating the process over the remaining training examples. It provides an efficient, greedy algorithm for learning rule sets, and an alternative to top-down decision tree learning algorithms such as ID3, which can be viewed as simultaneous, rather than sequential covering algorithms.

In the context of sequential covering algorithms, a variety of methods have been explored for learning a single rule. These methods vary in the search strategy they use for examining the space of possible rule preconditions. One popular approach, exemplified by the CN2 program, is to conduct a general-to-specific beam search, generating and testing progressively more specific rules until a sufficiently accurate rule is found. Alternative approaches search from specific to general hypotheses, use an example-driven search rather than generate and test, and employ different statistical measures of rule accuracy to guide the search.

Sets of first-order rules (i.e., rules containing variables) provide a highly expressive representation. For example, the programming language PROLOG represents general programs using collections of first-order Horn clauses. The problem of learning first-order Horn clauses is therefore often referred to as the problem of inductive logic programming.

One approach to learning sets of first-order rules is to extend the sequential covering algorithm of CN2 from propositional to first-order representations. This approach is exemplified by the FOIL program, which can learn sets of first-order rules, including simple recursive rule sets.

Following the view of induction as the inverse of deduction, some programs search for hypotheses by using operators that invert the well-known opera- tors for deductive reasoning. For example, CIGOL uses inverse resolution, an operation that is the inverse of the deductive resolution operator commonly used for mechanical theorem proving. PROGOL combines an inverse entail- ment strategy with a general-to-specific strategy for searching the hypothesis space.