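Collecting log data for Elasticsearch with Logstash (in a specified format)

filter: filters events

stdin: terminal input

stdout: terminal output

rubydebug: codec that changes the output format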

Type 1: storing information in a specified file

[root@server1 tmp]# cd /etc/logstash/conf.d/

[root@server1 conf.d]# vim message.conf

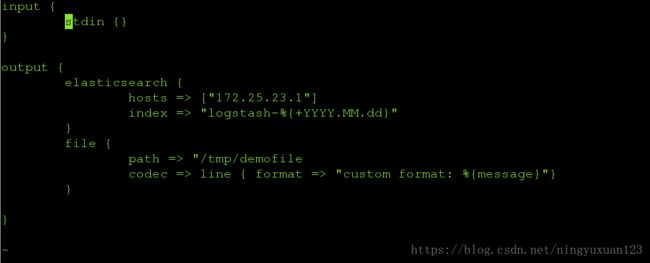

input {

stdin {}

}

output {

elasticsearch {

hosts => ["172.25.23.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

file {

path => "/tmp/demo.file"

codec => line { format => "custom format: %{message}"}

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

Settings: Default pipeline workers: 1

Pipeline main started

love

{

"message" => "love",

"@version" => "1",

"@timestamp" => "2018-08-25T03:20:25.772Z",

"host" => "server1"

}

love

{

"message" => "love",

"@version" => "1",

"@timestamp" => "2018-08-25T03:20:28.239Z",

"host" => "server1"

}

dead

{

"message" => "dead",

"@version" => "1",

"@timestamp" => "2018-08-25T03:20:30.673Z",

"host" => "server1"

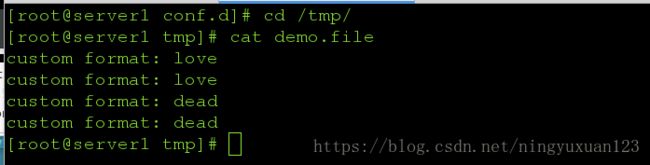

The corresponding information can be found in the directory specified in the configuration file:

[root@server1 conf.d]# cd /tmp

[root@server1 tmp]# ls

demo.file hsperfdata_elasticsearch hsperfdata_root jna--1985354563

[root@server1 tmp]# cat demo.file

custom format: love

custom format: love

custom format: dead

custom format: dead

Type 2: using the file module

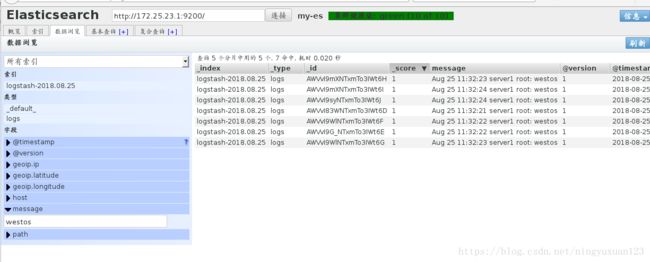

Collecting logs:

[root@server1 tmp]# cd /etc/logstash/conf.d/

[root@server1 conf.d]# vim message.conf

input {

file {

path => "/var/log/messages"

start_position => "beginning"

}

}

output {

elasticsearch {

hosts => ["172.25.23.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

}
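The index setting in the configuration above contains a date pattern, so each day's events go to their own index. As a rough illustration only, the Python sketch below derives such a name from a timestamp in the same form as the @timestamp values shown earlier. It assumes the date is taken from the event timestamp in UTC; the function name index_name and its prefix parameter are hypothetical.

```python
from datetime import datetime, timezone


def index_name(timestamp: str, prefix: str = "logstash-") -> str:
    """Build a daily index name such as logstash-2018.08.25 (illustrative helper)."""
    # Timestamps in the transcripts look like 2018-08-25T03:20:25.772Z (UTC).
    moment = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    moment = moment.replace(tzinfo=timezone.utc)
    # YYYY.MM.dd in the config corresponds to %Y.%m.%d in strftime notation.
    return prefix + moment.strftime("%Y.%m.%d")


print(index_name("2018-08-25T03:20:25.772Z"))  # prints logstash-2018.08.25
```

Passing prefix="apache-" gives names in the style of the Apache index used later in this post.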

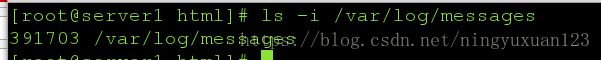

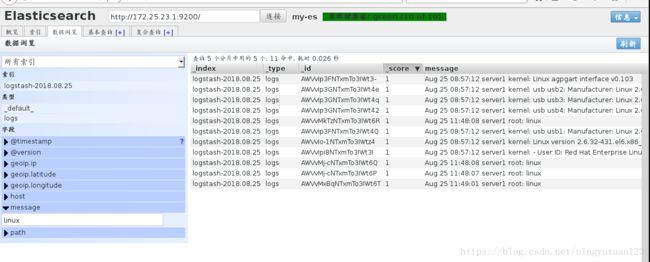

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/message.conf

[root@server1 ~]# cat .sincedb_452905a167cf4509fd08acb964fdb20c

391703 0 64768 32486

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# logger westos

[root@server1 ~]# logger linux

[root@server1 ~]# logger linux

[root@server1 ~]# logger linux

[root@server1 ~]# cat .sincedb_452905a167cf4509fd08acb964fdb20c

391703 0 64768 32743

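391703 is the inode number of the file. The last number is the byte offset up to which the file has been read, so it grows as new log lines are collected.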

[root@server1 html]# ls -i /var/log/messages

391703 /var/log/messages
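To make the two sincedb readings easier to compare, here is a small Python sketch that splits a record into its four numbers. It assumes the fields are, in order, the inode, the major and minor device numbers, and the byte offset; the names SincedbEntry and parse_sincedb_line are made up for this illustration.

```python
from typing import NamedTuple


class SincedbEntry(NamedTuple):
    inode: int          # matches the number printed by ls -i
    major_device: int   # assumed meaning of the second field
    minor_device: int   # assumed meaning of the third field
    offset: int         # bytes of the file already read


def parse_sincedb_line(line: str) -> SincedbEntry:
    """Split one whitespace-separated sincedb record into named fields."""
    inode, major, minor, offset = (int(field) for field in line.split())
    return SincedbEntry(inode, major, minor, offset)


before = parse_sincedb_line("391703 0 64768 32486")
after = parse_sincedb_line("391703 0 64768 32743")
print(after.inode)                   # prints 391703
print(after.offset - before.offset)  # prints 257
```

Under that reading, the difference of 257 is the amount of data appended to /var/log/messages by the logger commands between the two reads.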

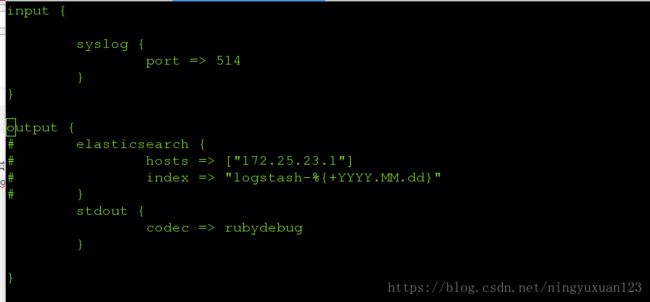

Type 3: collecting information remotely

[root@server1 conf.d]# vim syslog.conf

input {

syslog {

port => 514

}

}

output {

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/syslog.conf

Test:

[root@server2 elasticsearch]# vim /etc/rsyslog.conf

*.* @@172.25.23.1:514

[root@server2 elasticsearch]# /etc/init.d/rsyslog restart

Shutting down system logger: [ OK ]

Starting system logger: [ OK ]

[root@server2 elasticsearch]# logger westos

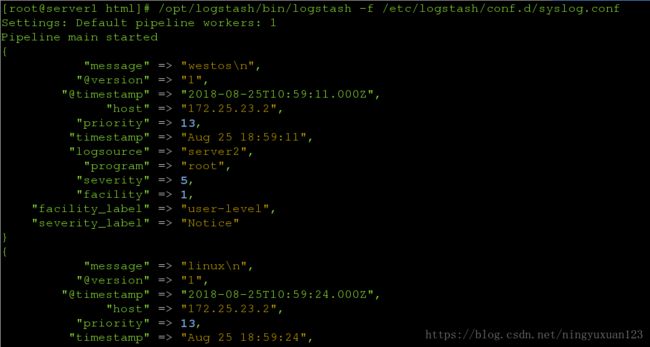

[root@server2 elasticsearch]# logger linux

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/syslog.conf

Settings: Default pipeline workers: 1

Pipeline main started

{

"message" => "westos\n",

"@version" => "1",

"@timestamp" => "2018-08-25T03:44:29.000Z",

"host" => "172.25.23.2",

"priority" => 13,

"timestamp" => "Aug 25 11:44:29",

"logsource" => "server2",

"program" => "root",

"severity" => 5,

"facility" => 1,

"facility_label" => "user-level",

"severity_label" => "Notice"

}

{

"message" => "linux\n",

"@version" => "1",

"@timestamp" => "2018-08-25T03:44:43.000Z",

"host" => "172.25.23.2",

"priority" => 13,

"timestamp" => "Aug 25 11:44:43",

"logsource" => "server2",

"program" => "root",

"severity" => 5,

"facility" => 1,

"facility_label" => "user-level",

"severity_label" => "Notice"

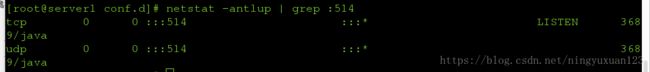
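The priority, facility, and severity fields in the events above are related: in the syslog protocol the priority value is facility * 8 + severity. The short Python sketch below checks this against the values in the output; decode_priority is a hypothetical helper name.

```python
from typing import Tuple


def decode_priority(priority: int) -> Tuple[int, int]:
    """Split a syslog priority value into (facility, severity)."""
    # priority = facility * 8 + severity, so divmod by 8 recovers both parts.
    return divmod(priority, 8)


facility, severity = decode_priority(13)
print(facility, severity)  # prints 1 5
```

This agrees with the events shown: priority 13 corresponds to facility 1 (user-level) and severity 5 (Notice).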

After starting the service, check port 514.

Open a new shell to check:

[root@server1 ~]# netstat -antlup | grep :514

tcp 0 0 :::514 :::* LISTEN 2446/java

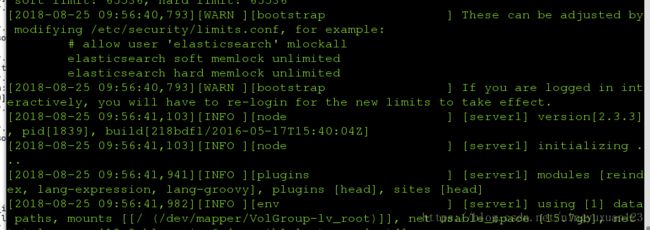

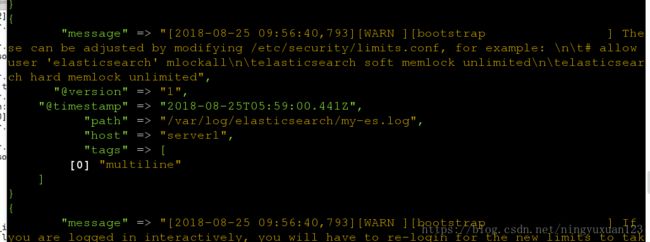

udp 0 0 :::514 :::* 2446/java

Type 4: collecting multi-line logs (some log entries span several lines)

A log entry that spans multiple lines:

[root@server1 elasticsearch]# cat my-es.log

[2018-08-25 09:56:40,793][WARN ][bootstrap ] These can be adjusted by modifying /etc/security/limits.conf, for example:

# allow user 'elasticsearch' mlockall

elasticsearch soft memlock unlimited

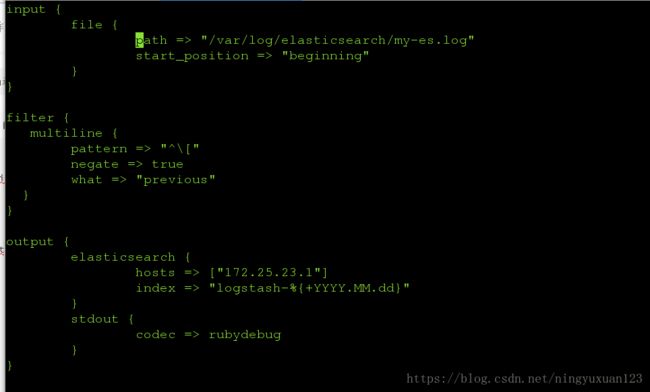

elasticsearch hard memlock unlimited

Write the configuration file that defines the collection and filter conditions:

[root@server1 elasticsearch]# cd /etc/logstash/

[root@server1 logstash]# ls

conf.d

[root@server1 logstash]# cd conf.d/

[root@server1 conf.d]# pwd

/etc/logstash/conf.d

[root@server1 conf.d]# ls

es.conf message.conf syslog.conf

[root@server1 conf.d]# cp message.conf cs.conf

[root@server1 conf.d]# vim cs.conf

input {

file {

path => "/var/log/elasticsearch/my-es.log"

start_position => "beginning"

}

}

filter {

multiline {

pattern => "^\["

negate => true

what => "previous"

}

}
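The filter block above treats a line starting with "[" as the beginning of a new event; with negate => true and what => "previous", any line that does not match is appended to the event before it. The following Python sketch imitates that merging rule on the sample log shown earlier. It is only an approximation for illustration, not the plugin's implementation, and merge_multiline is a hypothetical name.

```python
import re
from typing import Iterable, List


def merge_multiline(lines: Iterable[str], pattern: str = r"^\[") -> List[str]:
    """Join lines that do NOT match the pattern onto the previous event."""
    start_of_event = re.compile(pattern)
    events: List[str] = []
    for line in lines:
        if start_of_event.search(line) or not events:
            events.append(line)          # a matching line opens a new event
        else:
            events[-1] += "\n" + line    # a continuation line joins the previous one
    return events


sample = [
    "[2018-08-25 09:56:40,793][WARN ][bootstrap ] These can be adjusted by "
    "modifying /etc/security/limits.conf, for example:",
    "# allow user 'elasticsearch' mlockall",
    "elasticsearch soft memlock unlimited",
    "elasticsearch hard memlock unlimited",
]
events = merge_multiline(sample)
print(len(events))  # prints 1
```

The four physical lines end up in a single event, which is the behaviour the configuration is meant to produce.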

output {

elasticsearch {

hosts => ["172.25.23.1"]

index => "logstash-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

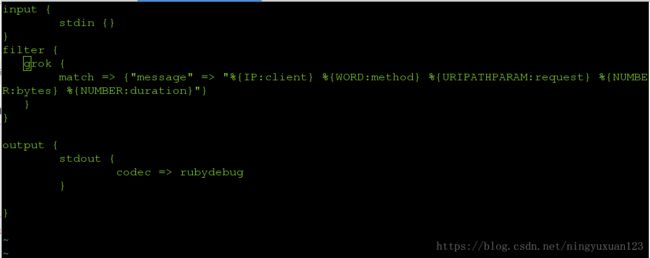

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/cs.conf

Type 5: collecting Apache logs

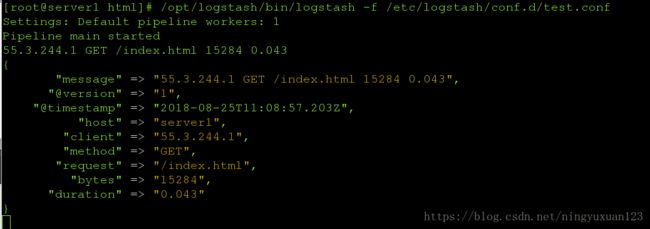

[root@server1 conf.d]# vim test.conf

input {

stdin {}

}

filter {

grok {

match => {"message" => "%{IP:client} %{WORD:method} %{URIPATHPARAM:request} %{NUMBER:bytes} %{NUMBER:duration}"}

}

}

output {

stdout {

codec => rubydebug

}

}

[root@server1 conf.d]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/test.conf
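To get a feel for what the grok pattern in test.conf extracts, the Python sketch below uses a simplified regular expression with the same field names. The sub-patterns only approximate the grok definitions IP, WORD, URIPATHPARAM, and NUMBER, and the sample input line is an assumption chosen to fit the pattern, not taken from this setup.

```python
import re
from typing import Dict, Optional

# Simplified stand-ins for the grok patterns named in the config.
LINE = re.compile(
    r"(?P<client>\d{1,3}(?:\.\d{1,3}){3}) "   # roughly %{IP:client}
    r"(?P<method>\w+) "                        # roughly %{WORD:method}
    r"(?P<request>/\S*) "                      # roughly %{URIPATHPARAM:request}
    r"(?P<bytes>\d+(?:\.\d+)?) "               # roughly %{NUMBER:bytes}
    r"(?P<duration>\d+(?:\.\d+)?)"             # roughly %{NUMBER:duration}
)


def parse(line: str) -> Optional[Dict[str, str]]:
    """Return the named fields as strings, or None when the line does not match."""
    match = LINE.match(line)
    return match.groupdict() if match else None


fields = parse("55.3.244.1 GET /index.html 15824 0.043")
print(fields["client"], fields["method"], fields["duration"])
# prints 55.3.244.1 GET 0.043
```

As in this sketch, the captured values are plain strings unless a conversion is requested.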

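Enter a test line by hand in the format the pattern expects.

[root@server1 conf.d]# yum install httpd

[root@server1 conf.d]# /etc/init.d/httpd start

Enter 172.25.23.1 in a browser to generate Apache log entries, which are then parsed and output in the specified format.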

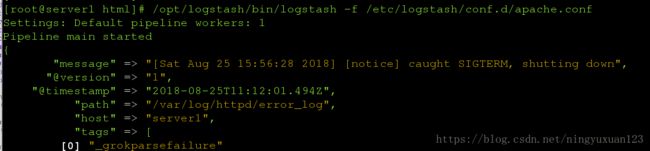

[root@server1 conf.d]# vim apache.conf

input {

file {

path => ["/var/log/httpd/access_log","/var/log/httpd/error_log"]

start_position => "beginning"

}

}

filter {

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

}

}

output {

elasticsearch {

hosts => ["172.25.23.1"]

index => "apache-%{+YYYY.MM.dd}"

}

stdout {

codec => rubydebug

}

}

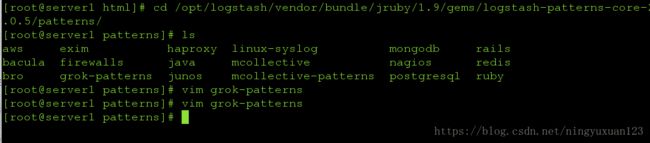

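The Apache pattern used in the configuration file ships with Logstash. The pattern files are in the directory below, where the definitions can be inspected and verified.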

[root@server1 html]# cd /opt/logstash/vendor/bundle/jruby/1.9/gems/logstash-patterns-core-2.0.5/patterns/

[root@server1 patterns]# ls

aws exim haproxy linux-syslog mongodb rails

bacula firewalls java mcollective nagios redis

bro grok-patterns junos mcollective-patterns postgresql ruby

[root@server1 patterns]# vim grok-patterns

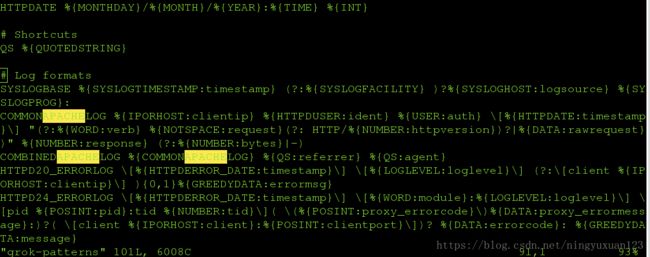

COMMONAPACHELOG %{IPORHOST:clientip} %{HTTPDUSER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "(?:%{WORD:verb} %{NOTSPACE:request}(?: HTTP/%{NUMBER:httpversion})?|%{DATA:rawrequest})" %{NUMBER:response} (?:%{NUMBER:bytes}|-)

[root@server1 html]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/apache.conf
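For readers who want to see what the COMMONAPACHELOG definition shown above captures, here is a rough Python equivalent. The regular expression is a simplification of the grok pattern that keeps its field names, and the sample access-log line is hypothetical, written in the standard common log format rather than copied from this server.

```python
import re
from typing import Dict, Optional

# Simplified counterpart of COMMONAPACHELOG with the same field names.
COMMON_LOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?" '
    r'(?P<response>\d+) (?:(?P<bytes>\d+)|-)'
)


def parse_access_line(line: str) -> Optional[Dict[str, Optional[str]]]:
    """Return the captured fields, or None if the line is not in common log format."""
    match = COMMON_LOG.match(line)
    return match.groupdict() if match else None


# Hypothetical request line for illustration only.
sample = '172.25.23.250 - - [25/Aug/2018:11:50:12 +0800] "GET / HTTP/1.1" 403 3985'
fields = parse_access_line(sample)
print(fields["clientip"], fields["verb"], fields["response"], fields["bytes"])
# prints 172.25.23.250 GET 403 3985
```

When the size column is "-", the bytes field is left empty (None here), mirroring the alternation at the end of the grok definition.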