Building a Cross-Platform Multi-Function Player with Qt and FFmpeg

Overview

This program uses Qt's QWidget for video rendering, Qt Multimedia for audio rendering, FFmpeg as the audio/video codec core, and CMake for cross-platform builds.

Build parameters:

DepsPath: CMake path of the FFmpeg libraries

QT_Dir: CMake path of Qt

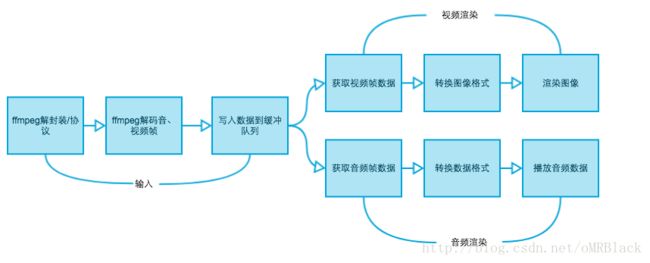

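The program is split into two parts: input and rendering. The input part opens the video stream, decodes audio and video frames from it, and stores them in separate data queues. The rendering part takes data from those queues, converts it to the required image or audio format, and renders it through Qt.

The program flow is shown in the figure below: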

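Data module

The data module encapsulates the data queues together with image format conversion and audio format conversion. It contains the AudioCvt, ImageCvt, and DataContext classes, plus a wrapper around FFmpeg's AVFrame data.

Image conversion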
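To make the role of the data queue concrete, here is a minimal sketch of a bounded, thread-safe queue that a decoding thread could fill and a rendering thread could drain. The class name `FrameQueue` and its `push`/`pop`/`close` methods are hypothetical and are not taken from the project's DataContext; the sketch only assumes a C++17 compiler.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <optional>
#include <queue>
#include <utility>

// Hypothetical bounded blocking queue (illustration only, not DataContext).
template <typename T>
class FrameQueue {
public:
    explicit FrameQueue(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }

    // Blocks while the queue is full. Returns false if the queue was closed.
    bool push(T item) {
        std::unique_lock<std::mutex> lock(mutex_);
        notFull_.wait(lock, [this] { return closed_ || items_.size() < capacity_; });
        if (closed_) return false;
        items_.push(std::move(item));
        notEmpty_.notify_one();
        return true;
    }

    // Blocks while the queue is empty. Returns std::nullopt once the queue
    // has been closed and drained, which tells the consumer to stop.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        notEmpty_.wait(lock, [this] { return closed_ || !items_.empty(); });
        if (items_.empty()) return std::nullopt;
        T item = std::move(items_.front());
        items_.pop();
        notFull_.notify_one();
        return item;
    }

    // Wakes every waiting producer and consumer so they can exit.
    void close() {
        std::lock_guard<std::mutex> lock(mutex_);
        closed_ = true;
        notEmpty_.notify_all();
        notFull_.notify_all();
    }

private:
    std::size_t capacity_;
    std::queue<T> items_;
    std::mutex mutex_;
    std::condition_variable notEmpty_;
    std::condition_variable notFull_;
    bool closed_ = false;
};
```

Bounding the queue keeps the decoder from running far ahead of playback, and the empty result after `close()` plays the same role as a null frame that ends a render loop.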

    ImageConvert::ImageConvert(AVPixelFormat in_pixelFormat, int in_width, int in_height,
                               AVPixelFormat out_pixelFormat, int out_width, int out_height)
    {
        this->in_pixelFormat = in_pixelFormat;
        this->out_pixelFormat = out_pixelFormat;
        this->in_width = in_width;
        this->in_height = in_height;
        this->out_width = out_width;
        this->out_height = out_height;

        // Scaler that converts from the input format/size to the output format/size.
        this->swsContext = sws_getContext(in_width, in_height, in_pixelFormat,
                                          out_width, out_height, out_pixelFormat,
                                          SWS_POINT, nullptr, nullptr, nullptr);

        // Output frame backed by a buffer owned by this class.
        // av_image_get_buffer_size() and av_image_fill_arrays() (libavutil/imgutils.h)
        // replace the deprecated avpicture_get_size() and avpicture_fill().
        this->frame = av_frame_alloc();
        this->buffer = (uint8_t*)av_malloc(
            av_image_get_buffer_size(out_pixelFormat, out_width, out_height, 1));
        av_image_fill_arrays(this->frame->data, this->frame->linesize, this->buffer,
                             out_pixelFormat, out_width, out_height, 1);
    }

    ImageConvert::~ImageConvert()
    {
        sws_freeContext(this->swsContext);
        av_frame_free(&this->frame);
        // The frame only points at the buffer, so it has to be freed separately.
        av_free(this->buffer);
    }

    void ImageConvert::convertImage(AVFrame *frame)
    {
        // Convert and scale the input frame into this->frame (and this->buffer).
        sws_scale(this->swsContext, (const uint8_t *const *) frame->data, frame->linesize, 0,
                  this->in_height, this->frame->data, this->frame->linesize);
        this->frame->width = this->out_width;
        this->frame->height = this->out_height;
        this->frame->format = this->out_pixelFormat;
        this->frame->pts = frame->pts;
    }
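The constructor above passes SWS_POINT to sws_getContext, which selects point (nearest-neighbour) sampling. As a rough illustration of that idea, independent of FFmpeg, the sketch below scales a tightly packed 32-bit image by copying the nearest source pixel. The function name `scaleNearest` is hypothetical, and the exact sample positions chosen by libswscale may differ from this simple integer mapping.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Nearest-neighbour scaling of a tightly packed image, one uint32_t per pixel.
// Illustration only: this is not how libswscale is implemented.
std::vector<uint32_t> scaleNearest(const std::vector<uint32_t>& src,
                                   int srcW, int srcH, int dstW, int dstH) {
    assert(srcW > 0 && srcH > 0 && dstW > 0 && dstH > 0);
    assert(src.size() == static_cast<std::size_t>(srcW) * srcH);

    std::vector<uint32_t> dst(static_cast<std::size_t>(dstW) * dstH);
    for (int y = 0; y < dstH; ++y) {
        // Map the destination row back to a source row.
        const std::size_t sy = static_cast<std::size_t>(y) * srcH / dstH;
        for (int x = 0; x < dstW; ++x) {
            // Map the destination column back to a source column.
            const std::size_t sx = static_cast<std::size_t>(x) * srcW / dstW;
            dst[static_cast<std::size_t>(y) * dstW + x] = src[sy * srcW + sx];
        }
    }
    return dst;
}
```

Point sampling is the cheapest scaling mode, which suits a player that rescales every frame to the widget size, at the cost of blockier output than bilinear or bicubic filtering.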

Audio conversion

    AudioConvert::AudioConvert(AVSampleFormat in_sample_fmt, int in_sample_rate, int in_channels,
                               AVSampleFormat out_sample_fmt, int out_sample_rate, int out_channels)
    {
        this->in_sample_fmt = in_sample_fmt;
        this->out_sample_fmt = out_sample_fmt;
        this->in_channels = in_channels;
        this->out_channels = out_channels;
        this->in_sample_rate = in_sample_rate;
        this->out_sample_rate = out_sample_rate;

        // Resampler from the input layout/format/rate to the output layout/format/rate.
        this->swrContext = swr_alloc_set_opts(nullptr,
                                              av_get_default_channel_layout(out_channels),
                                              out_sample_fmt,
                                              out_sample_rate,
                                              av_get_default_channel_layout(in_channels),
                                              in_sample_fmt,
                                              in_sample_rate, 0, nullptr);
        this->invalidated = false;
        swr_init(this->swrContext);

        // One pointer per output channel; av_samples_alloc() fills them in later.
        // Note sizeof(*buffer), the size of a pointer, not sizeof(**buffer).
        this->buffer = (uint8_t**)calloc(out_channels, sizeof(*this->buffer));
    }

    AudioConvert::~AudioConvert()
    {
        swr_free(&this->swrContext);
        av_freep(&this->buffer[0]);  // sample data allocated by av_samples_alloc()
        free(this->buffer);          // pointer array allocated by calloc()
    }

    int AudioConvert::allocContextSamples(int nb_samples)
    {
        // Allocate the sample buffer only once; invalidated marks that it exists.
        if(!this->invalidated)
        {
            this->invalidated = true;
            return av_samples_alloc(this->buffer, nullptr, this->out_channels,
                                    nb_samples, this->out_sample_fmt, 0);
        }
        return 0;
    }

    int AudioConvert::convertAudio(AVFrame *frame)
    {
        // swr_convert() returns the number of samples written per channel,
        // or a negative error code.
        int len = swr_convert(this->swrContext, this->buffer, frame->nb_samples,
                              (const uint8_t **) frame->extended_data, frame->nb_samples);
        if(len < 0)
            return len;
        this->bufferLen = this->out_channels * len * av_get_bytes_per_sample(this->out_sample_fmt);
        return this->bufferLen;
    }
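To show what a sample format conversion involves, the sketch below converts planar float samples (one array per channel, values in [-1, 1]) into interleaved signed 16-bit samples. This is only one example pairing, chosen on the assumption that the output is 16-bit PCM. The function name `planarFloatToInterleavedS16` is hypothetical, and the rounding and scaling used by libswresample may differ.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Converts planar float audio (planes[channel][sample]) into interleaved
// signed 16-bit PCM. Illustration only, not the libswresample algorithm.
std::vector<int16_t> planarFloatToInterleavedS16(
        const std::vector<std::vector<float>>& planes) {
    assert(!planes.empty());
    const std::size_t channels = planes.size();
    const std::size_t samples = planes[0].size();

    std::vector<int16_t> out(channels * samples);
    for (std::size_t s = 0; s < samples; ++s) {
        for (std::size_t c = 0; c < channels; ++c) {
            assert(planes[c].size() == samples);
            // Clamp to the valid range, then scale to the 16-bit range.
            const float v = std::clamp(planes[c][s], -1.0f, 1.0f);
            out[s * channels + c] = static_cast<int16_t>(std::lround(v * 32767.0f));
        }
    }
    return out;
}
```

The size of the result in bytes is channels × samples × 2, which is the same arithmetic convertAudio uses for bufferLen: out_channels × len × bytes per sample.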

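Input

The input part consists of a single decoding thread, which decodes audio and video data and stores it in the corresponding data queues. It contains two classes, InputThread and InputFormat. InputFormat wraps FFmpeg's audio/video decoding process; InputThread instantiates InputFormat, reads data from it, and stores that data in the corresponding audio and video queues.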
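The routing step of the decoding thread can be sketched without FFmpeg as follows. Everything here is hypothetical: `DecodedFrame` stands in for the project's frame wrapper, `readFrame` stands in for whatever InputFormat exposes, and plain `std::deque` containers stand in for the real thread-safe queues.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <functional>
#include <optional>

enum class StreamKind { Audio, Video };

// Hypothetical stand-in for a decoded frame and its timestamp.
struct DecodedFrame {
    StreamKind kind;
    int64_t pts;
};

// Pulls frames from readFrame until it reports end of stream (std::nullopt)
// and appends each one to the queue that matches its stream kind.
// Returns the number of frames dispatched.
std::size_t dispatchFrames(
        const std::function<std::optional<DecodedFrame>()>& readFrame,
        std::deque<DecodedFrame>& audioQueue,
        std::deque<DecodedFrame>& videoQueue) {
    assert(readFrame);
    std::size_t count = 0;
    while (auto frame = readFrame()) {
        if (frame->kind == StreamKind::Audio)
            audioQueue.push_back(*frame);
        else
            videoQueue.push_back(*frame);
        ++count;
    }
    return count;
}
```

In a real player this loop would run inside the thread's run() function and would also check for an interruption request on each iteration.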

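Video rendering

A video is simply a sequence of images shown on screen one after another at fixed time intervals. To render video with Qt, each decoded AVFrame only needs to be converted into an image that Qt can display. A QWidget can draw an image (QImage/QPixmap) with a QPainter inside its paintEvent handler, so rendering video with a QWidget comes down to taking an AVFrame from the queue, converting it into a QImage, and painting that QImage onto the widget. The relevant code is as follows:

Rendering the QImage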

    void VideoRender::paintEvent(QPaintEvent *event)
    {
        QPainter painter(this);
        painter.setRenderHint(QPainter::Antialiasing, true);

        // Fill the widget with a grey background.
        painter.setBrush(QColor(0xcccccc));
        painter.drawRect(0,0,this->width(),this->height());

        // Draw the most recent video frame, if there is one.
        if(!frame.isNull())
        {
            painter.drawImage(QPoint(0,0),frame);
        }
    }

Image conversion

    void VideoThread::run()
    {
        AvFrameContext *videoFrame = nullptr;
        ImageConvert *imageContext = nullptr;
        int64_t realTime = 0;
        int64_t lastPts = 0;
        int64_t delay = 0;
        int64_t lastDelay = 0;

        while (!isInterruptionRequested())
        {
            videoFrame = dataContext->getFrame();
            if(videoFrame == nullptr)
                break;

            // Recreate the converter when the source size or the target size changes.
            if(imageContext != nullptr && (imageContext->in_width != videoFrame->frame->width ||
                                           imageContext->in_height != videoFrame->frame->height ||
                                           imageContext->out_width != size.width() ||
                                           imageContext->out_height != size.height()))
            {
                delete imageContext;
                imageContext = nullptr;
            }
            if(imageContext == nullptr)
                imageContext = new ImageConvert(videoFrame->pixelFormat,
                                                videoFrame->frame->width,
                                                videoFrame->frame->height,
                                                AV_PIX_FMT_RGB32,
                                                size.width(),
                                                size.height());
            imageContext->convertImage(videoFrame->frame);

            if(audioRender != nullptr)
            {
                // The audio clock is the reference for synchronization.
                realTime = audioRender->getCurAudioTime();
                if(lastPts == 0)
                    lastPts = videoFrame->pts;
                lastDelay = delay;
                delay = videoFrame->pts - lastPts;
                lastPts = videoFrame->pts;

                // Implausible frame interval: fall back to the previous one.
                if(delay < 0 || delay > 1000000)
                {
                    delay = lastDelay;
                }
                if(delay != 0)
                {
                    // Video is ahead of the audio clock, so wait before showing the frame.
                    if(videoFrame->pts > realTime)
                        QThread::usleep(static_cast<unsigned long>(delay * 1.5));
                    // else
                    //     QThread::usleep(delay / 1.5);
                }
            }

            // QImage does not copy an external buffer, and the next convertImage()
            // call overwrites it, so emit a deep copy.
            QImage img(imageContext->buffer, size.width(), size.height(), QImage::Format_RGB32);
            emit onFrame(img.copy());
            delete videoFrame;
        }
        delete imageContext;
    }
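The timing decision inside the loop above can be isolated as a small pure function, which makes it easier to reason about and test. The sketch below mirrors the logic of the listing; the names `SyncState` and `computeSleepUs` are hypothetical, and all times are assumed to be in microseconds.

```cpp
#include <cstdint>

// State carried from one video frame to the next (hypothetical helper type).
struct SyncState {
    int64_t lastPts = 0;  // timestamp of the previous frame
    int64_t delay = 0;    // last accepted interval between frames
};

// Returns how long to sleep before showing the frame at framePts,
// given the current audio clock. Mirrors the loop logic shown above.
int64_t computeSleepUs(SyncState& st, int64_t framePts, int64_t audioClock) {
    if (st.lastPts == 0)
        st.lastPts = framePts;

    const int64_t lastDelay = st.delay;
    st.delay = framePts - st.lastPts;
    st.lastPts = framePts;

    // A negative or very large interval (over one second) is treated as
    // implausible, so the previous interval is reused instead.
    if (st.delay < 0 || st.delay > 1000000)
        st.delay = lastDelay;

    // Sleep only when the video frame is ahead of the audio clock.
    if (st.delay != 0 && framePts > audioClock)
        return static_cast<int64_t>(st.delay * 1.5);
    return 0;
}
```

For example, with frames 40 ms apart, a frame that is ahead of the audio clock waits 60 ms, while a frame that is already behind the audio clock is shown immediately so the video can catch up.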

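Audio rendering

The audio renderer uses QAudioOutput from the Qt Multimedia module. It opens an audio output; the audio conversion thread then takes data from the data queue, converts its format, and writes it into the audio output's buffer. The current audio playback time is the timestamp of the audio frame being written minus the time needed to play the audio data still remaining in the buffer. This time serves as the synchronization clock: the video rendering thread synchronizes audio and video against the audio renderer's current time.

The time is computed as follows: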

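    int64_t AudioRender::getCurAudioTime()
    {
        // Amount of audio data treated as still waiting in the output buffer, in bytes.
        int64_t size = audioOutput->bufferSize() - outputBuffer->bytesAvailable();

        // Hard-coded output format: 44100 Hz, 2 channels, 2 bytes per sample.
        int bytes_per_sec = 44100 * 2 * 2;

        // Timestamp of the last written frame minus the buffered duration, in microseconds.
        int64_t pts = this->curPts - static_cast<int64_t>(static_cast<double>(size) / bytes_per_sec * 1000000);
        return pts;
    }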
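The same calculation can be written as a standalone function that takes the audio format as a parameter instead of hard-coding 44100 × 2 × 2. This is a sketch under the assumption that timestamps are in microseconds; `AudioFormat` and `audioClockUs` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical description of the output PCM format.
struct AudioFormat {
    int sampleRate;      // samples per second, e.g. 44100
    int channels;        // e.g. 2 for stereo
    int bytesPerSample;  // e.g. 2 for 16-bit samples
};

// Audio clock = timestamp of the last written frame minus the playing time
// of the bytes that are still buffered and have not been heard yet.
int64_t audioClockUs(int64_t lastWrittenPtsUs, int64_t bufferedBytes,
                     const AudioFormat& fmt) {
    const int64_t bytesPerSec =
        static_cast<int64_t>(fmt.sampleRate) * fmt.channels * fmt.bytesPerSample;
    assert(bytesPerSec > 0);
    return lastWrittenPtsUs - bufferedBytes * 1000000 / bytesPerSec;
}
```

With 44.1 kHz 16-bit stereo, one second of audio is 176400 bytes, so 88200 buffered bytes put the clock 0.5 s behind the last written timestamp.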

Source code for this article: https://github.com/Keanhe/QtVideoPlayer