ELK Log Collection System (Part 3): Deploying ELK on a Single Host and as a Cluster with docker-compose

- 1: Installing docker-compose
- 2: A small web example with docker-compose
- 3: Setting up ELK on a single host
- 4: Multi-host ELK deployment
- 4.1 Enabling docker swarm
- 4.2 Starting the cluster

1: Installing docker-compose

For the official installation instructions, see: docker-compose install

Installation method 1:

# Note the docker-compose version in the URL below

sudo curl -L "https://github.com/docker/compose/releases/download/1.23.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

docker-compose --version
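
The URL in the curl command embeds the release version together with the output of `uname -s` and `uname -m`. As a rough illustration, the same URL can be assembled in Python. `compose_download_url` is a hypothetical helper, not part of docker-compose, and the sketch assumes that `platform.system()` and `platform.machine()` return the same strings as `uname` does on the target host, which is the case on typical Linux systems.

```python
import platform


def compose_download_url(version, system=None, machine=None):
    """Build the GitHub release URL used by the curl command above.

    Hypothetical helper for illustration only. system and machine default
    to the local platform, mirroring `uname -s` and `uname -m`.
    """
    system = system or platform.system()      # e.g. "Linux"
    machine = machine or platform.machine()   # e.g. "x86_64"
    return (
        "https://github.com/docker/compose/releases/download/"
        "{}/docker-compose-{}-{}".format(version, system, machine)
    )


if __name__ == "__main__":
    print(compose_download_url("1.23.1"))
```

Changing only the `version` argument is the equivalent of editing the version number in the URL by hand.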

Installation method 2:

pip install docker-compose

2: A small web example with docker-compose

The files and directories used are as follows:

[root@node01 case1-flask-web]# tree
.
├── docker-compose.yml
├── Dockerfile
└── flask-web-code
    ├── app.py
    └── requirements.txt

1 directory, 4 files

The contents of app.py are as follows:

#!/usr/bin/env python
# encoding: utf-8
import time

import redis
from flask import Flask

app = Flask(__name__)
# "redis" is the service name defined in docker-compose.yml and resolves on
# the shared "web" network; 6379 is the default Redis port.
cache = redis.Redis(host='redis', port=6379)


def get_hit_count():
    # Retry a few times in case Redis is not accepting connections yet
    retries = 5
    while True:
        try:
            return cache.incr('hits')
        except redis.exceptions.ConnectionError as exc:
            if retries == 0:
                raise exc
            retries -= 1
            time.sleep(0.5)


@app.route('/')
def main():
    count = get_hit_count()
    return "Welcome! This site has been visited {} times\n".format(count)


if __name__ == '__main__':
    app.run(host='0.0.0.0', debug=True)
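
The retry loop in `get_hit_count` exists because the web container may start before Redis is ready to accept connections. The pattern can be tried out without Redis or Flask. In the sketch below, `call_with_retries` and `FlakyCounter` are hypothetical names introduced for illustration, and the built-in `ConnectionError` stands in for the Redis client's connection error.

```python
import time


def call_with_retries(func, retries=5, delay=0.5, exceptions=(Exception,)):
    """Call func(); on a listed exception, retry up to `retries` more times."""
    while True:
        try:
            return func()
        except exceptions:
            if retries == 0:
                raise  # out of retries: propagate the last error
            retries -= 1
            time.sleep(delay)


class FlakyCounter:
    """Stand-in for Redis: fails a fixed number of times, then counts."""

    def __init__(self, failures):
        self.failures = failures
        self.hits = 0

    def incr(self):
        if self.failures > 0:
            self.failures -= 1
            raise ConnectionError("backend not ready")
        self.hits += 1
        return self.hits


if __name__ == "__main__":
    counter = FlakyCounter(failures=2)
    # Two failed attempts are absorbed; the third call succeeds
    print(call_with_retries(counter.incr, delay=0.01,
                            exceptions=(ConnectionError,)))
```

Catching only the connection error, rather than every exception, keeps genuine bugs visible instead of hiding them behind retries.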

requirements.txt lists the dependencies that app.py needs in order to run. Its contents are as follows:

[root@node01 case1-flask-web]# cat flask-web-code/requirements.txt
redis
flask

The contents of the Dockerfile are as follows:

[root@node01 case1-flask-web]# cat Dockerfile
# flask web app v1.0
FROM python:alpine3.6
COPY ./flask-web-code /code
WORKDIR /code
RUN pip install -r requirements.txt
CMD ["python","app.py"]

The contents of docker-compose.yml are as follows:

[root@node01 case1-flask-web]# cat docker-compose.yml

version: "3"
services:
  flask-web:
    build: .
    ports:
      - "5000:5000"
    container_name: flask-web
    networks:
      - web
  redis:
    image: redis
    container_name: redis
    networks:
      - web
    volumes:
      - redis-data:/data
networks:  # create a network named web
  web:
    driver: "bridge"
volumes:  # create a data volume named redis-data
  redis-data:
    driver: "local"

Check docker-compose.yml for syntax errors:

docker-compose config # run this in the directory that contains docker-compose.yml
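
One kind of mistake this check reports is a service that refers to a network or named volume missing from the top-level `networks:` or `volumes:` sections. The sketch below illustrates only that single check and is not how docker-compose itself is implemented. `find_undeclared_refs` is a hypothetical helper, and the compose file is written as the dict a YAML parser would produce so that the example needs no third-party packages.

```python
def find_undeclared_refs(compose):
    """Return (service, kind, name) tuples for networks or named volumes
    that a service uses but the top-level sections do not declare."""
    declared_networks = set(compose.get("networks") or {})
    declared_volumes = set(compose.get("volumes") or {})
    problems = []
    for name, service in (compose.get("services") or {}).items():
        for net in service.get("networks", []):
            if net not in declared_networks:
                problems.append((name, "network", net))
        for vol in service.get("volumes", []):
            source = vol.split(":", 1)[0]
            # Paths (./x, /x, ~/x) are bind mounts, not named volumes
            if source.startswith((".", "/", "~")):
                continue
            if source not in declared_volumes:
                problems.append((name, "volume", source))
    return problems


# The flask-web example from this section, as a parsed dict
compose = {
    "version": "3",
    "services": {
        "flask-web": {"build": ".", "ports": ["5000:5000"],
                      "networks": ["web"]},
        "redis": {"image": "redis", "networks": ["web"],
                  "volumes": ["redis-data:/data"]},
    },
    "networks": {"web": {"driver": "bridge"}},
    "volumes": {"redis-data": {"driver": "local"}},
}

if __name__ == "__main__":
    print(find_undeclared_refs(compose))
```

Removing the top-level `volumes` entry from the dict makes the helper report `redis-data` as undeclared.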

Start the services with docker-compose:

docker-compose up -d

3: Setting up ELK on a single host

Official Elasticsearch documentation for installing with docker-compose:

https://www.docker.elastic.co/#

https://www.elastic.co/guide/en/elasticsearch/reference/6.6/docker.html

The contents of docker-compose.yml are as follows:

version: '2.2'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.6.2
    container_name: elasticsearch
    environment:
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - esdata1:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - esnet
  elasticsearch2:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.6.2
    container_name: elasticsearch2
    environment:
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - "discovery.zen.ping.unicast.hosts=elasticsearch"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - esdata2:/usr/share/elasticsearch/data
    networks:
      - esnet
  logstash:
    image: docker.elastic.co/logstash/logstash:6.6.2
    container_name: logstash
    networks:
      - esnet
    environment:
      - "LS_JAVA_OPTS=-Xms256m -Xmx256m"
    depends_on:
      - elasticsearch
      - elasticsearch2
  kibana:
    image: docker.elastic.co/kibana/kibana:6.6.2
    container_name: kibana
    networks:
      - esnet
    ports:
      - "5601:5601"
    depends_on:
      - elasticsearch
      - elasticsearch2
volumes:
  esdata1:
    driver: local
  esdata2:
    driver: local
networks:
  esnet:

Test access to Elasticsearch:

[root@node01 elk]# curl 127.0.0.1:9200

{
  "name" : "TlZE8Cd",
  "cluster_name" : "docker-cluster",
  "cluster_uuid" : "g6xjoIdsTvuH2R0ibq86JA",
  "version" : {
    "number" : "6.6.2",
    "build_flavor" : "default",
    "build_type" : "tar",
    "build_hash" : "3bd3e59",
    "build_date" : "2019-03-06T15:16:26.864148Z",
    "build_snapshot" : false,
    "lucene_version" : "7.6.0",
    "minimum_wire_compatibility_version" : "5.6.0",
    "minimum_index_compatibility_version" : "5.0.0"
  },
  "tagline" : "You Know, for Search"
}
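
When this check is scripted, the JSON body can be parsed instead of read by eye. The sketch below is one way to do it, assuming the response has the shape shown above. `summarize_es_info` is a hypothetical helper, and `sample` is an abbreviated copy of the output from this section rather than a live response.

```python
import json


def summarize_es_info(body):
    """Extract the cluster name and version fields from the JSON that
    Elasticsearch returns on its root endpoint."""
    info = json.loads(body)
    return {
        "cluster_name": info["cluster_name"],
        "version": info["version"]["number"],
        "lucene_version": info["version"]["lucene_version"],
    }


# Abbreviated copy of the response shown above
sample = """
{
  "name": "TlZE8Cd",
  "cluster_name": "docker-cluster",
  "version": {"number": "6.6.2", "lucene_version": "7.6.0"},
  "tagline": "You Know, for Search"
}
"""

if __name__ == "__main__":
    summary = summarize_es_info(sample)
    # The cluster name should match cluster.name in docker-compose.yml
    assert summary["cluster_name"] == "docker-cluster"
    print(summary)
```

Comparing `cluster_name` with the `cluster.name` value set in the compose file confirms that the response comes from the container just started and not from another Elasticsearch instance on the host.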

Test access to Kibana: http://IP:5601

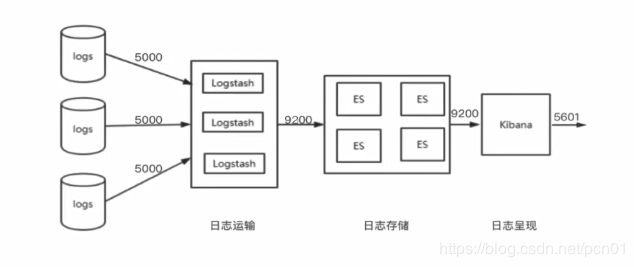

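4: Multi-host ELK deployment

This section deploys an ELK cluster across two hosts as an example. A multi-host cluster deployment relies on docker swarm.

4.1 Enabling docker swarm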

[root@node01 ~]# docker swarm init
Swarm initialized: current node (10yazab81gljnr55d6rgzwdkt) is now a manager.

To add a worker to this swarm, run the following command:

    docker swarm join \
    --token SWMTKN-1-4vkyt0jr4ws4yklpwrp1koas213fzwpqnj8xceb324dst7k1ca-16rcj6szgan37o1zd8s6j04na \
    172.17.2.239:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

# Save the docker swarm join ... command shown above;
# the other hosts need it to join the cluster
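
If the output of `docker swarm init` is captured by a script, the join token and manager address can be pulled out of it rather than copied by hand. The following is a minimal sketch that assumes the output format shown above. `parse_join_command` and `build_join_command` are hypothetical helpers, and the regular expression is tailored to this output, not to any documented format guarantee.

```python
import re

# Matches "--token <token>", an optional line-continuation backslash,
# then "<host>:<port>"
JOIN_RE = re.compile(r"--token\s+(\S+)\s+\\?\s*(\S+:\d+)")


def parse_join_command(init_output):
    """Return (token, address) found in `docker swarm init` output."""
    match = JOIN_RE.search(init_output)
    if match is None:
        raise ValueError("no join command found in output")
    return match.group(1), match.group(2)


def build_join_command(token, address):
    """Rebuild the single-line command to run on the other hosts."""
    return "docker swarm join --token {} {}".format(token, address)


# Relevant part of the output shown above
sample = r"""
To add a worker to this swarm, run the following command:

    docker swarm join \
    --token SWMTKN-1-4vkyt0jr4ws4yklpwrp1koas213fzwpqnj8xceb324dst7k1ca-16rcj6szgan37o1zd8s6j04na \
    172.17.2.239:2377
"""

if __name__ == "__main__":
    token, address = parse_join_command(sample)
    print(build_join_command(token, address))
```

If the original output is lost, running `docker swarm join-token worker` on the manager prints the join command again.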

List the nodes currently in the cluster:

[root@node01 ~]# docker node ls
ID                           HOSTNAME            STATUS  AVAILABILITY  MANAGER STATUS
10yazab81gljnr55d6rgzwdkt *  node01.adminba.com  Ready   Active        Leader
# The host listed above is the local machine

Run the join command on the other host to add it to the cluster:

[root@node02 ~]# docker swarm join --token SWMTKN-1-4vkyt0jr4ws4yklpwrp1koas213fzwpqnj8xceb324dst7k1ca-16rcj6szgan37o1zd8s6j04na 172.17.2.239:2377

This node joined a swarm as a worker.

List the cluster nodes again:

[root@node01 ~]# docker node ls
ID                           HOSTNAME            STATUS  AVAILABILITY  MANAGER STATUS
10yazab81gljnr55d6rgzwdkt *  node01.adminba.com  Ready   Active        Leader
zl62ro2c1qg4l9bowqdoof1j2    node02.adminba.com  Ready   Active

Once docker swarm is enabled, two additional networks appear:

[root@node01 ~]# docker network ls
NETWORK ID    NAME             DRIVER   SCOPE
0a0f9dc6cc3c  bridge           bridge   local
e68ced357b66  docker_gwbridge  bridge   local  # newly added; gives containers access to external networks
1220950fefa2  host             host     local
c2z09todfds5  ingress          overlay  swarm  # newly added; carries traffic between the nodes of the cluster
02b0466d2fda  none             null     local

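4.2 Starting the cluster

The contents of docker-compose.yml are as follows:

# Compared with the single-host docker-compose file above, the options omitted here are the ones that are not supported in swarm mode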

[root@node01 swarm-elk]# cat docker-compose.yml

version: "3.6"
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.6.2
    environment:
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=false
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    volumes:
      - esdata1:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - esnet
    deploy:
      placement:
        constraints:
          - node.role == manager
  elasticsearch2:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.6.2
    environment:
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=false
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
      - "discovery.zen.ping.unicast.hosts=elasticsearch"
    volumes:
      - esdata2:/usr/share/elasticsearch/data
    networks:
      - esnet
    deploy:
      placement:
        constraints:
          - node.role == worker
  logstash:
    image: docker.elastic.co/logstash/logstash:6.6.2
    networks:
      - esnet
    environment:
      - "LS_JAVA_OPTS=-Xms256m -Xmx256m"
    deploy:
      replicas: 2
  logstash2:
    image: docker.elastic.co/logstash/logstash:6.6.2
    networks:
      - esnet
    environment:
      - "LS_JAVA_OPTS=-Xms256m -Xmx256m"
    deploy:
      replicas: 2
  kibana:
    image: docker.elastic.co/kibana/kibana:6.6.2
    networks:
      - esnet
    ports:
      - "5601:5601"
    deploy:
      placement:
        constraints:
          - node.role == manager
volumes:
  esdata1:
    driver: local
  esdata2:
    driver: local
networks:
  esnet:
    driver: "overlay"
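
The note at the top of this file says that the options missing from it, compared with the single-host version, were left out for swarm. To see exactly which options those are, the two files can be compared service by service. The sketch below does this over the option names only. `dropped_options` is a hypothetical helper, and the two dicts are hand-copied key lists from the two compose files in this article, not parsed YAML.

```python
def dropped_options(single_host, swarm):
    """For each service defined in both files, list the option keys that
    the single-host definition sets but the swarm definition omits."""
    result = {}
    for name, service in single_host.items():
        if name not in swarm:
            continue
        missing = sorted(set(service) - set(swarm[name]))
        if missing:
            result[name] = missing
    return result


# Option names per service, copied from the two compose files
single_host = {
    "elasticsearch": dict.fromkeys(
        ["image", "container_name", "environment", "ulimits",
         "volumes", "ports", "networks"]),
    "logstash": dict.fromkeys(
        ["image", "container_name", "networks", "environment",
         "depends_on"]),
    "kibana": dict.fromkeys(
        ["image", "container_name", "networks", "ports", "depends_on"]),
}
swarm = {
    "elasticsearch": dict.fromkeys(
        ["image", "environment", "volumes", "ports", "networks", "deploy"]),
    "logstash": dict.fromkeys(
        ["image", "networks", "environment", "deploy"]),
    "kibana": dict.fromkeys(["image", "networks", "ports", "deploy"]),
}

if __name__ == "__main__":
    for service, options in dropped_options(single_host, swarm).items():
        print(service, options)
```

For these two files the differences are `container_name`, `ulimits`, and `depends_on`. With the memlock `ulimits` gone, `bootstrap.memory_lock` is also switched from `true` to `false` in the swarm file.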

Command to start the stack:

docker stack deploy -c docker-compose.yml elk
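
After deployment, swarm names each service `<stack>_<service>` and, in the default replicated mode, runs one task per service unless `deploy.replicas` says otherwise. A quick sanity check is to work out how many tasks the file asks for and compare that with what `docker stack services elk` reports. The sketch below computes the expected numbers only. `expected_tasks` is a hypothetical helper, and the dict is a hand-written reduction of the stack file above to its `deploy` sections.

```python
def expected_tasks(services, stack):
    """Return {full service name: replica count}, defaulting to 1 when
    deploy.replicas is not set."""
    return {
        "{}_{}".format(stack, name):
            (svc.get("deploy") or {}).get("replicas", 1)
        for name, svc in services.items()
    }


# deploy sections from the swarm compose file above
services = {
    "elasticsearch": {"deploy": {"placement": {
        "constraints": ["node.role == manager"]}}},
    "elasticsearch2": {"deploy": {"placement": {
        "constraints": ["node.role == worker"]}}},
    "logstash": {"deploy": {"replicas": 2}},
    "logstash2": {"deploy": {"replicas": 2}},
    "kibana": {"deploy": {"placement": {
        "constraints": ["node.role == manager"]}}},
}

if __name__ == "__main__":
    tasks = expected_tasks(services, "elk")
    for name in sorted(tasks):
        print(name, tasks[name])
    print("total", sum(tasks.values()))
```

A service whose reported replica count stays below the expected number usually means a task could not be scheduled or keeps restarting.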