Mastering Kubernetes (k8s), Part 1: Installing k8s with kubeadm

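This is the first time I have committed to writing a complete column, and from now on I will post new installments before midnight each week. I assume that readers of this article already have some operations experience.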

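Back to the topic. I do not plan to open with a pile of concepts, which would be tiring to read and leave you confused, so on day one I will simply walk you through setting up a k8s environment.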

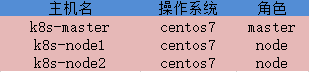

I. Environment preparation and role planning

1. Disable SELinux.

2. Disable the firewall.

3. Configure /etc/hosts and set the hostname.
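Steps 1 to 3 name the tasks without showing commands. The following is a minimal sketch of one way to carry them out on CentOS 7, not the author's exact procedure. The addresses for k8s-node1 and k8s-node2 are placeholders (only the master address 20.0.40.50 appears in this article), and the `run` helper with its `DRY_RUN` switch is a convenience invented for this example.

```shell
#!/bin/sh
# Sketch only. The node1/node2 addresses below are placeholders; use your own.
NODES='20.0.40.50 k8s-master
20.0.40.51 k8s-node1
20.0.40.52 k8s-node2'

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: prepare_node <hostname>   (run as root on each machine)
prepare_node() {
  # 1. SELinux: permissive now, disabled after the next reboot.
  run setenforce 0
  run sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
  # 2. Firewall: stop firewalld and keep it from starting at boot.
  run systemctl stop firewalld
  run systemctl disable firewalld
  # 3. Hostname and name resolution for all three machines.
  run hostnamectl set-hostname "$1"
  if [ -n "${DRY_RUN:-}" ]; then
    echo "append to /etc/hosts:"
    echo "$NODES"
  else
    echo "$NODES" >> /etc/hosts
  fi
}
```

Calling `DRY_RUN=1 prepare_node k8s-node1` prints the commands without changing anything, which is a cheap way to review them before running the function for real as root.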

4. Synchronize the time

yum -y install ntp

ntptime

timedatectl

systemctl enable ntpd

systemctl restart ntpd.service

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

II. Installing Docker

All steps under heading II must be performed on all three servers.

1. Install the yum utilities and their dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

2. Add the yum repository

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

3. List the available Docker versions

We use docker-ce-18.09.6 here.

yum list docker-ce --showduplicates | sort -r

yum -y install docker-ce-18.09.6

4. Start the Docker service and enable it at boot

systemctl start docker && systemctl enable docker

5. Configure a registry mirror inside China

echo '{"registry-mirrors":["https://361z9p6b.mirror.aliyuncs.com"]}' > /etc/docker/daemon.json

III. Installing a Kubernetes cluster with kubeadm

1. Add the k8s yum repository. Because we cannot download directly from Google, we switch to the Aliyun mirror here (note: do this on all three VMs).

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2. Temporarily disable swap (note: run on all three VMs)

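Starting with Kubernetes 1.8, system swap must be turned off; if it is left on, kubelet will not start under the default configuration. You can lift this restriction by adding --fail-swap-on=false to the kubelet startup arguments in /etc/sysconfig/kubelet (the configuration file shown in step 4).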
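The `swapoff -a` command used in this step only lasts until the next reboot. The sketch below goes slightly beyond the original steps: it comments out swap entries in an fstab-style file so that swap stays off after a restart. The function name `disable_swap_entries` is hypothetical, and the pattern assumes that swap lines contain `swap` as a whitespace-separated field.

```shell
#!/bin/sh
# Usage: disable_swap_entries [fstab-path]   (defaults to /etc/fstab, needs root)
disable_swap_entries() {
  fstab="${1:-/etc/fstab}"
  # Prefix every uncommented line that has a "swap" field with '#'.
  # A backup of the original file is kept as <file>.bak.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Running it against a copy of /etc/fstab first and comparing the result with the original is a safe way to confirm that only the swap line changes.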

swapoff -a

3. Install and start the components (we install k8s 1.14.2 here; note: run on all three VMs)

yum install -y kubelet-1.14.2 kubeadm-1.14.2 kubectl-1.14.2 --disableexcludes=kubernetes

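# --disableexcludes=kubernetes tells yum to ignore any exclude rules configured for the kubernetes repository, so that these packages can be installed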

systemctl enable kubelet && systemctl start kubelet

4. Change the swap restriction (note: run on all three VMs)

# cat /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS=--fail-swap-on=false

5. Create an init-config.yaml file to customize the image repository address and the Pod subnet (note: create this file only on k8s-master)

podSubnet: set the address range to whatever suits your environment.
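Since the subnet is the one value you are expected to change, the file can also be generated from a small helper. This is only a sketch: the function name `write_init_config` is hypothetical, and all other values are copied from the file used in this article. Note that `podSubnet` must be indented under `networking`.

```shell
#!/bin/sh
# Usage: write_init_config <pod-subnet-cidr> [output-path]
write_init_config() {
  subnet="$1"
  out="${2:-init-config.yaml}"
  # The heredoc is unquoted so that $subnet is expanded into the file.
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta1
kind: ClusterConfiguration
imageRepository: docker.io/dustise
kubernetesVersion: v1.14.0
networking:
  podSubnet: "$subnet"
EOF
}
```

For example, `write_init_config 192.168.0.0/16` reproduces the file used in this article in the current directory.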

# cat init-config.yaml

apiVersion: kubeadm.k8s.io/v1beta1

kind: ClusterConfiguration

imageRepository: docker.io/dustise

kubernetesVersion: v1.14.0

networking:

  podSubnet: "192.168.0.0/16"

6. Pull the images k8s-master needs, along with their dependencies (note: only on k8s-master)

This step takes a while, depending on your network speed.

# kubeadm config images pull --config=init-config.yaml

[config/images] Pulled docker.io/dustise/kube-apiserver:v1.14.0

[config/images] Pulled docker.io/dustise/kube-controller-manager:v1.14.0

[config/images] Pulled docker.io/dustise/kube-scheduler:v1.14.0

[config/images] Pulled docker.io/dustise/kube-proxy:v1.14.0

[config/images] Pulled docker.io/dustise/pause:3.1

[config/images] Pulled docker.io/dustise/etcd:3.3.10

[config/images] Pulled docker.io/dustise/coredns:1.3.1

7. Edit /usr/lib/systemd/system/docker.service (note: on all three VMs)

ExecStart=/usr/bin/dockerd --exec-opt native.cgroupdriver=systemd

After editing the file, have systemd reload it, then restart Docker.

systemctl daemon-reload

systemctl restart docker

8. Install the k8s master (note: only on k8s-master)

kubeadm init --config=init-config.yaml

If the following error is reported during execution:

[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]:

/proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

To fix it, run the following command:

echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

Then run the kubeadm initialization again.
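Writing to /proc changes the setting only until the next reboot, and the file exists only while the br_netfilter kernel module is loaded. The sketch below shows one common way to make the setting persistent through /etc/sysctl.d; the file name k8s.conf and both function names are choices made for this example, not something the article prescribes.

```shell
#!/bin/sh
# Usage: write_bridge_sysctl [conf-path]   (defaults to /etc/sysctl.d/k8s.conf)
write_bridge_sysctl() {
  conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
EOF
}

# Run as root on each machine: load the module now and at boot, then apply.
apply_bridge_sysctl() {
  modprobe br_netfilter
  echo br_netfilter > /etc/modules-load.d/k8s.conf
  write_bridge_sysctl
  sysctl --system
}
```

After `apply_bridge_sysctl` has run, `cat /proc/sys/net/bridge/bridge-nf-call-iptables` should print 1, including after a reboot.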

The output looks like this:

[root@k8s-1master ~]# kubeadm init --config=init-config.yaml

[init] Using Kubernetes version: v1.14.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-1master localhost] and IPs [20.0.40.50 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-1master localhost] and IPs [20.0.40.50 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-1master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 20.0.40.50]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 17.001456 seconds

[upload-config] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.14" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --experimental-upload-certs

[mark-control-plane] Marking the node k8s-1master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-1master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 3wtp5h.dmuu9sn3bryfg4mj

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 20.0.40.50:6443 --token 3wtp5h.dmuu9sn3bryfg4mj \

--discovery-token-ca-cert-hash sha256:833c7a61fe7500401789395477ce874199986209205a145f6c8052d56fd92fe1

As the output instructs, copy the configuration file into the user's home directory:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

View the ConfigMap

Check the initialization status
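The commands for these two checks are not shown here. One plausible way to perform them is sketched below, assuming kubectl has already been configured through $HOME/.kube/config. The ConfigMap name kubeadm-config is taken from the kubeadm init output above; the `KUBECTL` variable and the function name `check_init` are illustrative only.

```shell
#!/bin/sh
# KUBECTL can be overridden, for example to point at a different binary.
KUBECTL="${KUBECTL:-kubectl}"

check_init() {
  # ConfigMaps created by kubeadm init live in the kube-system namespace.
  "$KUBECTL" get configmap -n kube-system
  # The configuration that kubeadm stored for this cluster.
  "$KUBECTL" get configmap kubeadm-config -n kube-system -o yaml
  # Control-plane pods; CoreDNS stays Pending until a network plugin is installed.
  "$KUBECTL" get pods -n kube-system -o wide
}
```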

9. Install the Weave network plugin

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

10. Add the nodes to the cluster

On k8s-master, print the command for adding a node:

kubeadm token create --print-join-command

Run the printed command on both k8s-node1 and k8s-node2.

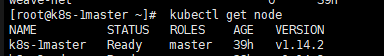
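If root SSH access from k8s-master to the two workers is available (an assumption; this article does not set it up), the join step can be scripted instead of pasted by hand. The sketch below is one way to do that; `WORKERS`, `run`, and `join_workers` are names invented for this example.

```shell
#!/bin/sh
# Worker hostnames as used in this article.
WORKERS="k8s-node1 k8s-node2"

# Print the command instead of running it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: join_workers "<join command printed by kubeadm>"
join_workers() {
  for node in $WORKERS; do
    run ssh "root@$node" "$1"
  done
}
```

On the master it could be called as `join_workers "$(kubeadm token create --print-join-command)"`; setting `DRY_RUN=1` first shows the ssh commands without executing them.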

Check the node status

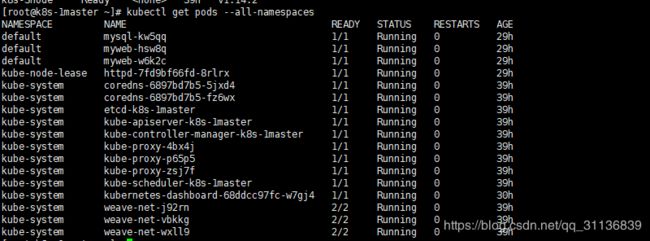

kubectl get node

Check the installation progress of the pods

kubectl get pods --all-namespaces
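Rather than re-running the node check by hand, it can be wrapped in a small polling loop. This is a sketch under two assumptions: kubectl can reach the API server, and STATUS is the second column of `kubectl get nodes --no-headers` (the default table layout). The helper names are hypothetical.

```shell
#!/bin/sh
# Reads `kubectl get nodes --no-headers` output on stdin and prints how many
# nodes are not in the plain "Ready" state (STATUS is the second column).
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Poll every 5 seconds until every listed node reports Ready.
# If kubectl prints nothing (for example, it cannot connect), the count is 0
# and the loop ends immediately, so check connectivity first.
wait_for_nodes() {
  while [ "$(kubectl get nodes --no-headers | count_not_ready)" -gt 0 ]; do
    sleep 5
  done
}
```

Nodes typically show NotReady until the network plugin pods are running, so `wait_for_nodes` is most useful right after the Weave step and the joins.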

At this point our k8s cluster is essentially deployed.

Thanks for reading. If you run into any problems during deployment, feel free to leave a comment at any time.

Tomorrow I will walk you through installing the dashboard, deploying sample workloads, and more.