Revisiting ELK + Filebeat (Part 2)

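I'm not sure whether it's because of the version upgrade or because I never wrote down the pitfalls when I set this up a year ago, but now that a project needs it again a year later, I have to dig my way out of the same holes all over again.

Guides to setting up ELK online are plentiful but scattered. The official documentation at https://www.elastic.co/guide/index.html is clear and well organized, though some small details are not… I won't go over installation and basic configuration here.

1: Filebeat is installed directly on the host rather than in Docker, because reading the log files mounted by every instance from inside a Filebeat container would be cumbersome (`curl -L -O https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.6.1-linux-x86_64.tar.gz`). Reference: the official Elastic docs are a solid foundation.

2: Step 2: Configure Filebeat (https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-configuration.html)

As usual, here is my own Filebeat configuration (ELK 7.6.1). Each log is identified by a tag, and events flow Filebeat -> Logstash -> Elasticsearch: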

```yaml
#filebeat.config:
#  modules:
#    path: ${path.config}/modules.d/*.yml
#    reload.enabled: false

#filebeat.autodiscover:
#  providers:
#    - type: docker
#      hints.enabled: true

#processors:
#- add_cloud_metadata: ~

#output.elasticsearch:
#  hosts: ['172.18.141.169:9200']
#  username: '${ELASTICSEARCH_USERNAME:}'
#  password: '${ELASTICSEARCH_PASSWORD:}'

#setup.kibana:
#  host: "172.18.141.169:5601"

filebeat.inputs:
- type: log
  enabled: true
  paths:
    # Location of the logs to collect; wildcards are allowed
    - /alex/data/hnjk-web-rest-prod/logs/info.log  # monitored log
  tags: ["rest-info"]  # tags make it possible to collect and route several logs
- type: log
  enabled: true
  paths:
    - /alex/data/hnjk-web-rest-prod/logs/error.log
  tags: ["rest-error"]
- type: log
  enabled: true
  paths:
    - /alex/data/hnjk-cec-oas-prod/logs/info.log
  tags: ["oas-info"]
- type: log
  enabled: true
  paths:
    - /alex/data/hnjk-cec-oas-prod/logs/error.log
  tags: ["oas-error"]

# Output configuration (logs are shipped to Logstash; 5044 is the Logstash port)
output.logstash:
  enabled: true
  hosts: ['172.18.141.169:5044']
```

3: ELK itself runs in Docker; for that setup, see my earlier article https://blog.csdn.net/qq_32447321/article/details/86539610. Here is the Logstash pipeline configuration:

```conf
# Sample Logstash configuration for creating a simple
# Beats -> Logstash -> Elasticsearch pipeline.

input {
  beats {
    port => 5044
  }
}

filter {
  # if "beats_input_codec_plain_applied" in [tags] {
  mutate {
    remove_tag => ["beats_input_codec_plain_applied"]
  }
  # }

  # mutate {
  #   split => ["message", "|"]
  # }

  # split {
  #   field => "message"
  #   terminator => "|"
  # }

  grok {
    # Extract fields
    match => {
      # Timestamp example: a raw log line starts with 2020-03-20 18:04:15.331, ISO 8601 form
      # with milliseconds appended after the dot (".331"); grok matches it with
      # %{TIMESTAMP_ISO8601:dataTime}
      "message" => "(?m)\s*%{TIMESTAMP_ISO8601:dataTime}\|(?[A-Za-z0-9/-]{4,40})\|(?[A-Z]{4,5})\s\|(?[A-Za-z0-9/.]{4,40})\|(?.*) "
      # "message" => "(?\d{4}-\d{2}-\d{2}\s\d{2}:\d{2}:\d{2}.\d{3})\|(?[A-Za-z0-9/-]{4,40})\|(?[A-Z]{4,5})\s\|(?[A-Za-z0-9/.]{4,40})\|(?.*)"
    }
    remove_field => ["message"]
  }
}

output {
  if "rest-info" in [tags] {  # route each log to its own index based on its tag
    elasticsearch {
      hosts => ["172.18.141.169:9200"]
      index => "rest-info-%{+YYYY.MM.dd}"  # index names must be lowercase
      template_overwrite => true
    }
    stdout { codec => rubydebug }
  }
  else if "rest-error" in [tags] {
    elasticsearch {
      hosts => ["172.18.141.169:9200"]
      index => "rest-error-%{+YYYY.MM.dd}"
      template_overwrite => true
    }
    stdout { codec => rubydebug }
  }
  else if "oas-info" in [tags] {
    elasticsearch {
      hosts => ["172.18.141.169:9200"]
      index => "oas-info-%{+YYYY.MM.dd}"
      template_overwrite => true
    }
    stdout { codec => rubydebug }
  }
  else if "oas-error" in [tags] {
    elasticsearch {
      hosts => ["172.18.141.169:9200"]
      index => "oas-error-%{+YYYY.MM.dd}"
      template_overwrite => true
    }
    stdout { codec => rubydebug }
  }
  else {
    elasticsearch {
      hosts => ["172.18.141.169:9200"]
      index => "hnjk-other-%{+YYYY.MM.dd}"
      template_overwrite => true
    }
    stdout { codec => rubydebug }
  }
}
```

The `if` / `else if` chain above has quite a few nested `{}`, so validate the file with `-t`, which checks the configuration without starting Logstash:

```bash
$ ./logstash -t -f /etc/logstash/conf.d/30-logstash.conf
```

If the output ends like the following, the configuration is valid:

```text
Configuration OK
[2017-12-07T19:38:07,082][INFO ][logstash.runner ] Using config.test_and_exit mode. Config Validation Result: OK. Exiting Logstash
```
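The tag-based routing in the `output` block is easy to lose among the braces. Below is a minimal Python sketch of the same decision, assuming the four tags and the `hnjk-other` fallback from the config above; `index_for` and `CST` are hypothetical names for illustration, not part of Logstash. One detail it models: `%{+YYYY.MM.dd}` is rendered from the event's `@timestamp`, which Logstash keeps in UTC, so at UTC+8 events logged before 08:00 local time land in the previous day's index.

```python
from datetime import datetime, timedelta, timezone

# Tags with their own index, checked in the same order as the if / else if chain
ROUTED_TAGS = ("rest-info", "rest-error", "oas-info", "oas-error")
FALLBACK_PREFIX = "hnjk-other"  # the final else branch

# China Standard Time (UTC+8), used only in the example below (hypothetical helper)
CST = timezone(timedelta(hours=8))


def index_for(tags, timestamp: datetime) -> str:
    """Return the index the output block above would choose for an event."""
    # %{+YYYY.MM.dd} formats the event's @timestamp, which Logstash keeps in UTC
    day = timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")
    for tag in ROUTED_TAGS:
        if tag in tags:  # first matching branch wins, like if / else if
            return f"{tag}-{day}"
    return f"{FALLBACK_PREFIX}-{day}"


# index_for(["oas-info"], datetime(2020, 3, 21, 7, 0, tzinfo=CST)) returns
# "oas-info-2020.03.20": 07:00 at UTC+8 is still the previous day in UTC.
```

Because the first matching branch wins, an event carrying several routed tags goes only to the first one in the chain; anything untagged, or tagged only with `beats_input_codec_plain_applied`, falls through to `hnjk-other-*`.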

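About timestamp conversion in Logstash: in the log files a raw line starts like `2020-03-20 18:04:15.331`, which is ISO 8601 form; the key part is the trailing `.331`, the milliseconds. Grok can match it with `%{TIMESTAMP_ISO8601:dataTime}`.

Reference for the built-in grok patterns: https://github.com/elastic/elasticsearch/tree/master/libs/grok/src/main/resources/patterns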
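To check the timestamp handling and the rest of the pattern without a running pipeline, here is a minimal Python sketch against the sample line `2020-03-20 18:04:15.331|http-nio-8080-exec-3|WARN |c.e.h.i.StopWatchHandlerInterceptor|...` from this post. Assumptions: the group names in the post's pattern were lost (the `(?[...])` groups have no names), so `thread`, `level`, `logger` and `msg` are hypothetical; the timestamp regex is narrower than `TIMESTAMP_ISO8601` and only covers this log layout. Two deliberate changes from the post's pattern: `\.` instead of an unescaped `.` before the milliseconds, and `\s*` instead of `\s` after the level, assuming the logger pads levels to five characters so that `ERROR` has no trailing space.

```python
import re
from datetime import datetime

# Sample line from the post
SAMPLE = ("2020-03-20 18:04:15.331|http-nio-8080-exec-3|WARN |"
          "c.e.h.i.StopWatchHandlerInterceptor|"
          "/api/bigScreen/day/infaultTop20 consume 785 millis")

# Python version of the grok pattern. Only dataTime comes from the post;
# thread, level, logger and msg are hypothetical group names.
LINE_RE = re.compile(
    r"\s*(?P<dataTime>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\|"  # narrower than TIMESTAMP_ISO8601
    r"(?P<thread>[A-Za-z0-9/-]{4,40})\|"
    r"(?P<level>[A-Z]{4,5})\s*\|"  # \s* (not \s) so an unpadded "ERROR|" also matches
    r"(?P<logger>[A-Za-z0-9/.]{4,40})\|"
    r"(?P<msg>.*)",
    re.DOTALL,  # grok's (?m) is Ruby-style: "." also matches newlines (stack traces)
)


def parse_line(line):
    """Return the extracted fields as a dict, or None when the line does not match."""
    m = LINE_RE.match(line)
    if m is None:
        return None
    fields = m.groupdict()
    # Parse the timestamp, keeping the milliseconds after the dot
    fields["dataTime"] = datetime.strptime(fields["dataTime"], "%Y-%m-%d %H:%M:%S.%f")
    return fields
```

For the sample line, `parse_line(SAMPLE)["level"]` is `"WARN"` and `dataTime` carries 331 ms; a line whose level is `ERROR` also parses, which the original `\s` would reject.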


To start Logstash in the foreground with a specific configuration file and look at its output first:

```bash
$ ./logstash -f /etc/logstash/conf.d/logstash-sample.conf
```

Tools used:

Grok regular-expression notes: https://blog.csdn.net/qq_41262248/article/details/79977925

Online grok debugger: http://grok.51vagaa.com/

```text
2020-03-20 18:04:15.331|http-nio-8080-exec-3|WARN |c.e.h.i.StopWatchHandlerInterceptor|/api/bigScreen/day/infaultTop20 consume 785 millis
```

```text
(?\d{4}-\d{2}-\d{2}\s\d{2}:\d{2}:\d{2}.\d{3})\|(?[A-Za-z0-9/-]{4,40})\|(?[A-Z]{4,5})\s\|(?[A-Za-z0-9/.]{4,40})\|(?.*)
```

Problems encountered while running ELK:

Step 1 ------ Filebeat reports `lumberjack protocol error` (reference: https://www.jianshu.com/p/5df9db5cda8f)

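Edit `/etc/logstash/conf.d/02-beats-input.conf` and delete the following three lines. They control whether a certificate is used; this setup does not use one. If you do need the certificate, copy `logstash.crt` to the client and add its path in `filebeat.yml`.

```conf
ssl => true
ssl_certificate => "/pki/tls/certs/logstash.crt"
ssl_key => "/pki/tls/private/logstash.key"
```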

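Note: the sebp/elk Docker image generates its own certificate, `logstash.crt`, using the wildcard `*` as its name. If you use this certificate, the server address in `filebeat.yml` must be a hostname, not an IP address, otherwise Filebeat reports an error.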

If these three lines are not removed, starting Filebeat later produces errors like these:

```text
2018-09-12T10:01:29.770+0800 ERROR logstash/async.go:252 Failed to publish events caused by: lumberjack protocol error
2018-09-12T10:01:29.775+0800 ERROR logstash/async.go:252 Failed to publish events caused by: client is not connected
2018-09-12T10:01:30.775+0800 ERROR pipeline/output.go:109 Failed to publi
```
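Why an IP address fails against the sebp/elk wildcard certificate can be seen with a simplified model of hostname verification. Filebeat is written in Go, and Go's `crypto/x509` compares a literal IP address only with the certificate's IP SANs, so a name like `*` never covers `172.18.141.169`. The sketch below is an approximation for illustration only: `cert_matches` is a hypothetical helper, it treats the certificate's `*` simply as a DNS name to match, and it ignores other details of real verification. Under this model `*` stands for exactly one label, so it would match a single-label hostname such as a container name, but not a dotted FQDN.

```python
import ipaddress


def _parse_ip(host):
    """Return an ip_address object, or None if host is not a literal IP."""
    try:
        return ipaddress.ip_address(host)
    except ValueError:
        return None


def cert_matches(host, dns_names, ip_sans=()):
    """Simplified model of Go-style certificate hostname checking.

    - A literal IP is compared only with the certificate's IP SANs.
    - "*" is allowed only as the whole left-most label and stands for
      exactly one label.
    """
    ip = _parse_ip(host)
    if ip is not None:
        # Wildcard DNS names are never consulted for IP addresses
        return any(ip == ipaddress.ip_address(san) for san in ip_sans)
    host_labels = host.lower().rstrip(".").split(".")
    for pattern in dns_names:
        pattern_labels = pattern.lower().split(".")
        if len(pattern_labels) != len(host_labels):
            continue  # "*" never spans more than one label
        if all(p == h or (i == 0 and p == "*")
               for i, (p, h) in enumerate(zip(pattern_labels, host_labels))):
            return True
    return False
```

With the certificate's name `*`, `cert_matches("172.18.141.169", ["*"])` is `False`, which corresponds to the error described in the note about the sebp/elk certificate.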

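Step 2 ---------

In Kibana there was only a single `filebeat-*` index. The tags (rest-info/rest-error/oas-info/oas-error) were there, but every record also carried an extra `beats_input_codec_plain_applied` tag. A quick search showed that it is added by default on the way from Logstash to ES. What to do? The most obvious fix: delete it.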

```conf
# if "beats_input_codec_plain_applied" in [tags] {
mutate {
  remove_tag => ["beats_input_codec_plain_applied"]
}
# }
```
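The commented-out `if` guard is not needed: `remove_tag` simply does nothing when the tag is absent, and it leaves the other tags (the ones the output routes on) in place. A tiny Python model of that behavior, assuming an event represented as a plain dict; `remove_tag` here is a hypothetical helper, not the Logstash API:

```python
def remove_tag(event, tag):
    """Model of `mutate { remove_tag => [tag] }` on an event stored as a dict."""
    if "tags" in event:
        # Drop every occurrence of the tag; the routing tags survive
        event["tags"] = [t for t in event["tags"] if t != tag]
    return event  # unchanged when the tag or the tags field is absent
```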

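That left a question: I had clearly defined four matching rules, and anything the Logstash output did not match should still have gone to the `hnjk-other-*` index, so why was `beats_input_codec_plain_applied` still showing up? Clearly something was still unresolved: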

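Out of ideas, I turned to the ELK startup logs. The image bundles the modules (ES, Logstash, Kibana), and it bundles their logs too; Docker is convenient, but this is exactly where its inconvenience shows. It reminded me of what AB said in a CCTV interview: "God gave me this face, so surely I will gain some things and lose others." Shit happens...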

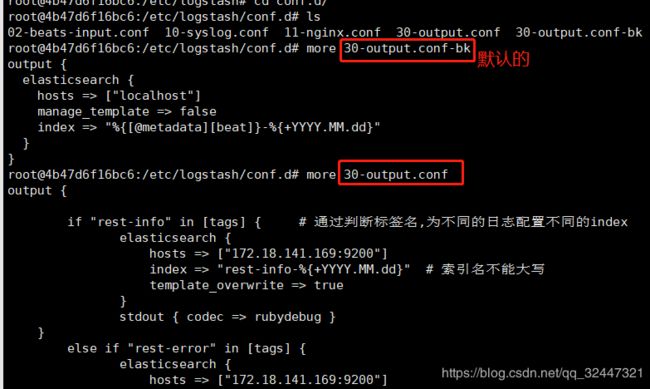

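Although the Logstash output defined custom indices and filters, Kibana still did not pick up the ES indices (rest-info-*/rest-error-*/oas-info-*/oas-error-*), so I kept digging. The Docker ELK startup logs eventually showed that Logstash loads its configuration from `/etc/logstash/conf.d/`:

`02-beats-input.conf`, `10-syslog.conf`, `11-nginx.conf`, `30-output.conf`, `30-output.conf-bk` (the original)

Seeing that `30-output.conf` explained most of it: the files Logstash loads by default were hiding here all along, and the `logstash-sample.conf` I thought I had configured (`/opt/logstash/conf/logstash-sample.conf`) was never used. After making the changes there, sure enough, it worked.

After the change, everything that should be there was there, not just a single `filebeat-*` index. (The original `30-output.conf-bk` also confirms that the lone `filebeat-*` index came from that file, not from ES no longer creating indices automatically.) That is real customization. At the time I actually believed the official docs' nonsense that from ES 7.6.1 on you have to add indices yourself because ES no longer reads the Logstash configuration. Shit. Later addition: in fact the docs were only talking about their own templates.

With that, the ELK + Filebeat deployment is complete. For production I plan to replace Filebeat with Kafka. To be continued.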

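Addendum (06-05):

Today three months of logs filled up the disk, so I had no choice but to set up a deletion policy that keeps 14 days. First I cleaned up the old dates by hand, then set up the policy: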

```bash
curl -i "localhost:9200/_cat/indices?v"
curl -i "localhost:9200/_cat/indices?v" | grep m
vi cleanelk.sh
date -d "14days ago" +%Y.%m.%d
cat cleanelk.sh
curl -XDELETE "http://localhost:9200/oas-info-2020.04.*"
df -h
curl -XDELETE "http://localhost:9200/oas-info-2020.05.*"
vi /etc/crontab
ls
chmod 755 cleanelk.sh
./cleanelk.sh
cat cleanelk.sh
bash -x cleanelk.sh
```

```text
[root@hnjk-ecs-01 ~]# cat /etc/crontab
SHELL=/bin/bash
PATH=/sbin:/bin:/usr/sbin:/usr/bin
MAILTO=root
# For details see man 4 crontabs
# Example of job definition:
# .---------------- minute (0 - 59)
# |  .------------- hour (0 - 23)
# |  |  .---------- day of month (1 - 31)
# |  |  |  .------- month (1 - 12) OR jan,feb,mar,apr ...
# |  |  |  |  .---- day of week (0 - 6) (Sunday=0 or 7) OR sun,mon,tue,wed,thu,fri,sat
# |  |  |  |  |
# *  *  *  *  * user-name  command to be executed
0 0 * * * root bash /root/cleanelk.sh
[root@hnjk-ecs-01 ~]# cd /root/
[root@hnjk-ecs-01 ~]# ls
AliAqsInstall_64.sh  busybox.tar  cleanelk.sh  data  realTimeNotificationFromDxp?type=5  redis-test-jar-with-dependencies.jar  rinetd  rinetd.tar.gz  tmp
[root@hnjk-ecs-01 ~]# cat cleanelk.sh
#!/bin/bash
CLEAN_14=`date -d "14days ago" +%Y.%m.%d`
curl -XDELETE "http://localhost:9200/oas-info-$CLEAN_14"
[root@hnjk-ecs-01 ~]#
```
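`cleanelk.sh` deletes exactly one index per run: the `oas-info` index dated 14 days ago. The other four index families are never cleaned, and if a cron run is missed, that day's index is left behind for good. Below is a minimal Python sketch of a broader version, assuming the index naming `<prefix>-YYYY.MM.dd` produced by the Logstash output in this post and the same `localhost:9200` endpoint; `expired_indices`, `list_indices` and `delete_index` are hypothetical helpers, and `_cat/indices` is queried with `format=json&h=index` so only index names come back.

```python
import json
import urllib.request
from datetime import date, datetime, timedelta

ES = "http://localhost:9200"  # same endpoint cleanelk.sh uses

# Daily index prefixes written by the Logstash output in this post
PREFIXES = ("rest-info", "rest-error", "oas-info", "oas-error", "hnjk-other")


def expired_indices(index_names, today: date, keep_days=14, prefixes=PREFIXES):
    """Return indices named <prefix>-YYYY.MM.dd that are keep_days or more days old.

    Like cleanelk.sh this removes the index from exactly 14 days ago, but it
    also catches anything older that a missed cron run left behind.
    """
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in index_names:
        prefix, _, day = name.rpartition("-")
        if prefix not in prefixes:
            continue  # leave .kibana, filebeat-* and other indices alone
        try:
            index_day = datetime.strptime(day, "%Y.%m.%d").date()
        except ValueError:
            continue  # suffix is not a date
        if index_day <= cutoff:
            expired.append(name)
    return sorted(expired)


def list_indices(base=ES):
    """Index names from the _cat/indices API, requested as JSON with only the index column."""
    with urllib.request.urlopen(f"{base}/_cat/indices?format=json&h=index") as resp:
        return [row["index"] for row in json.load(resp)]


def delete_index(name, base=ES):
    """Send the same request as curl -XDELETE "$base/$name"; returns the HTTP status."""
    req = urllib.request.Request(f"{base}/{name}", method="DELETE")
    with urllib.request.urlopen(req) as resp:
        return resp.status
```

Run daily from cron, the loop would be `for name in expired_indices(list_indices(), date.today()): delete_index(name)`. Keeping the date arithmetic in a pure function makes it easy to check which indices would go before anything is actually deleted.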